Model Card for Model ID

The model detects hallucinations and outputs an NLI-based score. It was trained on the TRUE dataset (93k samples) and reaches an F1 score of 0.91.

Model Details

A cross-encoder model trained on the TRUE dataset to detect hallucination, with a focus on summarization. Natural Language Inference (NLI) involves deciding whether a "hypothesis" is logically supported by a "premise". Put simply, it is about determining whether a given statement (the hypothesis) is true based on another statement (the premise) that serves as your sole information about the topic.
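For summarization, one way to apply the premise/hypothesis framing is to treat the source document as the premise and each summary sentence as a hypothesis. The sketch below is only an illustration: the helper name `build_nli_pairs`, the naive regex sentence splitter, and the sample texts are assumptions, not part of this model's documented pipeline.

```python
import re
from typing import List


def build_nli_pairs(source: str, summary: str) -> List[List[str]]:
    """Pair the source text (premise) with each summary sentence (hypothesis)."""
    # Naive split after ., ! or ? followed by whitespace (illustrative assumption;
    # a proper sentence tokenizer would handle abbreviations better).
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", summary) if s.strip()]
    return [[source, sentence] for sentence in sentences]


# Made-up sample texts for illustration.
source = "Soul Food is a 1997 American comedy-drama film released by Fox 2000 Pictures."
summary = "Fox 2000 Pictures released the film Soul Food. It came out in 1997."

pairs = build_nli_pairs(source, summary)
for premise, hypothesis in pairs:
    print(repr(hypothesis), "<- checked against the source")
```

Each resulting `[premise, hypothesis]` pair has the same shape as the `pairs` input that the model's tokenizer expects.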

Uses

Bias, Risks, and Limitations

You can fine-tune this model for specific tasks, but using it directly on heavily financial or medical documents is not recommended.

How to Get Started with the Model

Use the code below to get started with the model.

model = AutoModelForSequenceClassification.from_pretrained('vikash06/Hallucination-model-True-dataset')

tokenizer = AutoTokenizer.from_pretrained('vikash06/Hallucination-model-True-dataset')

inputs = tokenizer.batch_encode_plus(pairs, return_tensors='pt', padding=True, truncation=True)

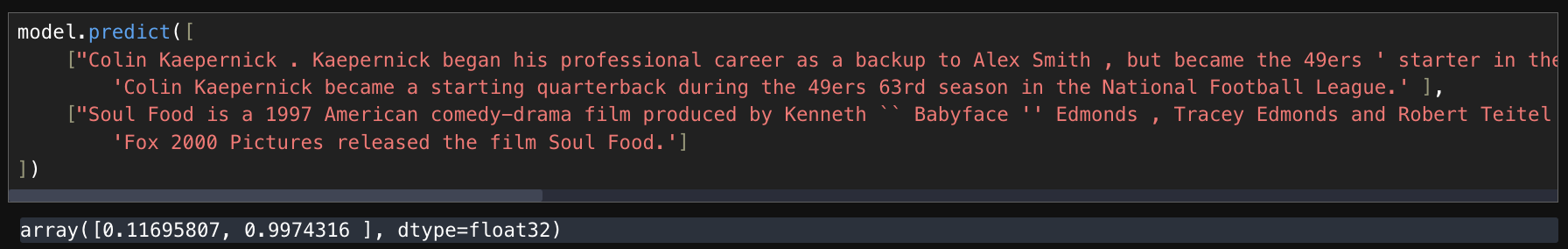

pairs = [["Colin Kaepernick . Kaepernick began his professional career as a backup to Alex Smith , but became the 49ers ' starter in the middle of the 2012 season after Smith suffered a concussion . He remained the team 's starting quarterback for the rest of the season and went on to lead the 49ers to their first Super Bowl appearance since 1994 , losing to the Baltimore Ravens .", 'Colin Kaepernick became a starting quarterback during the 49ers 63rd season in the National Football League.' ], ["Soul Food is a 1997 American comedy-drama film produced by Kenneth `` Babyface '' Edmonds , Tracey Edmonds and Robert Teitel and released by Fox 2000 Pictures .", 'Fox 2000 Pictures released the film Soul Food.']]

inputs = inputs.to("cuda:0")

model.eval()

with torch.no_grad():

outputs = model(**inputs)

logits = outputs.logits # ensure your model outputs logits directly

scores = 1 / (1 + np.exp(-logits.cpu().detach().numpy())).flatten()

Each score lies between 0 and 1, where 1 indicates no hallucination (the hypothesis is supported by the premise) and 0 indicates hallucination.
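To turn these scores into a yes/no decision, a cutoff has to be chosen. The sketch below shows the sigmoid mapping from logit to score and a simple thresholding step in plain Python. The 0.5 threshold, the label names, and the example logits are assumptions for illustration; the model card does not specify a recommended cutoff.

```python
import math


def sigmoid(logit: float) -> float:
    """Map a raw logit to a score in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-logit))


def label_scores(logits, threshold=0.5):
    """Return (score, label) per logit; the threshold is an assumed cutoff."""
    results = []
    for logit in logits:
        score = sigmoid(logit)
        # Scores near 1 mean the hypothesis is supported by the premise.
        label = "consistent" if score >= threshold else "hallucinated"
        results.append((score, label))
    return results


example_logits = [2.0, -1.5, 0.0]  # made-up values, not real model output
results = label_scores(example_logits)
for score, label in results:
    print(f"{score:.3f} -> {label}")
```

A stricter threshold (for example 0.8) flags more outputs as hallucinated, trading recall of consistent text for precision; the right value depends on the application and should be tuned on held-out data.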

Training Data

All 93k samples of the TRUE dataset: https://arxiv.org/pdf/2204.04991
