---

license: llama3

datasets:

- taddeusb90/finbro-v0.1.0

language:

- en

library_name: transformers

tags:

- finance

---

Fibro v0.1.0 Llama 3 8B Model with a 1 Million Token Context Window

======================

Model Description

-----------------

The Fibro Llama 3 8B model is a language model optimized for financial applications. It aims to enhance financial analysis, automate data extraction, and improve financial literacy for users at various levels of expertise. It supports a context window of 1 million tokens.

This is just a sneak peek at what's coming. Future releases will be published periodically, each improving on the model's performance.

Training

-----------------

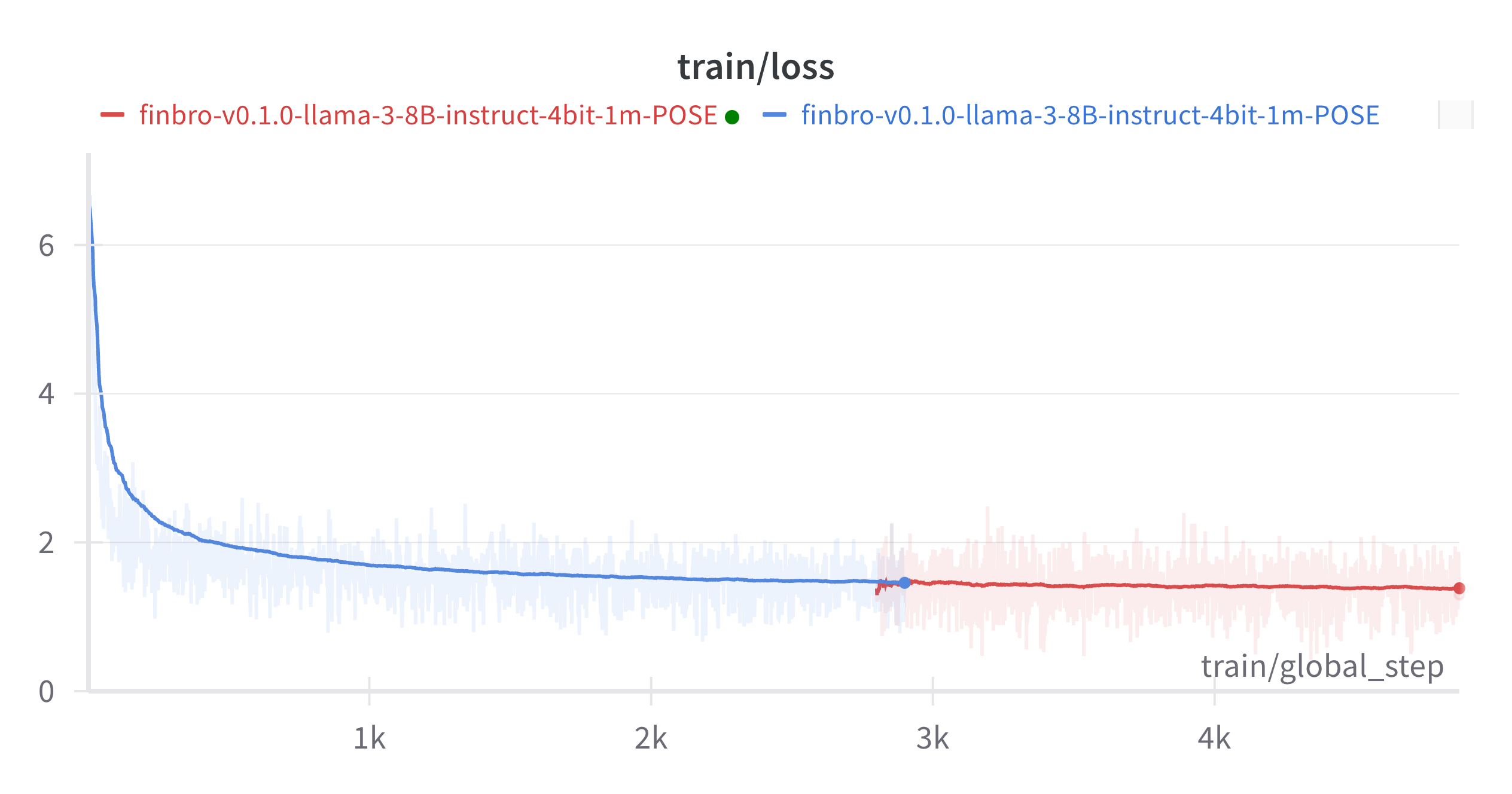

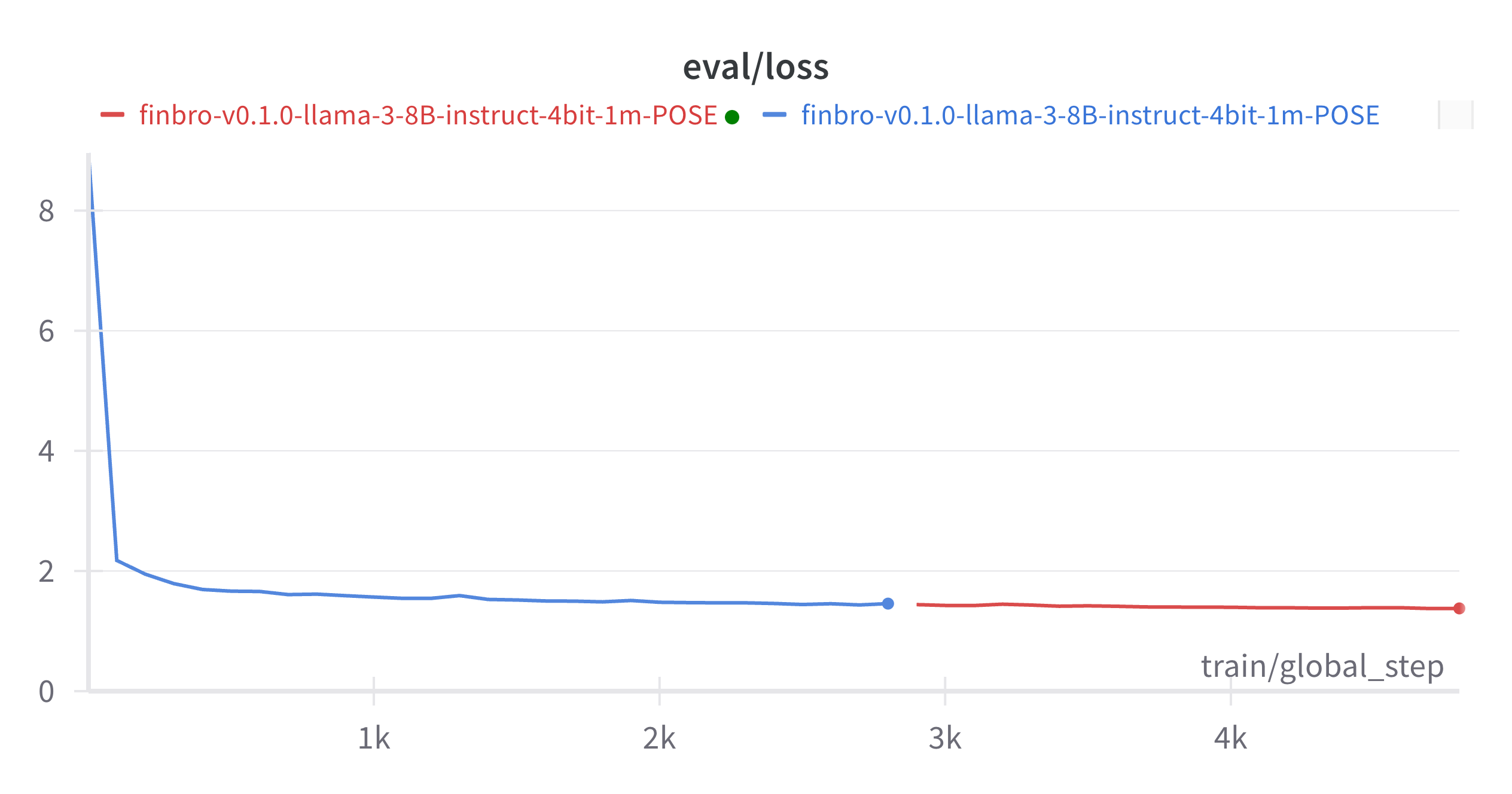

The model is still training. I will share incremental releases as it improves, so you have time to play around with it.

What's Next?

-----------

* **Extended Capability:** Continue training the 8B model, which has not converged yet (this release only scratches the surface), then scale up to a 70B model for deeper insights and broader financial applications.

* **Dataset Expansion:** Continuous enhancement by integrating more diverse and comprehensive real and synthetic financial data.

* **Advanced Financial Analysis:** Future versions will support complex financial decision-making processes by interpreting and analyzing financial data within agentive workflows.

* **Incremental Improvements:** Regular updates will increase the model's efficiency and accuracy and extend its capabilities in financial tasks.

Model Applications

------------------

* **Information Extraction:** Automates the process of extracting valuable data from unstructured financial documents.

* **Financial Literacy:** Provides explanations of financial documents at various levels, making financial knowledge more accessible.
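As an illustration of the information extraction use case, here is a minimal sketch of how a request could be framed and how a reply could be parsed. The helper names `build_extraction_messages` and `parse_extraction_reply`, the prompt wording, and the JSON reply format are all assumptions made for this example; they are not part of the model or its training setup.

```python
import json


def build_extraction_messages(document: str, fields: list[str]) -> list[dict]:
    """Build chat messages asking for the given fields as a JSON object.

    Hypothetical helper: the prompt wording is an assumption, not a format
    the model is known to have been trained on.
    """
    field_list = ", ".join(fields)
    system = (
        "You extract data from financial documents. "
        "Reply with a single JSON object and nothing else."
    )
    user = (
        f"Extract the following fields: {field_list}.\n"
        "Use null for any field that is not stated in the document.\n\n"
        f"Document:\n{document}"
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


def parse_extraction_reply(reply: str, fields: list[str]) -> dict:
    """Parse a model reply, keeping only the requested fields.

    Falls back to None for every field if no valid JSON object is found.
    """
    start, end = reply.find("{"), reply.rfind("}")
    if start == -1 or end <= start:
        return {field: None for field in fields}
    try:
        data = json.loads(reply[start:end + 1])
    except json.JSONDecodeError:
        return {field: None for field in fields}
    return {field: data.get(field) for field in fields}
```

The messages can be passed to any chat-style generation call. Extracted values should still be checked against the source document, since the model may misread or invent figures.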

How to Use

----------

Here is how to load and use the model in your Python projects:

```python

from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "taddeusb90/finbro-v0.1.0-llama-3-8B-instruct-1m-POSE"

# Load the tokenizer and the model weights from the Hugging Face Hub
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name)

text = "Your financial query here"
inputs = tokenizer(text, return_tensors="pt")

# Pass the attention mask along with the input IDs and cap the response length
outputs = model.generate(**inputs, max_new_tokens=256)
print(tokenizer.decode(outputs[0], skip_special_tokens=True))

```
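Because the repository name indicates an instruct variant, it may respond better when the query is wrapped in a chat template. The sketch below assumes the tokenizer in the repository ships a chat template (as the base Llama 3 Instruct tokenizer does); the helper names, the system prompt, and the `max_new_tokens` default are illustrative choices, not settings recommended by the author.

```python
def build_chat_messages(
    question: str,
    system_prompt: str = "You are a helpful financial assistant.",
) -> list[dict]:
    """Wrap a question in the role/content message format used by chat templates."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": question},
    ]


def generate_reply(model, tokenizer, messages, max_new_tokens: int = 256) -> str:
    """Generate a reply for chat messages and return only the newly generated text.

    `model` and `tokenizer` are expected to be the objects returned by
    AutoModelForCausalLM.from_pretrained and AutoTokenizer.from_pretrained.
    """
    # Format the messages with the tokenizer's chat template and tokenize them
    inputs = tokenizer.apply_chat_template(
        messages,
        add_generation_prompt=True,
        return_tensors="pt",
        return_dict=True,
    ).to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens so that only the model's answer is decoded
    prompt_length = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output_ids[0][prompt_length:], skip_special_tokens=True)


# Usage (left commented out because it downloads the full 8B model):
# from transformers import AutoModelForCausalLM, AutoTokenizer
# name = "taddeusb90/finbro-v0.1.0-llama-3-8B-instruct-1m-POSE"
# tokenizer = AutoTokenizer.from_pretrained(name)
# model = AutoModelForCausalLM.from_pretrained(name)
# print(generate_reply(model, tokenizer, build_chat_messages("What is a P/E ratio?")))
```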

Training Data

-------------

The Fibro Llama 3 8B model was trained on the Finbro Dataset, an extensive compilation of over 300,000 entries sourced from Investopedia and Sujet Finance. This dataset includes structured Q&A pairs, financial reports, and a variety of financial tasks pooled from multiple datasets.

The dataset can be found [here](https://huggingface.co/datasets/taddeusb90/finbro-v0.1.0).
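To inspect the dataset locally, something like the following sketch could be used. It relies on the `datasets` library; the `train` split name is an assumption (check the dataset page for the actual splits), and `load_finbro` and `preview` are hypothetical helper names. No column names are assumed, since `preview` simply truncates whatever string fields each record contains.

```python
from itertools import islice


def load_finbro(split: str = "train"):
    """Download the dataset from the Hugging Face Hub.

    Requires the `datasets` package and network access. The default split
    name is an assumption.
    """
    # Imported lazily so that preview() below works without `datasets` installed
    from datasets import load_dataset

    return load_dataset("taddeusb90/finbro-v0.1.0", split=split)


def preview(records, n: int = 3, max_chars: int = 80) -> list[dict]:
    """Return the first n records with long string values truncated."""
    rows = []
    for record in islice(records, n):
        row = {}
        for key, value in record.items():
            if isinstance(value, str) and len(value) > max_chars:
                value = value[:max_chars] + "..."
            row[key] = value
        rows.append(row)
    return rows


# Usage (left commented out because it downloads the dataset):
# for row in preview(load_finbro()):
#     print(row)
```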

This dataset will be extended to contain real and synthetic data on a wide range of financial tasks such as:

- Investment valuation

- Value investing

- Security analysis

- Derivatives

- Asset and portfolio management

- Financial information extraction

- Quantitative finance

- Econometrics

- Applied computer science in finance

and much more

Notice

--------

Please exercise caution and use the model at your own risk. I assume no responsibility for any losses incurred through its use.

Licensing

---------

This model is released under the [META LLAMA 3 COMMUNITY LICENSE AGREEMENT](https://huggingface.co/meta-llama/Meta-Llama-3-8B-Instruct/blob/main/LICENSE).

Citation

--------

If you use this model in your research, please cite it as follows:

```bibtex

@misc{finbro_v0.1.0-llama-3-8B-1m-POSE,
  author = {Taddeus Buica},
  title = {Fibro Llama 3 8B Model for Financial Analysis},
  year = {2024},
  journal = {Hugging Face repository},
  howpublished = {\url{https://huggingface.co/taddeusb90/finbro-v0.1.0-llama-3-8B-instruct-1m-POSE}}
}

```

Special thanks to the folks from AI@Meta for powering this project with their awesome models.

References

--------

[[1](https://huggingface.co/meta-llama/Meta-Llama-3-8B-Instruct)] Llama 3 Model Card by AI@Meta, Year: 2024

[[2](https://huggingface.co/datasets/sujet-ai/Sujet-Finance-Instruct-177k)] Sujet Finance Dataset

[[3](https://huggingface.co/datasets/FinLang/investopedia-instruction-tuning-dataset)] Dataset Card for investopedia-instruction-tuning