Add initial implementation of the deepforest-agent

#1, opened by SamiaHaque

Files changed:

- LICENSE +21 -0
- README.md +100 -12
- app.py +501 -0
- pyproject.toml +66 -0
- requirements.txt +43 -0
- src/__init__.py +0 -0
- src/deepforest_agent/__init__.py +0 -0
- src/deepforest_agent/agents/__init__.py +0 -0
- src/deepforest_agent/agents/deepforest_detector_agent.py +403 -0
- src/deepforest_agent/agents/ecology_analysis_agent.py +92 -0
- src/deepforest_agent/agents/memory_agent.py +238 -0
- src/deepforest_agent/agents/orchestrator.py +795 -0
- src/deepforest_agent/agents/visual_analysis_agent.py +307 -0
- src/deepforest_agent/conf/__init__.py +0 -0
- src/deepforest_agent/conf/config.py +60 -0
- src/deepforest_agent/models/__init__.py +0 -0
- src/deepforest_agent/models/llama32_3b_instruct.py +242 -0
- src/deepforest_agent/models/qwen_vl_3b_instruct.py +152 -0
- src/deepforest_agent/models/smollm3_3b.py +244 -0
- src/deepforest_agent/prompts/__init__.py +0 -0
- src/deepforest_agent/prompts/prompt_templates.py +257 -0
- src/deepforest_agent/tools/__init__.py +0 -0
- src/deepforest_agent/tools/deepforest_tool.py +323 -0
- src/deepforest_agent/tools/tool_handler.py +188 -0
- src/deepforest_agent/utils/__init__.py +0 -0
- src/deepforest_agent/utils/cache_utils.py +306 -0
- src/deepforest_agent/utils/detection_narrative_generator.py +445 -0
- src/deepforest_agent/utils/image_utils.py +465 -0
- src/deepforest_agent/utils/logging_utils.py +449 -0
- src/deepforest_agent/utils/parsing_utils.py +238 -0
- src/deepforest_agent/utils/rtree_spatial_utils.py +394 -0
- src/deepforest_agent/utils/state_manager.py +574 -0
- src/deepforest_agent/utils/tile_manager.py +211 -0
- tests/test_deepforest_tool.py +465 -0

LICENSE (ADDED)

@@ -0,0 +1,21 @@


MIT License

Copyright (c) 2025 DeepForest Agent

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.


README.md (CHANGED)

@@ -1,12 +1,100 @@


# DeepForest Multi-Agent System

The DeepForest Multi-Agent System analyzes ecological images by orchestrating multiple AI agents that work together. Upload an image of a forest, wildlife habitat, or other ecological scene, and ask questions about it in natural language.

## Installation

### 1. Clone the repository

```bash
git clone https://github.com/weecology/deepforest-agent.git
cd deepforest-agent
```

### 2. Create and activate a Conda environment

```bash
conda create -n deepforest_agent python=3.12.11
conda activate deepforest_agent
```

### 3. Install dependencies

```bash
pip install -r requirements.txt
pip install -e .
```

### 4. Configure the Hugging Face Token

Create a `.env` file in the root directory of the deepforest-agent project and add your Hugging Face token as shown below:

```bash
HF_TOKEN="your_huggingface_token_here"
```

You can obtain a token from [Hugging Face Access Tokens](https://huggingface.co/settings/tokens). Make sure the token type is "Write".
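How the app reads `HF_TOKEN` from `.env` is not shown in this part of the diff. Projects often use `python-dotenv`'s `load_dotenv()` for this, and the `huggingface_hub` library also picks up an `HF_TOKEN` environment variable. The stdlib-only sketch below is a hypothetical illustration (the helpers `read_env_file` and `load_hf_token` are not from this repository): it prefers an already-exported `HF_TOKEN` and otherwise parses simple `KEY="value"` lines from `.env`.

```python
import os
from pathlib import Path
from typing import Dict


def read_env_file(path: Path) -> Dict[str, str]:
    """Parse simple KEY="value" lines; blank lines and # comments are skipped."""
    values: Dict[str, str] = {}
    for raw in path.read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        value = value.strip()
        # Drop one pair of matching surrounding quotes, if present.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        values[key.strip()] = value
    return values


def load_hf_token(env_path: Path = Path(".env")) -> str:
    """Prefer an exported HF_TOKEN, then fall back to the .env file."""
    token = os.environ.get("HF_TOKEN")
    if not token and env_path.exists():
        token = read_env_file(env_path).get("HF_TOKEN")
    if not token:
        raise RuntimeError("HF_TOKEN is not set; add it to .env in the project root")
    return token
```

Calling `load_hf_token()` from the project root returns the token or fails early with a clear message, rather than surfacing later as a model-download authentication error.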


## Usage

The DeepForest Agent runs through a Gradio web interface. To start it, run:

```bash
python app.py
```

A local URL such as http://127.0.0.1:7860 will appear in the terminal. Open it in your browser to interact with the agent. A public Gradio link may also be printed if one is available.

**Sample Recording of Running the System:** [Drive Link](https://drive.google.com/file/d/1gNMn-xJd48Ld3TZU4oiYvTbiWaiLsc8G/view?usp=sharing)

### How to Use

1. Upload an ecological image (aerial or drone photography works best).
2. Ask questions about wildlife, forest health, or ecological patterns. For example:
   - How many trees are detected, and how many of them are alive vs. dead?
   - How many birds are around each dead tree?
   - What objects are in the northwest region of the image?
   - Do any birds overlap with livestock in this image?
   - What percentage of the image is covered by trees vs. birds vs. livestock?
3. Review the analysis, which combines computer vision detections with ecological insights. The gallery shows the annotated image with the detected objects, and the detection monitor summarizes the DeepForest detections.
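Several of the example questions reduce to bounding-box geometry. The sketch below is a hypothetical illustration, not the project's code: it uses a linear scan in place of the R-tree index described under Features, assumes boxes are `(xmin, ymin, xmax, ymax)` in pixels with y growing downward, treats "northwest" as the upper-left quadrant, and uses made-up placeholder detections and labels. It covers a region query, a neighbor count around each tree within a pixel margin, an overlap check between two classes, and an area-coverage percentage.

```python
from dataclasses import dataclass
from typing import List, Tuple

# (xmin, ymin, xmax, ymax) in pixels; y grows downward, as in image coordinates.
Box = Tuple[float, float, float, float]


@dataclass
class Detection:
    label: str
    box: Box


def intersects(a: Box, b: Box) -> bool:
    """True if the boxes share a positive area (touching edges do not count)."""
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]


def expand(box: Box, margin: float) -> Box:
    """Grow a box by `margin` pixels on every side."""
    return (box[0] - margin, box[1] - margin, box[2] + margin, box[3] + margin)


def query(detections: List[Detection], region: Box) -> List[Detection]:
    """Linear-scan stand-in for an R-tree intersection query."""
    return [d for d in detections if intersects(d.box, region)]


def northwest(width: float, height: float) -> Box:
    """Upper-left quadrant of the image."""
    return (0.0, 0.0, width / 2, height / 2)


def count_nearby(detections: List[Detection], center: str, neighbor: str, margin: float) -> List[int]:
    """For each `center` detection, count `neighbor` detections within `margin` pixels."""
    neighbors = [d for d in detections if d.label == neighbor]
    return [len(query(neighbors, expand(c.box, margin))) for c in detections if c.label == center]


def overlapping_pairs(detections: List[Detection], label_a: str, label_b: str) -> List[Tuple[Detection, Detection]]:
    """All (a, b) pairs of the two classes whose boxes overlap."""
    group_a = [d for d in detections if d.label == label_a]
    group_b = [d for d in detections if d.label == label_b]
    return [(a, b) for a in group_a for b in group_b if intersects(a.box, b.box)]


def coverage_percent(detections: List[Detection], label: str, width: float, height: float) -> float:
    """Summed box area of one class as a percentage of the image area."""
    area = sum((d.box[2] - d.box[0]) * (d.box[3] - d.box[1]) for d in detections if d.label == label)
    return 100.0 * area / (width * height)


# Placeholder detections for an 800 x 600 image (not real model output).
W, H = 800, 600
dets = [
    Detection("tree", (10, 10, 110, 110)),
    Detection("tree", (600, 50, 700, 150)),
    Detection("bird", (100, 100, 120, 120)),
    Detection("livestock", (300, 300, 400, 380)),
]

print([d.label for d in query(dets, northwest(W, H))])  # ['tree', 'bird']
print(count_nearby(dets, "tree", "bird", margin=20))   # [1, 0]
print(len(overlapping_pairs(dets, "bird", "livestock")))  # 0
print(round(coverage_percent(dets, "tree", W, H), 2))  # 4.17
```

The coverage figure sums box areas, so overlapping boxes of one class are double-counted; an exact share would need the union of the boxes, for example by rasterizing them into a mask.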


## Features

- **Multi-Species Detection**: Automatically detects trees, birds, and livestock using specialized DeepForest models.
- **Tree Health Assessment**: Classifies trees as alive or dead using the DeepForest tree detector when the user asks.
- **Visual Analysis**: Analyzes both the original and the annotated image using the Qwen2.5-VL-3B-Instruct model.
- **Memory Context**: Maintains conversation history for contextual understanding across multiple queries.
- **Image Tiling for the Visual Agent**: Larger images are tiled, and each tile is processed individually by the visual agent.
- **R-Tree Spatial Indexing**: Stores DeepForest results in an R-tree spatial index and uses spatial queries to retrieve relevant information for the user.
- **Ecological Insights**: Synthesizes detection data, visual analysis, and memory context into a comprehensive ecological interpretation.
- **Streaming Responses**: Provides real-time updates as each agent processes your query.
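The tile size and overlap the visual agent actually uses are not shown in this part of the diff. As a hypothetical sketch, a tiler can step across each axis by `tile - overlap` and add one final tile flush with the far edge so the whole image is covered; the defaults below (1024 px tiles, 128 px overlap) are illustrative assumptions. Each `(left, top, right, bottom)` window has the same layout as the box argument of PIL's `Image.crop`.

```python
from typing import List, Tuple

Window = Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels


def tile_starts(length: int, tile: int, overlap: int) -> List[int]:
    """Start offsets along one axis so that tiles of size `tile` cover [0, length)."""
    if not 0 <= overlap < tile:
        raise ValueError("overlap must be non-negative and smaller than the tile size")
    if length <= tile:
        return [0]
    step = tile - overlap
    starts = list(range(0, length - tile, step))
    starts.append(length - tile)  # last tile sits flush with the far edge
    return starts


def tile_windows(width: int, height: int, tile: int = 1024, overlap: int = 128) -> List[Window]:
    """Row-major list of crop windows covering a width x height image."""
    return [
        (left, top, min(left + tile, width), min(top + tile, height))
        for top in tile_starts(height, tile, overlap)
        for left in tile_starts(width, tile, overlap)
    ]


# Six windows, from (0, 0, 1024, 1024) to (1476, 776, 2500, 1800).
for window in tile_windows(2500, 1800):
    print(window)
```

Snapping the last tile to the edge keeps every tile the same size, which suits vision models that expect a fixed input resolution, at the cost of extra overlap on the final row and column.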

|

| 72 |

+

|

| 73 |

+

|

| 74 |

+

## Requirements

|

| 75 |

+

|

| 76 |

+

### Hardware Requirements

|

| 77 |

+

- **GPU**: GPU with at least 24GB VRAM (recommended for optimal performance). The system is optimized for GPU execution. Running on CPU will take significantly longer processing times

|

| 78 |

+

- **Storage**: At least 35GB free space for model downloads

|

| 79 |

+

|

| 80 |

+

### API Requirements

|

| 81 |

+

- **HuggingFace Token**: Required for model access.

|

| 82 |

+

|

| 83 |

+

|

| 84 |

+

## Image Processing Times

|

| 85 |

+

|

| 86 |

+

- **Standard Images**: Most ecological images process within 30 seconds on GPU

|

| 87 |

+

- **Large GeoTIFF Files**: Larger geospatial images may require significant time for complete analysis

|

| 88 |

+

|

| 89 |

+

|

| 90 |

+

## Models Used

|

| 91 |

+

|

| 92 |

+

- **SmolLM3-3B**: For Memory Agent to get context, and for Detector Agent to call the tool with appropriate parameters

|

| 93 |

+

- **Qwen2.5-VL-3B-Instruct**: Used in Visual agent for multimodal image-text understanding

|

| 94 |

+

- **Llama-3.2-3B-Instruct**: For Ecology agents for text understanding and generation

|

| 95 |

+

- **DeepForest Models**: For tree, bird, and livestock detection. Also used for alive/dead tree classification.

|

| 96 |

+

|

| 97 |

+

|

| 98 |

+

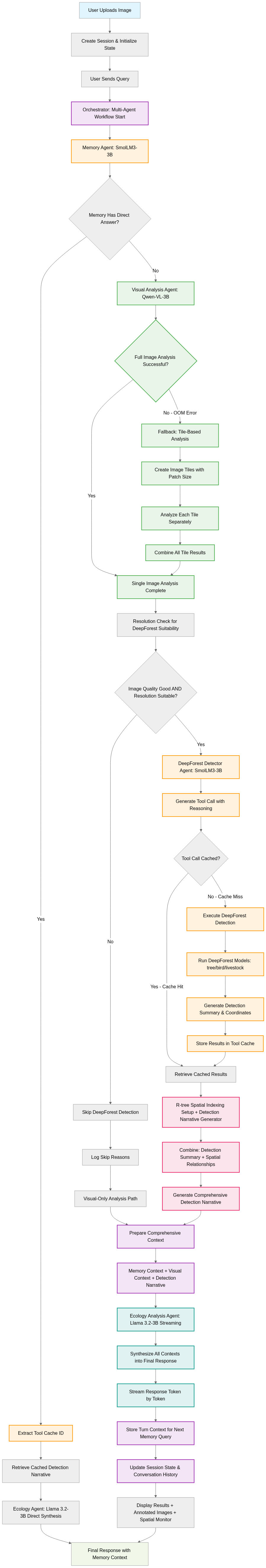

## Multi-Agent Workflow

|

| 99 |

+

|

| 100 |

+

[](https://mermaid.live/edit#pako:eNqlV9ty4jgQ_RWVt2pexmQI9_hhtwiQK4SLgVyceVBMO7giLEq2kzAk_75tSTYiM9k8LA8UpruPuk93H9tby-cLsBzrUdD1kky79xHBT9ubxSDIbM04XcTkfEUf4Scplf4mx15HAE2AuBDHIY_IN3IehUlIWfgL_0zQ9FNhHEv_jkJyIUKccQpio80dae56Q-EvIU4ETbhwyCBlSVhqP0KUkGsungLGXzJUkegw9d2VwT1vACsuNkT6O8RdcdYfVEvVY-3ck24nW-12RmPSDQX4CWlH8QuIf95N0JPM--0W4jdy6vVeMSV0nHLOSIdijuS8q2FPJeyZN4FEhPAMyr4gXUgQOyPligosKHzOuTiTEedez-eMPxYJ9xldUVI9qGDKeWbuJkqQkDDWoecy9M47CSPKyATiNY9iIC9hsiS6rg6PEnjdZ0gVc8XfyIU3D-MUY9sIsEHg_PTxC0SleX9H14U86nJ7kjKmer6LcVPfx44HKdsn7XJHWt9zw-iRwcfQDl-tGRRzcakzIyUyHA5ITwgu3sjAO6GMPVD_ySHTkEHpmMZIaQ6iYwcyw6t8BtVBmXusCBnRxF8SF0dRB1zJgKEncXBAe9gpGYATuabYI2D5QA6l68jDdB_CCPNHEqQnco5Tmacwkm59k4O-_GuM8xBzlsoB6CzBfyIBFzgUsD7hAkccOQwT-hCyMMnPHMvIyVYVMsYuoY2cco6VX3WJAahiGeyzPym67HruU7g2TyumUZ_lyrOmXp8_Euk7ARrjLGnzVJpnelhKw4htdi1EXpc_fz9Ytn3u_XYolv3ZSs7lMdfeKUSQ0Z8vGJItO6iSwjnS_tfS_2a7c1PLts_DTZ4ODpVa1rMweSO3v63ofi9vdqOoogZhjBW127j-4KeY3X_wqZWyrWTx2Jukkek-QF1lsUMSAfDjIRSLHwz1IE64_5QLpFbIzo6MnYK46WpFcbe_4fpwscDlTyBPu6O1s-u5SHUxoCSMDLnSvl0llbdmzrdq0kfepJRlR9w1zQQchXwBr0g97kaSrsn3Pwka0blyke-DWojxOF8c5w9VfC_OmACjmSlehuu8nrFeg8mOiEwzBCwhirMzPxdW9T2T8a7rjUS21R_D9_VxMtHeei30Xky9fTXFnLVu7v74Ko-pXqLZTug_aO6e4rvIPl3tZn2m6pjPvfwm8EvJkM4g63DCyf6dIN8rvVjXnkLd3Smm_Aki8rBRP_K10nt1o0domoqoKDSTravsh2bEvG3rxblRU3XrzdYL82lAPgDIoY2eQcSy1biLOPYFwq0av7s7rxvGa0Y3xfx-R7oiniEslLTHxuAMUBV2U6e-7-4UlPlfnGxQs9skCBlz_oLDoB6AaelqS1CFelA3Lb3cEqCtbFoucrRWUIeWaZl_GoN7oU0-1MA3TdhnjVcOKsGhabrLgw6DFhyZdfmMxnEXArKSTVGPSObhNj5EYYezy6NWOb8svYSLZOlU1q8fYJ7lcJswqroCpubToP4lzEIL_v_OJ1Z9LhbGJK-AgqNDaFS_ggK1fHu1SaYLnHL5qNFqfYXDjUfTvakpcI78SvPhs9IMNNKzT83GmaYL-9Lu2wP7yh7aI7MtptPcvrbbbfv42O50bNT0PdpNx9HIHo9t1LgPfJo-s5k9R8DrPaJMh67tuvZ0at_c2LitJg2WjW8K4cJyAspisK0ViBXNrq1tBnBvoWyt4N5y8GcEKQaxe-s-ese4NY3uOF9ZTiJSjBQ8fVzmF6lUkW5I8TVkVYALfGkA0eFplFhOrXwoMSxna71azmGzfFArN8qNZr1Rb7RqRw3b2lhOqVKttA6alWat1qgf1hvVZu3dtn7JcxsH5VqzUa81Kq1mvdpoVGwLFpmmDNQrkHwTev8XjGAuiw)
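`process_message_streaming` in `app.py` consumes dictionaries from `AgentOrchestrator.process_user_message_streaming` and branches on their `"type"` key: `"progress"` and `"streaming"` events overwrite the last assistant message, while `"memory_direct"` and `"final"` events carry the answer and end the loop. The sketch below reproduces only that consumer pattern against a hypothetical stub generator (`fake_orchestrator_stream`, with placeholder messages); it does not load any models or the real orchestrator.

```python
from typing import Dict, Iterator, List, Optional


def fake_orchestrator_stream() -> Iterator[Dict]:
    """Hypothetical stub standing in for AgentOrchestrator.process_user_message_streaming."""
    yield {"type": "progress", "message": "Checking conversation memory..."}
    yield {"type": "progress", "message": "Running DeepForest detection..."}
    partial = ""
    for chunk in ["Detected ", "42 ", "trees."]:  # placeholder text, not real output
        partial += chunk
        yield {"type": "streaming", "message": partial, "is_complete": False}
    yield {"type": "final", "message": partial, "detection_data": "trees: 42"}


def consume(events: Iterator[Dict], history: List[Dict]) -> Optional[Dict]:
    """Overwrite the last assistant turn until a terminal event, mirroring app.py's branching."""
    history.append({"role": "assistant", "content": "Starting analysis..."})
    for event in events:
        if event["type"] in ("progress", "streaming"):
            # Intermediate updates replace the placeholder in place.
            history[-1] = {"role": "assistant", "content": event["message"]}
        elif event["type"] in ("memory_direct", "final"):
            # Terminal events carry the answer; stop reading the stream.
            history[-1] = {"role": "assistant", "content": event["message"]}
            return event
    return None


history = [{"role": "user", "content": "How many trees are there?"}]
final = consume(fake_orchestrator_stream(), history)
print(history[-1]["content"])   # Detected 42 trees.
print(final["detection_data"])  # trees: 42
```

In `app.py`, each branch additionally yields an updated tuple to Gradio and toggles the `interactive` state of the Send button and message box; the sketch leaves the UI out so the event protocol is easy to see.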


app.py (ADDED)

@@ -0,0 +1,501 @@


import sys
import os
from pathlib import Path
import time
import json
import gradio as gr

# This allows imports to work when app.py is in root but modules are in src/
current_dir = Path(__file__).parent.absolute()
src_dir = current_dir / "src"

if not src_dir.exists():
    raise RuntimeError(f"Source directory not found: {src_dir}")

# Add to Python path if not already there
if str(src_dir) not in sys.path:
    sys.path.insert(0, str(src_dir))

print(f"App running from: {current_dir}")
print(f"Source directory: {src_dir}")
print(f"Python path includes src: {str(src_dir) in sys.path}")

from deepforest_agent.agents.orchestrator import AgentOrchestrator
from deepforest_agent.utils.state_manager import session_state_manager
from deepforest_agent.utils.image_utils import (
    encode_pil_image_to_base64_url,
    load_pil_image_from_path,
    get_image_info,
    validate_image_path
)
from deepforest_agent.utils.logging_utils import multi_agent_logger



def upload_image(image_path):
    """
    Handle image upload and initialize a new session for the multi-agent workflow.

    This function is triggered when a user uploads an image. It creates a new
    session with isolated state and updates the UI to show the chat interface
    and monitoring components.

    Args:
        image_path (str or None): The file path to uploaded image from Gradio

    Returns:
        tuple: A tuple containing 9 Gradio component updates:
            - gr.Chatbot: Chat interface (visible/hidden)
            - image: Uploaded image state
            - str: Upload status message
            - gr.Textbox: Message input field (visible/hidden)
            - gr.Button: Send button (visible/hidden)
            - gr.Button: Clear button (visible/hidden)
            - gr.Gallery: Generated images gallery (visible/hidden)
            - str: Monitor text with session information
            - str: Session ID for this user
    """
    if image_path is None:
        return (
            gr.Chatbot(visible=False),
            None,  # uploaded_image_state
            "No image uploaded",
            gr.Textbox(visible=False),
            gr.Button(visible=False),  # send_btn
            gr.Button(visible=False),  # clear_btn
            gr.Gallery(visible=False),
            "No image uploaded",
            None  # session_id
        )

    if not validate_image_path(image_path):
        return (
            gr.Chatbot(visible=False),
            None,
            "Invalid image file or path not accessible",
            gr.Textbox(visible=False),
            gr.Button(visible=False),
            gr.Button(visible=False),
            gr.Gallery(visible=False),
            "Invalid image file for analysis.",
            None
        )

    try:
        pil_image = load_pil_image_from_path(image_path)
        if pil_image is None:
            raise Exception("Failed to load image")
        image_info = get_image_info(image_path)
    except Exception as e:
        return (
            gr.Chatbot(visible=False),
            None,
            f"Error loading image: {str(e)}",
            gr.Textbox(visible=False),
            gr.Button(visible=False),
            gr.Button(visible=False),
            gr.Gallery(visible=False),
            "Error loading image for analysis.",
            None
        )

    # Create new session for this user
    session_id = session_state_manager.create_session(pil_image)
    session_state_manager.set(session_id, "image_file_path", image_path)

    detection_monitor = ""

    multi_agent_logger.log_session_event(
        session_id=session_id,
        event_type="session_created",
        details={
            "image_size": image_info.get("size") if image_info else pil_image.size,
            "image_mode": image_info.get("mode") if image_info else pil_image.mode,
            "image_path": image_path,
            "file_size_bytes": image_info.get("file_size_bytes") if image_info else "unknown"
        }
    )

    return (
        gr.Chatbot(visible=True, value=[]),
        pil_image,
        f"Image uploaded successfully! Size: {pil_image.size}",
        gr.Textbox(visible=True),
        gr.Button(visible=True),  # send_btn
        gr.Button(visible=True),  # clear_btn
        gr.Gallery(visible=True, value=[]),
        detection_monitor,
        session_id  # Return session ID
    )



def process_message_streaming(user_message, chatbot_history, generated_images, detection_monitor, session_id):
    """
    Process user message through the multi-agent workflow with streaming updates.

    Args:
        user_message (str): The user's input message
        chatbot_history (list): Current chat history for display
        generated_images (list): List of annotated images in PIL Image objects
        detection_monitor (str): Current detection data monitoring text
        session_id (str): Unique session identifier for this user

    Yields:
        tuple: A tuple containing 6 updated components:
            - chatbot_history: Updated conversation history
            - msg_input_clear: Empty string to clear message input field
            - generated_images: Updated list of annotated images
            - detection_monitor: Updated detection data monitor
            - send_btn: Button component with interactive state
            - msg_input: Input field component with interactive state
    """
    if not user_message.strip():
        yield chatbot_history, "", generated_images, detection_monitor, gr.Button(interactive=True), gr.Textbox(interactive=True)
        return

    # Check if session exists
    if session_id is None or not session_state_manager.session_exists(session_id):
        error_msg = "Session expired or invalid. Please upload an image to start a new session."
        chatbot_history.append({"role": "user", "content": user_message})
        chatbot_history.append({"role": "assistant", "content": error_msg})
        yield chatbot_history, "", generated_images, detection_monitor, gr.Button(interactive=True), gr.Textbox(interactive=True)
        return

    # Check if image is available in session
    current_image = session_state_manager.get(session_id, "current_image")
    if current_image is None:
        error_msg = "No image found in your session. Please upload an image first."
        chatbot_history.append({"role": "user", "content": user_message})
        chatbot_history.append({"role": "assistant", "content": error_msg})
        yield chatbot_history, "", generated_images, detection_monitor, gr.Button(interactive=True), gr.Textbox(interactive=True)
        return

    total_execution_start = time.perf_counter()

    multi_agent_logger.log_user_query(
        session_id=session_id,
        user_message=user_message
    )

    try:
        if session_state_manager.get(session_id, "first_message", True):
            image_base64_url = encode_pil_image_to_base64_url(current_image)
            user_msg = {
                "role": "user",
                "content": [
                    {"type": "image", "image": image_base64_url},
                    {"type": "text", "text": user_message}
                ]
            }
            session_state_manager.set(session_id, "first_message", False)
        else:
            user_msg = {
                "role": "user",
                "content": [
                    {"type": "text", "text": user_message}
                ]
            }

        session_state_manager.add_to_conversation(session_id, user_msg)
        chatbot_history.append({"role": "user", "content": user_message})

        chatbot_history.append({"role": "assistant", "content": "Starting analysis..."})

        yield chatbot_history, "", generated_images, detection_monitor, gr.Button(interactive=False), gr.Textbox(interactive=False)

        conversation_history = session_state_manager.get(session_id, "conversation_history", [])

        print(f"Session {session_id} - User message: {user_message}")

        orchestrator = AgentOrchestrator()

        start_time = time.perf_counter()

        try:
            # Process with streaming updates
            final_result = None

            for result in orchestrator.process_user_message_streaming(
                user_message=user_message,
                conversation_history=conversation_history,
                session_id=session_id
            ):
                if result["type"] == "progress":
                    chatbot_history[-1] = {"role": "assistant", "content": result["message"]}

                    yield chatbot_history, "", generated_images, detection_monitor, gr.Button(interactive=False), gr.Textbox(interactive=False)

                elif result["type"] == "memory_direct":
                    final_response = result["message"]
                    chatbot_history[-1] = {"role": "assistant", "content": final_response}

                    updated_detection_monitor = result.get("detection_data", "")

                    final_result = result

                    yield chatbot_history, "", generated_images, updated_detection_monitor, gr.Button(interactive=True), gr.Textbox(interactive=True)
                    break

                elif result["type"] == "streaming":
                    # Update the last message with streaming response
                    chatbot_history[-1] = {"role": "assistant", "content": result["message"]}

                    yield chatbot_history, "", generated_images, detection_monitor, gr.Button(interactive=False), gr.Textbox(interactive=False)

                    if result.get("is_complete", False):
                        final_response = result["message"]

                elif result["type"] == "final":
                    final_response = result["message"]
                    chatbot_history[-1] = {"role": "assistant", "content": final_response}

                    final_result = result
                    break

            if final_result:
                total_execution_time = time.perf_counter() - total_execution_start

                execution_summary = final_result.get("execution_summary", {})
                agent_results = final_result.get("agent_results", {})
                execution_time = final_result.get("execution_time", 0)

                assistant_msg = {
                    "role": "assistant",
                    "content": [{"type": "text", "text": final_response}]
                }
                session_state_manager.add_to_conversation(session_id, assistant_msg)

                multi_agent_logger.log_agent_execution(
                    session_id=session_id,
                    agent_name="ecology",
                    agent_input="Final synthesis of all agent outputs",
                    agent_output=final_response,
                    execution_time=total_execution_time
                )

                annotated_image = session_state_manager.get(session_id, "annotated_image")
                if annotated_image:
                    generated_images.append(annotated_image)

                updated_detection_monitor = final_result.get("detection_data", "")

                yield chatbot_history, "", generated_images, updated_detection_monitor, gr.Button(interactive=True), gr.Textbox(interactive=True)

        finally:
            orchestrator.cleanup_all_agents()

    except Exception as e:
        total_execution_time = time.perf_counter() - total_execution_start
        error_msg = f"Workflow error: {str(e)}"
        print(f"MAIN APP ERROR (Session {session_id}): {error_msg}")

        multi_agent_logger.log_error(
            session_id=session_id,
            error_type="app_workflow_error",
            error_message=f"Workflow failed after {total_execution_time:.2f}s: {str(e)}"
        )

        if chatbot_history and chatbot_history[-1]["role"] == "assistant":
            chatbot_history[-1] = {"role": "assistant", "content": error_msg}
        else:
            chatbot_history.append({"role": "assistant", "content": error_msg})

        error_detection_monitor = "ERROR: Workflow failed - no detection data available"

        yield chatbot_history, "", generated_images, error_detection_monitor, gr.Button(interactive=True), gr.Textbox(interactive=True)



def clear_chat(session_id):
    """
    Clear chat history and cancel any ongoing processing for the session.

    Args:
        session_id (str): The session identifier to clear. If it does not
            correspond to an existing session, only the UI is reset.

    Returns:
        tuple: A tuple containing 5 updated components:
            - chatbot_history: Empty list clearing chat display
            - generated_images: Empty list clearing image gallery
            - detection_monitor: Empty string clearing the detection monitor
            - send_btn: Re-enabled send button component
            - msg_input: Re-enabled message input component
    """
    if session_id and session_state_manager.session_exists(session_id):
        session_state_manager.cancel_session(session_id)
        session_state_manager.clear_conversation(session_id)

        multi_agent_logger.log_session_event(
            session_id=session_id,
            event_type="conversation_cleared"
        )

        return (
            [],  # chatbot
            [],  # generated_images
            "",  # detection_monitor
            gr.Button(interactive=True),  # Re-enable send button
            gr.Textbox(interactive=True)  # Re-enable message input
        )
    else:
        return (
            [],  # chatbot
            [],  # generated_images
            "",  # detection_monitor
            gr.Button(interactive=True),  # Re-enable send button
            gr.Textbox(interactive=True)  # Re-enable message input
        )



| 350 |

+

def create_interface():
    """
    Create and configure the complete Gradio web interface with streaming support.

    Returns:
        gr.Blocks: Complete Gradio application interface
    """

    with gr.Blocks(
        title="DeepForest Multi-Agent System",
        theme=gr.themes.Default(
            spacing_size=gr.themes.sizes.spacing_sm,
            radius_size=gr.themes.sizes.radius_none,
            primary_hue=gr.themes.colors.emerald,
            secondary_hue=gr.themes.colors.lime
        )
    ) as app:

        # Gradio State variables
        uploaded_image_state = gr.State(None)
        generated_images_state = gr.State([])
        session_id_state = gr.State(None)

        gr.Markdown("# DeepForest Multi-Agent System")
        gr.Markdown("*DeepForest with SmolLM3-3B + Qwen-VL-3B-Instruct + Llama 3.2-3B-Instruct*")

        with gr.Row():
            # Left column
            with gr.Column(scale=1):
                image_upload = gr.Image(
                    type="filepath",
                    label="Upload Ecological Image",
                    height=300
                )
                upload_status = gr.Textbox(
                    label="Upload Status",
                    value="Upload an image to begin analysis",
                    interactive=False
                )

            # Right column
            with gr.Column(scale=2):
                chatbot = gr.Chatbot(
                    label="Multi-Agent Ecological Analysis",
                    height=400,
                    visible=False,
                    show_copy_button=True,
                    type='messages'
                )

                with gr.Row():
                    msg_input = gr.Textbox(
                        placeholder="Ask about wildlife, forest health, ecological patterns...",
                        scale=4,
                        visible=False
                    )
                    send_btn = gr.Button("Analyze", scale=1, visible=False, variant="primary")
                    clear_btn = gr.Button("Clear", scale=1, visible=False)

        with gr.Row():
            generated_images_display = gr.Gallery(
                label="Annotated Images after DeepForest Detection",
                columns=2,
                height=400,
                visible=False,
                show_label=True
            )

        with gr.Row():
            with gr.Column():
                gr.Markdown("### Detection Data Monitor")

                detection_data_monitor = gr.Textbox(
                    label="Detection Data Monitor",
                    value="Upload an image and ask a question to see detection data",
                    interactive=False,
                    show_copy_button=True
                )

        with gr.Row(visible=False) as example_row:
            gr.Markdown("""
            **Multi-agent test questions:**
            - How many trees are detected, and how many of them are alive vs dead?
            - How many birds are around each dead tree?
            - What objects are in the northwest region of the image?
            - Do any birds overlap with livestock in this image?
            - What percentage of the image is covered by trees vs birds vs livestock?
            """)

        # Image upload
        image_upload.change(
            fn=upload_image,
            inputs=[image_upload],
            outputs=[
                chatbot,
                uploaded_image_state,
                upload_status,
                msg_input,
                send_btn,
                clear_btn,
                generated_images_display,
                detection_data_monitor,
                session_id_state
            ]
        ).then(
            fn=lambda: gr.Row(visible=True),
            outputs=[example_row]
        )

        # Send button with streaming
        send_btn.click(
            fn=process_message_streaming,
            inputs=[msg_input, chatbot, generated_images_state, detection_data_monitor, session_id_state],
            outputs=[chatbot, msg_input, generated_images_state, detection_data_monitor, send_btn, msg_input]
        ).then(
            fn=lambda images: images,
            inputs=[generated_images_state],
            outputs=[generated_images_display]
        )

        # Enter key with streaming
        msg_input.submit(
            fn=process_message_streaming,
            inputs=[msg_input, chatbot, generated_images_state, detection_data_monitor, session_id_state],
            outputs=[chatbot, msg_input, generated_images_state, detection_data_monitor, send_btn, msg_input]
        ).then(
            fn=lambda images: images,
            inputs=[generated_images_state],
            outputs=[generated_images_display]
        )

        clear_btn.click(
            fn=clear_chat,
            inputs=[session_id_state],
            outputs=[chatbot, generated_images_state, detection_data_monitor, send_btn, msg_input]
        ).then(
            fn=lambda: [],
            outputs=[generated_images_display]
        )

    return app


app = create_interface()

if __name__ == "__main__":
    app.launch(
        share=True,
        debug=True,
        show_error=True,
        max_threads=3
    )
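The error branch at the end of the streaming handler either overwrites a trailing assistant message (for example, a partially streamed answer) or appends a new one. Below is a minimal standalone sketch of that logic. The helper name `upsert_assistant_message` is hypothetical and not part of this PR. Factoring the branch out this way would let it be unit-tested without launching Gradio.

```python
from typing import Dict, List

# Hypothetical alias for a Gradio "messages"-style chat entry.
Message = Dict[str, str]


def upsert_assistant_message(history: List[Message], content: str) -> List[Message]:
    """Replace a trailing assistant message, or append a new one.

    Mirrors the `if chatbot_history and chatbot_history[-1]["role"] == "assistant"`
    branch in app.py. The list is modified in place and also returned.
    """
    new_message = {"role": "assistant", "content": content}
    if history and history[-1]["role"] == "assistant":
        history[-1] = new_message  # overwrite the partial/previous answer
    else:
        history.append(new_message)  # no assistant turn yet: add one
    return history


if __name__ == "__main__":
    chat = [
        {"role": "user", "content": "How many trees are detected?"},
        {"role": "assistant", "content": "Analyzing..."},
    ]
    upsert_assistant_message(chat, "ERROR: Workflow failed")
    print(len(chat), chat[-1]["content"])  # prints: 2 ERROR: Workflow failed
```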

pyproject.toml

ADDED

@@ -0,0 +1,66 @@


[project]
name = "deepforest_agent"
version = "0.1.0"
description = "AI Agent for DeepForest object detection"
authors = [
    {name = "Your Name", email = "you@example.com"}
]
requires-python = ">=3.12"
readme = "README.md"
dependencies = [
    "accelerate",
    "albumentations<2.0",
    "deepforest",
    "fastapi",
    "geopandas",
    "google-genai",
    "google-generativeai",
    "gradio",
    "gradio-image-annotation",
    "langchain",
    "langchain-community",
    "langchain-google-genai",
    "langchain-huggingface",
    "langgraph",
    "matplotlib",
    "numpy",
    "rtree",
    "num2words",
    "openai",
    "opencv-python",
    "outlines",
    "pandas",
    "pillow",
    "scikit-learn",
    "plotly",
    "pydantic",
    "pydantic-settings",
    "pytest",
    "pytest-cov",
    "python-dotenv",
    "pyyaml",
    "qwen-vl-utils",
    "rasterio",
    "requests",
    "scikit-image",
    "seaborn",
    "shapely",
    "streamlit",
    "torch",
    "torchvision",
    "tqdm",
    "transformers",
    "bitsandbytes",
]

[project.optional-dependencies]
dev = [
    "pre-commit",
    "pytest",
    "pytest-profiling",
    "yapf"
]

[build-system]
requires = ["setuptools>=61.0"]
build-backend = "setuptools.build_meta"

requirements.txt

ADDED

@@ -0,0 +1,43 @@


accelerate
albumentations<2.0
deepforest
fastapi
geopandas
google-genai
google-generativeai
gradio
gradio-image-annotation
langchain
langchain-community
langchain-google-genai
langchain-huggingface
langgraph
matplotlib
numpy
rtree
num2words
openai
opencv-python
outlines
pandas
scikit-learn
pillow
plotly
pydantic
pydantic-settings
pytest
pytest-cov
python-dotenv
pyyaml
qwen-vl-utils
rasterio
requests
scikit-image
seaborn
shapely
streamlit
torch
torchvision
tqdm
transformers
bitsandbytes

src/__init__.py

ADDED

File without changes

src/deepforest_agent/__init__.py

ADDED

File without changes

src/deepforest_agent/agents/__init__.py

ADDED

File without changes

src/deepforest_agent/agents/deepforest_detector_agent.py

ADDED

@@ -0,0 +1,403 @@
