Apply for community grant: Academic project

MAPL: Parameter-Efficient Adaptation of Unimodal Pre-Trained Models for Vision-Language Few-Shot Prompting

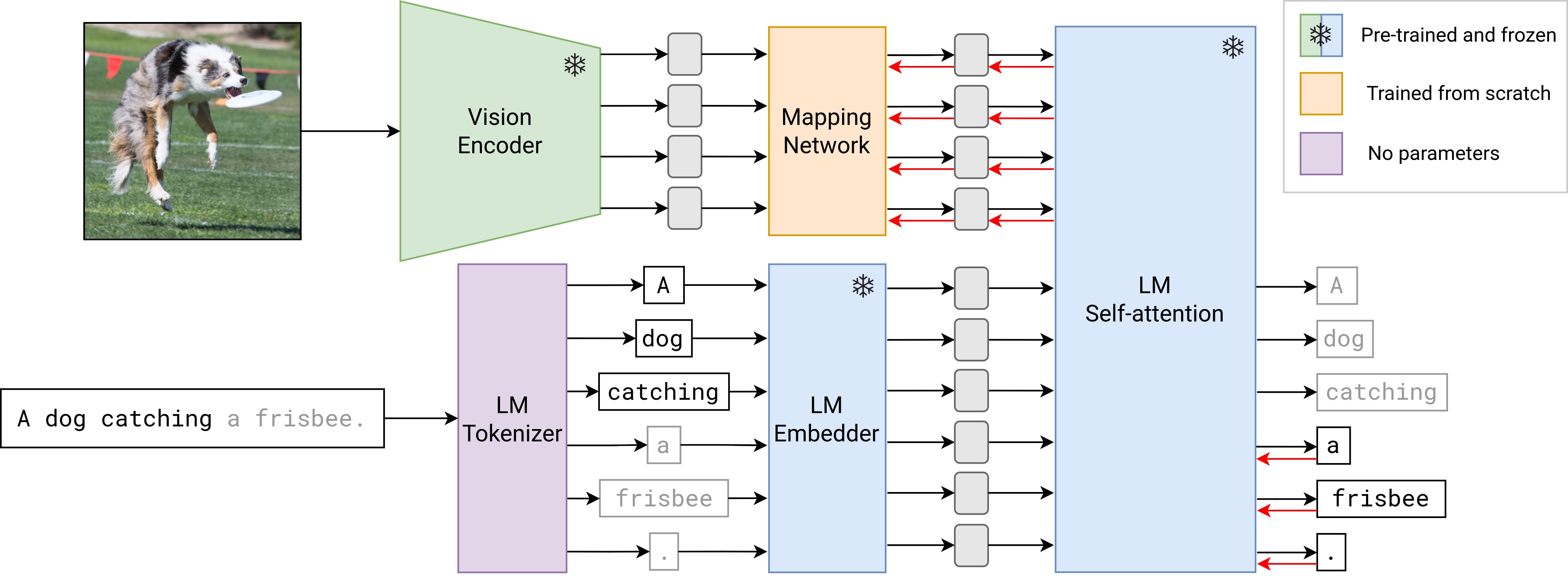

Large pre-trained models have proved to be remarkable zero- and (prompt-based) few-shot learners in unimodal vision and language tasks. We propose MAPL, a simple and parameter-efficient method that reuses frozen pre-trained unimodal models and leverages their strong generalization capabilities in multimodal vision-language (VL) settings. MAPL learns a lightweight mapping between the representation spaces of unimodal models using aligned image-text data, and can generalize to unseen VL tasks from just a few in-context examples. MAPL can be trained in just a few hours using modest computational resources and public datasets.

MAPL was recently accepted at EACL 2023! A GPU grant would help us greatly in showcasing the paper through a live demo at the conference.

Paper: https://arxiv.org/abs/2210.07179

Code + weights: https://github.com/mair-lab/mapl