Apply for community grant: Personal project (gpu)

Hugging Research - Community Grant Application

Overview

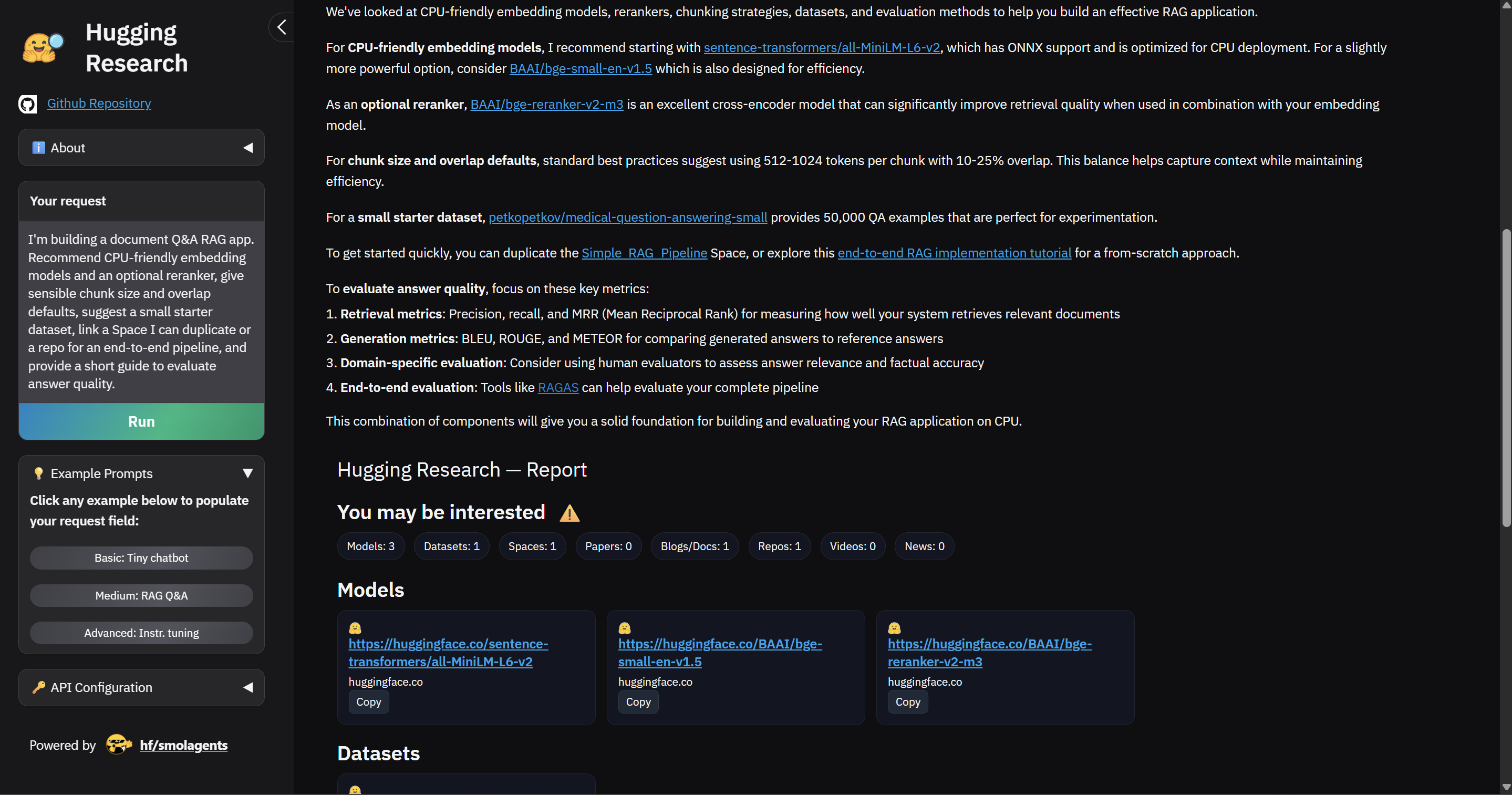

HuggingResearch is a CodeAgent built on Hugging Face’s Open Deep Research project (see the blog post "Open-source DeepResearch – Freeing our search agents" for context and goals). It lets anyone ask natural-language questions about the Hugging Face ecosystem and get sourced answers that connect models, datasets, papers, Spaces, docs, and tutorials.

The app is live and free today. I currently cover inference with my personal API key, which will become unsustainable if community usage grows. Because the primary model (Qwen/Qwen3-Coder-480B-A35B-Instruct) is large, I’m requesting inference credits (preferred) or, alternatively, a GPU-backed Space, so that the community can use the tool at no cost.

Why this matters now

Many people seek up‑to‑date, practical guidance: which HF tutorials to follow, how to actually use Hugging Face in their setup, and where to start if they’re completely new. Recent threads include:

- r/huggingface — tutorial suggestions: https://www.reddit.com/r/huggingface/comments/1esthg0/what_huggingface_tutorials_would_you_suggest/

- r/SillyTavernAI — how to use Hugging Face: https://www.reddit.com/r/SillyTavernAI/comments/1ct56vy/how_do_i_use_huggingface/

- r/SillyTavernAI — completely new and need help: https://www.reddit.com/r/SillyTavernAI/comments/1iiv9b8/completely_new_to_this_and_need_help_on_how_to/

- r/learnmachinelearning — how HF is actually used: https://www.reddit.com/r/learnmachinelearning/comments/1ldw0bt/confused_about_how_hugging_face_is_actually_used/

The tool also serves advanced practitioners and researchers: it speeds up surveying model and dataset options, helps them stay on top of recent updates, and links straight to cited HF resources for quicker decisions in daily workflows.

What’s built (on Open Deep Research)

- A domain‑specific CodeAgent built on the Open Deep Research project, focused solely on the Hugging Face platform and everything it offers.

- Natural‑language search across models, datasets, papers, Spaces, and docs, with citations.

- Clear related context for any item (e.g., for a model: show related datasets, papers, and example Spaces) and simple how‑tos for common HF tasks (fine‑tune, deploy to Spaces).
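
As a rough illustration of the kind of lookup behind these features, the sketch below builds search URLs for the public Hub API list endpoints (`/api/models`, `/api/datasets`, `/api/spaces`) using their `search` and `limit` query parameters. It is not the agent's actual code: the function names `hub_search_url` and `related_search_urls` are hypothetical, and papers and docs are left out because this sketch only covers those three list endpoints.

```python
from urllib.parse import urlencode

HUB_API = "https://huggingface.co/api"

# Resource kinds covered by this sketch (each has a list endpoint on the Hub API).
KINDS = ("models", "datasets", "spaces")


def hub_search_url(kind: str, query: str, limit: int = 5) -> str:
    """Build a Hub API list URL such as /api/models?search=...&limit=..."""
    if kind not in KINDS:
        raise ValueError(f"unsupported kind: {kind!r}")
    params = urlencode({"search": query, "limit": limit})
    return f"{HUB_API}/{kind}?{params}"


def related_search_urls(query: str, limit: int = 5) -> dict[str, str]:
    """Return one search URL per resource kind so results can be shown side by side."""
    return {kind: hub_search_url(kind, query, limit) for kind in KINDS}
```

Fetching any of these URLs with an HTTP client returns a JSON list of matching items, which an agent tool could then summarize and cite.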

Request

- Inference credits (preferred), or a Space with a dedicated GPU (e.g., A10G/24GB or similar) to ensure stable latency and scale without shifting costs to the community.

Next steps (if usage grows)

- Keep it fully open‑source and transparent (sources cited, methods documented).

- Focus on clear, helpful answers for both newcomers and power users; iterate based on real usage.

- Explore further retrieval and latency improvements and, if requested by the community, additional language support.

Technical details

- Available tools in agent:

  - Hugging Face Hub API endpoints (via hf_* tools); Hub API docs: https://huggingface.co/docs/hub/en/api

  - Web search and basic navigation (DuckDuckGo search, visit/page up/down/find, archive search)

- Default model configuration:

  - Qwen/Qwen3-Coder-480B-A35B-Instruct (HF Inference API)

  - Optional deployment with local/Ollama

- Rationale for model choice and trade-offs:

  - I prioritize a very large context window so the agent can synthesize multiple long HF resources (model cards, docs, tutorials, papers) in a single pass. Smaller models such as Qwen/Qwen3-Coder-30B-A3B-Instruct or Qwen/Qwen2.5-Coder-32B-Instruct can work, but the agent’s tool calls accumulate long contexts; if the window is exceeded, response quality may degrade or the model may fail to answer. Inference credits or a dedicated GPU would let me sustain the large-context setup reliably for community use.
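
To make the context-accumulation concern concrete, here is a minimal sketch of how tool outputs can fill a fixed window and what a simple fallback might look like. Everything in it is an assumption for illustration: the `ContextBudget` class is hypothetical, the 4-characters-per-token estimate is a crude stand-in for a real tokenizer, and dropping the oldest steps is just one possible strategy, not necessarily what the agent does.

```python
from dataclasses import dataclass, field


def estimate_tokens(text: str) -> int:
    """Crude estimate of about 4 characters per token (an assumption, not a tokenizer)."""
    return max(1, len(text) // 4)


@dataclass
class ContextBudget:
    max_tokens: int  # hypothetical window size for the chosen model
    steps: list[str] = field(default_factory=list)

    def used(self) -> int:
        """Estimated tokens currently held across all kept steps."""
        return sum(estimate_tokens(step) for step in self.steps)

    def add(self, tool_output: str) -> int:
        """Append a tool output, then drop the oldest steps until the total fits.

        Returns the number of steps dropped. The newest step is always kept,
        even if it alone exceeds the budget.
        """
        self.steps.append(tool_output)
        dropped = 0
        while self.used() > self.max_tokens and len(self.steps) > 1:
            self.steps.pop(0)
            dropped += 1
        return dropped
```

With a small window, every long model card or doc page pushes earlier evidence out of the context, which is the quality loss described above; a larger window simply postpones the point at which dropping starts.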

Note: The project is new, so usage may start small or spike. The goal is to keep the tool ready and available for the whole community to use freely without hitting paywalls.

Feedback

Please let me know your thoughts. This is a community preview aligned with the Open Deep Research effort. If Hugging Face is developing something similar officially, I’m happy to align. The goal is to help people who are not yet familiar with everything the HF ecosystem offers. Comments and feedback are very welcome.