
---
title: cmkj-gpt
app_file: web_demo.py
sdk: gradio
sdk_version: 3.41.2
---

Qwen-7B 🤖 | 🤗 | Qwen-7B-Chat 🤖 | 🤗 | Qwen-7B-Chat-Int4 🤗

WeChat | Discord | Demo | Report

We open-source Qwen-7B and Qwen-7B-Chat on both 🤖 ModelScope and 🤗 Hugging Face (click the logos at the top to visit the repos with code and checkpoints). This repo includes a brief introduction to Qwen-7B, usage guidance, and a link to a technical memo that provides more information.

Qwen-7B is the 7B-parameter version of Qwen (abbr. Tongyi Qianwen), the large language model series proposed by Alibaba Cloud. Qwen-7B is a Transformer-based large language model pretrained on a large volume of data, including web texts, books, and code. Additionally, based on the pretrained Qwen-7B, we release Qwen-7B-Chat, a large-model-based AI assistant trained with alignment techniques. The features of the Qwen-7B series include:

- Trained with high-quality pretraining data. We have pretrained Qwen-7B on a self-constructed, large-scale, high-quality dataset of over 2.2 trillion tokens. The dataset includes plain text and code, and it covers a wide range of domains, including both general-domain and professional-domain data.

- Strong performance. Compared with models of similar size, Qwen-7B outperforms its competitors on a series of benchmark datasets that evaluate natural language understanding, mathematics, coding, etc.

- Better language support. Our tokenizer, based on a large vocabulary of over 150K tokens, is more efficient than other tokenizers. It is friendly to many languages, and it makes it easier for users to further fine-tune Qwen-7B to extend its understanding of a particular language.

- Support for 8K context length. Both Qwen-7B and Qwen-7B-Chat support a context length of 8K tokens, which allows long inputs.

- Support for plugins. Qwen-7B-Chat is trained with plugin-related alignment data, so it can use tools, including APIs, models, and databases, and can act as an agent.

The following sections include information that you might find helpful. In particular, we advise you to read the FAQ section before opening an issue.

News and Updates

- 2023.8.21 We release Qwen-7B-Chat-Int4, the Int4 quantized model of Qwen-7B-Chat, which has lower memory costs and faster inference. There is no significant performance degradation in benchmark evaluations.

- 2023.8.3 We release both Qwen-7B and Qwen-7B-Chat on ModelScope and Hugging Face. We also provide a technical memo for more details about the model, including training details and model performance.

Performance

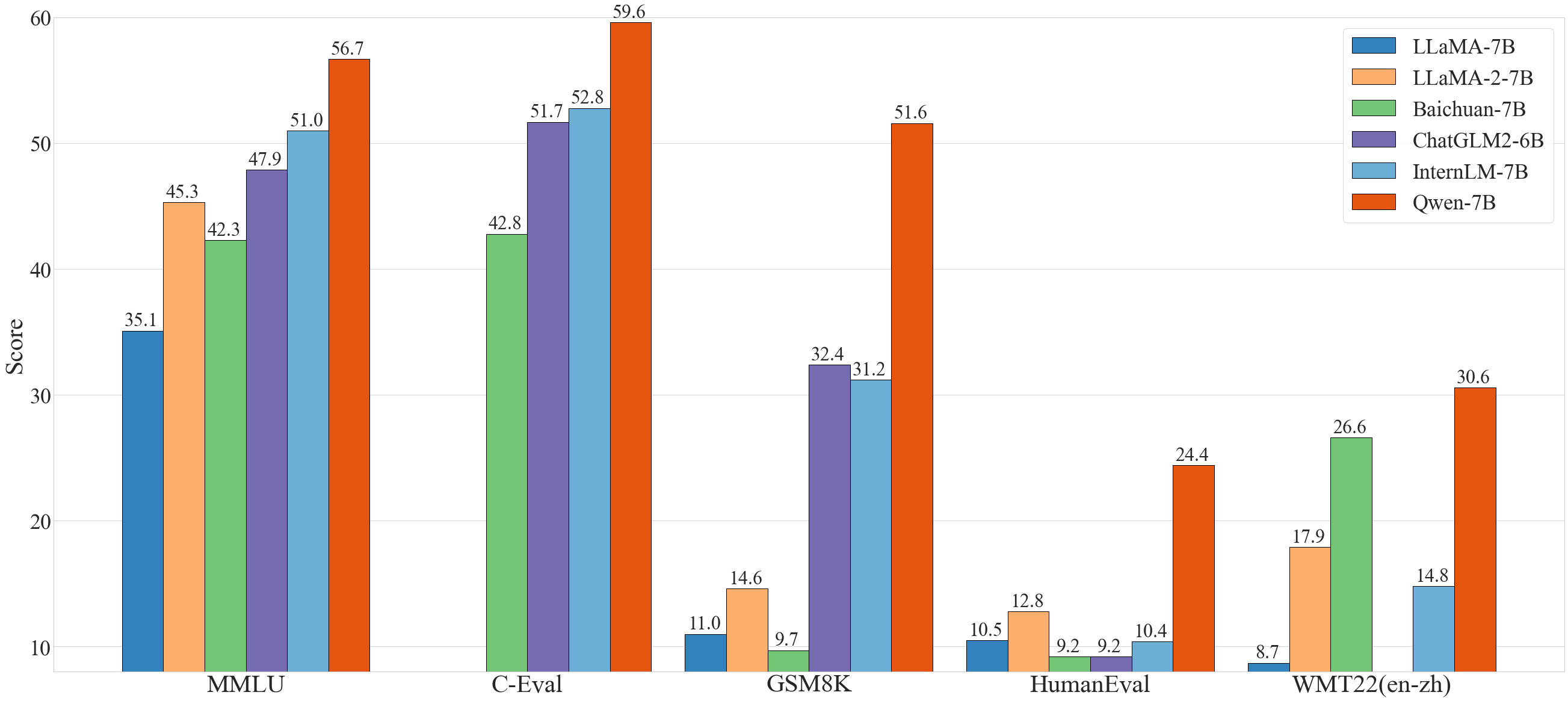

In general, on a series of benchmark datasets (e.g., MMLU, C-Eval, GSM8K, HumanEval, WMT22, and CMMLU) that evaluate natural language understanding, mathematical problem solving, coding, etc., Qwen-7B outperforms baseline models of similar size and, on most benchmarks, even outperforms larger models of around 13B parameters. See the results below.

| Model | MMLU | C-Eval | GSM8K | HumanEval | WMT22 (en-zh) | CMMLU |
|---|---|---|---|---|---|---|
| LLaMA-7B | 35.1 | - | 11.0 | 10.5 | 8.7 | - |
| LLaMA 2-7B | 45.3 | - | 14.6 | 12.8 | 17.9 | - |
| Baichuan-7B | 42.3 | 42.8 | 9.7 | 9.2 | 26.6 | 44.4 |
| ChatGLM2-6B | 47.9 | 51.7 | 32.4 | 9.2 | - | 48.8 |
| InternLM-7B | 51.0 | 52.8 | 31.2 | 10.4 | 14.8 | - |
| Baichuan-13B | 51.6 | 53.6 | 26.6 | 12.8 | 30.0 | 55.8 |
| LLaMA-13B | 46.9 | 35.5 | 17.8 | 15.8 | 12.0 | - |
| LLaMA 2-13B | 54.8 | - | 28.7 | 18.3 | 24.2 | - |
| ChatGLM2-12B | 56.2 | 61.6 | 40.9 | - | - | - |
| Qwen-7B | 56.7 | 59.6 | 51.6 | 24.4 | 30.6 | 58.8 |

Additionally, in the third-party evaluation of large language models conducted by OpenCompass, Qwen-7B and Qwen-7B-Chat are the top 7B-parameter models. This evaluation consists of a large number of public benchmarks for evaluating language understanding and generation, coding, mathematics, reasoning, etc.

For more experimental results (detailed model performance on more benchmark datasets) and further details, please refer to our technical memo.

Requirements

- Python 3.8 and above

- PyTorch 1.12 and above (2.0 and above recommended)

- CUDA 11.4 and above recommended (for GPU users, flash-attention users, etc.)
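As a rough way to check an environment against the requirements above, the sketch below compares version strings numerically. The helper names `parse_version` and `check_requirements` are hypothetical, and the parsing is deliberately simple (it keeps only the leading numeric components), so treat it as a convenience under those assumptions rather than an official check.

```python
import re
import sys
from typing import List, Optional, Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Keep the leading numeric components, e.g. "2.0.1+cu117" -> (2, 0, 1)."""
    numbers = []
    for part in text.split("."):
        match = re.match(r"\d+", part)
        if match is None:
            break
        numbers.append(int(match.group()))
    return tuple(numbers)


def check_requirements(python: Tuple[int, ...], torch_version: Optional[str],
                       cuda_version: Optional[str]) -> List[str]:
    """Return human-readable problems; an empty list means every check passed."""
    problems = []
    if python < (3, 8):
        problems.append("Python 3.8 or above is required")
    if torch_version is None:
        problems.append("PyTorch is not installed")
    elif parse_version(torch_version) < (1, 12):
        problems.append("PyTorch 1.12 or above is required")
    elif parse_version(torch_version) < (2, 0):
        problems.append("PyTorch 2.0 or above is recommended")
    # CUDA is only a recommendation, and only relevant for GPU builds
    if cuda_version is not None and parse_version(cuda_version) < (11, 4):
        problems.append("CUDA 11.4 or above is recommended")
    return problems


if __name__ == "__main__":
    try:
        import torch
        torch_version, cuda_version = torch.__version__, torch.version.cuda
    except ImportError:
        torch_version, cuda_version = None, None
    for problem in check_requirements(sys.version_info[:3], torch_version, cuda_version):
        print("-", problem)
```

`torch.version.cuda` is `None` on CPU-only PyTorch builds, which is why the CUDA check is skipped in that case.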

Quickstart

Below, we provide simple examples to show how to use Qwen-7B with 🤖 ModelScope and 🤗 Transformers.

Before running the code, make sure you have set up an environment that meets the above requirements, and then install the dependencies:

```bash
pip install -r requirements.txt
```

If your device supports fp16 or bf16, we recommend installing flash-attention for higher efficiency and lower memory usage. (flash-attention is optional; the project runs normally without it.)

```bash
git clone -b v1.0.8 https://github.com/Dao-AILab/flash-attention
cd flash-attention && pip install .
# Below are optional. Installing them might be slow.
# pip install csrc/layer_norm
# pip install csrc/rotary
```
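Whether flash-attention is worth installing depends on fp16/bf16 support. The sketch below (hypothetical helper names) checks whether the `flash_attn` package is importable and maps device capabilities onto the `bf16=True` / `fp16=True` keyword arguments used in the Qwen loading examples. The preference order (bf16 over fp16, default precision on CPU) is an assumption for illustration, not the logic of the model's auto mode.

```python
import importlib.util
from typing import Dict


def flash_attn_installed() -> bool:
    """True if the flash_attn package can be found on the import path."""
    return importlib.util.find_spec("flash_attn") is not None


def precision_kwargs(cuda_available: bool, bf16_supported: bool) -> Dict[str, bool]:
    """Choose loading flags: prefer bf16, fall back to fp16, keep defaults on CPU."""
    if not cuda_available:
        return {}  # CPU: keep the default precision
    if bf16_supported:
        return {"bf16": True}
    return {"fp16": True}


if __name__ == "__main__":
    try:
        import torch
        cuda = torch.cuda.is_available()
        # only query bf16 support when a CUDA device is present
        bf16 = cuda and torch.cuda.is_bf16_supported()
    except ImportError:
        cuda = bf16 = False
    print("flash-attention installed:", flash_attn_installed())
    print("suggested kwargs:", precision_kwargs(cuda, bf16))
```

The returned dict could be unpacked into `AutoModelForCausalLM.from_pretrained(..., trust_remote_code=True, **kwargs)`; leaving it empty corresponds to the auto-mode call in the examples.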

Now you can start with ModelScope or Transformers.

🤗 Transformers

To use Qwen-7B-Chat for inference, all you need is a few lines of code, as demonstrated below. Please make sure that you are using the latest code.

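```python
from transformers import AutoModelForCausalLM, AutoTokenizer
from transformers.generation import GenerationConfig

# Note: The default behavior now has injection attack prevention off.
tokenizer = AutoTokenizer.from_pretrained("Qwen/Qwen-7B-Chat", trust_remote_code=True)

# use bf16
# model = AutoModelForCausalLM.from_pretrained("Qwen/Qwen-7B-Chat", device_map="auto", trust_remote_code=True, bf16=True).eval()
# use fp16
# model = AutoModelForCausalLM.from_pretrained("Qwen/Qwen-7B-Chat", device_map="auto", trust_remote_code=True, fp16=True).eval()
# use cpu only
# model = AutoModelForCausalLM.from_pretrained("Qwen/Qwen-7B-Chat", device_map="cpu", trust_remote_code=True).eval()
# use auto mode, automatically select precision based on the device.
model = AutoModelForCausalLM.from_pretrained("Qwen/Qwen-7B-Chat", device_map="auto", trust_remote_code=True).eval()

# Specify hyperparameters for generation
model.generation_config = GenerationConfig.from_pretrained("Qwen/Qwen-7B-Chat", trust_remote_code=True)

# 1st dialogue turn ("你好" = "Hello")
response, history = model.chat(tokenizer, "你好", history=None)
print(response)
# Example output (translated from Chinese): Hello! Glad to help you.

# 2nd dialogue turn ("Tell me a story about a young person who works hard
# to start a business and eventually succeeds.")
response, history = model.chat(tokenizer, "给我讲一个年轻人奋斗创业最终取得成功的故事。", history=history)
print(response)
# Example output (translated from Chinese):
# This is a story about a young person who works hard to start a business and eventually succeeds.
# The protagonist is Li Ming, who comes from an ordinary family; both of his parents are ordinary workers. From an early age, Li Ming set himself a goal: to become a successful entrepreneur.
# To reach this goal, Li Ming studied hard and got into university. There he took part in many entrepreneurship competitions and won quite a few awards. He also used his spare time for internships and gained valuable experience.
# After graduating, Li Ming decided to start his own business. He looked for investment, but was rejected many times. Still, he did not give up. He kept working, improving his business plan and seeking new investment opportunities.
# Eventually, Li Ming secured an investment and began his entrepreneurial journey. He founded a technology company focused on developing new software. Under his leadership, the company grew rapidly into a successful technology enterprise.
# Li Ming's success was no accident. He was diligent, resilient, willing to take risks, and kept learning and improving himself. His success also shows that anyone can succeed as long as they work hard.

# 3rd dialogue turn ("Give this story a title")
response, history = model.chat(tokenizer, "给这个故事起一个标题", history=history)
print(response)
# Example output (translated from Chinese): "Striving to Start a Business: A Young Person's Road to Success"
```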
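The three turns above follow one pattern: each `model.chat` call returns an updated `history` that is passed into the next call. The sketch below wraps that loop in a hypothetical `run_dialogue` helper and exercises it with `EchoChatModel`, a stub invented here so the code runs without downloading weights. Storing history as a list of `(query, response)` pairs in the stub is an assumption; with the real model you would pass `model` and `tokenizer` from the example above and treat `history` as opaque.

```python
from typing import Any, List, Optional, Tuple

History = List[Tuple[str, str]]


def run_dialogue(model: Any, tokenizer: Any, queries: List[str]) -> Tuple[List[str], Optional[History]]:
    """Send queries one by one, feeding each returned history into the next turn."""
    history: Optional[History] = None  # the first turn starts without history
    responses = []
    for query in queries:
        response, history = model.chat(tokenizer, query, history=history)
        responses.append(response)
    return responses, history


class EchoChatModel:
    """Hypothetical stand-in that mimics the (response, history) contract of model.chat."""

    def chat(self, tokenizer: Any, query: str, history: Optional[History] = None):
        history = list(history or [])  # copy so the caller's list is not mutated
        response = f"turn {len(history) + 1}: {query}"
        history.append((query, response))
        return response, history


responses, history = run_dialogue(EchoChatModel(), None, ["Hello", "Give this story a title"])
print(responses)
```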

Running the pretrained Qwen-7B base model is also simple:

```python
from transformers import AutoModelForCausalLM, AutoTokenizer
from transformers.generation import GenerationConfig

tokenizer = AutoTokenizer.from_pretrained("Qwen/Qwen-7B", trust_remote_code=True)

# use bf16
# model = AutoModelForCausalLM.from_pretrained("Qwen/Qwen-7B", device_map="auto", trust_remote_code=True, bf16=True).eval()
# use fp16
# model = AutoModelForCausalLM.from_pretrained("Qwen/Qwen-7B", device_map="auto", trust_remote_code=True, fp16=True).eval()
# use cpu only
# model = AutoModelForCausalLM.from_pretrained("Qwen/Qwen-7B", device_map="cpu", trust_remote_code=True).eval()
# use auto mode, automatically select precision based on the device.
model = AutoModelForCausalLM.from_pretrained("Qwen/Qwen-7B", device_map="auto", trust_remote_code=True).eval()

# Specify hyperparameters for generation
model.generation_config = GenerationConfig.from_pretrained("Qwen/Qwen-7B", trust_remote_code=True)

# Few-shot prompt: "The capital of Mongolia is Ulaanbaatar / The capital of Iceland is Reykjavik / The capital of Ethiopia is"
inputs = tokenizer('蒙古国的首都是乌兰巴托(Ulaanbaatar)\n冰岛的首都是雷克雅未克(Reykjavik)\n埃塞俄比亚的首都是', return_tensors='pt')
inputs = inputs.to(model.device)
pred = model.generate(**inputs)
print(tokenizer.decode(pred.cpu()[0], skip_special_tokens=True))
# Example output (translated from Chinese):
# The capital of Mongolia is Ulaanbaatar\nThe capital of Iceland is Reykjavik\nThe capital of Ethiopia is Addis Ababa...
```
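The base model is steered by the few-shot pattern in its prompt rather than by chat turns. The sketch below builds such a prompt from completed examples plus an unfinished query; `build_few_shot_prompt` is a hypothetical helper, and with `sep=""` it reproduces the Chinese prompt used above exactly.

```python
from typing import List, Tuple


def build_few_shot_prompt(examples: List[Tuple[str, str]], query: str, sep: str = " ") -> str:
    """Join completed examples and the open query, one per line."""
    lines = [f"{prefix}{sep}{answer}" for prefix, answer in examples]
    lines.append(query)  # left unfinished so the base model completes it
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    [("The capital of Mongolia is", "Ulaanbaatar"),
     ("The capital of Iceland is", "Reykjavik")],
    "The capital of Ethiopia is",
)
print(prompt)
```

The result goes into `tokenizer(prompt, return_tensors='pt')` as above; passing `max_new_tokens` to `model.generate` (a standard transformers argument) caps how far the base model continues past the answer.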

🤖 ModelScope

ModelScope is an open-source platform for Model-as-a-Service (MaaS), which provides flexible and cost-effective model services to AI developers. Similarly, you can run the models with ModelScope as shown below:

```python
from modelscope.pipelines import pipeline
from modelscope.utils.constant import Tasks
from modelscope import snapshot_download

model_id = 'QWen/qwen-7b-chat'
revision = 'v1.0.0'
model_dir = snapshot_download(model_id, revision=revision)
pipe = pipeline(task=Tasks.chat, model=model_dir, device_map='auto')

history = None
text = '浙江的省会在哪里?'  # "Where is the capital of Zhejiang province?"
results = pipe(text, history=history)
response, history = results['response'], results['history']
print(f'Response: {response}')

text = '它有什么好玩的地方呢?'  # "What fun places are there to visit?"
results = pipe(text, history=history)
response, history = results['response'], results['history']
print(f'Response: {response}')
```

Tokenizer

Our tokenizer, based on tiktoken, differs from other tokenizers such as sentencepiece tokenizers. You need to pay attention to special tokens, especially when fine-tuning. For more detailed information on the tokenizer and its use in fine-tuning, please refer to the documentation.
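One place where special tokens matter is the chat format. The sketch below assumes the ChatML-style layout used by Qwen-7B-Chat's remote code, with `<|im_start|>` / `<|im_end|>` markers and a default system prompt of "You are a helpful assistant."; the helper name `to_chatml` is hypothetical, so please verify the exact template against the tokenizer documentation before using it to build fine-tuning data.

```python
from typing import List, Tuple

# Assumed ChatML special-token markers
IM_START, IM_END = "<|im_start|>", "<|im_end|>"


def to_chatml(turns: List[Tuple[str, str]], system: str = "You are a helpful assistant.",
              add_generation_prompt: bool = True) -> str:
    """Render (role, content) turns as ChatML-style text."""
    blocks = [f"{IM_START}system\n{system}{IM_END}"]
    for role, content in turns:
        blocks.append(f"{IM_START}{role}\n{content}{IM_END}")
    if add_generation_prompt:
        blocks.append(f"{IM_START}assistant\n")  # open turn for the model to complete
    return "\n".join(blocks)


print(to_chatml([("user", "Hello")]))
```

When fine-tuning, make sure these markers end up encoded as their special-token IDs rather than as ordinary text; how the tokenizer treats them is described in its documentation.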

Quantization

Usage

Note: we provide a new solution based on AutoGPTQ and release an Int4 quantized model for Qwen-7B-Chat, Qwen-7B-Chat-Int4. Compared with the previous solution, it achieves nearly lossless model quality while improving both memory cost and inference speed.

Here we demonstrate how to use our provided quantized models for inference. Before you start, make sure you meet the requirements of AutoGPTQ and install it from source (for now, the code supporting Qwen is not yet included in the latest PyPI release):

```bash
git clone https://github.com/PanQiWei/AutoGPTQ.git && cd AutoGPTQ
pip install .
```

Then you can load the quantized model easily as shown below:

```python
from auto_gptq import AutoGPTQForCausalLM

model = AutoGPTQForCausalLM.from_quantized("Qwen/Qwen-7B-Chat-Int4", device_map="auto", trust_remote_code=True, use_safetensors=True).eval()
```

Inference then works like the basic usage demonstrated above, but remember to pass the generation configuration explicitly:

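```python
from transformers import AutoTokenizer, GenerationConfig

# The tokenizer was not loaded in the snippet above, so load it here
tokenizer = AutoTokenizer.from_pretrained("Qwen/Qwen-7B-Chat-Int4", trust_remote_code=True)
config = GenerationConfig.from_pretrained("Qwen/Qwen-7B-Chat-Int4", trust_remote_code=True)
response, history = model.chat(tokenizer, "Hi", history=None, generation_config=config)
```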

Performance

We compare the performance of the BF16 and Int4 models on several benchmarks and find that the quantized model does not suffer significant performance degradation. Results are shown below:

| Quantization | MMLU | C-Eval (val) | GSM8K | HumanEval |
|---|---|---|---|---|
| BF16 | 53.9 | 54.2 | 41.1 | 24.4 |
| Int4 | 52.6 | 52.9 | 38.1 | 23.8 |
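To put "no significant degradation" in numbers, the short calculation below computes the relative drop of each Int4 score against its BF16 counterpart, using only the values in the table above.

```python
# Scores copied from the BF16 / Int4 table above
BF16 = {"MMLU": 53.9, "C-Eval (val)": 54.2, "GSM8K": 41.1, "HumanEval": 24.4}
INT4 = {"MMLU": 52.6, "C-Eval (val)": 52.9, "GSM8K": 38.1, "HumanEval": 23.8}


def relative_drop_pct(reference: dict, quantized: dict) -> dict:
    """Percentage of the reference score lost after quantization, per benchmark."""
    return {name: (reference[name] - quantized[name]) / reference[name] * 100
            for name in reference}


for name, drop in relative_drop_pct(BF16, INT4).items():
    print(f"{name}: -{drop:.1f}%")
```

By this measure GSM8K loses the most (about 7.3% of its BF16 score), while the other three benchmarks lose roughly 2.4% to 2.5%.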

Inference Speed

We measured the average inference speed (tokens/s) of generating 2048 and 8192 tokens under BF16 precision and Int4 quantization, respectively.

| Quantization | Speed, 2048 tokens (tokens/s) | Speed, 8192 tokens (tokens/s) |
|---|---|---|
| BF16 | 30.34 | 29.32 |
| Int4 | 43.56 | 33.92 |

In detail, the profiling setting is generating 8192 new tokens from 1 context token. The profiling runs on a single A100-SXM4-80G GPU with PyTorch 2.0.1 and CUDA 11.4. The inference speed is averaged over the 8192 generated tokens.
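The profiling setup above (many new tokens from a single context token, speed averaged over the generated tokens) amounts to dividing the number of generated tokens by wall-clock time. The sketch below is not the profiling script referenced in this section; `tokens_per_second` and `fake_generate` are hypothetical names, and the stub only simulates a model so the code runs anywhere.

```python
import time
from typing import Callable


def tokens_per_second(generate: Callable[[int], int], num_new_tokens: int) -> float:
    """Time one generation call; `generate` returns how many tokens it produced."""
    start = time.perf_counter()
    produced = generate(num_new_tokens)
    elapsed = time.perf_counter() - start
    return produced / elapsed


def fake_generate(n: int) -> int:
    """Hypothetical stand-in for a model: pretend each token takes 1 ms."""
    time.sleep(n / 1000)
    return n


# With a real model (assumption: `model` and `inputs` prepared as in the Quickstart,
# with a single context token), a matching callable could be:
# def real_generate(n):
#     out = model.generate(**inputs, max_new_tokens=n, min_new_tokens=n)
#     return out.shape[1] - inputs["input_ids"].shape[1]

print(f"{tokens_per_second(fake_generate, 50):.0f} tokens/s")  # at most about 1000 here
```

Setting both `max_new_tokens` and `min_new_tokens` forces a fixed output length, so runs stay comparable even if the model would otherwise stop early.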

GPU Memory Usage

We also profile the peak GPU memory usage for encoding 2048 tokens as context (and generating a single token) and for generating 8192 tokens (with a single token as context) under BF16 and Int4 quantization, respectively. The results are shown below.

| Quantization | Peak Usage for Encoding 2048 Tokens | Peak Usage for Generating 8192 Tokens |
|---|---|---|
| BF16 | 17.66 GB | 22.58 GB |
| Int4 | 8.21 GB | 13.62 GB |
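A back-of-the-envelope estimate helps when reading this table: the weights alone need parameters x bits per parameter / 8 bytes. The sketch below assumes a nominal 7 x 10^9 parameters, which is an approximation rather than Qwen-7B's exact count.

```python
def weight_memory_gib(num_params: float, bits_per_param: float) -> float:
    """Memory needed just to hold the weights, in GiB (2**30 bytes)."""
    return num_params * bits_per_param / 8 / 2**30


NUM_PARAMS = 7e9  # assumption: nominal 7B; the real parameter count differs somewhat
for label, bits in [("BF16", 16), ("Int4", 4)]:
    print(f"{label}: ~{weight_memory_gib(NUM_PARAMS, bits):.1f} GiB of weights")
```

This gives about 13.0 GiB for BF16 and 3.3 GiB for Int4. The measured peaks above are higher because they also include activations, the KV cache, and any parts of the model kept at higher precision.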

The above speed and memory profiling are conducted using this script.

Demo

Web UI

We provide code for users to build a web UI demo (thanks to @wysaid). Before you start, make sure you install the following packages:

```bash
pip install -r requirements_web_demo.txt
```

Then run the command below and click on the generated link:

```bash
python web_demo.py
```

CLI Demo

We provide a CLI demo in cli_demo.py, which supports streaming output. Users can interact with Qwen-7B-Chat by entering prompts, and the model streams its outputs back. Run the command below:

```bash
python cli_demo.py
```

API

We provide a way to deploy a local API compatible with the OpenAI API (thanks to @hanpenggit). Before you start, install the required packages:

```bash
pip install fastapi uvicorn openai pydantic sse_starlette
```

Then run the command to deploy your API:

```bash
python openai_api.py
```

You can change the arguments, e.g., -c for the checkpoint name or path, --cpu-only for CPU deployment, etc. If you run into problems launching the API, updating the packages to their latest versions will likely solve them.

Using the API is also simple. See the example below:

```python
import openai  # this example uses the legacy interface of openai<1.0

openai.api_base = "http://localhost:8000/v1"
openai.api_key = "none"

# create a request activating streaming response
for chunk in openai.ChatCompletion.create(
    model="Qwen",
    messages=[
        {"role": "user", "content": "你好"}  # "Hello"
    ],
    stream=True
    # Specifying stop words in streaming output format is not yet supported and is under development.
):
    if hasattr(chunk.choices[0].delta, "content"):
        print(chunk.choices[0].delta.content, end="", flush=True)

# create a request not activating streaming response
response = openai.ChatCompletion.create(
    model="Qwen",
    messages=[
        {"role": "user", "content": "你好"}  # "Hello"
    ],
    stream=False,
    stop=[]  # You can add custom stop words here, e.g., stop=["Observation:"] for ReAct prompting.
)
print(response.choices[0].message.content)
```
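If you prefer not to depend on the `openai` package (whose `ChatCompletion` interface was removed in openai 1.0), the same endpoint can be called with the standard library. The sketch below assumes the server started by `openai_api.py` exposes the OpenAI-style route `/v1/chat/completions` at the `api_base` used above and returns the usual `choices[0].message.content` shape; `build_chat_request` and `chat_once` are hypothetical helper names, and only the non-streaming case is covered.

```python
import json
import urllib.request
from typing import Dict, List, Optional

API_BASE = "http://localhost:8000/v1"  # same address as openai.api_base in the example


def build_chat_request(messages: List[Dict[str, str]], model: str = "Qwen",
                       stop: Optional[List[str]] = None) -> urllib.request.Request:
    """Build a non-streaming POST request for the chat completions route."""
    payload = {"model": model, "messages": messages, "stream": False}
    if stop:
        payload["stop"] = stop  # e.g. ["Observation:"] for ReAct prompting
    return urllib.request.Request(
        f"{API_BASE}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat_once(messages: List[Dict[str, str]], **kwargs) -> str:
    """Send the request (requires the local server to be running) and return the reply."""
    with urllib.request.urlopen(build_chat_request(messages, **kwargs)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


# Usage, with the server running:
# print(chat_once([{"role": "user", "content": "Hello"}]))
```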

Function calling is also supported (but only when stream=False for the moment). See the example usage here.

Deployment

Running the model on CPU is simple; just specify the device:

```python
from transformers import AutoModelForCausalLM

model = AutoModelForCausalLM.from_pretrained("Qwen/Qwen-7B-Chat", device_map="cpu", trust_remote_code=True).eval()
```

If you lack GPU memory and would like to run the model on more than one GPU, you can use the provided script utils.py:

```python
from utils import load_model_on_gpus

model = load_model_on_gpus('Qwen/Qwen-7B-Chat', num_gpus=2)
```

Then you can run the 7B chat model on 2 GPUs using the above scripts.
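For intuition about what splitting a model across GPUs involves, the sketch below builds a `device_map` dictionary that assigns transformer blocks to GPUs in contiguous, roughly equal groups; such a dictionary can be passed as `device_map=` to `from_pretrained`. This is not the implementation in `utils.py`: the helper name `even_device_map` is hypothetical, and the module names (`transformer.wte`, `transformer.h.{i}`, `transformer.ln_f`, `lm_head`) and the layer count are assumptions to check against `print(model)` before use. The real model may have further modules that also need an entry.

```python
from typing import Dict


def even_device_map(num_layers: int, num_gpus: int) -> Dict[str, int]:
    """Assign blocks 0..num_layers-1 to GPUs in contiguous, near-equal chunks."""
    per_gpu = -(-num_layers // num_gpus)  # ceiling division
    device_map = {
        "transformer.wte": 0,              # assumed embedding module, placed with the first blocks
        "transformer.ln_f": num_gpus - 1,  # assumed final norm, placed with the last blocks
        "lm_head": num_gpus - 1,
    }
    for i in range(num_layers):
        device_map[f"transformer.h.{i}"] = i // per_gpu
    return device_map


# Usage sketch (assumes 32 blocks; verify for your checkpoint):
# model = AutoModelForCausalLM.from_pretrained(
#     "Qwen/Qwen-7B-Chat", device_map=even_device_map(32, 2), trust_remote_code=True).eval()
```

Keeping the embeddings with the first blocks and the head with the last blocks means activations only cross GPUs at the chunk boundaries.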

Tool Usage

Qwen-7B-Chat is specifically optimized for tool usage, including APIs, databases, and models, so that users can build their own Qwen-7B-based LangChain applications, agents, and code interpreters. On our evaluation benchmark for tool usage, Qwen-7B-Chat performs stably, with higher tool-selection accuracy and a lower false-positive error rate than GPT-4 and GPT-3.5:

| Model | Tool Selection (Acc.↑) | Tool Input (Rouge-L↑) | False Positive Error↓ |
|---|---|---|---|
| GPT-4 | 95% | 0.90 | 15% |
| GPT-3.5 | 85% | 0.88 | 75% |
| Qwen-7B-Chat | 99% | 0.89 | 9.7% |

For how to write and use ReAct prompts, please refer to the ReAct examples. Using tools enables the model to perform tasks better.
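In ReAct prompting the model alternates Thought / Action / Action Input lines, generation is stopped at "Observation:" (the stop word mentioned in the API example), and the caller runs the tool and appends its result. The sketch below parses one such step; it assumes the common ReAct field names, which may differ in detail from the template in the ReAct examples, and `parse_react_step` is a hypothetical helper.

```python
import re
from typing import Optional, Tuple

# Action name on one line, followed by its input on the next "Action Input:" line
ACTION_RE = re.compile(r"Action:\s*(.*?)\s*\nAction Input:\s*(.*)", re.DOTALL)


def parse_react_step(text: str) -> Optional[Tuple[str, str]]:
    """Return (tool_name, tool_input), or None if the model gave no action."""
    text = text.split("Observation:")[0]  # drop anything past the stop word
    match = ACTION_RE.search(text)
    if match is None:
        return None  # e.g. the model produced a final answer instead
    return match.group(1).strip(), match.group(2).strip()


step = parse_react_step(
    'Thought: I should look this up.\nAction: search\nAction Input: {"query": "Qwen-7B"}\nObservation: ...'
)
print(step)
```

The caller would run the named tool with the parsed input, append `Observation: <result>` to the prompt, and generate again until the model answers without an action.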

Additionally, we provide experimental results showing its capability to act as an agent. See Hugging Face Agent for more information. Its performance on the run-mode benchmark provided by Hugging Face is as follows:

| Model | Tool Selection↑ | Tool Used↑ | Code↑ |
|---|---|---|---|
| GPT-4 | 100 | 100 | 97.41 |
| GPT-3.5 | 95.37 | 96.30 | 87.04 |
| StarCoder-15.5B | 87.04 | 87.96 | 68.89 |
| Qwen-7B-Chat | 90.74 | 92.59 | 74.07 |

Long-Context Understanding

To break the bottleneck of the training sequence length, we introduce several techniques, including NTK-aware interpolation, window attention, and LogN attention scaling, to extend the context length to over 8K tokens. We conduct language modeling experiments on the arXiv dataset, measuring perplexity (PPL, lower is better), and find that with these techniques Qwen-7B keeps perplexity low even at 16K tokens, whereas without them perplexity rises sharply beyond 2048 tokens. Results are shown below:

| Model / Sequence Length | 1024 | 2048 | 4096 | 8192 | 16384 |
|---|---|---|---|---|---|
| Qwen-7B | 4.23 | 3.78 | 39.35 | 469.81 | 2645.09 |
| + dynamic_ntk | 4.23 | 3.78 | 3.59 | 3.66 | 5.71 |
| + dynamic_ntk + logn | 4.23 | 3.78 | 3.58 | 3.56 | 4.62 |
| + dynamic_ntk + logn + window_attn | 4.23 | 3.78 | 3.58 | 3.49 | 4.32 |
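Two of the techniques in this table can be written down compactly. In NTK-aware interpolation, the rotary-embedding (RoPE) base is enlarged by a factor alpha, giving base * alpha^(d/(d-2)) for head dimension d; in LogN attention scaling, queries at position n beyond the training length L are multiplied by log_L(n). The sketch below encodes both. The dynamic rule for choosing alpha from the current sequence length is an assumed heuristic (alpha stays 1 up to L, then grows in steps), window attention is not shown, and none of the function names come from the Qwen code.

```python
import math


def ntk_scaled_base(base: float, alpha: float, head_dim: int) -> float:
    """NTK-aware interpolation: exponent chosen so the lowest RoPE frequency is divided by alpha."""
    return base * alpha ** (head_dim / (head_dim - 2))


def dynamic_ntk_alpha(seq_len: int, train_len: int) -> float:
    """Assumed heuristic: alpha is 1 up to train_len, then grows as 2**k - 1."""
    context_value = math.log2(seq_len / train_len) + 1
    return max(2 ** math.ceil(context_value) - 1, 1)


def logn_scale(position: int, train_len: int) -> float:
    """LogN scaling: query factor log_{train_len}(position) beyond the training length."""
    if position <= train_len:
        return 1.0
    return math.log(position) / math.log(train_len)


for n in (2048, 4096, 8192, 16384):
    alpha = dynamic_ntk_alpha(n, train_len=2048)  # train_len=2048 is illustrative only
    print(n, alpha, round(ntk_scaled_base(10000.0, alpha, 128)), round(logn_scale(n, 2048), 3))
```

Raising the base mostly slows the low-frequency rotary dimensions, which are the ones that would otherwise see unfamiliar angles at long positions, while the high-frequency dimensions that encode local order barely change.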

Reproduction

To reproduce the model performance on benchmark datasets, we provide evaluation scripts. Check eval/EVALUATION.md for more information. Note that reproduced results may differ slightly from our reported results.

FAQ

If you run into problems, please check the FAQ and the existing issues for a solution before opening a new issue.

License Agreement

Researchers and developers are free to use the code and model weights of both Qwen-7B and Qwen-7B-Chat. We also allow their commercial use. Check our license at LICENSE for more details. If you have requirements for commercial use, please fill out the form to apply.

Contact Us

If you would like to leave a message for either our research team or product team, feel free to send an email to qianwen_opensource@alibabacloud.com.