---

license: apache-2.0

library_name: pytorch

---

# identity

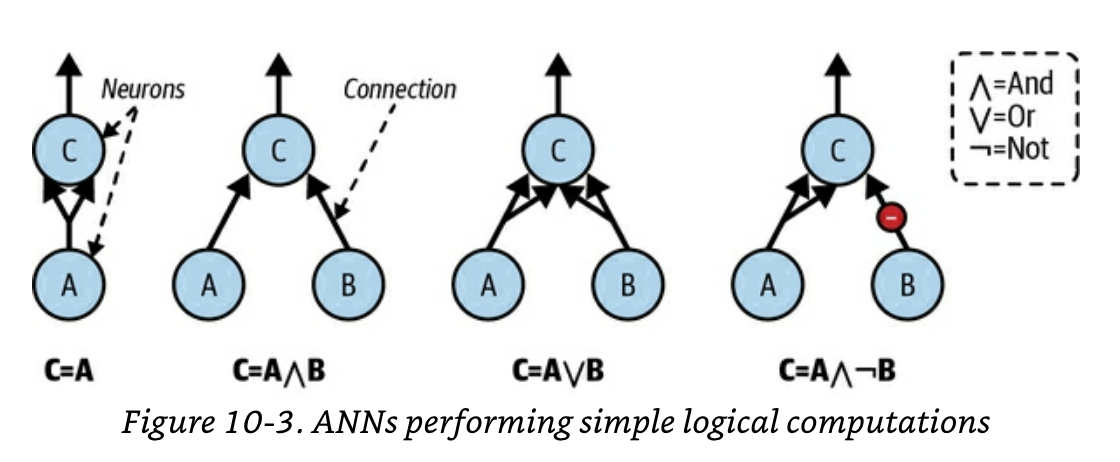

A neuron that performs the IDENTITY logical computation. It generates the following truth table:

| A | C |
| - | - |
| 0 | 0 |
| 1 | 1 |

It is inspired by McCulloch & Pitts' 1943 paper 'A Logical Calculus of the Ideas Immanent in Nervous Activity'.

It doesn't contain any parameters.

It takes as input a single column vector of zeros and ones. It outputs a single column vector of zeros and ones.

Its mechanism is outlined in Figure 10-3 of Aurélien Géron's book 'Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow'.

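Like all the other neurons in Figure 10-3, it is activated when at least two of its input connections are active. Here the single input A reaches the neuron through two connections, so the neuron fires exactly when A is 1.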
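The threshold rule described above can be sketched in plain Python, without PyTorch. This is a minimal illustration, not the author's implementation: the names `mp_neuron` and `identity_neuron` are hypothetical, and the threshold of 2 is taken from the activation rule stated above.

```python
def mp_neuron(inputs, threshold=2):
    """Fire (return 1) when at least `threshold` binary inputs are active."""
    return int(sum(inputs) >= threshold)


def identity_neuron(a):
    # Assumption: A reaches the neuron through two connections,
    # mirroring the `torch.cat([a, a], dim=1)` step in the usage code.
    return mp_neuron([a, a])


# Enumerate both possible inputs and collect (A, C) pairs.
truth_table = [(a, identity_neuron(a)) for a in (0, 1)]
print(truth_table)
```

A single active connection is not enough to reach the threshold, which is why the input has to be duplicated for the output to follow A.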

Code: https://github.com/sambitmukherjee/handson-ml3-pytorch/blob/main/chapter10/logical_computations_with_neurons.ipynb

## Usage

```python
import torch
import torch.nn as nn
from huggingface_hub import PyTorchModelHubMixin

# Let's create a column vector containing two values - `0` and `1`:
batch = torch.tensor([[0], [1]])

class IDENTITY(nn.Module, PyTorchModelHubMixin):
    def __init__(self):
        super().__init__()
        self.operation = "C = A"

    def forward(self, x):
        a = x
        # Feed A into the neuron through two connections:
        inputs = torch.cat([a, a], dim=1)
        # Count the active connections for each row of the batch:
        column_sum = torch.sum(inputs, dim=1, keepdim=True)
        # The neuron fires when at least two connections are active:
        output = (column_sum >= 2).long()
        return output

# Instantiate:
identity = IDENTITY.from_pretrained("sadhaklal/identity")

# Forward pass:
output = identity(batch)
print(output)
```
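Because the neuron has no parameters, its logic can also be checked locally without downloading anything from the Hub. The sketch below is an illustration under stated assumptions rather than part of the published model: `LocalIdentity` is a hypothetical stand-in that copies the `forward` logic above and omits `PyTorchModelHubMixin`.

```python
import torch
import torch.nn as nn


class LocalIdentity(nn.Module):
    # Hypothetical local copy of the forward logic; nothing is loaded from the Hub.
    def forward(self, x):
        inputs = torch.cat([x, x], dim=1)               # A wired in twice
        total = torch.sum(inputs, dim=1, keepdim=True)  # active connections per row
        return (total >= 2).long()


model = LocalIdentity()
batch = torch.tensor([[0], [1]])
output = model(batch)

# Count parameters to confirm the module holds none.
n_params = sum(p.numel() for p in model.parameters())

print(output.tolist())
print(n_params)
```

The output keeps the shape of the input, one row per example, and the parameter count is zero.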