You need to agree to share your contact information to access this model

This repository is publicly accessible, but you have to accept the conditions to access its files and content.

The collected information will help build a better understanding of the pyannote.audio user base and help its maintainers improve it further. Although this model uses the MIT license and will always remain open-source, we will occasionally email you about premium models and paid services around pyannote.

Using this open-source model in production?

Consider switching to pyannoteAI for better and faster options.

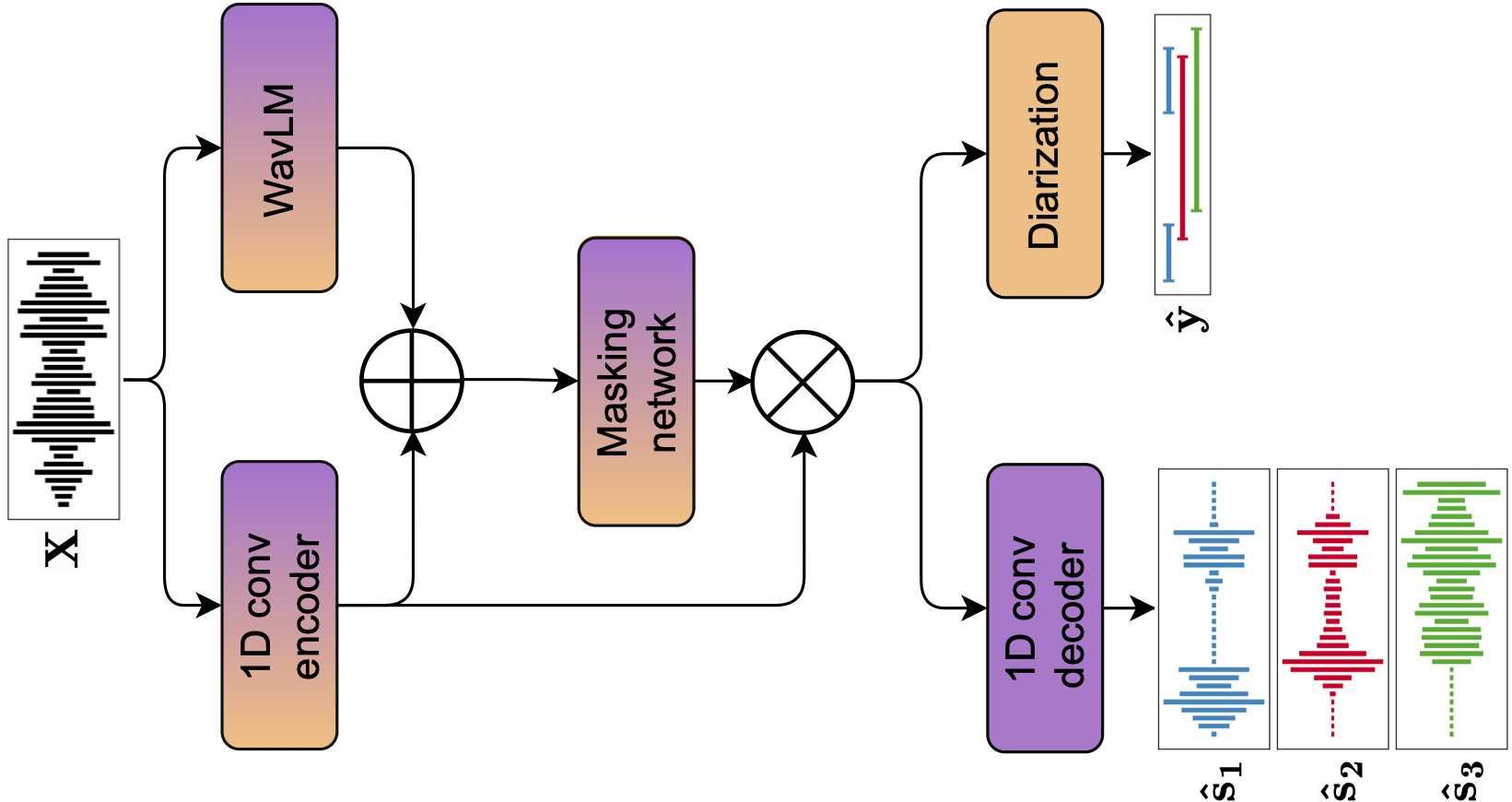

🎹 ToTaToNet / joint speaker diarization and speech separation

This model ingests 5 seconds of mono audio sampled at 16 kHz and outputs speaker diarization AND speech separation for up to 3 speakers.

It was trained by Joonas Kalda with pyannote.audio 3.3.0 on the AMI dataset (single distant microphone, SDM). The PixIT paper (see Citations) and its companion repository describe the approach in more detail.

Requirements

- Install pyannote.audio 3.3.0 with `pip install pyannote.audio[separation]==3.3.0`
- Accept pyannote/separation-ami-1.0 user conditions
- Create access token at hf.co/settings/tokens.

    from pyannote.audio import Model

    model = Model.from_pretrained(
        "pyannote/separation-ami-1.0",
        use_auth_token="HUGGINGFACE_ACCESS_TOKEN_GOES_HERE",
    )
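
Pasting the access token directly into a script makes it easy to leak by accident. A minimal sketch of one alternative is to read it from an environment variable. The helper `read_access_token` and the variable name `HF_TOKEN` are assumptions chosen for this illustration, not part of pyannote.audio.

```python
import os


def read_access_token(var_name: str = "HF_TOKEN") -> str:
    """Return the access token stored in an environment variable.

    `var_name` is a hypothetical default chosen for this sketch.
    """
    token = os.environ.get(var_name, "").strip()
    if not token:
        raise RuntimeError(
            f"Set the {var_name} environment variable to your access token."
        )
    return token


# Usage (requires pyannote.audio and accepted user conditions):
#
#   from pyannote.audio import Model
#   model = Model.from_pretrained(
#       "pyannote/separation-ami-1.0",
#       use_auth_token=read_access_token(),
#   )
```

This keeps the token out of version control, and a missing variable fails with an explicit message before any download is attempted.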

Usage

    import torch

    # The model ingests 5s of mono audio sampled at 16kHz...
    batch_size = 4  # arbitrary value, chosen only for this example
    duration = 5.0
    num_channels = 1
    sample_rate = 16000
    num_samples = int(duration * sample_rate)  # torch.randn needs integer sizes
    waveforms = torch.randn(batch_size, num_channels, num_samples)
    waveforms.shape
    # (batch_size, num_channels = 1, num_samples = 80000)

    # ... and outputs both speaker diarization and separation
    with torch.inference_mode():
        diarization, sources = model(waveforms)

    diarization.shape
    # (batch_size, num_frames = 624, max_num_speakers = 3)
    # with values between 0 (speaker inactive) and 1 (speaker active)

    sources.shape
    # (batch_size, num_samples = 80000, max_num_speakers = 3)
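
The diarization output is a score per frame and per speaker, so it usually has to be converted into time segments before it is useful. The sketch below shows one simple way to do that for a single speaker. The 0.5 threshold and the assumption that the 624 frames are evenly spaced over the 5 s chunk are illustrative simplifications, not values documented for this model, and `active_segments` is a hypothetical helper.

```python
from typing import List, Tuple

CHUNK_DURATION = 5.0  # seconds, as stated above
NUM_FRAMES = 624      # frames per chunk, as stated above


def active_segments(
    scores: List[float],
    threshold: float = 0.5,  # assumed cutoff, not documented for this model
    chunk_duration: float = CHUNK_DURATION,
) -> List[Tuple[float, float]]:
    """Convert one speaker's per-frame scores into (start, end) times in seconds.

    Assumes frames are evenly spaced over the chunk, which is an approximation.
    """
    if not scores:
        return []
    frame_step = chunk_duration / len(scores)
    segments: List[Tuple[float, float]] = []
    start = None
    for i, score in enumerate(scores):
        active = score >= threshold
        if active and start is None:
            start = i * frame_step  # speaker becomes active
        elif not active and start is not None:
            segments.append((start, i * frame_step))  # speaker becomes inactive
            start = None
    if start is not None:
        # Speaker is still active when the chunk ends.
        segments.append((start, chunk_duration))
    return segments
```

With the tensors from the usage example, `active_segments(diarization[0, :, 0].tolist())` would give the segments of the first speaker in the first chunk of the batch. Under the even-spacing assumption, each frame covers roughly 5 / 624 ≈ 8 ms.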

Limitations

This model cannot perform speaker diarization and speech separation of full recordings on its own, because it only processes 5s chunks. See the pyannote/speech-separation-ami-1.0 pipeline, which uses an additional speaker embedding model to do that.
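
To make the limitation concrete, the sketch below computes the 5 s windows that a longer recording would have to be cut into. The 50% overlap (`step_samples=40000`) and the helper name `chunk_bounds` are assumptions for illustration and do not describe how the pipeline is actually configured. Note that chunking alone is not enough: the order of speakers in the model output is not guaranteed to be consistent from one chunk to the next, which is the part the pipeline addresses with speaker embeddings.

```python
from typing import List, Tuple


def chunk_bounds(
    num_samples: int,
    chunk_samples: int = 80000,  # 5 s at 16 kHz
    step_samples: int = 40000,   # assumed 50% overlap, not the pipeline's setting
) -> List[Tuple[int, int]]:
    """Return (start, end) sample indices of fixed-size windows covering a recording.

    The last window may extend past `num_samples`; the caller is expected to
    zero-pad that chunk to `chunk_samples` before passing it to the model.
    """
    if num_samples <= 0:
        return []
    bounds: List[Tuple[int, int]] = []
    start = 0
    while True:
        bounds.append((start, start + chunk_samples))
        if start + chunk_samples >= num_samples:
            break  # this window already reaches the end of the recording
        start += step_samples
    return bounds
```

For example, a 12.5 s recording (200000 samples) yields four windows starting at 0, 40000, 80000 and 120000 samples.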

Citations

    @inproceedings{Kalda24,
      author={Joonas Kalda and Clément Pagés and Ricard Marxer and Tanel Alumäe and Hervé Bredin},
      title={{PixIT: Joint Training of Speaker Diarization and Speech Separation from Real-world Multi-speaker Recordings}},
      year=2024,
      booktitle={Proc. Odyssey 2024},
    }

    @inproceedings{Bredin23,
      author={Hervé Bredin},
      title={{pyannote.audio 2.1 speaker diarization pipeline: principle, benchmark, and recipe}},
      year=2023,
      booktitle={Proc. INTERSPEECH 2023},
    }