PDS-DPO-7B-LoRA Model Card

PDS-DPO-7B is a vision-language model built upon LLaVA 1.5 7B and trained using the proposed Preference Data Synthetic Direct Preference Optimization (PDS-DPO) framework. This approach leverages synthetic data generated using generative and reward models as proxies for human preferences to improve alignment, reduce hallucinations, and enhance reasoning capabilities.

Model Details

- Model Name: PDS-DPO-7B-LoRA

- Base Model: LLaVA 1.5 (Vicuna-7B)

- Framework: Preference Data Synthetic Alignment using Direct Preference Optimization (PDS-DPO)

- Dataset: 9K synthetic image-text pairs (positive and negative responses), generated via Stable Diffusion, LLaVA, and scored by reward models like ImageReward and Llama-3-8B-ArmoRM.

- Training Hardware: 2 × A100 GPUs

- Training Optimization: LoRA fine-tuning

Key Features

- Synthetic Data Alignment

- Utilizes generative models and leverages reward models for quality control, filtering the best images and responses to align with human preferences.

- Improved Hallucination Control

- Achieves significant reduction in hallucination rates on benchmarks like Object HalBench, MMHal-Bench, and POPE.

- Competitive Benchmark Performance

- Demonstrates strong results across vision-language tasks like VQAv2, SQA, MM-Vet, and TextVQA.

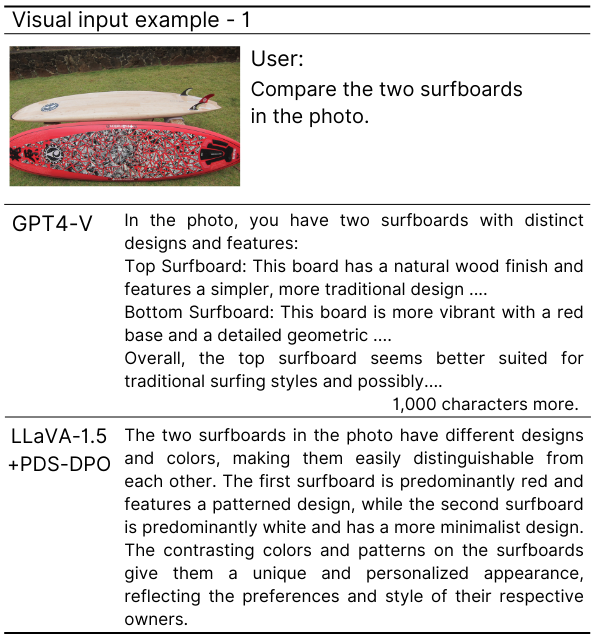

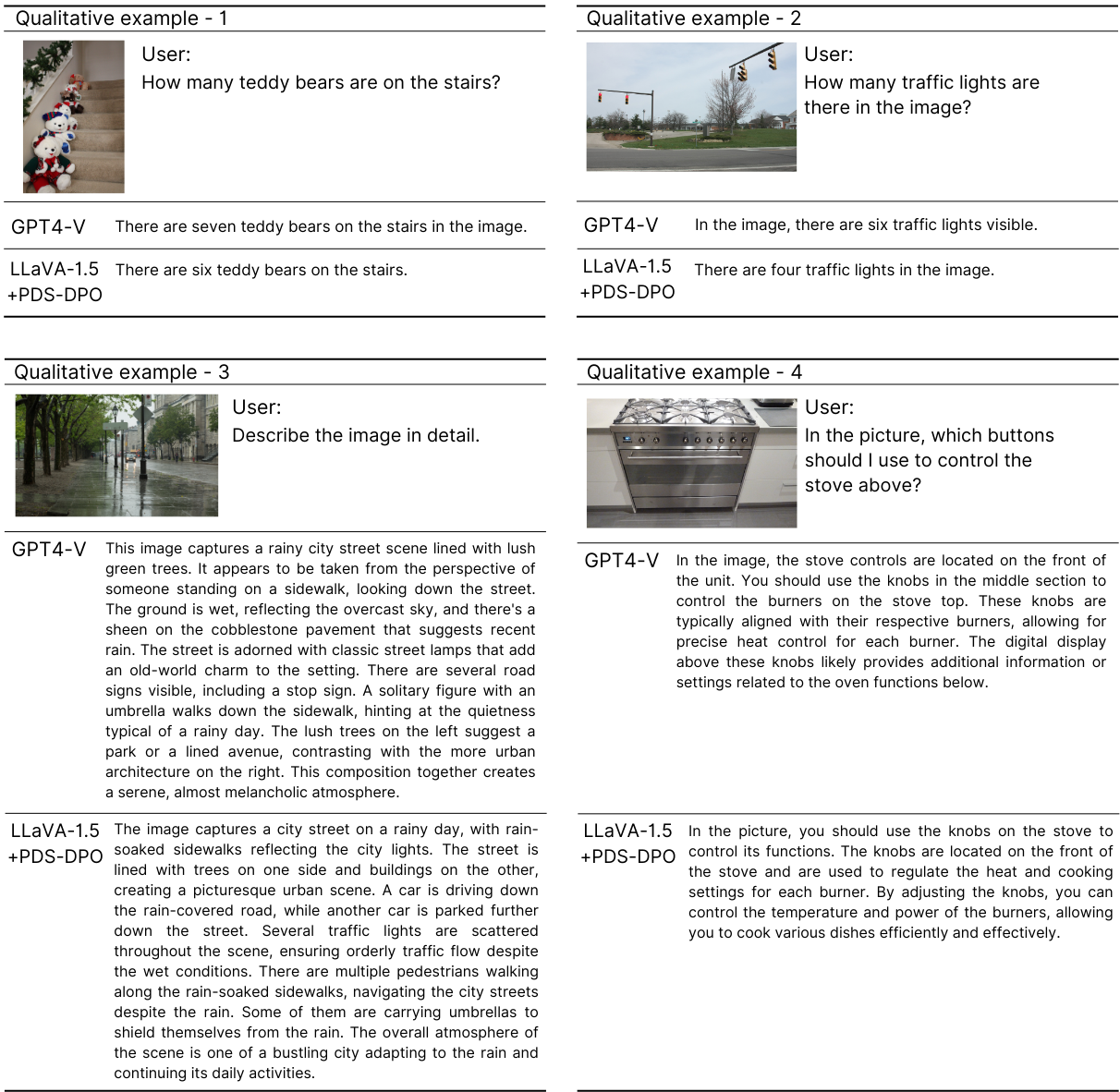

Examples

Citation

@article{wijaya2024multimodal,

title={Multimodal Preference Data Synthetic Alignment with Reward Model},

author={Wijaya, Robert and Nguyen, Ngoc-Bao and Cheung, Ngai-Man},

journal={arXiv preprint arXiv:2412.17417},

year={2024}

}

- Downloads last month

- 2

Inference Providers

NEW

This model isn't deployed by any Inference Provider.

🙋

Ask for provider support