Kyle - The Pinnacle of Robot Walker Ragdoll Training

Kyle is an active ragdoll training environment built in Unity. It is the result of extensive enhancements and optimizations to the foundational codebase of the renowned Ragdoll Trainer. Kyle adds vision-based observations and LSTM (Long Short-Term Memory) networks, which makes it a richer simulation platform for AI training.

In-Depth Model Description

At the heart of Kyle is an active ragdoll training environment, engineered to simulate complex physical interactions realistically. Kyle's core architecture is derived from the original Ragdoll Trainer, which we have heavily modified and refined to improve performance.

The enhancements include:

- Advanced Vision Capabilities: Kyle's sensory inputs include visual processing that lets the agent recognize and interpret visual cues within the training environment.

- Long Short-Term Memory: Kyle uses LSTM networks to carry information from earlier steps forward in time. This is crucial for tasks that require an understanding of sequences and patterns over time.

- Simulation for AI Training: Together, these features provide a rich and complex simulation platform. Models trained in it can navigate and interact with their surroundings adaptively rather than purely reactively.

By fostering a more sophisticated simulation environment, Kyle empowers researchers and developers to train AI models that can perform a diverse array of tasks, from simple locomotion to navigating intricate obstacle courses, all while responding to an ever-changing array of stimuli and challenges.
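The LSTM memory listed above can be made concrete with a minimal, dependency-free sketch of one LSTM cell step. The sketch follows the standard LSTM equations: input, forget, and output gates plus a candidate cell state. It illustrates the technique only. It is not Kyle's actual network, because ML-Agents builds its own LSTM internally when memory is enabled. The weight layout and helper names (`affine`, `zero_params`) are assumptions chosen for readability.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]
GateParams = Tuple[Matrix, Matrix, Vector]  # (W for the input, U for the hidden state, bias b)


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def affine(w: Matrix, u: Matrix, b: Vector, x: Vector, h: Vector) -> Vector:
    """Compute W x + U h + b, one output per hidden unit."""
    return [
        sum(wj * xj for wj, xj in zip(w_row, x))
        + sum(uj * hj for uj, hj in zip(u_row, h))
        + bias
        for w_row, u_row, bias in zip(w, u, b)
    ]


def lstm_step(
    x: Vector, h: Vector, c: Vector, params: Dict[str, GateParams]
) -> Tuple[Vector, Vector]:
    """Run one LSTM step and return the new (hidden state, cell state)."""
    i = [sigmoid(v) for v in affine(*params["i"], x, h)]    # input gate: how much new info to write
    f = [sigmoid(v) for v in affine(*params["f"], x, h)]    # forget gate: how much old memory to keep
    o = [sigmoid(v) for v in affine(*params["o"], x, h)]    # output gate: how much memory to expose
    g = [math.tanh(v) for v in affine(*params["g"], x, h)]  # candidate values to write
    # New memory = kept part of the old memory + gated new information.
    c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
    h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
    return h_new, c_new


def zero_params(input_size: int, hidden_size: int) -> Dict[str, GateParams]:
    """All-zero weights: every gate outputs 0.5 and the candidate is 0."""
    def gate() -> GateParams:
        return (
            [[0.0] * input_size for _ in range(hidden_size)],
            [[0.0] * hidden_size for _ in range(hidden_size)],
            [0.0] * hidden_size,
        )
    return {name: gate() for name in ("i", "f", "o", "g")}


# With all-zero weights the forget gate is 0.5 and nothing new is written,
# so the cell state decays by half on each step.
h, c = lstm_step([1.0, 2.0, 3.0], [0.0, 0.0], [1.0, -1.0], zero_params(3, 2))
print(c)  # [0.5, -0.5]
```

The cell state `c` is what lets a recurrent policy remember earlier observations. The gates decide, per step, how much of that memory to keep, overwrite, or expose.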

Comprehensive Guide on Utilizing Kyle

Kyle is more than a standalone model: it is designed for AI-driven ragdoll physics in games. To use Kyle within the Neko Cat Game, you must integrate the skeletal armature GameObject that accompanies our project. This GameObject is the core of our Ragdoll Trainer and is built to work with Kyle's model.

Step-by-Step Usage Instructions

Incorporating the ONNX Model: Begin by importing the ONNX model file into your Unity project. This file contains Kyle's trained policy.

GameObject Integration: Attach the model to the skeletal armature GameObject found within our project. In ML-Agents, this is typically done by assigning the model to the Model field of the agent's Behavior Parameters component. The armature is the body that the model controls.

Simulation and Training: With the model and GameObject in place, you can train and simulate nuanced ragdoll movements. Kyle's policy uses LSTM memory, so it acts on what it has observed over previous steps rather than only on the current frame. This lets it anticipate changes in the environment instead of merely reacting to them.

Unity Asset for Inference

In the spirit of accessibility and ease of use, we're excited to announce that a Unity asset will soon be available for download. This asset will enable users to implement Kyle's inference capabilities directly within their projects, bypassing the complexities of the development setup.

Future Developments

While training Kyle currently necessitates a basic development setup involving ML-Agents and a Python environment, we're diligently working on streamlining this process. Our vision is to introduce an automated feature within the game that will eliminate the need for manual setup of ML-Agents and the Python environment. This upcoming feature will empower users to train Kyle with the simplicity of a few clicks, making advanced AI training accessible to all.

Stay tuned for these exciting updates, as we continue to refine and enhance the user experience, making it as intuitive and user-friendly as possible.

Training Data: A Foundation Built on Diversity and Complexity

Kyle's training reflects the diversity and complexity of the experience it was exposed to. Kyle was trained across a wide array of ragdoll movements, each combined with visual inputs and memory components. This breadth helps Kyle simulate realistic scenarios and predict physical interactions in a dynamic environment.

The current iteration of Kyle was trained over a sequence of 20 million steps. As we continue to develop our training scenes, we plan to release additional sequences. These will serve as building blocks that can be combined to create new models, each specializing in a different application. Examples range from the delicate balance required for acrobatics to the precision needed for sorting tasks.

Evaluation Results: Demonstrating Mastery Over Movement

Kyle's ability to simulate lifelike ragdoll physics has undergone extensive testing, and the results below show it reproducing the nuances of movement. Vision and memory-based learning allow Kyle to anticipate and adapt to its surroundings rather than only react to them.

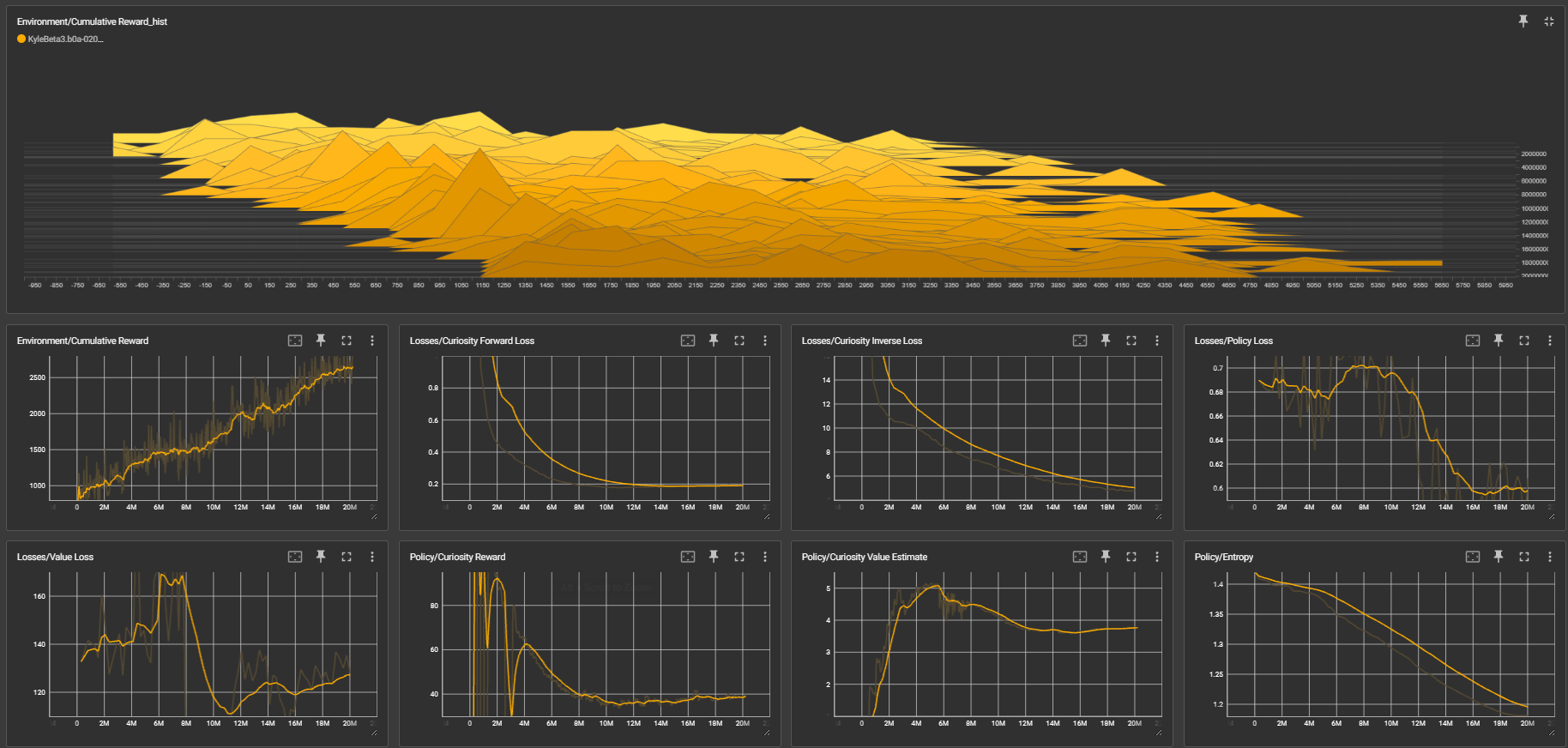

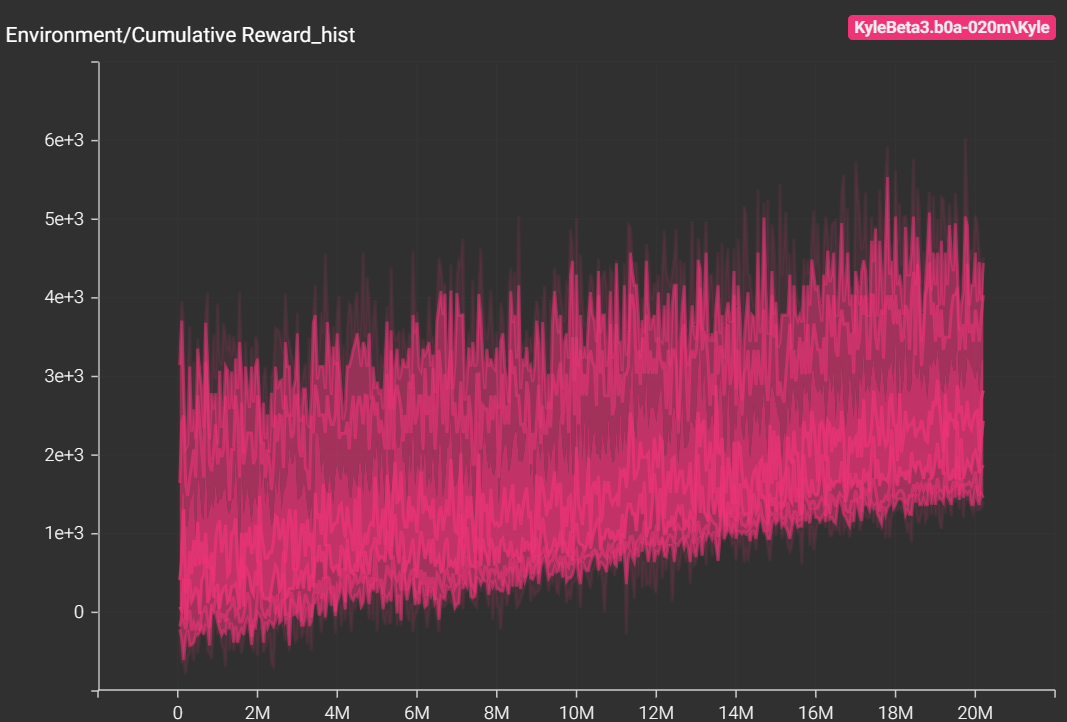

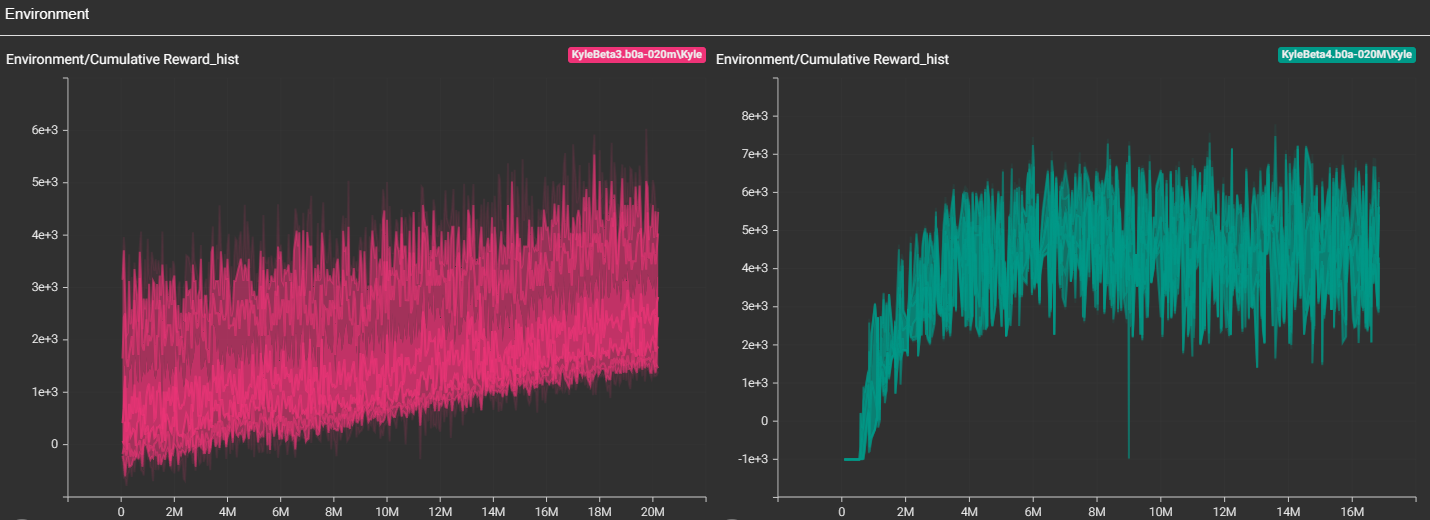

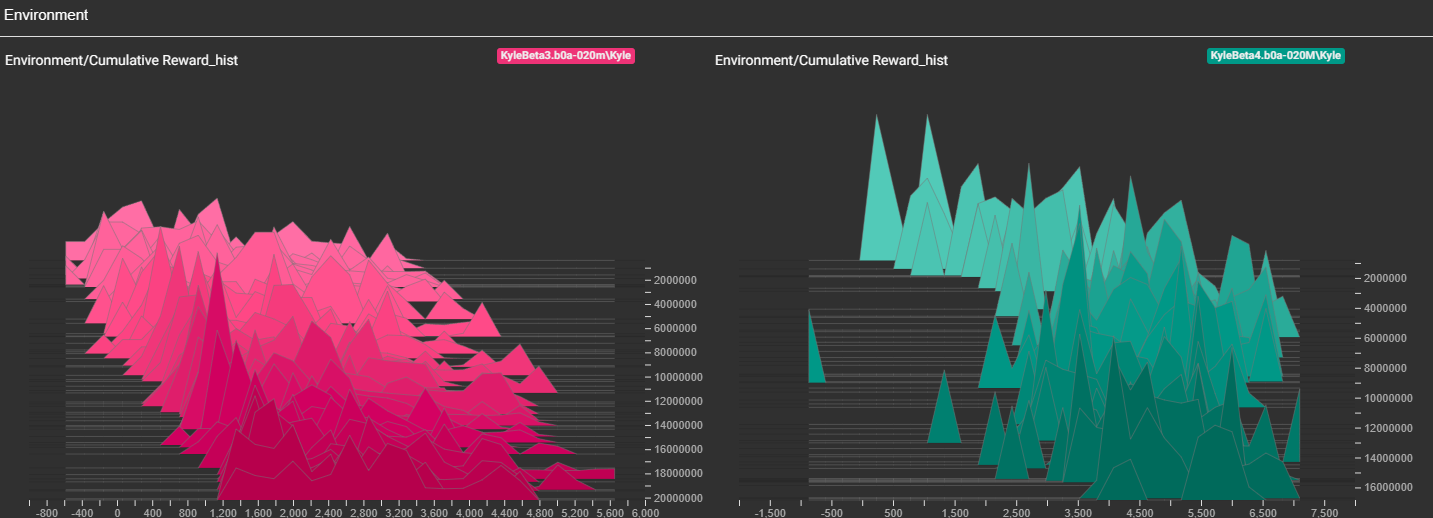

TensorBoard Results: Visual Testaments to Progress

The TensorBoard visualizations offer a window into Kyle's training journey and the strides it has made:

Figure 1: A graph depicting the training progress over time, illustrating a consistent reduction in loss and a corresponding improvement in accuracy.

Figure 2: Validation results that shine a light on the model's ability to generalize, indicating robust performance on unseen data.

Figure 3: A comparative analysis that lays bare the model's predictions against the ground truth, underscoring the precision of Kyle's learning process.

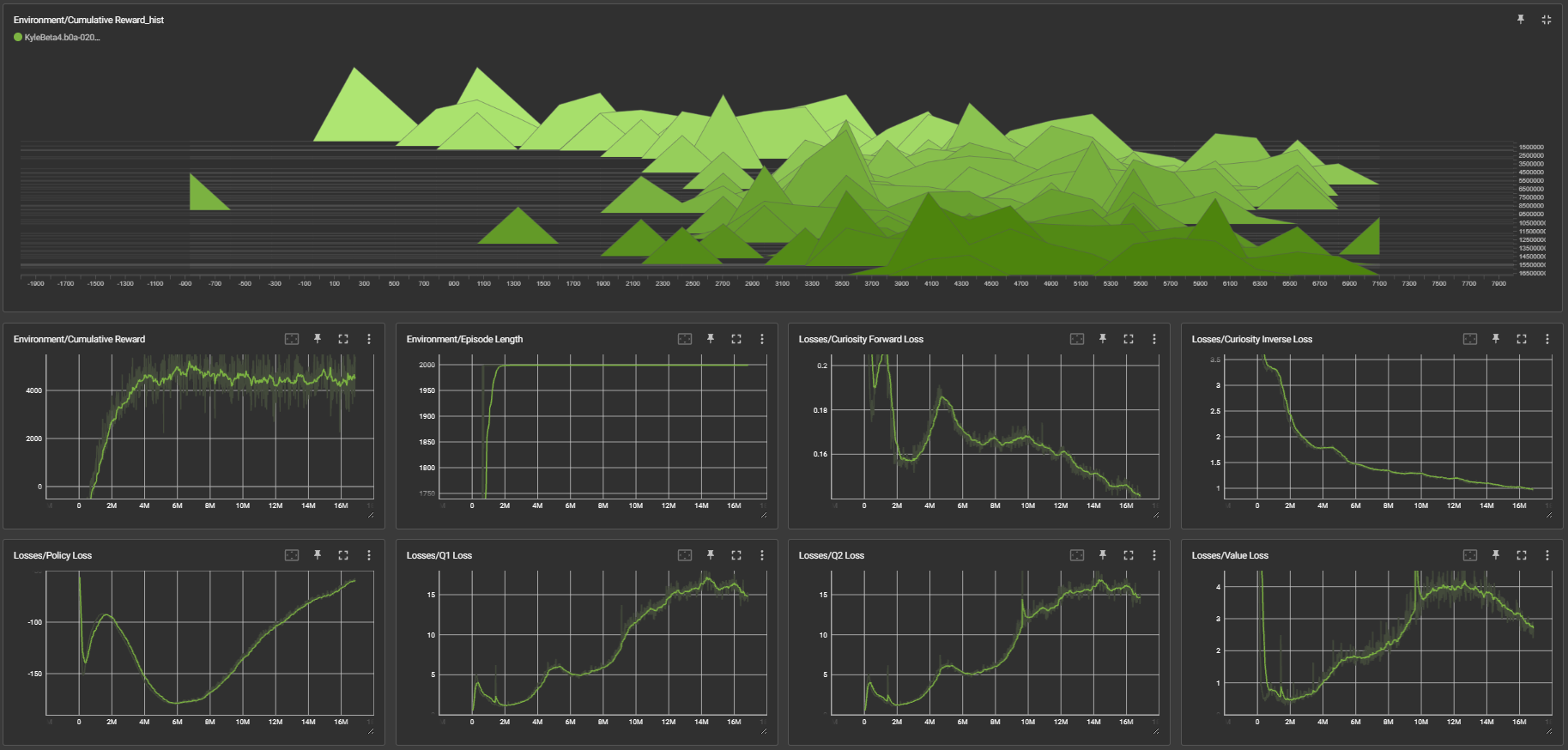

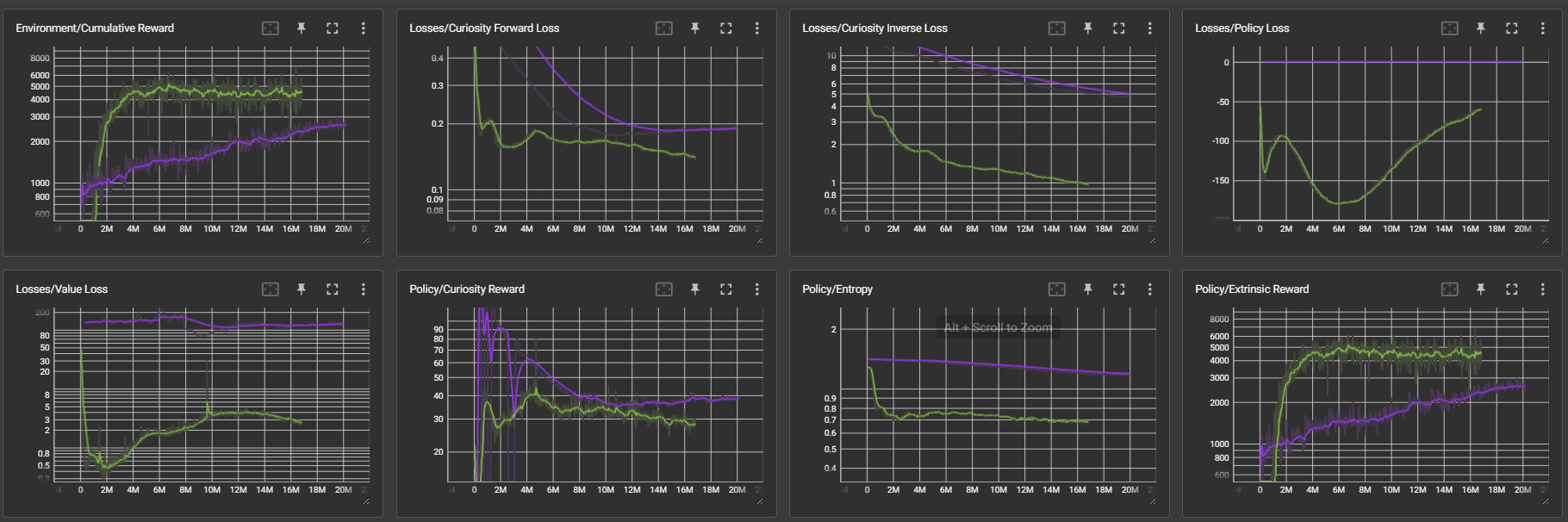

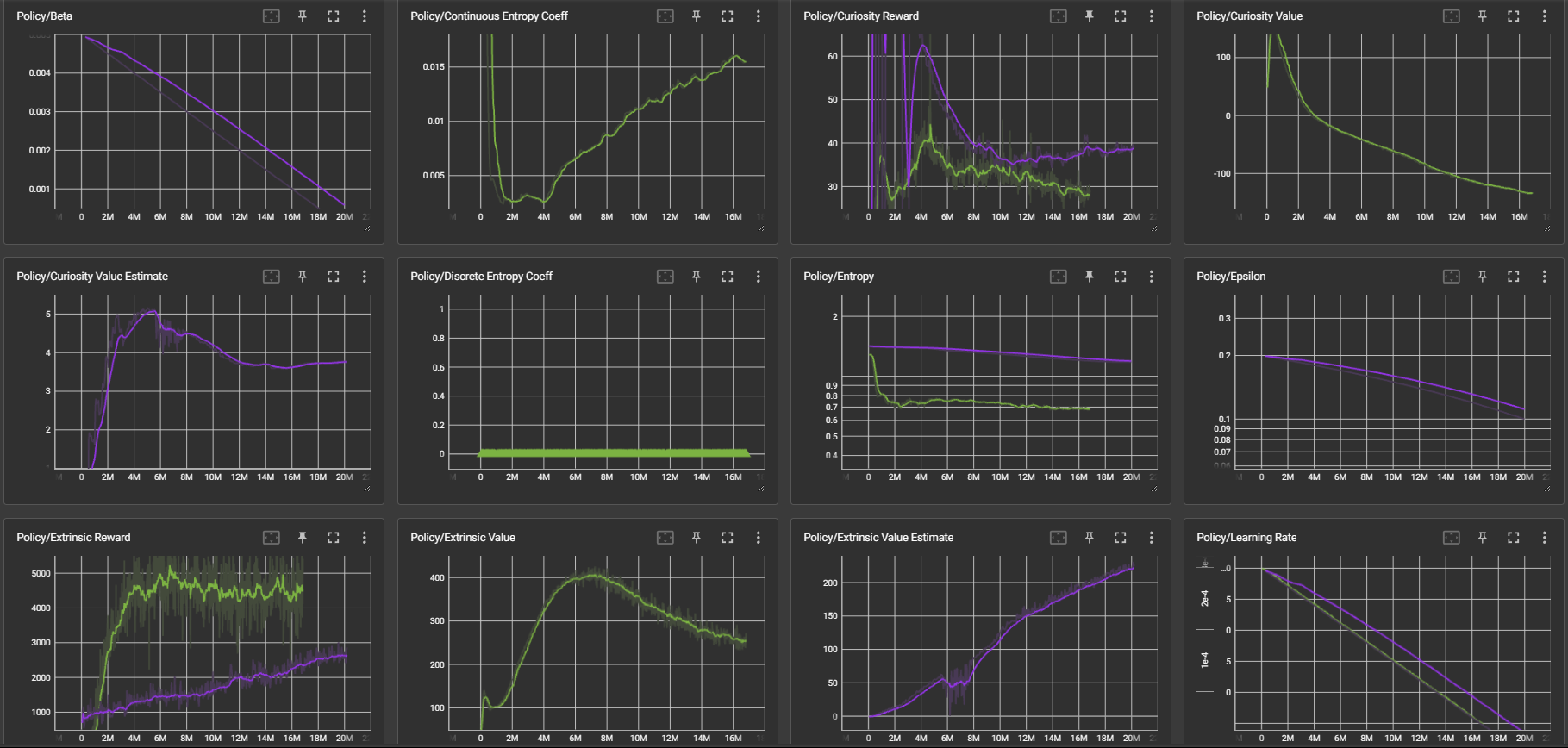

We recently switched to the SAC (Soft Actor-Critic) training algorithm, which has yielded much better performance. The TensorBoard charts below reflect Kyle's training with SAC over a sequence of 20 million steps. We also set the training buffer to 1 million steps; this is the replay buffer from which the SAC agent learns.

Figure 4: A graph depicting the training progress over time, illustrating a consistent reduction in loss and a corresponding improvement in accuracy.

Figure 5: Validation results that shine a light on the model's ability to generalize, indicating robust performance on unseen data.

Figure 6: A comparative analysis that lays bare the model's predictions against the ground truth, underscoring the precision of Kyle's learning process.

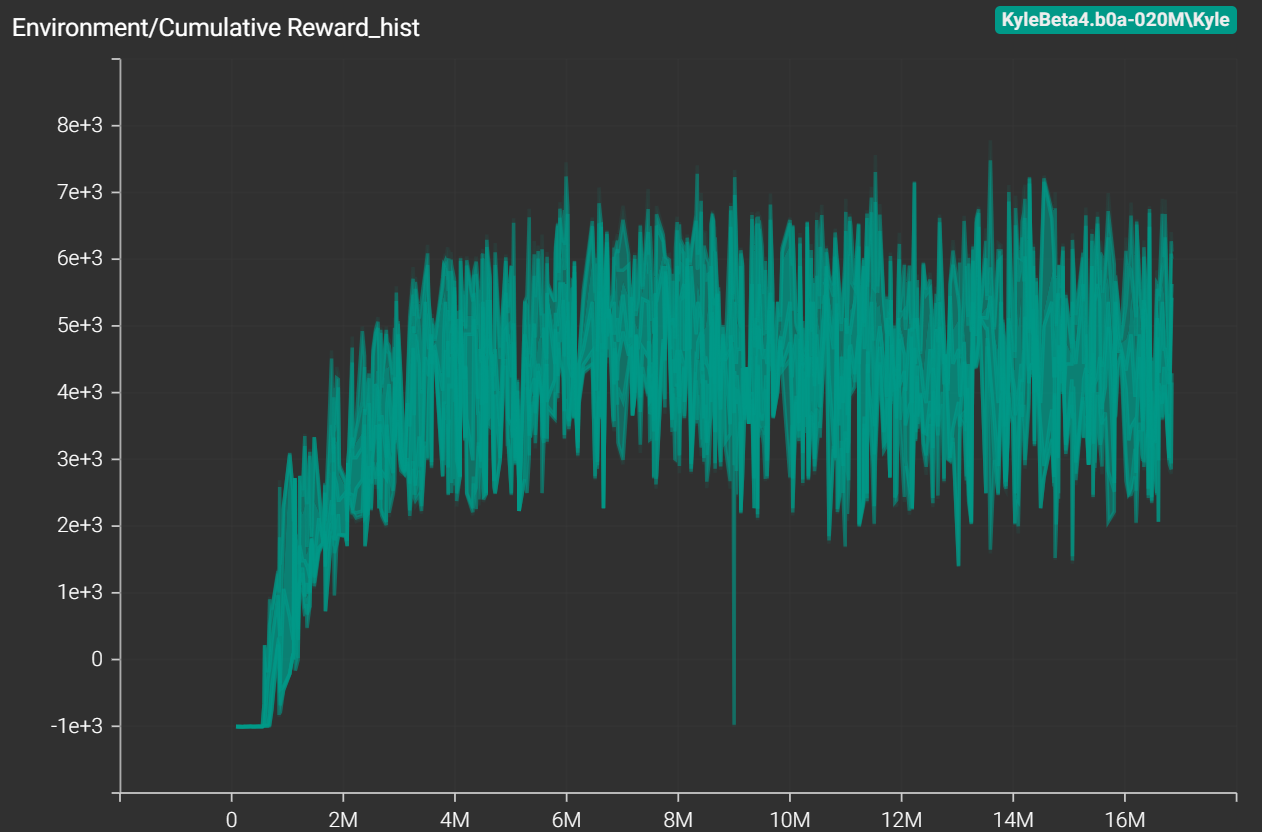
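The SAC run described above can be expressed as an ML-Agents trainer configuration. The sketch below builds that configuration as a Python dictionary and prints it as YAML for `mlagents-learn`. Only three values come from this document: `trainer_type: sac`, the 1,000,000-step `buffer_size`, and the 20,000,000 `max_steps`. The behavior name `Kyle` and the `memory` values are hypothetical placeholders, not Kyle's actual settings. Every other hyperparameter is left at the ML-Agents defaults.

```python
from typing import Any, Dict, List

# Hypothetical behavior name: it must match the Behavior Name set on the
# agent's Behavior Parameters component in Unity.
BEHAVIOR_NAME = "Kyle"

config: Dict[str, Any] = {
    "behaviors": {
        BEHAVIOR_NAME: {
            "trainer_type": "sac",                # from this document
            "hyperparameters": {
                "buffer_size": 1_000_000,         # replay buffer size, from this document
            },
            "network_settings": {
                # Placeholder LSTM settings, NOT the values used to train Kyle.
                "memory": {"memory_size": 128, "sequence_length": 64},
            },
            "max_steps": 20_000_000,              # training length, from this document
        }
    }
}


def to_yaml_lines(node: Dict[str, Any], indent: int = 0) -> List[str]:
    """Serialize nested dicts of scalars to block-style YAML (no lists, no string quoting)."""
    lines: List[str] = []
    pad = "  " * indent
    for key, value in node.items():
        if isinstance(value, dict):
            lines.append(f"{pad}{key}:")
            lines.extend(to_yaml_lines(value, indent + 1))
        elif isinstance(value, bool):
            lines.append(f"{pad}{key}: {str(value).lower()}")
        else:
            lines.append(f"{pad}{key}: {value}")
    return lines


if __name__ == "__main__":
    print("\n".join(to_yaml_lines(config)))
```

You can save the printed output under a name of your choosing, such as a hypothetical `kyle_sac.yaml`, and pass it to `mlagents-learn`. The serializer is deliberately minimal; a YAML library is the better choice once the config grows lists or strings that need quoting.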

The following is a comparative analysis of PPO versus SAC over a training sequence of 20 million steps. Both runs used the same hardware and software configurations.

Figure 7: A graph depicting the training progress over time, illustrating a consistent reduction in loss and a corresponding improvement in accuracy.

Figure 8: A graph depicting the training progress over time, illustrating a consistent reduction in loss and a corresponding improvement in accuracy.
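The main structural difference between the two algorithms compared above is the 1-million-step training buffer. PPO is on-policy and discards its collected experience after each update. SAC is off-policy and keeps sampling from a replay buffer of past transitions.

The sketch below is a generic fixed-capacity replay buffer with uniform sampling, written only to illustrate that idea. It is not ML-Agents' internal implementation, and the `Transition` fields are assumptions.

```python
import random
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class Transition:
    """One environment step (hypothetical field layout)."""
    observation: Sequence[float]
    action: Sequence[float]
    reward: float
    next_observation: Sequence[float]
    done: bool


class ReplayBuffer:
    """Fixed-capacity ring buffer: once full, the oldest transition is overwritten."""

    def __init__(self, capacity: int, seed: int = 0) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._capacity = capacity
        self._storage: List[Transition] = []
        self._next = 0  # index of the oldest slot once the buffer is full
        self._rng = random.Random(seed)

    def __len__(self) -> int:
        return len(self._storage)

    def add(self, transition: Transition) -> None:
        if len(self._storage) < self._capacity:
            self._storage.append(transition)
        else:
            self._storage[self._next] = transition  # evict the oldest transition
        self._next = (self._next + 1) % self._capacity

    def sample(self, batch_size: int) -> List[Transition]:
        """Uniformly sample a batch (without replacement) for one gradient update."""
        return self._rng.sample(self._storage, batch_size)
```

With `capacity=1_000_000` this corresponds to the buffer size quoted for Kyle's SAC run. The list-based ring keeps both insertion and sampling cheap even when the buffer is full, because nothing is copied or shifted.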

The Kyle Model: A Base for Customization

At its core, Kyle is a base biped model designed for direct customization to fit the unique requirements of your project. Whether you aim to fine-tune Kyle for a fighting simulation, choreograph a dance of acrobatics, or develop a system for sorting objects, the model's adaptable nature makes it an ideal starting point. The potential applications are as varied as they are exciting, and we eagerly anticipate the innovative ways in which Kyle will be utilized.

Ethical Considerations: Commitment to Responsible AI

In the development of Kyle, we have steadfastly upheld the principles of ethical AI. Our commitment to creating models that embody fairness, accountability, and transparency is unwavering. We recognize the profound impact AI can have on society, and we strive to ensure that our models contribute positively, enhancing the lives of those they touch.

- Fairness: We have taken rigorous steps to prevent biases in our model, ensuring that Kyle's learning and outputs are equitable and just.

- Accountability: Our development process is meticulously documented, providing clear traceability and fostering a culture of responsibility.

- Transparency: We believe in the open exchange of ideas and methodologies, which is why we are transparent about our development practices and the capabilities of our models.

Acknowledgements: A Tribute to Collaborative Innovation

We owe a debt of gratitude to the visionaries behind the original Ragdoll Trainer project. Their pioneering work laid the groundwork for what Kyle has become today. By building upon their innovative approach to active ragdoll training, we have been able to push the boundaries and achieve new heights.

- Foundational Work: The original Ragdoll Trainer's codebase served as the foundation upon which we built and optimized Kyle.

- Based On: Original project work and experiments found at https://github.com/kressdev/RagdollTrainer

- Collaborative Spirit: We celebrate the collaborative spirit of the AI and gaming communities, which has been instrumental in our project's success.

Additional Information: The Pillars of Kyle's Development

The development of Kyle is characterized by a blend of cutting-edge technology and meticulous craftsmanship.

Author: p3nGu1nZz

Github: https://github.com/cat-game-research/Neko/tree/main/RagdollTrainer

Technological Stack:

- Unity Version: 2023.2.7f1 - The canvas upon which Kyle's world comes to life.

- ONNX Version: 1.12.0 - Ensuring interoperability and state-of-the-art performance.

- ML-Agents Version: 3.0.0-exp.1 - The backbone of Kyle's learning capabilities.

- ML-Agents Extensions Version: 0.6.1-preview - Extending functionality and pushing the envelope of what's possible.

- Sentis Version: 1.3.0-pre.2 - Unity's neural network inference library, which runs Kyle's ONNX model inside Unity so it can perceive and interact with its environment.

Features:

- Enhanced Rig: Crafted in Blender, this rig provides Kyle with fluid and natural movement.

- Heuristic Function: A tool for joint control that was pivotal during development testing.

- Stabilizers: Implemented for hips and spine, these stabilizers are key to Kyle's balance.

- Training Settings: Early training settings were crucial for establishing initial balance and target-oriented movement.

- Obstacle Navigation: Using Ray Perception Sensor 3D, Kyle can adeptly navigate around obstacles.

- Step and Stair Training: Kyle has been trained to navigate steps and stairs, adjusting to varying levels of difficulty.
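The Ray Perception Sensor 3D mentioned above casts a fan of rays around the agent. The sketch below reproduces in Python how ML-Agents lays out those rays and sizes their observations:

- One center ray, plus `raysPerDirection` rays on each side spread evenly out to `maxRayDegrees`.
- (Num Detectable Tags + 2) values per ray.
- That total multiplied by the number of stacked observations.

The function names are hypothetical, and the parameter values in the check are placeholders, not Kyle's actual sensor settings.

```python
from typing import List


def ray_angles(rays_per_direction: int, max_ray_degrees: float) -> List[float]:
    """Ray angles in degrees; 90 is straight ahead in the sensor's convention.

    Order: the center ray first, then pairs on either side at increasing offsets.
    """
    if rays_per_direction < 0:
        raise ValueError("rays_per_direction must be >= 0")
    angles = [90.0]
    if rays_per_direction == 0:
        return angles
    delta = max_ray_degrees / rays_per_direction  # even angular spacing per side
    for i in range(1, rays_per_direction + 1):
        angles.append(90.0 - i * delta)
        angles.append(90.0 + i * delta)
    return angles


def ray_observation_size(
    rays_per_direction: int, num_detectable_tags: int, stacks: int = 1
) -> int:
    """Floats produced per decision: stacks * rays * (tags + 2).

    Per ray: a one-hot over the detectable tags, a hit/miss flag,
    and a normalized hit distance.
    """
    num_rays = 2 * rays_per_direction + 1
    return stacks * num_rays * (num_detectable_tags + 2)


if __name__ == "__main__":
    print(ray_angles(2, 60.0))        # center ray plus two pairs 30 degrees apart
    print(ray_observation_size(2, 2)) # 5 rays * (2 tags + 2)
```

This arithmetic matters when tuning obstacle navigation, since every extra ray or tag grows the observation vector the policy must learn from.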

Setup Process:

- Local Miniconda: The starting point for setting up the development environment.

- Cloning ML-Agents: Integrating the latest advancements from ML-Agents into Kyle.

- Script Configuration: Tailoring scripts to fine-tune models based on Kyle's unique requirements.
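Once the Miniconda environment and ML-Agents are installed, training is started with the `mlagents-learn` command-line tool. The helper below is a small sketch that assembles that command as an argument list. The flags `--run-id`, `--env`, `--num-envs`, `--no-graphics`, `--resume`, and `--force` are standard `mlagents-learn` options. The function names, config path, and run IDs in the example are hypothetical.

```python
import subprocess
from typing import List, Optional


def build_train_command(
    config_path: str,
    run_id: str,
    env_path: Optional[str] = None,
    num_envs: int = 1,
    no_graphics: bool = False,
    resume: bool = False,
    force: bool = False,
) -> List[str]:
    """Assemble an mlagents-learn invocation (hypothetical helper)."""
    cmd = ["mlagents-learn", config_path, f"--run-id={run_id}"]
    if env_path is not None:
        # Train against a built player instead of pressing Play in the Editor;
        # running several instances in parallel requires a built player.
        cmd.append(f"--env={env_path}")
        if num_envs > 1:
            cmd.append(f"--num-envs={num_envs}")
    if no_graphics:
        cmd.append("--no-graphics")
    if resume:
        cmd.append("--resume")  # continue an existing run with the same run ID
    if force:
        cmd.append("--force")   # overwrite an existing run with the same run ID
    return cmd


def launch(cmd: List[str]) -> int:
    """Run the command from the activated conda environment; returns the exit code."""
    return subprocess.run(cmd, check=False).returncode
```

Without `env_path`, `mlagents-learn` waits for you to press Play in the Unity Editor, which is the simplest way to start while iterating on scripts.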

Each element of Kyle's development has been carefully considered and implemented to ensure that the model not only meets but exceeds the expectations of those who use it.

@misc{RagdollTrainer,

author = {cat-game-research},

title = {RagdollTrainer - The Evolution of Active Ragdoll Simulation in Unity},

year = {2024},

publisher = {GitHub},

journal = {GitHub repository},

howpublished = {\\url{https://github.com/cat-game-research/Neko/tree/main/RagdollTrainer}}

}