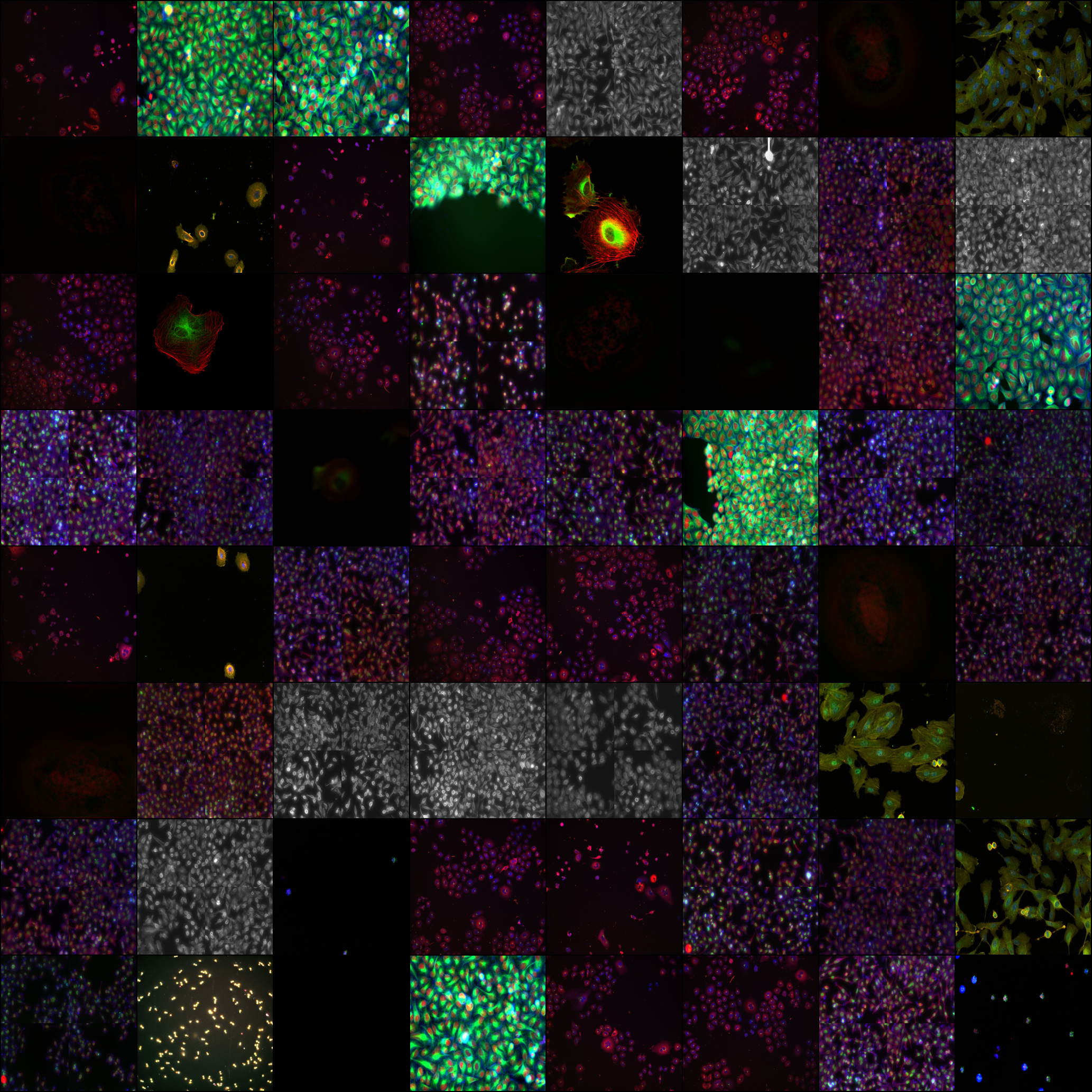

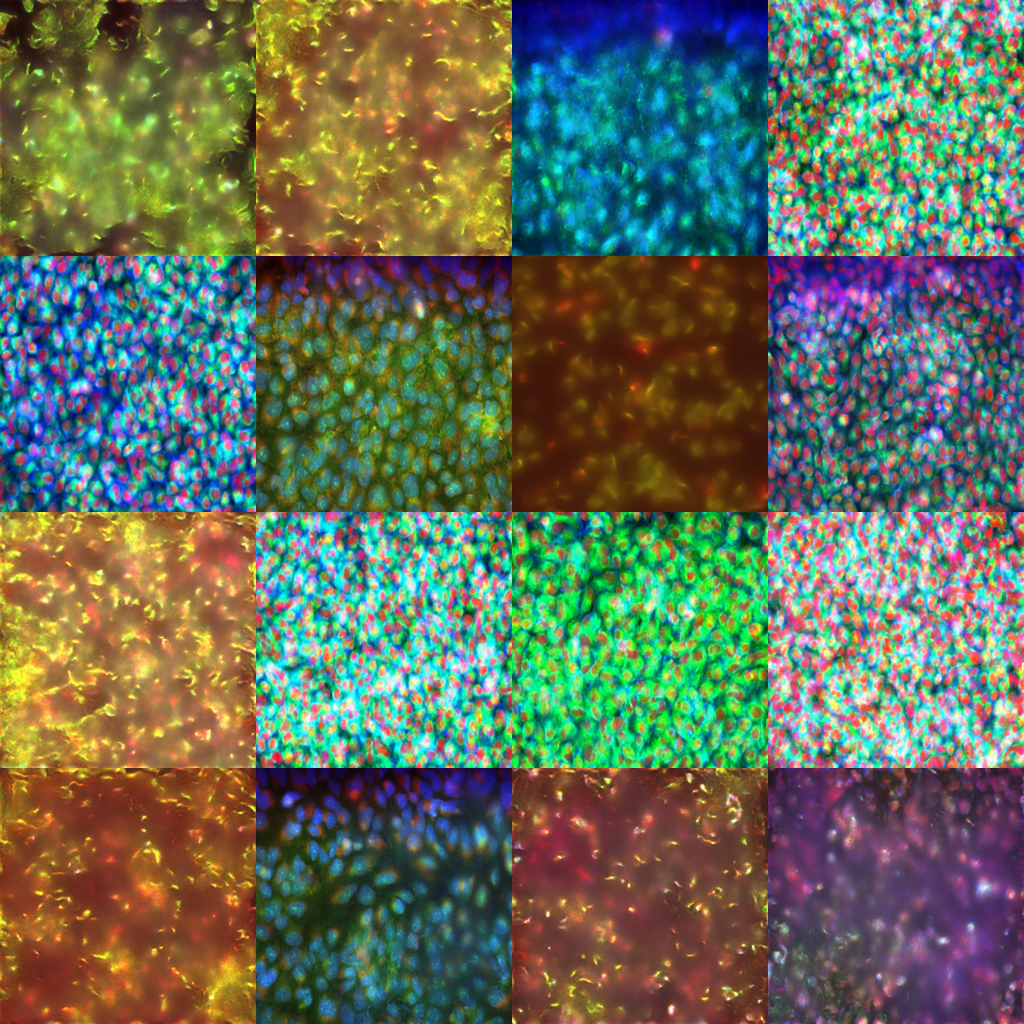

A diffusion model trained on a public dataset from the Image Data Resource (IDR) to create highly detailed, accurate depictions of fluorescence and super-resolution cell images.

Ground-truth image data were obtained from IDR.

```python
from diffusers import DDIMPipeline, DDPMPipeline, PNDMPipeline

model_id = "nakajimayoshi/ddpm-iris-256"

# load model and scheduler
# DDPMPipeline or PNDMPipeline can be used in place of DDIMPipeline;
# DDIM and PNDM need fewer denoising steps than DDPM, so they sample faster
ddim = DDIMPipeline.from_pretrained(model_id)

# run pipeline in inference (sample random noise and denoise)
image = ddim().images[0]

# save image
image.save("sample.png")
```
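DDIM can sample faster than DDPM because its update is deterministic (with `eta = 0`) and can jump between non-adjacent timesteps. The minimal sketch below shows a single such update on scalar values, assuming an ε-prediction model. The helper `ddim_step` and the toy numbers are hypothetical and are not part of the diffusers API.

```python
import math


def ddim_step(x_t: float, eps_pred: float, alpha_bar_t: float, alpha_bar_prev: float) -> float:
    """One deterministic DDIM update (eta = 0); hypothetical helper for illustration.

    alpha_bar_t and alpha_bar_prev are the cumulative products of (1 - beta) at the
    current timestep and at the (possibly much earlier) target timestep.
    """
    # Estimate the clean sample x_0 from the noisy sample and the predicted noise.
    x0_pred = (x_t - math.sqrt(1.0 - alpha_bar_t) * eps_pred) / math.sqrt(alpha_bar_t)
    # Re-noise that estimate to the target noise level, reusing the same noise direction.
    return math.sqrt(alpha_bar_prev) * x0_pred + math.sqrt(1.0 - alpha_bar_prev) * eps_pred


# Toy check: build x_t from a known x_0 and noise, then jump straight to t = 0.
x0, eps, alpha_bar_t = 0.5, 0.3, 0.25
x_t = math.sqrt(alpha_bar_t) * x0 + math.sqrt(1.0 - alpha_bar_t) * eps
print(ddim_step(x_t, eps, alpha_bar_t, alpha_bar_prev=1.0))  # recovers x0 up to rounding
```

In the real pipeline, `eps_pred` comes from the trained UNet and the scheduler chooses which timesteps to jump between; `DDIMPipeline.__call__` exposes this through its `num_inference_steps` argument (default 50).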

The role of generative AI in science is still under discussion, and its merits have yet to be evaluated. Although current image-to-image and text-to-image models make it easier than ever to create striking images, they lack the specialized training data needed to reproduce the accurate, detailed images found in fluorescence cell microscopy. We propose ddpm-IRIS, a diffusion network that leverages the denoising diffusion probabilistic model (DDPM) to generate visual depictions of cell features in more detail than general-purpose image-generation models.
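A DDPM is trained to reverse a fixed forward process that gradually adds Gaussian noise to an image, $x_t = \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon$. The sketch below computes the cumulative schedule $\bar\alpha_t$ and noises a few pixel values. It assumes the linear β schedule from 1e-4 to 0.02 over 1000 steps, which is the default of diffusers' `DDPMScheduler`. The schedule actually used to train ddpm-IRIS is not stated in this card, and the helper names are hypothetical.

```python
import math
import random


def linear_alpha_bars(num_steps: int = 1000, beta_start: float = 1e-4, beta_end: float = 0.02) -> list:
    """alpha_bar_t = prod_{s<=t} (1 - beta_s) for an evenly spaced (linear) beta schedule."""
    alpha_bars = []
    running = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0: list, t: int, alpha_bars: list, rng: random.Random) -> tuple:
    """Sample x_t ~ q(x_t | x_0); returns the noisy values and the noise the model must predict."""
    a = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    x_t = [math.sqrt(a) * x + math.sqrt(1.0 - a) * n for x, n in zip(x0, noise)]
    return x_t, noise


alpha_bars = linear_alpha_bars()
rng = random.Random(0)
pixels = [-1.0, 0.0, 0.5, 1.0]  # toy pixel values scaled to [-1, 1]
for t in (0, 250, 999):
    noisy, _ = add_noise(pixels, t, alpha_bars, rng)
    print(t, round(alpha_bars[t], 5), [round(v, 3) for v in noisy])
```

During training the UNet receives `x_t` and `t` and is optimized to predict `noise` with a mean-squared-error loss, which is the standard DDPM objective. By the last step $\bar\alpha_t$ is close to zero, so $x_t$ is almost pure noise, which is where sampling starts.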

Hyperparameters:

- image_size = 256

- train_batch_size = 16

- eval_batch_size = 16

- num_epochs = 50

- gradient_accumulation_steps = 1

- learning_rate = 1e-4

- lr_warmup_steps = 500

- save_image_epochs = 10

- save_model_epochs = 30

- mixed_precision = 'fp16'
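These hyperparameters appear to match the fields of the `TrainingConfig` dataclass in the diffusers unconditional image-generation training tutorial (with `image_size` raised to 256), so the run can plausibly be reconstructed from that recipe. The sketch below is an assumption, not the authors' script. It records the values in a dataclass, reproduces the linear-warmup-then-cosine learning-rate schedule that the tutorial builds with `get_cosine_schedule_with_warmup`, and lists the epochs at which sample grids and checkpoints would be saved. The dataset size `num_images` is hypothetical because the card does not state it.

```python
import math
from dataclasses import dataclass


@dataclass
class TrainingConfig:
    # Values copied from the hyperparameter list above.
    image_size: int = 256
    train_batch_size: int = 16
    eval_batch_size: int = 16
    num_epochs: int = 50
    gradient_accumulation_steps: int = 1
    learning_rate: float = 1e-4
    lr_warmup_steps: int = 500
    save_image_epochs: int = 10
    save_model_epochs: int = 30
    mixed_precision: str = "fp16"


def total_training_steps(cfg: TrainingConfig, num_images: int) -> int:
    """Batches per epoch times epochs; num_images is a hypothetical dataset size."""
    return math.ceil(num_images / cfg.train_batch_size) * cfg.num_epochs


def lr_at_step(cfg: TrainingConfig, step: int, total_steps: int) -> float:
    """Linear warmup to learning_rate, then half-cosine decay to 0."""
    if step < cfg.lr_warmup_steps:
        return cfg.learning_rate * step / max(1, cfg.lr_warmup_steps)
    progress = (step - cfg.lr_warmup_steps) / max(1, total_steps - cfg.lr_warmup_steps)
    return cfg.learning_rate * max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))


def save_epochs(every: int, num_epochs: int) -> list:
    """1-based epochs at which a save fires: every `every` epochs, plus the final epoch."""
    return [e for e in range(1, num_epochs + 1) if e % every == 0 or e == num_epochs]


cfg = TrainingConfig()
total = total_training_steps(cfg, num_images=1000)  # hypothetical dataset size
print(total, lr_at_step(cfg, 250, total), lr_at_step(cfg, 500, total))
print(save_epochs(cfg.save_image_epochs, cfg.num_epochs))  # [10, 20, 30, 40, 50]
print(save_epochs(cfg.save_model_epochs, cfg.num_epochs))  # [30, 50]
```

In the tutorial, `mixed_precision` and `gradient_accumulation_steps` are passed to Hugging Face Accelerate's `Accelerator`, which handles fp16 autocasting and gradient accumulation during the training loop.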

Trained on one NVIDIA A100 40GB GPU for 50 epochs (about 2.5 hours).
