GenshinCLIP

A simple open-sourced SigLIP model further fine-tuned on 15k Genshin Impact (Game Version 5.0) English text-image pairs at resolution 384x384.

Visit the github for case study and data pair examples.

The model is far from being perfect, but could still offer some better text-image matching performance in some Genshin Impact scenarios.

- Deprecated Models (Trained on Genshin Impact Version 4.x)

| Model | Checkpoint Size | Val Loss |

|---|---|---|

| GenshinImpact-CLIP-ViT-B-16-laion2B-s34B-b88K | 0.59 GB | 1.152 |

| GenshinImpact-ViT-SO400M-14-SigLIP-384 | 3.51 GB | 0.362 |

Intended uses & limitations

You can use the raw model for tasks like zero-shot image classification and image-text retrieval.

How to use (With OpenCLIP)

Here is how to use this model to perform zero-shot image classification:

import torch

import torch.nn.functional as F

from PIL import Image

import requests

from open_clip import create_model_from_pretrained, get_tokenizer

def preprocess_text(string):

return "Genshin Impact\n" + string

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# load checkpoint from local path

# model_path = "path/to/open_clip_pytorch_model.bin"

# model_name = "ViT-SO400M-14-SigLIP-384"

# model, preprocess = create_model_from_pretrained(model_name=model_name, pretrained=model_path, device=device)

# tokenizer = get_tokenizer(model_name)

# or load from hub

model, preprocess = create_model_from_pretrained('hf-hub:mrzjy/GenshinImpact-5.0-ViT-SO400M-14-SigLIP-384')

tokenizer = get_tokenizer('hf-hub:mrzjy/GenshinImpact-5.0-ViT-SO400M-14-SigLIP-384')

# image

image_url = "https://static.wikia.nocookie.net/gensin-impact/images/3/33/Qingce_Village.png"

image = Image.open(requests.get(image_url, stream=True).raw)

image = preprocess(image).unsqueeze(0).to(device)

# text choices

labels = [

"This is an area of Liyue",

"This is an area of Mondstadt",

"This is an area of Sumeru",

"This is Qingce Village"

]

labels = [preprocess_text(l) for l in labels]

text = tokenizer(labels, context_length=model.context_length).to(device)

with torch.autocast(device_type=device.type):

with torch.no_grad():

image_features = model.encode_image(image)

text_features = model.encode_text(text)

image_features = F.normalize(image_features, dim=-1)

image_features = F.normalize(image_features, dim=-1)

text_features = F.normalize(text_features, dim=-1)

text_probs = torch.sigmoid(image_features @ text_features.T * model.logit_scale.exp() + model.logit_bias)

scores = [f"{s:.3f}" for i, s in enumerate(text_probs.tolist()[0])]

print(scores)

Model Card

SigLIP for GenshinImpact

SigLIP model further fine-tuned on 15k Genshin Impact English text-image pairs at resolution 384x384.

(Note: A slight error occurred in counting training samples for deprecated models. The latest training data should actually include 3,500 additional samples compared to previous counts.)

Training data description

There're currently 14,573 (train) and 298 (validation) text-image pairs used for model training.

All the images and texts are crawled from Genshin Fandom Wiki and are manually parsed to form text-image pairs.

Image Processing:

- Size: Resize all images to 384x384 pixels to match the original model training settings.

- Format: Accept images in PNG or GIF format. For GIFs, extract a random frame to create a static image for text-image pairs.

Text Processing:

- Source: Text can be from the simple caption attribute of an HTML

<img>tag or specified web content. - Format: Prepend all texts with "Genshin Impact" along with some simple template to form natural language sentences.

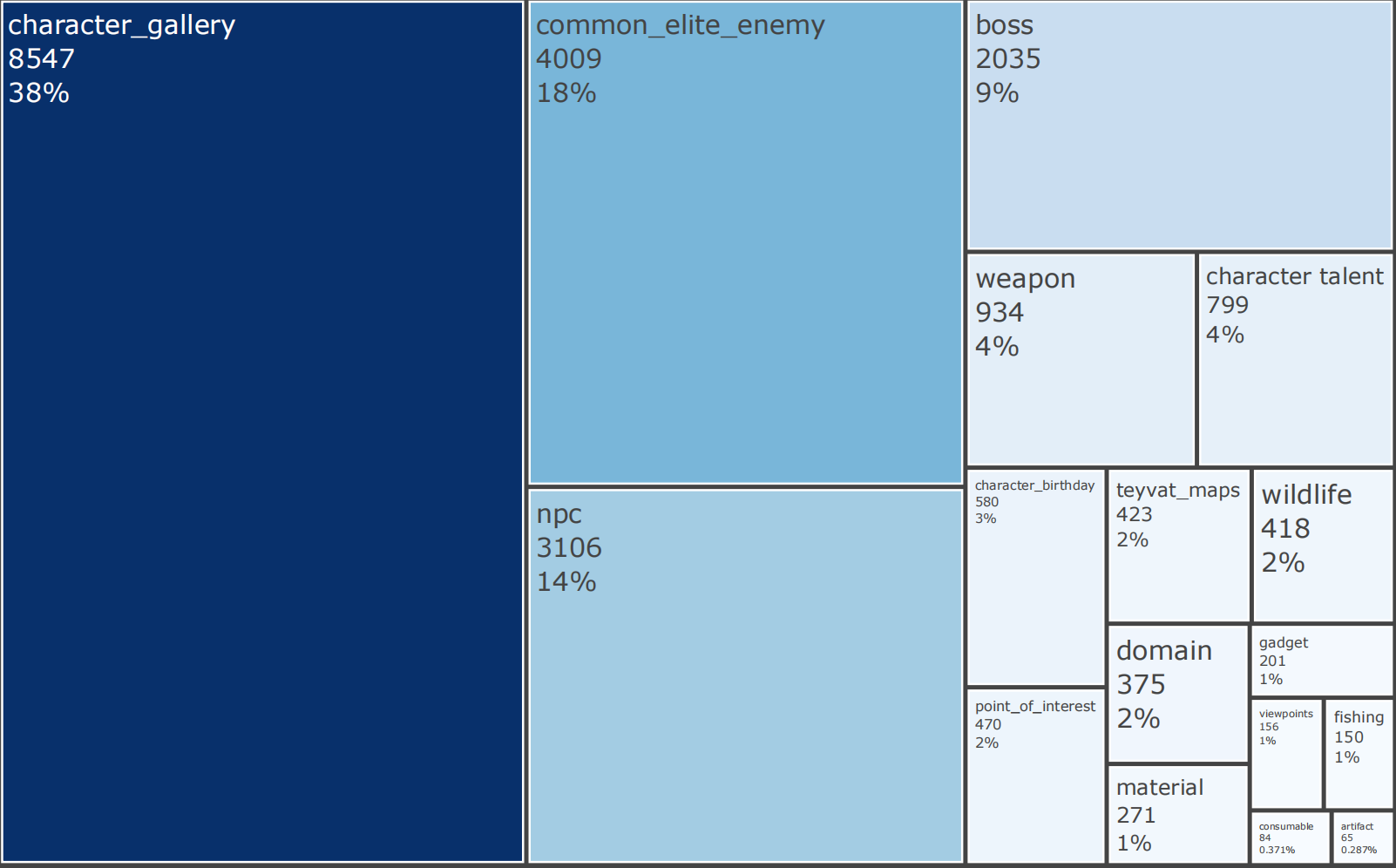

Data Distribution:

- Downloads last month

- 4

Model tree for mrzjy/GenshinImpact-5.0-ViT-SO400M-14-SigLIP-384

Base model

timm/ViT-SO400M-14-SigLIP-384