Training procedure

We finetuned Meta's Llama 2 7B model on the nampdn-ai/tiny-codes dataset for approximately 10,000 steps using MonsterAPI's no-code LLM finetuner.

This dataset contains 1.63 million rows of short, clear code snippets that can help LLMs learn to reason with both natural and programming languages. It covers a wide range of programming languages, such as Python, TypeScript, JavaScript, Ruby, Julia, Rust, C++, Bash, Java, C#, and Go. It also includes two database languages, Cypher (for graph databases) and SQL (for relational databases), to study relationships between entities.

The finetuning session completed in 53 hours and cost us about $125 for the entire run.
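To put the run totals in perspective, the short sketch below derives per-hour and per-step figures from the numbers reported above (53 hours, about $125, about 10,000 steps). The function name `run_summary` and the derived metrics are illustrative only; they are simple arithmetic on approximate totals, not values reported by the finetuner.

```python
# Run totals as reported in this card; all three are approximate.
TOTAL_HOURS = 53
TOTAL_COST_USD = 125
TOTAL_STEPS = 10_000


def run_summary(hours, cost_usd, steps):
    """Derive rough per-hour and per-step figures from the run totals."""
    return {
        "cost_per_hour_usd": cost_usd / hours,
        "seconds_per_step": hours * 3600 / steps,
        "cost_per_1k_steps_usd": cost_usd / steps * 1000,
    }


summary = run_summary(TOTAL_HOURS, TOTAL_COST_USD, TOTAL_STEPS)
for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

Under these totals the run averages roughly 19 seconds per step and a little under $2.40 per hour. Because the inputs are rounded, treat the outputs as estimates.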

Hyperparameters & Run details:

- Model Path: meta-llama/Llama-2-7b-hf

- Dataset: nampdn-ai/tiny-codes

- Learning rate: 0.0002

- Number of epochs: 1 (10k steps)

- Data split: Training: 90% / Validation: 10%

- Gradient accumulation steps: 1
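
For readers who want to reuse these settings elsewhere, the sketch below collects the hyperparameters listed above into a plain Python dataclass and estimates the train/validation row counts implied by the 90/10 split. The class `RunConfig`, its field names, and the helper `split_sizes` are hypothetical and are not MonsterAPI's configuration format. The row count of 1.63 million is the approximate dataset size quoted earlier, so the split sizes are estimates too.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RunConfig:
    """Hypothetical container for the settings listed in this card."""
    model_path: str = "meta-llama/Llama-2-7b-hf"
    dataset: str = "nampdn-ai/tiny-codes"
    learning_rate: float = 2e-4
    num_epochs: int = 1
    max_steps: int = 10_000            # "10k steps", approximate
    train_fraction: float = 0.9        # remaining 10% is validation
    gradient_accumulation_steps: int = 1


def split_sizes(total_rows, train_fraction):
    """Return (train_rows, validation_rows) for a simple fractional split."""
    train_rows = round(total_rows * train_fraction)
    return train_rows, total_rows - train_rows


config = RunConfig()
APPROX_TOTAL_ROWS = 1_630_000  # assumption: the rounded size quoted above
train_rows, val_rows = split_sizes(APPROX_TOTAL_ROWS, config.train_fraction)
print(f"train rows: {train_rows:,}, validation rows: {val_rows:,}")
```

Keeping the settings in one immutable object makes it easier to log them alongside a run or to compare them against a later experiment.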

Framework versions

- PEFT 0.4.0

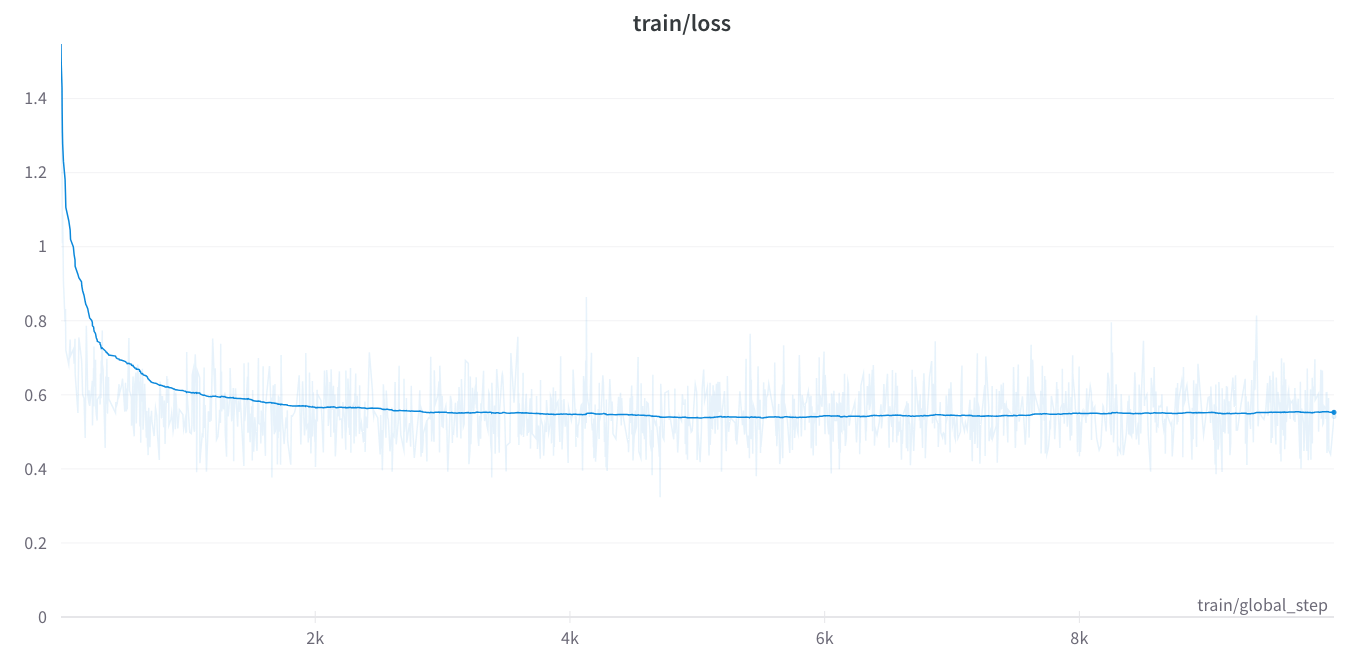

Loss metrics:

