Model Card for QA_GeneraToR

Excited 😄 to share with you my very first model 🤖 for generating question-answering datasets! This incredible model takes articles 📜 or web pages, and all you need to provide is a prompt and context. It works like magic ✨, generating both the question and the answer. The prompt can be anything – "what," "who," "where" ... etc ! 😅

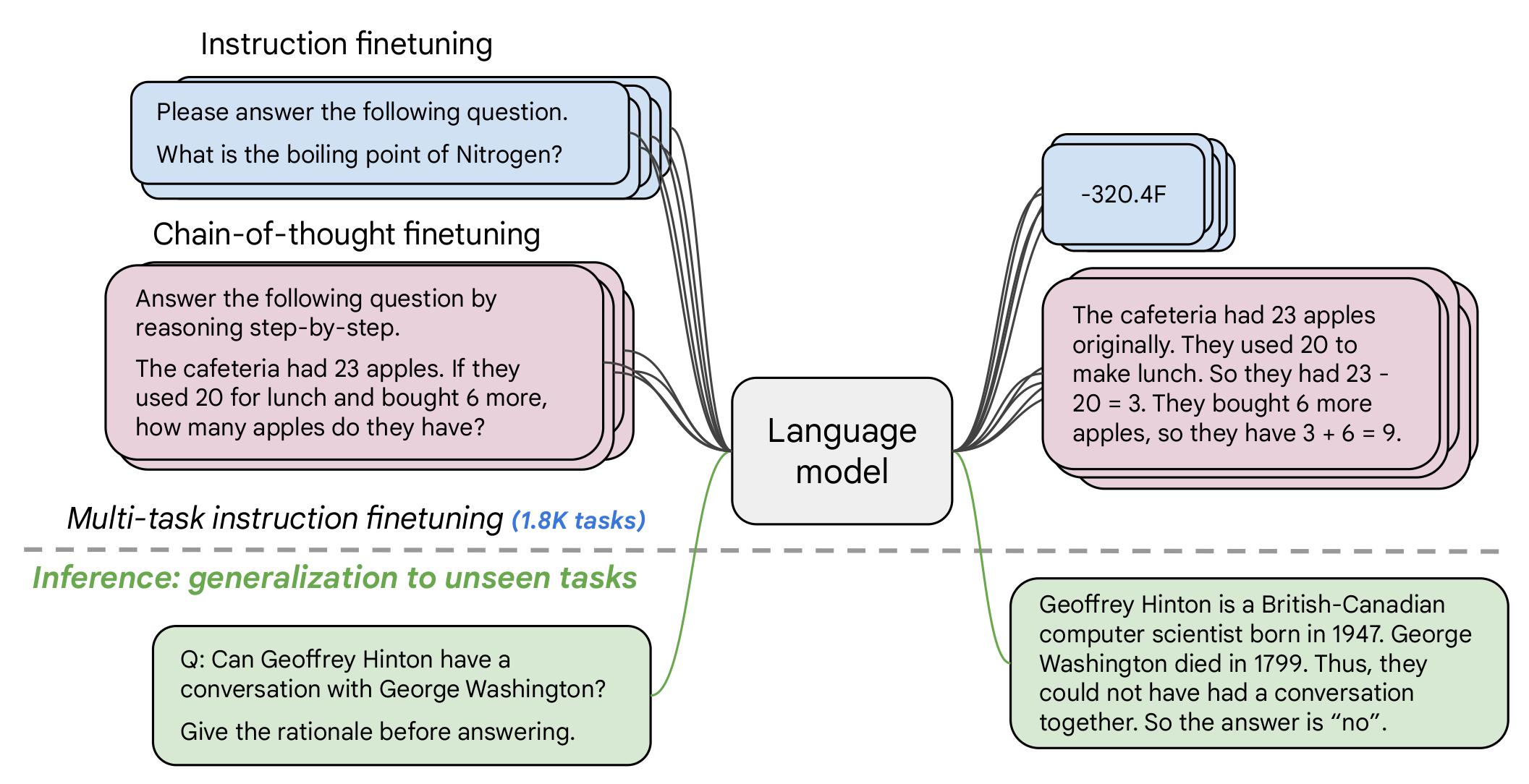

I've harnessed the power of the flan-t5 model 🚀, which has truly elevated the quality of the results. You can find all the code and details in the repository right here: https://lnkd.in/dhE5s_qg

And guess what? I've even deployed the project, so you can experience the magic firsthand: https://lnkd.in/diq-d3bt ❤️

Join me on this exciting journey into #nlp, #textgeneration, #t5, #deeplearning, and #huggingface. Your feedback and collaboration are more than welcome! 🌟

my fine tuned model

This model is fine tuned to generate a question with answers from a context , why that can be very usful this can help you to generate a dataset from a book article any thing you would to make from it dataset and train another model on this dataset , give the model any context with pre prometed of quation you want + context and it will extarct question + answer for you this are promted i use [ "which", "how", "when", "where", "who", "whom", "whose", "why", "which", "who", "whom", "whose", "whereas", "can", "could", "may", "might", "will", "would", "shall", "should", "do", "does", "did", "is", "are", "am", "was", "were", "be", "being", "been", "have", "has", "had", "if", "is", "are", "am", "was", "were", "do", "does", "did", "can", "could", "will", "would", "shall", "should", "might", "may", "must", "may", "might", "must"]

orignal model info

Code

from transformers import AutoTokenizer, AutoModelForQuestionAnswering

model_name="mohamedemam/Question_generator"

def generate_question_answer(context, prompt, model_name="mohamedemam/Question_generator"):

"""

Generates a question-answer pair using the provided context, prompt, and model.

Args:

context: String containing the text or URL of the source material.

prompt: String starting with a question word (e.g., "what," "who").

model_name: Optional string specifying the model name (default: google/flan-t5-base).

Returns:

A tuple containing the generated question and answer strings.

"""

tokenizer = AutoTokenizer.from_pretrained(model_name)

model = AutoModelForQuestionAnswering.from_pretrained(model_name)

inputs = tokenizer(context, return_tensors="pt")

with torch.no_grad():

outputs = model(**inputs)

start_scores, end_scores = outputs.start_logits, outputs.end_logits

answer_start = torch.argmax(start_scores)

answer_end = torch.argmax(end_scores) + 1 # Account for inclusive end index

answer = tokenizer.convert_tokens_to_strings(tokenizer.convert_ids_to_tokens(inputs["input_ids"][answer_start:answer_end]))[0]

question = f"{prompt} {answer}" # Formulate the question using answer

return question, answer

# Example usage

context = "The capital of France is Paris."

prompt = "What"

question, answer = generate_question_answer(context, prompt)

print(f"Question: {question}")

print(f"Answer: {answer}")

- Downloads last month

- 18