---
license: bigscience-bloom-rail-1.0
datasets:
- OpenAssistant/oasst1
- LEL-A/translated_german_alpaca_validation
- deepset/germandpr
- oscar-corpus/OSCAR-2301
language:
- de
pipeline_tag: conversational
---

# Instruction-fine-tuned German language model (6B parameters; **early alpha version**)

Base model: [malteos/bloom-6b4-clp-german](https://huggingface.co/malteos/bloom-6b4-clp-german) [(Ostendorff and Rehm, 2023)](https://arxiv.org/abs/2301.09626)

Trained on:

- 20B additional German tokens (Wikimedia dumps and OSCAR 2023)

- [OpenAssistant/oasst1](https://huggingface.co/datasets/OpenAssistant/oasst1) (German subset)

- [LEL-A/translated_german_alpaca_validation](https://huggingface.co/datasets/LEL-A/translated_german_alpaca_validation)

- [LEL-A's version of deepset/germandpr](https://github.com/LEL-A/EuroInstructProject#instruct-germandpr-dataset-v1-german)

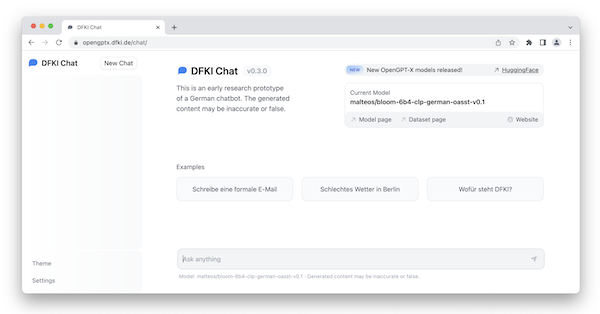
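The card names only the German subset of OpenAssistant/oasst1 and does not describe how that subset was extracted. Below is a minimal sketch of pulling German prompter/assistant pairs out of oasst1. It assumes rows that carry the public oasst1 fields (`message_id`, `parent_id`, `role`, `lang`, `text`). The function name `german_oasst_pairs` is hypothetical, and this is not necessarily the preprocessing used for this model.

```python
from collections.abc import Iterable


def german_oasst_pairs(rows: Iterable[dict]) -> list[tuple[str, str]]:
    """Return (prompter_text, assistant_text) pairs from German oasst1 messages.

    Hypothetical helper: assumes each row has the oasst1 fields
    `message_id`, `parent_id`, `role` ("prompter" or "assistant"), `lang`, `text`.
    """
    # Keep only German messages, indexed by their message ID.
    german = {row["message_id"]: row for row in rows if row["lang"] == "de"}
    pairs = []
    for msg in german.values():
        if msg["role"] != "assistant":
            continue
        # In the oasst1 message tree, an assistant reply answers its parent message.
        parent = german.get(msg["parent_id"])
        if parent is not None and parent["role"] == "prompter":
            pairs.append((parent["text"], msg["text"]))
    return pairs
```

With the Hugging Face `datasets` library, `german_oasst_pairs(load_dataset("OpenAssistant/oasst1", split="train"))` would apply it to the training split. For brevity, only direct prompter/assistant pairs are kept and earlier turns of a conversation are dropped.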

## Chat demo


[https://opengptx.dfki.de/chat/](https://opengptx.dfki.de/chat/)

Please note that this is a research prototype and may not be suitable for extensive use.
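The hosted demo is the documented way to try the model. For local experiments, a sketch like the one below should work with Hugging Face `transformers`, plus `torch` and `accelerate` for `device_map="auto"`. The following parts are assumptions and are not taken from this card: the OpenAssistant-style prompt tokens (`<|prompter|>`, `<|assistant|>`, `<|endoftext|>`) and the helper names `build_prompt` and `generate_reply`, which are hypothetical. Check the tokenizer's special tokens before relying on this template.

```python
# Assumption: OpenAssistant-style chat tokens; this card does not document the prompt template.
PROMPTER = "<|prompter|>"
ASSISTANT = "<|assistant|>"
END = "<|endoftext|>"


def build_prompt(turns: list[tuple[str, str]], user_message: str) -> str:
    """Hypothetical helper: format earlier (user, assistant) turns plus a new user message."""
    parts = [f"{PROMPTER}{user}{END}{ASSISTANT}{assistant}{END}" for user, assistant in turns]
    # End with an open assistant turn so the model continues as the assistant.
    parts.append(f"{PROMPTER}{user_message}{END}{ASSISTANT}")
    return "".join(parts)


def generate_reply(model_id: str, prompt: str, max_new_tokens: int = 256) -> str:
    """Hypothetical helper: sample one reply with Hugging Face transformers."""
    # Imported lazily so that build_prompt can be used without torch installed.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id, torch_dtype=torch.float16, device_map="auto"
    )
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    output = model.generate(**inputs, max_new_tokens=max_new_tokens, do_sample=True, top_p=0.9)
    # Decode only the newly generated tokens, not the echoed prompt.
    new_tokens = output[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

For example, `generate_reply("<repository ID of this model>", build_prompt([], "Was ist die Hauptstadt von Deutschland?"))` downloads the weights on first use. In float16 (about 2 bytes per parameter), the weights alone take more than 12 GB of GPU memory.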

## How to cite

If you are using our code or models, please cite [our paper](https://arxiv.org/abs/2301.09626):

```bibtex
@misc{Ostendorff2023clp,
  doi = {10.48550/ARXIV.2301.09626},
  author = {Ostendorff, Malte and Rehm, Georg},
  title = {Efficient Language Model Training through Cross-Lingual and Progressive Transfer Learning},
  publisher = {arXiv},
  year = {2023}
}
```

## License

[BigScience BLOOM RAIL 1.0](https://bigscience.huggingface.co/blog/the-bigscience-rail-license)

## Acknowledgements

This model was trained during the [Helmholtz GPU Hackathon 2023](https://www.fz-juelich.de/de/ias/jsc/aktuelles/termine/2023/helmholtz-gpu-hackathon-2023).

We gratefully thank the organizers for hosting this event and for providing the computing resources.