Hindi Sentiment Analysis Model

This repository contains a Hindi sentiment analysis model that classifies text into three categories: negative (neg), neutral (neu), and positive (pos). Several BERT-based architectures were trained and evaluated, and XLM-RoBERTa performed best.

Model Performance

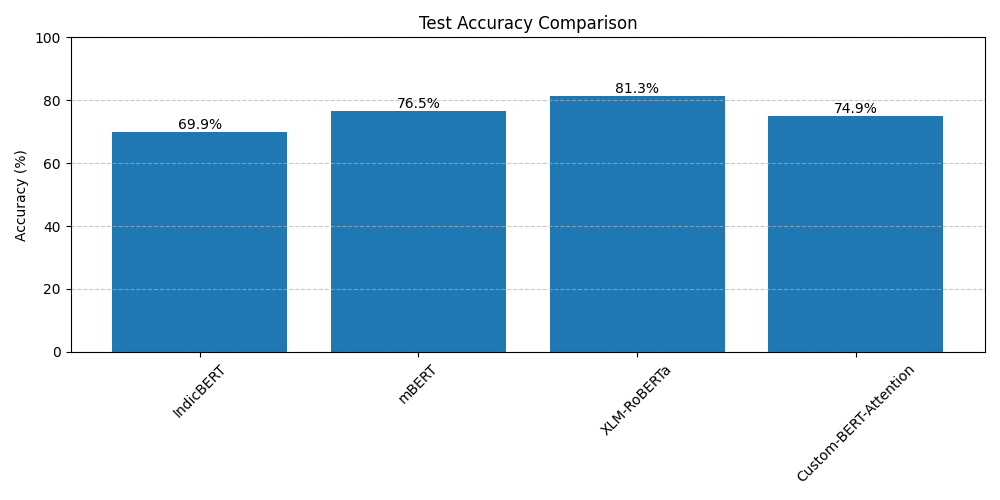

Test Accuracy Comparison

Test accuracy for each architecture:

- XLM-RoBERTa: 81.3%

- mBERT: 76.5%

- Custom-BERT-Attention: 74.9%

- IndicBERT: 69.9%

Detailed Results

Confusion Matrices

The confusion matrices show the prediction performance for each model:

- XLM-RoBERTa shows the strongest performance, with 82.1% accuracy on the positive class

- mBERT demonstrates balanced performance across classes

- Custom-BERT-Attention maintains consistent performance

- IndicBERT shows room for improvement in negative class detection

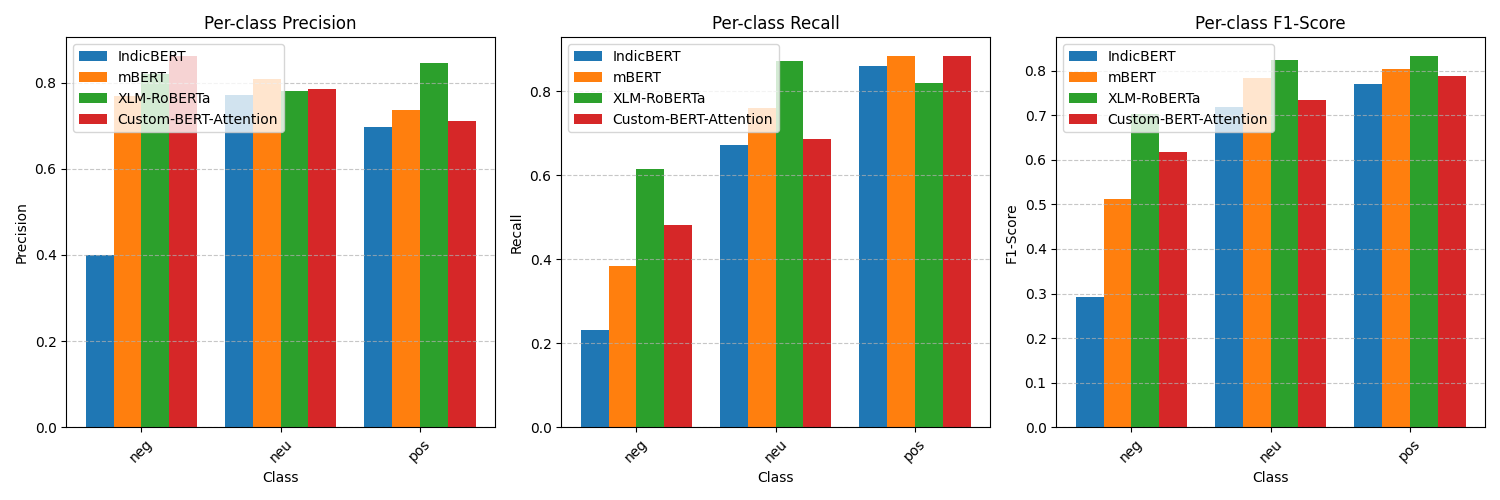
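As a minimal sketch of how a three-class confusion matrix and the overall accuracy can be computed from predictions, the snippet below uses plain Python. The label order, the row/column convention (rows are true labels, columns are predicted labels), and the toy labels are assumptions for illustration; they are not the model's actual outputs.

```python
LABELS = ["neg", "neu", "pos"]  # class names from this README; the order is an assumption


def confusion_matrix(y_true, y_pred, labels=LABELS):
    """Return matrix[i][j] = number of examples with true label i predicted as label j."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for true, pred in zip(y_true, y_pred):
        matrix[index[true]][index[pred]] += 1
    return matrix


def accuracy(matrix):
    """Overall accuracy: correct predictions (the diagonal) divided by all predictions."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total if total else 0.0


# Toy labels for illustration only; these are not the model's outputs.
y_true = ["pos", "pos", "neu", "neg", "neg", "neu"]
y_pred = ["pos", "neu", "neu", "neg", "pos", "neu"]

cm = confusion_matrix(y_true, y_pred)
print(cm)
print(accuracy(cm))
```

Dividing each diagonal entry by its row sum gives the per-class accuracy (recall), which is one way a figure like the positive-class number above can be read off a matrix.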

Per-class Metrics

The detailed per-class metrics show:

Precision:

- Positive class: Best performance across all models (~0.80-0.85)

- Neutral class: Consistent performance (~0.75-0.80)

- Negative class: More varied performance (~0.40-0.70)

Recall:

- Positive class: High recall across models (~0.85-0.90)

- Neutral class: Moderate recall (~0.65-0.85)

- Negative class: Lower but improving recall (~0.25-0.60)

F1-Score:

- Positive class: Best overall performance (~0.80-0.85)

- Neutral class: Good balance (~0.70-0.80)

- Negative class: Area for potential improvement (~0.30-0.65)
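For readers who want to reproduce metrics of this kind, here is a small sketch that derives precision, recall, and F1 per class from a confusion matrix. The function name `per_class_metrics` and the toy counts are hypothetical; the counts are not taken from the reported results.

```python
def per_class_metrics(matrix, labels):
    """Compute precision, recall and F1 per class from a confusion matrix.

    matrix[i][j] counts examples whose true class is i and predicted class is j.
    """
    metrics = {}
    for k, label in enumerate(labels):
        tp = matrix[k][k]
        predicted_k = sum(row[k] for row in matrix)  # column sum: everything predicted as k
        actual_k = sum(matrix[k])                    # row sum: everything that truly is k
        precision = tp / predicted_k if predicted_k else 0.0
        recall = tp / actual_k if actual_k else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        metrics[label] = {"precision": precision, "recall": recall, "f1": f1}
    return metrics


# Toy counts for illustration only; not taken from the reported results.
toy_matrix = [
    [5, 3, 2],  # true neg
    [1, 8, 1],  # true neu
    [0, 1, 9],  # true pos
]

results = per_class_metrics(toy_matrix, ["neg", "neu", "pos"])
for label, m in results.items():
    print(label, {name: round(value, 3) for name, value in m.items()})
```

In this toy matrix the negative class has high precision but low recall, the same kind of gap the ranges above describe for the real models.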

Training Progress

The training graphs show:

- Consistent loss reduction across epochs

- Stable validation accuracy improvement

- No significant overfitting

- XLM-RoBERTa achieving the best validation accuracy

- Custom-BERT-Attention showing rapid initial learning

Model Usage

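import torch
from transformers import AutoModelForSequenceClassification, AutoTokenizer

# Load the model and tokenizer from the Hugging Face Hub
tokenizer = AutoTokenizer.from_pretrained("madhav112/hindi-sentiment-analysis")
model = AutoModelForSequenceClassification.from_pretrained("madhav112/hindi-sentiment-analysis")

# Example usage (the sentence means "This film is very good")
text = "यह फिल्म बहुत अच्छी है"
inputs = tokenizer(text, return_tensors="pt", padding=True, truncation=True)

# Run inference without tracking gradients
with torch.no_grad():
    outputs = model(**inputs)

# Index of the highest-scoring class; label names come from the model config
predictions = outputs.logits.argmax(-1)
print(model.config.id2label[predictions.item()])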
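The logits returned by the model are raw scores. The sketch below shows one way to turn them into per-class probabilities with a softmax, which is useful when a confidence score is needed alongside the predicted label. The label order in `ID2LABEL` and the example logits are assumptions; the real mapping should be checked in `model.config.id2label`.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of raw scores."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def describe_prediction(logits, id2label):
    """Return the most likely label and the probability of every label."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return id2label[best], {id2label[i]: p for i, p in enumerate(probs)}


# Assumed label order; check model.config.id2label for the real mapping.
ID2LABEL = {0: "neg", 1: "neu", 2: "pos"}

# Made-up logits standing in for outputs.logits[0].tolist().
label, scores = describe_prediction([-1.2, 0.3, 2.5], ID2LABEL)
print(label, {name: round(p, 3) for name, p in scores.items()})
```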

Model Architecture

The repository contains experiments with multiple BERT-based architectures:

XLM-RoBERTa (Best performing)

- Highest overall accuracy

- Best performance on positive sentiment

- Strong cross-lingual capabilities

mBERT

- Good balanced performance

- Strong on neutral class detection

- Consistent across all metrics

Custom-BERT-Attention

- Competitive performance

- Quick convergence during training

- Good precision on positive class

IndicBERT

- Baseline performance

- Room for improvement

- Better suited for specific Indian language tasks

Dataset

The model was trained on a Hindi sentiment analysis dataset with three classes:

- Positive (pos)

- Neutral (neu)

- Negative (neg)

According to the confusion matrices, the class distribution is balanced; performance is strongest on the positive class and weakest on the negative class.

Training Details

The model was trained for 7 epochs with the following characteristics:

- Learning rate: Optimized for each architecture

- Batch size: Adjusted for optimal performance

- Validation split: Regular evaluation during training

- Early stopping: Monitored for best model selection

- Loss function: Cross-entropy loss
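To make the last two items concrete, the sketch below shows a per-example cross-entropy loss and a simple tracker that keeps the best validation accuracy and signals early stopping. This is not the training code used for this model: the class name `BestEpochTracker`, the `patience` value, and the validation accuracies are all hypothetical.

```python
import math


def cross_entropy(logits, target):
    """Cross-entropy for one example: -log(softmax(logits)[target])."""
    peak = max(logits)
    log_sum_exp = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return log_sum_exp - logits[target]


class BestEpochTracker:
    """Track the best validation accuracy and signal when to stop early."""

    def __init__(self, patience=2):  # patience is a hypothetical value
        self.patience = patience
        self.best_accuracy = float("-inf")
        self.best_epoch = None
        self.epochs_without_improvement = 0

    def update(self, epoch, val_accuracy):
        """Record one epoch; return True if training should stop."""
        if val_accuracy > self.best_accuracy:
            self.best_accuracy = val_accuracy
            self.best_epoch = epoch
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience


# Made-up validation accuracies for 7 epochs; not the reported training curve.
val_history = [0.70, 0.74, 0.78, 0.77, 0.79, 0.78, 0.78]

tracker = BestEpochTracker(patience=2)
for epoch, acc in enumerate(val_history, start=1):
    if tracker.update(epoch, acc):
        break

print(tracker.best_epoch, tracker.best_accuracy)
print(round(cross_entropy([0.0, 0.0, 0.0], 2), 4))
```

In a real run, the checkpoint saved at `best_epoch` would be the one kept as the final model.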

Limitations

- Lower performance on negative sentiment detection than on positive

- Neutral class shows moderate confusion with both the positive and negative classes

- Performance may vary on domain-specific text

- Best suited for standard Hindi text; may have reduced performance on heavily colloquial or dialectal variations

Citation

If you use this model in your research, please cite:

@misc{madhav2024hindisentiment,

author = {Madhav},

title = {Hindi Sentiment Analysis Model},

year = {2024},

publisher = {HuggingFace},

howpublished = {\url{https://huggingface.co/madhav112/hindi-sentiment-analysis}}

}

Author

Madhav

- HuggingFace: madhav

License

This project is licensed under the MIT License - see the LICENSE file for details.

Acknowledgments

Special thanks to the HuggingFace team and the open-source community for providing the tools and frameworks that made this model possible.

language: hi
tags:
- hindi
- sentiment-analysis
- text-classification
- bert
datasets:
- hindi-sentiment
metrics:
- accuracy
- f1
- precision
- recall
model-index:
- name: hindi-sentiment-analysis
  results:
  - task:
      type: text-classification
      name: Text Classification
    dataset:
      name: Hindi Sentiment
      type: hindi-sentiment
    metrics:
    - type: accuracy
      value: 81.3
      name: Test Accuracy
    - type: f1
      value: 0.82
      name: F1 Score
  - task:
      type: text-classification
      name: Text Classification
    dataset:
      name: Hindi Sentiment
      type: hindi-sentiment
    metrics:

Base model

FacebookAI/xlm-roberta-large