language:

- en

pipeline_tag: text-classification

Model Summary

This is a fact-checking model from our work:

📃 MiniCheck: Efficient Fact-Checking of LLMs on Grounding Documents (GitHub Repo)

The model is based on Flan-T5-Large and predicts a binary label: 1 for supported and 0 for unsupported. It makes predictions at the sentence level, taking as input a document and a sentence and determining whether the sentence is supported by the document: MiniCheck-Model(document, claim) -> {0, 1}
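Because the model works at the sentence level, a multi-sentence LLM response has to be split into sentences that are checked one at a time. The sketch below shows that pattern under explicit assumptions. `toy_checker` is a hypothetical word-overlap heuristic standing in for MiniCheck-Model(document, claim), not the real model. `split_sentences` is a naive regex splitter rather than a proper sentence tokenizer. Treating a response as grounded only when every sentence is supported is one possible aggregation rule, not necessarily the one used in the paper.

```python
import re
from typing import Callable, List, Tuple


def toy_checker(document: str, claim: str) -> int:
    """Hypothetical stand-in for MiniCheck-Model(document, claim) -> {0, 1}.

    Returns 1 only if every word longer than three letters in the claim also
    appears in the document. This is a toy heuristic, not how MiniCheck works.
    """
    doc_words = set(re.findall(r"[a-z]+", document.lower()))
    claim_words = [w for w in re.findall(r"[a-z]+", claim.lower()) if len(w) > 3]
    return int(all(w in doc_words for w in claim_words))


def split_sentences(text: str) -> List[str]:
    """Naive splitter: breaks after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def check_response(document: str, response: str,
                   checker: Callable[[str, str], int]) -> Tuple[List[int], bool]:
    """Check each sentence of `response` against `document`."""
    labels = [checker(document, sentence) for sentence in split_sentences(response)]
    # One possible aggregation: the response is grounded only if all sentences are supported.
    return labels, all(labels)


doc = "A group of students gather in the school library to study for their upcoming final exams."
response = "The students gather in the library. They are on vacation."
print(check_response(doc, response, toy_checker))  # ([1, 0], False)
```

In practice, `toy_checker` would be replaced by a small wrapper around the real model's scoring call.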

MiniCheck-Flan-T5-Large is fine-tuned from google/flan-t5-large (Chung et al., 2022) on a combination of 35K examples:

- 21K ANLI examples (Nie et al., 2020)

- 14K synthetic examples generated from scratch in a structured way (more details in the paper).
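As a rough illustration of how the two sources could be combined into one binary training set, the sketch below collapses ANLI's three-way labels into supported/unsupported and shuffles the result together with the synthetic examples. It makes three assumptions. ANLI uses the Hugging Face `anli` label ids (0 = entailment, 1 = neutral, 2 = contradiction). Only entailment counts as supported. The synthetic examples already use `doc`/`claim`/`label` fields. The field names and the `build_mixture` helper are hypothetical, and the paper's actual preprocessing may differ.

```python
import random
from typing import Dict, List

# Assumption: Hugging Face "anli" label ids (0 = entailment, 1 = neutral,
# 2 = contradiction). Only entailment is mapped to "supported" (1).
ANLI_TO_BINARY = {0: 1, 1: 0, 2: 0}


def to_binary_anli(example: Dict) -> Dict:
    """Convert one ANLI example to a hypothetical (doc, claim, label) format."""
    return {
        "doc": example["premise"],
        "claim": example["hypothesis"],
        "label": ANLI_TO_BINARY[example["label"]],
    }


def build_mixture(anli: List[Dict], synthetic: List[Dict], seed: int = 0) -> List[Dict]:
    """Combine converted ANLI examples with synthetic ones and shuffle them."""
    mixed = [to_binary_anli(ex) for ex in anli] + list(synthetic)
    random.Random(seed).shuffle(mixed)
    return mixed
```

With the full datasets, the mixture would contain 21K + 14K = 35K examples.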

Model Variants

We also provide two other MiniCheck model variants:

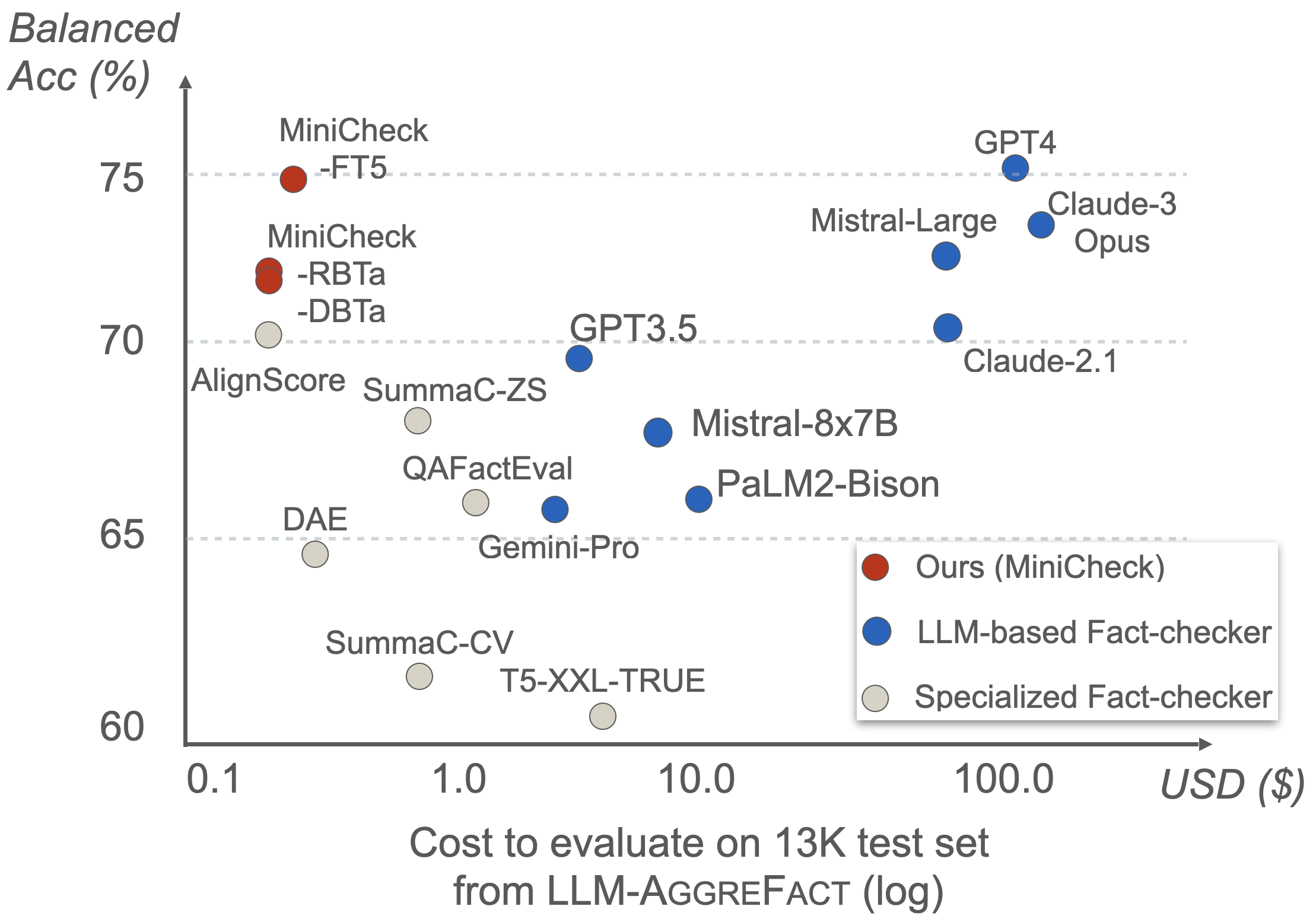

Model Performance

The performance of these models is evaluated on our newly collected benchmark, LLM-AggreFact (unseen by our models during training), which is built from 10 recent human-annotated datasets on fact-checking and grounding LLM generations. Our most capable model, MiniCheck-Flan-T5-Large, outperforms all existing specialized fact-checkers of a similar scale by a large margin (a 4-10% absolute increase) and is on par with GPT-4, while being 400x cheaper. See the full results in our work.

Note: We only evaluated our models on real claims, i.e. claims without any human intervention in any form, such as injecting specific error types into model-generated claims. Such edited claims do not reflect the actual behavior of LLMs.

Model Usage Demo

First, clone our GitHub repo and install the necessary packages from requirements.txt.

Below is a simple use case:

```python
from minicheck.minicheck import MiniCheck

doc = "A group of students gather in the school library to study for their upcoming final exams."
claim_1 = "The students are preparing for an examination."
claim_2 = "The students are on vacation."

# model_name can be one of ['roberta-large', 'deberta-v3-large', 'flan-t5-large']
scorer = MiniCheck(model_name='flan-t5-large', device='cuda:0', cache_dir='./ckpts')
pred_label, raw_prob, _, _ = scorer.score(docs=[doc, doc], claims=[claim_1, claim_2])

print(pred_label)  # [1, 0]
print(raw_prob)    # [0.9805923700332642, 0.007121307775378227]
```
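The demo returns both hard labels and raw support probabilities. A natural reading is that `pred_label` is `raw_prob` thresholded at 0.5. This card does not state the threshold, so `probs_to_labels` below is a hypothetical sketch of that assumption. It reproduces the printed labels from the probabilities shown above. Exposing `threshold` lets you make the checker stricter: a higher threshold marks more claims as unsupported.

```python
from typing import List, Sequence


def probs_to_labels(probs: Sequence[float], threshold: float = 0.5) -> List[int]:
    """Hypothetical: label a claim as supported (1) when its probability exceeds `threshold`."""
    return [int(p > threshold) for p in probs]


raw_prob = [0.9805923700332642, 0.007121307775378227]  # values from the demo output above
print(probs_to_labels(raw_prob))                  # [1, 0]
print(probs_to_labels(raw_prob, threshold=0.99))  # [0, 0]
```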

Test on our LLM-AggreFact Benchmark

```python
import pandas as pd
from datasets import load_dataset
from minicheck.minicheck import MiniCheck

# load 13K test data
df = pd.DataFrame(load_dataset("lytang/LLM-AggreFact")['test'])
docs = df.doc.values
claims = df.claim.values

scorer = MiniCheck(model_name='flan-t5-large', device='cuda:0', cache_dir='./ckpts')
pred_label, raw_prob, _, _ = scorer.score(docs=docs, claims=claims)  # ~20 mins, depending on hardware
```
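Scoring all ~13K pairs in a single call can take a while. A minimal sketch of splitting the work into chunks is shown below. `score_in_chunks` is a hypothetical helper that only assumes a callable returning `(labels, probs)` for lists of documents and claims. The loop body is a natural place to save intermediate results if a run might be interrupted. This sketch only prints progress.

```python
from typing import Callable, List, Sequence, Tuple

# Any function mapping a batch of (docs, claims) to (labels, probs).
ScoreFn = Callable[[List[str], List[str]], Tuple[Sequence[int], Sequence[float]]]


def score_in_chunks(docs: Sequence[str], claims: Sequence[str],
                    score_fn: ScoreFn, chunk_size: int = 1000
                    ) -> Tuple[List[int], List[float]]:
    """Score (doc, claim) pairs chunk by chunk, reporting progress after each chunk."""
    if len(docs) != len(claims):
        raise ValueError("docs and claims must have the same length")
    labels: List[int] = []
    probs: List[float] = []
    for start in range(0, len(docs), chunk_size):
        end = min(start + chunk_size, len(docs))
        chunk_labels, chunk_probs = score_fn(list(docs[start:end]), list(claims[start:end]))
        labels.extend(chunk_labels)
        probs.extend(chunk_probs)
        # Intermediate results could be written to disk here.
        print(f"scored {end}/{len(docs)} pairs")
    return labels, probs
```

With the scorer above, it could be called as `score_in_chunks(docs, claims, lambda d, c: scorer.score(docs=d, claims=c)[:2])`, keeping only the first two of the four returned values, as in the demo.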

To evaluate the results on the benchmark:

```python
from sklearn.metrics import balanced_accuracy_score

df['preds'] = pred_label

# Balanced accuracy (in %) for each dataset in the benchmark
rows = []
for dataset in df.dataset.unique():
    sub_df = df[df.dataset == dataset]
    bacc = balanced_accuracy_score(sub_df.label, sub_df.preds) * 100
    rows.append([dataset, bacc])

result_df = pd.DataFrame(rows, columns=['Dataset', 'BAcc'])
# Unweighted average over datasets
result_df.loc[len(result_df)] = ['Average', result_df.BAcc.mean()]
print(result_df.round(1))
```
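Balanced accuracy is the mean of per-class recall. For binary labels, it averages the true positive rate and the true negative rate, so it is not inflated when one label dominates a dataset. The sketch below reimplements the per-dataset evaluation in plain Python to make the metric explicit. `balanced_accuracy` is intended to match sklearn's `balanced_accuracy_score`, which averages recall over the classes present in the gold labels. `per_dataset_bacc` is a hypothetical helper that mirrors the loop above, including the unweighted "Average" row.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def balanced_accuracy(labels: Sequence[int], preds: Sequence[int]) -> float:
    """Mean recall over the classes present in `labels`."""
    total: Dict[int, int] = defaultdict(int)
    correct: Dict[int, int] = defaultdict(int)
    for y, p in zip(labels, preds):
        total[y] += 1
        correct[y] += int(y == p)
    recalls = [correct[c] / total[c] for c in total]
    return sum(recalls) / len(recalls)


def per_dataset_bacc(dataset_names: Sequence[str], labels: Sequence[int],
                     preds: Sequence[int]) -> Dict[str, float]:
    """Balanced accuracy (in %) per dataset plus an unweighted 'Average'."""
    groups: Dict[str, Tuple[List[int], List[int]]] = defaultdict(lambda: ([], []))
    for name, y, p in zip(dataset_names, labels, preds):
        groups[name][0].append(y)
        groups[name][1].append(p)
    scores = {name: 100 * balanced_accuracy(ys, ps) for name, (ys, ps) in groups.items()}
    # Each dataset counts equally, regardless of its size.
    scores["Average"] = sum(scores.values()) / len(scores)
    return scores


print(per_dataset_bacc(["A", "A", "A", "A", "B", "B"], [1, 1, 0, 0, 1, 0], [1, 0, 0, 0, 1, 0]))
# {'A': 75.0, 'B': 100.0, 'Average': 87.5}
```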

Citation

```bibtex
@misc{tang2024minicheck,
      title={MiniCheck: Efficient Fact-Checking of LLMs on Grounding Documents},
      author={Liyan Tang and Philippe Laban and Greg Durrett},
      year={2024},
      eprint={2404.10774},
      archivePrefix={arXiv},
      primaryClass={cs.CL}
}
```