---
language:
- en
- ko
datasets:
- kyujinpy/KOpen-platypus
library_name: transformers
pipeline_tag: text-generation
license: cc-by-nc-sa-4.0
---

This model was developed by the LLM research consortium of (주)미디어그룹사람과숲 (Media Group Saram-gwa-Soop Co., Ltd.) and (주)마커 (Markr Co., Ltd.).

The license is cc-by-nc-sa-4.0.

Ko-Platypus2-13B

Model Details

More details: KO-Platypus repository (GitHub)

Model Developers: Kyujin Han (kyujinpy)

Input: Models input text only.

Output: Models generate text only.

Model Architecture: KO-Platypus2-13B is an auto-regressive language model based on the LLaMA2 transformer architecture.

Base Model: hyunseoki/ko-en-llama2-13b

Training Dataset

I used KOpen-platypus, a high-quality Korean translation of the Open-Platypus dataset.

Training was done on a single A100 40GB GPU in Colab.

Model Benchmark

KO-LLM leaderboard

- Scores as reported on the Open KO-LLM LeaderBoard.

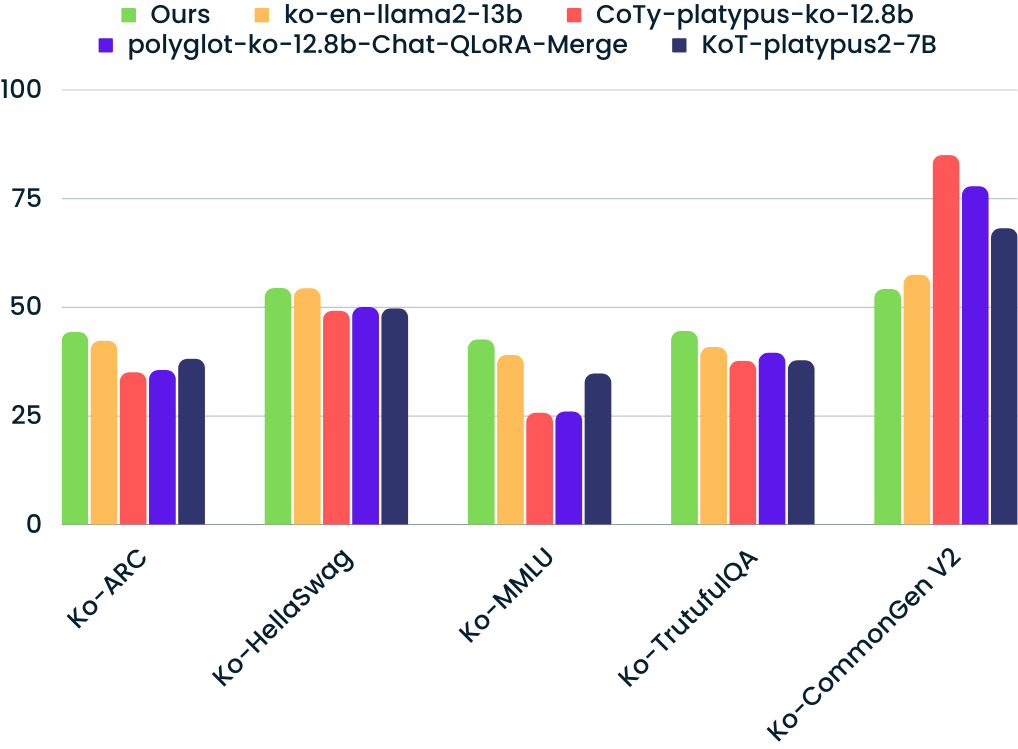

| Model | Average | Ko-ARC | Ko-HellaSwag | Ko-MMLU | Ko-TruthfulQA | Ko-CommonGen V2 |
|---|---|---|---|---|---|---|
| KO-Platypus2-13B(ours) | 47.90 | 44.20 | 54.31 | 42.47 | 44.41 | 54.11 |
| hyunseoki/ko-en-llama2-13b | 46.68 | 42.15 | 54.23 | 38.90 | 40.74 | 57.39 |
| MarkrAI/kyujin-CoTy-platypus-ko-12.8b | 46.44 | 34.98 | 49.11 | 25.68 | 37.59 | 84.86 |
| momo/polyglot-ko-12.8b-Chat-QLoRA-Merge | 45.71 | 35.49 | 49.93 | 25.97 | 39.43 | 77.70 |
| KoT-platypus2-7B | 45.62 | 38.05 | 49.63 | 34.68 | 37.69 | 68.08 |

Comparison with the top 4 SOTA models (updated 10/06).
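The Average column appears to be the unweighted mean of the five task scores, i.e. Average = (Ko-ARC + Ko-HellaSwag + Ko-MMLU + Ko-TruthfulQA + Ko-CommonGen V2) / 5. The sketch below re-derives it from the table values. Two rows (momo/polyglot-ko-12.8b-Chat-QLoRA-Merge and KoT-platypus2-7B) differ from the simple mean of the rounded scores by about 0.006; this is presumably because the leaderboard averages unrounded per-task scores, which is an assumption, so the check allows a 0.01 tolerance.

```python
# Per-task scores copied from the table:
# (Ko-ARC, Ko-HellaSwag, Ko-MMLU, Ko-TruthfulQA, Ko-CommonGen V2), reported Average
LEADERBOARD = {
    "KO-Platypus2-13B(ours)": ([44.20, 54.31, 42.47, 44.41, 54.11], 47.90),
    "hyunseoki/ko-en-llama2-13b": ([42.15, 54.23, 38.90, 40.74, 57.39], 46.68),
    "MarkrAI/kyujin-CoTy-platypus-ko-12.8b": ([34.98, 49.11, 25.68, 37.59, 84.86], 46.44),
    "momo/polyglot-ko-12.8b-Chat-QLoRA-Merge": ([35.49, 49.93, 25.97, 39.43, 77.70], 45.71),
    "KoT-platypus2-7B": ([38.05, 49.63, 34.68, 37.69, 68.08], 45.62),
}

# Assumed tolerance: reported averages are likely based on unrounded task scores.
TOLERANCE = 0.01


def mean(values):
    """Unweighted arithmetic mean of the task scores."""
    return sum(values) / len(values)


def check_averages(board, tol=TOLERANCE):
    """Return {model: (computed_mean, reported_average, within_tolerance)}."""
    results = {}
    for model, (tasks, reported) in board.items():
        computed = mean(tasks)
        results[model] = (computed, reported, abs(computed - reported) <= tol)
    return results


if __name__ == "__main__":
    for model, (computed, reported, ok) in check_averages(LEADERBOARD).items():
        print(f"{model}: computed {computed:.3f}, reported {reported:.2f}, ok={ok}")
```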

Implementation Code

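```python
### KO-Platypus
from transformers import AutoModelForCausalLM, AutoTokenizer
import torch

repo = "kyujinpy/KO-Platypus2-13B"

# Load the weights in half precision (about 2 bytes per parameter).
# device_map='auto' requires the `accelerate` package and places the
# weights on the available GPU(s), spilling over to CPU if needed.
CoT_llama = AutoModelForCausalLM.from_pretrained(
    repo,
    return_dict=True,
    torch_dtype=torch.float16,
    device_map='auto'
)
CoT_llama_tokenizer = AutoTokenizer.from_pretrained(repo)
```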
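Once the model and tokenizer are loaded, text can be generated with the standard `generate` API. This card does not document a prompt template, so the `### Instruction:` / `### Response:` layout below is an assumption modeled on the Alpaca-style template used by the original Platypus2 models; `build_prompt` and `generate_answer` are hypothetical helper names, and the sampling settings are illustrative rather than tuned.

```python
def build_prompt(instruction: str) -> str:
    """Wrap a user instruction in an Alpaca-style template.

    ASSUMED format: not confirmed for KO-Platypus2-13B.
    """
    return f"### Instruction:\n{instruction}\n\n### Response:\n"


def generate_answer(model, tokenizer, instruction: str, max_new_tokens: int = 256) -> str:
    """Generate a reply with an already-loaded causal LM and its tokenizer."""
    prompt = build_prompt(instruction)
    # Tokenize and move the input tensors to the model's (first) device.
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    output_ids = model.generate(
        **inputs,
        max_new_tokens=max_new_tokens,
        do_sample=True,   # illustrative sampling settings
        temperature=0.7,
        top_p=0.9,
    )
    # Drop the prompt tokens and decode only the newly generated continuation.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)


# Example, using the model and tokenizer objects loaded above
# ("What is the capital of Korea?"):
# print(generate_answer(model, tokenizer, "한국의 수도는 어디인가요?"))
```

Keeping the prompt construction in its own function makes it easy to swap in a different template if the KO-Platypus repository specifies one.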

README format based on kyujinpy/KoT-platypus2-7B.