1girl-EDM2-XS-test-1: From ImageNet to Danbooru in 1 GPU-day

1girl-EDM2-XS-test-1 is an experimental conditional image generation model for 1girl solo anime-styled art.

It is a modified EDM2-XS model that accepts pooled tagger features as the condition. It only serves as a proof of concept and has no intended practical use.

Model summary

- Parameters: 125M

- Forward GFLOPs: 46

- Dataset: ~1.2M 1girl solo images

- Image resolution: 512 * 704

- Effective batch size: 2000 (40 per micro-batch * 50 gradient accumulation steps)

- Learning rate: 1.2e-2 (linear decay)

- Training duration: ~11M images

- Training cost: ~17.5 4090-hours
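The summary numbers fit together as shown below. This is a minimal sketch with two assumptions, neither stated in the card:

- "50 steps" means 50 gradient-accumulation micro-steps.
- The linear decay runs from the peak learning rate to zero over the whole ~11M-image run, with no warmup.

```python
# Back-of-the-envelope numbers implied by the model summary.
# Assumptions (not stated in the card): "50 steps" = gradient-accumulation
# micro-steps, and the LR decays linearly from the peak to 0 with no warmup.

MICRO_BATCH = 40           # images per forward/backward pass
ACCUM_STEPS = 50           # micro-batches accumulated per optimizer step
PEAK_LR = 1.2e-2           # peak learning rate
TOTAL_IMAGES = 11_000_000  # "~11M images"
GPU_HOURS = 17.5           # "~17.5 4090-hours"

effective_batch = MICRO_BATCH * ACCUM_STEPS            # 2000 images per update
optimizer_steps = TOTAL_IMAGES // effective_batch       # 5500 updates
images_per_second = TOTAL_IMAGES / (GPU_HOURS * 3600)   # about 175 img/s


def linear_decay_lr(step: int, total_steps: int = optimizer_steps,
                    peak_lr: float = PEAK_LR) -> float:
    """Learning rate at an optimizer step under a plain linear decay to zero."""
    frac = min(max(step / total_steps, 0.0), 1.0)  # clamp progress to [0, 1]
    return peak_lr * (1.0 - frac)


if __name__ == "__main__":
    print(f"effective batch: {effective_batch}")
    print(f"optimizer steps: {optimizer_steps}")
    print(f"throughput: {images_per_second:.0f} img/s")
    for s in (0, optimizer_steps // 2, optimizer_steps):
        print(s, linear_decay_lr(s))
```

At roughly 175 images/s, the whole run stays under a single GPU-day (17.5 of 24 hours), which matches the title.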

Usage

Please refer to the accompanying Space for a demo and sample code. For those who don't want to wait 10 minutes for an image, a Colab demo is also available.

License

All weights and code inherit the CC BY-NC-SA 4.0 license from EDM2.

Context

There are good anime models based on open-weight models, but the way they work has always irked me. The main purpose of anime models is to create images from user specifications, which can be free-form text, or just a list of tags fed into the model as text.

I have no issues with encoding text with a text encoder, but encoding tags? That just feels wrong and inefficient. This leads to stuff such as:

- Multi-token tags creating long text strings that require BREAKing

- Some models requiring careful tag ordering, even though tags are by nature unordered

- Tags not working due to tag renaming

- Character names conflicting with general tags, causing concept bleeding

To be fair, text encoders are used to encode tags only because they are what come with the base models. But what if we don't actually need to rely on those web-scale model makers to decide what architecture we use to generate anime girls? People fine-tune models trained on ImageNet all the time, but AFAICT no one really fine-tunes ImageNet-pretrained models for image generation, which seems like a missed opportunity. If a model can make cats and dogs, it should be able to make anime girls. So I checked the top-performing ImageNet generation models and tested them on toy datasets before finally settling on the UNet-based EDM2 architecture.

Dataset & Training

For the dataset, I kept things simple and only took the subset of 1girl solo images from danbooru2023 that naturally resize to 512*704 (<~5% of the area cropped), plus some additional basic filters. Images with id % 1000 == 0 are used as the validation set, and those with id % 1000 == 1 as the test set. The tags need to be embedded into some space by something other than a text encoder. The proper way is probably to learn a representation with contrastive learning, but again for simplicity, I just used the pooled feature of a pretrained tagger, which is a 1024D vector for each image.
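The split and crop rules above can be sketched as follows.

- The id-based split is taken directly from the text.
- The crop check is a guess at what "naturally resize" means. It assumes each image is scaled to cover 512*704 and then cropped to that size.
- The cropped-area fraction is scale-invariant, so it does not matter whether it is measured before or after resizing.

```python
# Sketch of the dataset filters. The split rule is from the card; the
# cover-resize-then-crop interpretation of "naturally resize" is an assumption.

TARGET_W, TARGET_H = 512, 704  # 8:11 aspect ratio
MAX_CROPPED_FRACTION = 0.05    # "<~5% area cropped"


def split_for(post_id: int) -> str:
    """Assign a Danbooru post to a split by its id."""
    r = post_id % 1000
    if r == 0:
        return "val"
    if r == 1:
        return "test"
    return "train"


def cropped_fraction(width: int, height: int) -> float:
    """Fraction of image area lost when scaling to cover 512x704, then cropping to it."""
    scale = max(TARGET_W / width, TARGET_H / height)  # smallest scale that covers the target
    resized_area = (width * scale) * (height * scale)
    return 1.0 - (TARGET_W * TARGET_H) / resized_area


def keep_image(width: int, height: int) -> bool:
    """True if the image fits 512x704 with only a little cropping."""
    return cropped_fraction(width, height) < MAX_CROPPED_FRACTION
```

For example, a 1000*1400 image loses about 1.8% of its area and is kept. A square image loses 3/11 of its area (about 27%) and is dropped.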

All training was done on one 4090 GPU. At ~9M images trained, I noticed that the generated images lacked high-frequency details, and they didn't really get better with more training. I patched in conditioning dropout with p=5% and trained for a bit more. The results turned out better than I expected, so this is what you see here.
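Conditioning dropout of this kind can be sketched as below. During training, each sample's 1024D condition is swapped for a null condition with probability p = 0.05. The all-zeros null vector is an assumption: a learned null embedding is another common choice, and the card does not say which one the model uses.

```python
import numpy as np

COND_DIM = 1024  # pooled tagger feature size
P_DROP = 0.05    # conditioning dropout probability from the text


def drop_conditions(cond: np.ndarray, p: float, rng: np.random.Generator) -> np.ndarray:
    """Replace each sample's condition with a null (all-zeros, assumed) vector with probability p.

    cond: array of shape (batch, dim). Returns a new array; the input is not modified.
    """
    keep = rng.random(cond.shape[0]) >= p            # True -> keep the real condition
    return cond * keep[:, None].astype(cond.dtype)   # zero out the dropped rows
```

Training on some unconditional samples is also what makes classifier-free guidance possible at sampling time. The same network can then produce both conditional and unconditional predictions.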

Additionally, I prepared a tiny VAE that unconditionally generates 1024D vectors to use as conditions.
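Generating a condition with such a VAE amounts to sampling a latent from the prior and decoding it. The sketch below assumes that the three linear layers mentioned later in the card make up the decoder. Several other details are hypothetical:

- the latent size and hidden width;
- the SiLU activations;
- the NumPy setting.

The weights are random rather than trained, so the sketch only shows the data flow.

```python
import numpy as np

LATENT_DIM = 64   # hypothetical latent size
HIDDEN_DIM = 512  # hypothetical hidden width
COND_DIM = 1024   # pooled tagger feature size


def silu(x: np.ndarray) -> np.ndarray:
    return x / (1.0 + np.exp(-x))


class TinyConditionDecoder:
    """Hypothetical VAE decoder: three linear layers mapping a latent z to a 1024D condition."""

    def __init__(self, rng: np.random.Generator):
        dims = [LATENT_DIM, HIDDEN_DIM, HIDDEN_DIM, COND_DIM]
        # Random (untrained) weights scaled by 1/sqrt(fan_in)
        self.weights = [rng.standard_normal((i, o)) / np.sqrt(i) for i, o in zip(dims[:-1], dims[1:])]
        self.biases = [np.zeros(o) for o in dims[1:]]

    def __call__(self, z: np.ndarray) -> np.ndarray:
        h = z
        for k, (w, b) in enumerate(zip(self.weights, self.biases)):
            h = h @ w + b
            if k < len(self.weights) - 1:  # no activation after the output layer
                h = silu(h)
        return h


def sample_conditions(decoder: TinyConditionDecoder, n: int, rng: np.random.Generator) -> np.ndarray:
    """Unconditional generation: draw z from the VAE prior N(0, I) and decode it."""
    z = rng.standard_normal((n, LATENT_DIM))
    return decoder(z)
```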

Results & Observations

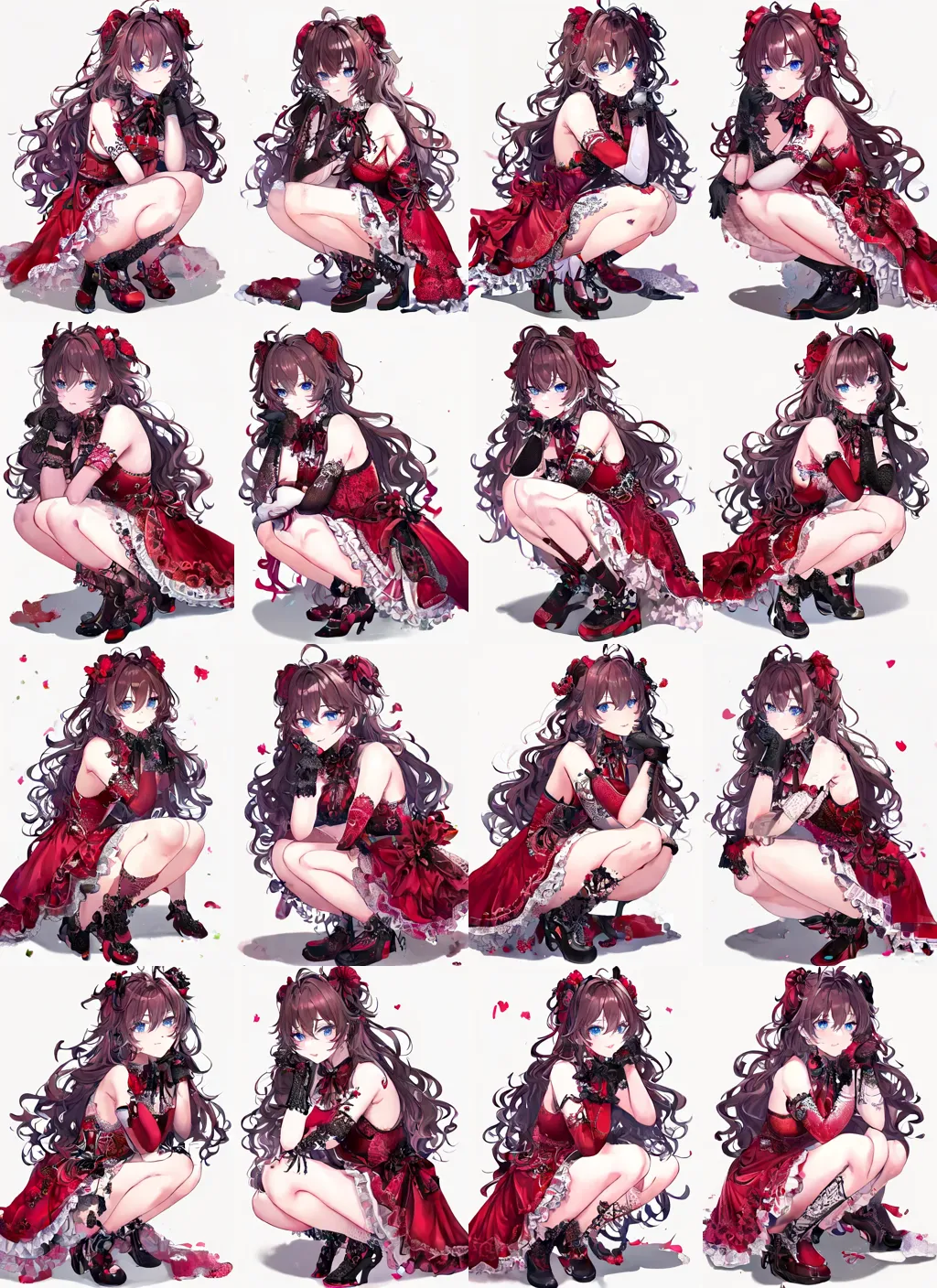

Note: The image conditions used for testing here are not from the training set. The sampling settings are 16 steps of dpmpp_2m with guidance=5. The conditions are cherry-picked to prevent explicit images; the generated images are not cherry-picked in any way.
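For readers unfamiliar with the settings, here is a sketch of what "16 steps of dpmpp_2m with guidance=5" usually means: DPM-Solver++(2M), as formulated in k-diffusion's `sample_dpmpp_2m`, combined with classifier-free guidance. The following are assumptions for illustration, not the demo's actual code:

- the noise schedule (Karras/EDM sigmas with sigma_min=0.002, sigma_max=80, rho=7);
- the null condition;
- the `denoise(x, sigma, cond)` signature.

```python
import math
import numpy as np


def karras_sigmas(n: int, sigma_min: float = 0.002, sigma_max: float = 80.0, rho: float = 7.0) -> np.ndarray:
    """Karras/EDM noise levels from sigma_max down to sigma_min, with a final 0 appended."""
    ramp = np.linspace(0.0, 1.0, n)
    inv_max, inv_min = sigma_max ** (1 / rho), sigma_min ** (1 / rho)
    sigmas = (inv_max + ramp * (inv_min - inv_max)) ** rho
    return np.append(sigmas, 0.0)


def cfg_denoiser(denoise, x, sigma, cond, null_cond, guidance):
    """Classifier-free guidance: extrapolate from the unconditional toward the conditional prediction."""
    d_uncond = denoise(x, sigma, null_cond)
    d_cond = denoise(x, sigma, cond)
    return d_uncond + guidance * (d_cond - d_uncond)


def sample_dpmpp_2m(denoise, x, sigmas, cond, null_cond, guidance=5.0):
    """DPM-Solver++(2M) in the k-diffusion formulation, with t = -log(sigma)."""
    old_denoised = None
    for i in range(len(sigmas) - 1):
        sigma, sigma_next = sigmas[i], sigmas[i + 1]
        denoised = cfg_denoiser(denoise, x, sigma, cond, null_cond, guidance)
        if sigma_next == 0:
            x = denoised  # last step: jump straight to the denoised estimate
        else:
            h = math.log(sigma) - math.log(sigma_next)  # step size t_next - t
            if old_denoised is None:
                d = denoised  # first step: first-order (DDIM-equivalent) update
            else:
                h_last = math.log(sigmas[i - 1]) - math.log(sigma)
                r = h_last / h
                # second-order correction from the previous denoised estimate
                d = (1 + 1 / (2 * r)) * denoised - (1 / (2 * r)) * old_denoised
            x = (sigma_next / sigma) * x - math.expm1(-h) * d
        old_denoised = denoised
    return x
```

With guidance, each of the 16 steps evaluates the model twice, once with and once without the condition.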

First off, I will not pretend that this model is better than existing models. It cannot create fine details and often struggles with anatomy. I consider it worse than the early SD1 models. It does adhere to prompts pretty well, which is the whole point. Almost a bit too well.

The tag encoding ended up being more specific than I expected. It appears to be able to provide more information than a list of tags, including some of the palette, the composition and the style. At a glance, it seems counterintuitive that a 1024-element vector can be as informative as a (77+ * 768+)-element tensor. But in retrospect it is kind of obvious, since a 1024D vector encodes a distribution of tags, whereas a list of tags is merely a sample from that distribution.
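The numbers and the distribution-versus-sample argument can be made concrete.

- The baseline uses SD1's CLIP text embedding (77 tokens * 768 channels).
- The tag probabilities are made-up values. For a typical tagger they would come from a classification head applied to the pooled feature, so the feature determines them.
- The threshold is likewise hypothetical.

```python
# Element counts compared in the text, with SD1's CLIP text embedding
# (77 tokens x 768 channels) as the "(77+ * 768+)" baseline.
TAG_VECTOR_ELEMS = 1024
TEXT_TENSOR_ELEMS = 77 * 768  # 59136, i.e. 57.75x more elements

# Toy illustration of "distribution vs. sample". The probabilities are made up.
THRESHOLD = 0.35  # hypothetical tagging threshold


def to_tag_list(tag_probs: dict[str, float], threshold: float = THRESHOLD) -> list[str]:
    """Collapse a tag distribution into the kind of tag list a user would type."""
    return sorted(tag for tag, p in tag_probs.items() if p >= threshold)


image_a = {"smile": 0.97, "outdoors": 0.40, "night": 0.30, "red_theme": 0.05}
image_b = {"smile": 0.55, "outdoors": 0.99, "night": 0.02, "red_theme": 0.34}

print(to_tag_list(image_a), to_tag_list(image_b))
```

Both toy images collapse to the same two-tag prompt, even though they disagree about how prominent each tag is. A vector that determines the probabilities keeps that difference.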

One might worry that conditioning only on tag encodings from images with an 8:11 aspect ratio could prevent the model from using encodings from wider images. Fortunately, the model is able to interpret the tags regardless of the aspect ratio (see the last two examples above).

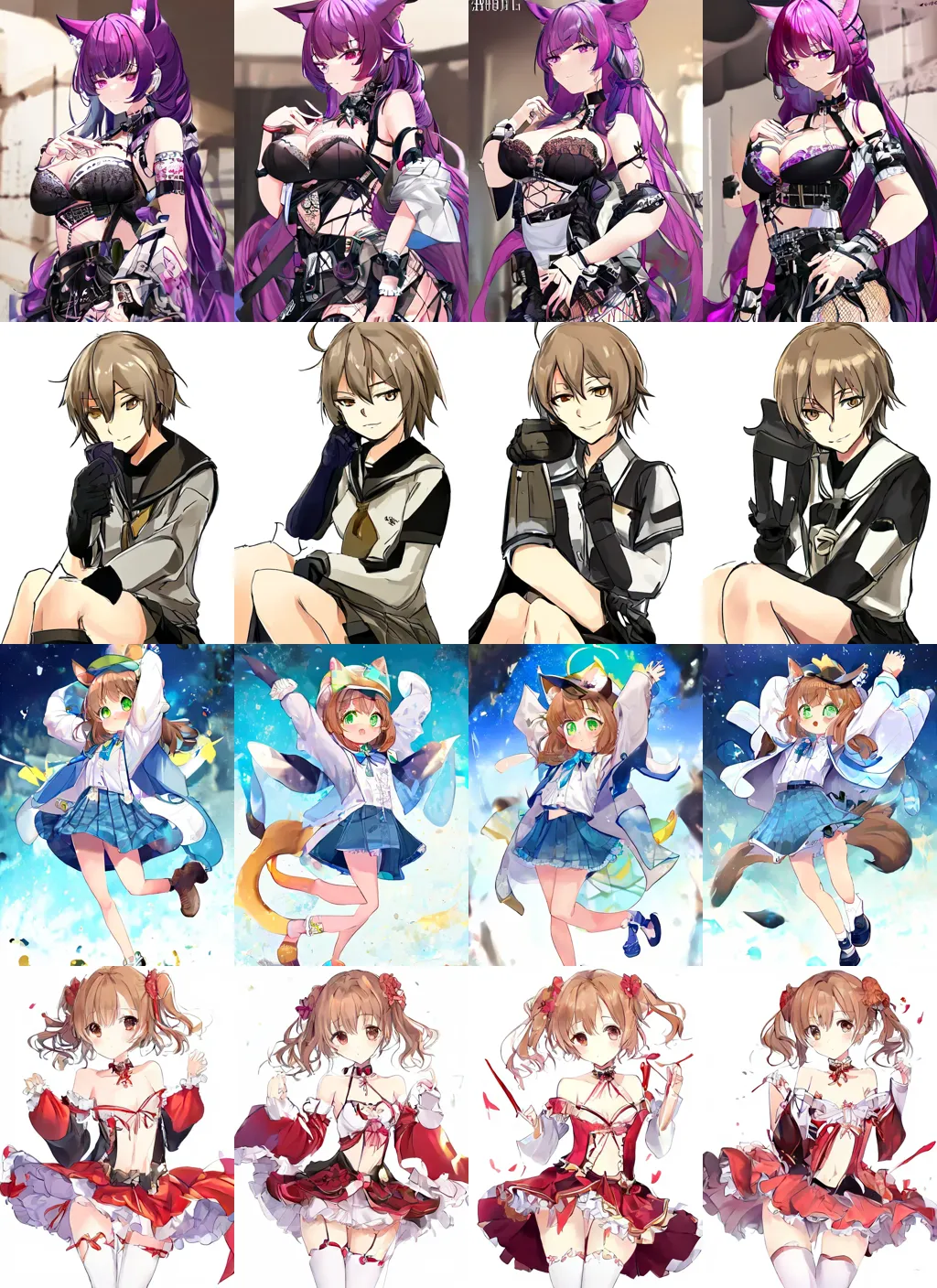

The tiny condition generator also works pretty nicely. It's pleasantly surprising to see how well a simple three-linear-layer model can synthesize coherent scenes, and how consistently the diffusion model renders them.

| Each row of 4 images shares the same VAE-generated tag condition. |
|---|

One last thing I want to note is how fast this little model runs. I'm getting an inference speed of 79 it/s @512x704 on my 4060 Ti machine without clever optimizations, which is >6 times as fast as SD1 models @512x512 according to benchmarks. This makes testing a breeze.
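The speed claim translates to per-image figures as follows. This assumes one "it" is one sampler step with both guidance passes included, which the card does not state.

```python
# Rough arithmetic behind the speed numbers.
# Assumption: one "it" = one sampler step, including both CFG model calls.
STEPS = 16            # sampler steps used for the sample grid
ITS_PER_SEC = 79.0    # measured on a 4060 Ti at 512x704
SPEEDUP_VS_SD1 = 6    # ">6 times" from the text

seconds_per_image = STEPS / ITS_PER_SEC             # about 0.2 s per image
implied_sd1_its = ITS_PER_SEC / SPEEDUP_VS_SD1      # SD1 @512x512 runs below ~13.2 it/s
pixel_ratio = (512 * 704) / (512 * 512)             # 1.375x more pixels per image

print(f"{seconds_per_image:.3f} s/image, SD1 < {implied_sd1_its:.1f} it/s, {pixel_ratio}x pixels")
```

Per pixel, the gap over SD1 is wider than the raw step rate suggests, since each 512x704 image has 1.375 times as many pixels as a 512x512 one.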

Conclusions & Next steps

Overall, I'm quite satisfied with the results. I kind of went in not expecting this to work, but it did achieve something. Most importantly, the results suggest that:

- Creating anime models does not require web-pretrained models; ImageNet models suffice

- Tag conditioning does not require text encoders or cross attention
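As an illustration of the second point, here is one way a 1024D tag vector can enter a UNet without cross-attention. It is modeled on how EDM2 injects ImageNet class labels: the condition is projected by a linear layer and blended with the noise-level embedding into a single per-sample vector.

The card does not describe the exact modification, so treat this as a hypothetical sketch. These parts are illustrative:

- the widths;
- the plain random linear weights (EDM2 uses weight-normalized layers);
- the assumption that the tag vector is roughly unit-variance.

```python
import numpy as np

COND_DIM = 1024    # pooled tagger feature size (from the card)
FOURIER_DIM = 256  # hypothetical
EMB_DIM = 512      # hypothetical


def mp_sum(a: np.ndarray, b: np.ndarray, t: float = 0.5) -> np.ndarray:
    """Magnitude-preserving blend: lerp(a, b, t) rescaled so unit-variance inputs stay unit-variance."""
    return ((1 - t) * a + t * b) / np.sqrt((1 - t) ** 2 + t ** 2)


def mp_silu(x: np.ndarray) -> np.ndarray:
    """SiLU divided by 0.596 to roughly preserve unit variance, as in EDM2."""
    return x / (1 + np.exp(-x)) / 0.596


class ConditionEmbedding:
    """Hypothetical per-sample embedding: noise level + pooled tag feature, no cross-attention."""

    def __init__(self, rng: np.random.Generator):
        # Random Fourier features of the noise level
        self.freqs = 2 * np.pi * rng.standard_normal(FOURIER_DIM)
        self.phases = 2 * np.pi * rng.random(FOURIER_DIM)
        # Plain linear projections scaled by 1/sqrt(fan_in)
        self.w_noise = rng.standard_normal((FOURIER_DIM, EMB_DIM)) / np.sqrt(FOURIER_DIM)
        self.w_cond = rng.standard_normal((COND_DIM, EMB_DIM)) / np.sqrt(COND_DIM)

    def __call__(self, sigma: np.ndarray, cond: np.ndarray) -> np.ndarray:
        """sigma: (batch,), cond: (batch, COND_DIM) -> embedding of shape (batch, EMB_DIM)."""
        c_noise = np.log(sigma) / 4  # EDM2-style noise conditioning input
        fourier = np.sqrt(2) * np.cos(np.outer(c_noise, self.freqs) + self.phases)
        emb_noise = fourier @ self.w_noise
        emb_cond = cond @ self.w_cond  # takes the place of EDM2's one-hot class label
        return mp_silu(mp_sum(emb_noise, emb_cond, t=0.5))
```

EDM2's residual blocks turn this embedding into per-channel scales for their feature maps. The condition therefore costs one small matrix multiply per block, rather than attention over a token sequence.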

The obvious next step is to continue training with more images or perhaps to scale up the model; however, I don't really have the compute resources for all that. Beyond that, the first problem I'd like to address is adding actual conditional generation of conditions, so the model can be used like conventional models. After that, there are still things related to image conditioning that I'd like to see fixed, for example content-style-quality interactions and character/outfit conditioning in general.