PEFT documentation

LoRA


This conceptual guide gives a brief overview of LoRA, a technique that accelerates the fine-tuning of large models while consuming less memory.

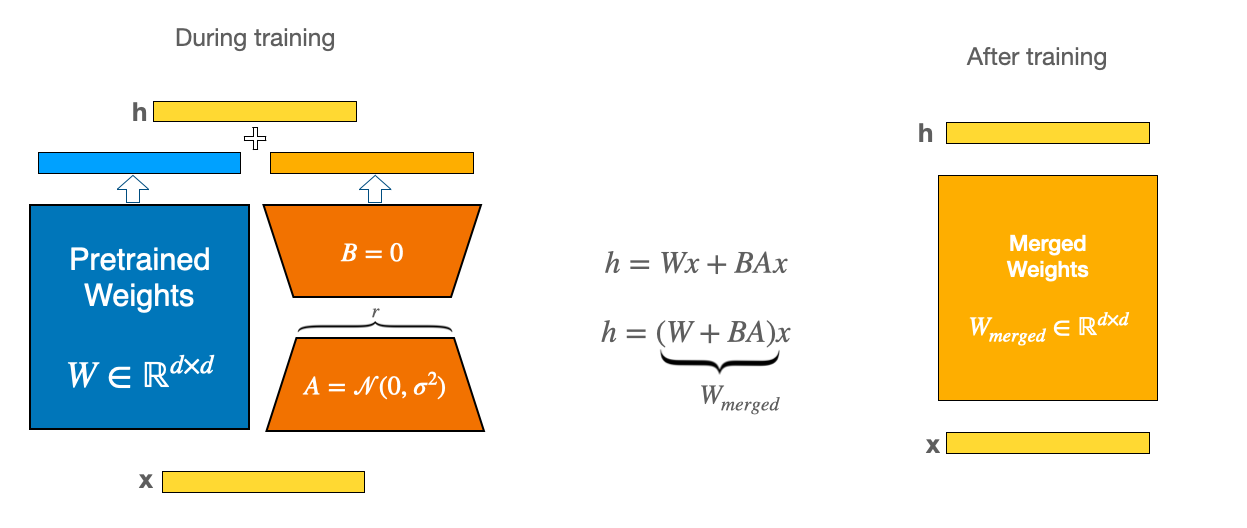

To make fine-tuning more efficient, LoRA’s approach is to represent the weight updates with two smaller matrices (called update matrices) through low-rank decomposition. These new matrices can be trained to adapt to the new data while keeping the overall number of changes low. The original weight matrix remains frozen and doesn’t receive any further adjustments. To produce the final results, both the original and the adapted weights are combined.
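The idea can be sketched in a few lines of NumPy. This is a minimal illustration, not PEFT's implementation. The shapes d, k and r, the value of lora_alpha and the helper name lora_forward are assumptions chosen for the example. The frozen weight W is never modified. Only the two small matrices A (shape r x k) and B (shape d x r) would be trained, and their scaled product is added to the output of the frozen path.

```python
import numpy as np

rng = np.random.default_rng(0)

d, k, r = 64, 32, 4          # output dim, input dim, LoRA rank (illustrative values)
lora_alpha = 8
scaling = lora_alpha / r     # standard LoRA scaling factor

W = rng.standard_normal((d, k))           # frozen pre-trained weight
A = rng.standard_normal((r, k)) * 0.01    # trainable "down" projection (r x k)
B = np.zeros((d, r))                      # trainable "up" projection (d x r), starts at zero

def lora_forward(x: np.ndarray) -> np.ndarray:
    """Frozen path plus low-rank update path: W x + scaling * B (A x)."""
    return W @ x + scaling * (B @ (A @ x))

x = rng.standard_normal(k)
h = lora_forward(x)
# Because B starts at zero, the adapted layer initially behaves exactly like W.
print(np.allclose(h, W @ x))
```

Computing B (A x) rather than forming B @ A first keeps the extra work proportional to r, which is small compared with d and k.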

This approach has a number of advantages:

- LoRA makes fine-tuning more efficient by drastically reducing the number of trainable parameters.

- The original pre-trained weights are kept frozen, which means you can have multiple lightweight and portable LoRA models for various downstream tasks built on top of them.

- LoRA is orthogonal to many other parameter-efficient methods and can be combined with many of them.

- Performance of models fine-tuned using LoRA is comparable to the performance of fully fine-tuned models.

- LoRA does not add any inference latency because adapter weights can be merged with the base model.

In principle, LoRA can be applied to any subset of weight matrices in a neural network to reduce the number of trainable parameters. However, for simplicity and further parameter efficiency, in Transformer models LoRA is typically applied to attention blocks only. The resulting number of trainable parameters in a LoRA model depends on the size of the low-rank update matrices, which is determined mainly by the rank r and the shape of the original weight matrix.
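The dependence on r and on the weight shape can be made concrete with a little arithmetic. For a d x k weight matrix, full fine-tuning trains d * k values, while a rank-r update trains B (d x r) and A (r x k), that is r * (d + k) values. The sketch below assumes a square 4096 x 4096 projection purely as an illustrative size. The function names are hypothetical helpers, not PEFT functions.

```python
def full_params(d: int, k: int) -> int:
    """Trainable parameters when fully fine-tuning one d x k weight matrix."""
    return d * k

def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for a rank-r LoRA update: B is d x r, A is r x k."""
    return d * r + r * k

d = k = 4096   # illustrative size of one square attention projection
for r in (4, 8, 16):
    ratio = lora_params(d, k, r) / full_params(d, k)
    print(f"r={r:>2}: {lora_params(d, k, r):>7} vs {full_params(d, k)} trainable ({ratio:.2%})")
```

Doubling r doubles the number of LoRA parameters, but even at r=16 the update stays far below one percent of the full matrix in this example.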

Merge LoRA weights into the base model

While LoRA is significantly smaller and faster to train, you may encounter latency issues during inference due to separately loading the base model and the LoRA model. To eliminate latency, use the merge_and_unload() function to merge the adapter weights with the base model, which allows you to use the newly merged model as a standalone model.

This works because during training, the smaller weight matrices (A and B in the diagram above) are kept separate. Once training is complete, their scaled product can be added to the original weights, producing a single new weight matrix that gives identical outputs to the separate base-plus-adapter computation.
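The equivalence behind merging can be checked numerically. The NumPy sketch below is an illustration of the math only, not the code behind merge_and_unload(). A and B are random stand-ins for trained matrices, and scaling is an assumed value of lora_alpha/r. It compares the unmerged computation (base path plus adapter path) with a single matmul against W + scaling * (B @ A).

```python
import numpy as np

rng = np.random.default_rng(1)
d, k, r = 16, 8, 2
scaling = 2.0   # stands in for lora_alpha / r (illustrative value)

W = rng.standard_normal((d, k))   # frozen base weight
A = rng.standard_normal((r, k))   # "trained" LoRA matrices (random here for illustration)
B = rng.standard_normal((d, r))

x = rng.standard_normal((5, k))   # a batch of 5 inputs, one per row

# Unmerged: the base path and the adapter path are computed separately at inference time.
unmerged = x @ W.T + scaling * (x @ A.T @ B.T)

# Merged: fold the low-rank update into one dense matrix with the same shape as W.
W_merged = W + scaling * (B @ A)
merged = x @ W_merged.T

print(np.allclose(unmerged, merged))
```

Because W_merged has exactly the same shape as W, the merged model runs the same number of matmuls as the original base model, which is why merging removes the extra inference latency.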

Utils for LoRA

Use merge_adapter() to merge the LoRA layers into the base model while retaining the PeftModel. This makes it easier to later unmerge, delete, or load different adapters.

Use unmerge_adapter() to unmerge the LoRA layers from the base model while retaining the PeftModel. This makes it easier to later merge, delete, or load different adapters.

Use unload() to get back the base model without merging the active LoRA modules. This is useful when you want to reset the model to its original pretrained state, for example in Stable Diffusion WebUI when the user wants to run inference with the base model after trying out LoRAs.

Use delete_adapter() to delete an existing adapter.

Use add_weighted_adapter() to combine multiple LoRAs into a new adapter based on a user-provided weighting scheme.
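The bookkeeping behind merging and unmerging can be illustrated with a toy layer. ToyLoraLinear is a hypothetical NumPy class written for this illustration. It is not part of PEFT and ignores details such as multiple adapters, dropout and dtype handling. It only shows that merging adds the scaled B @ A product into the base weight, unmerging subtracts it again, and the adapter matrices are kept around the whole time so the two operations can be repeated.

```python
import numpy as np

class ToyLoraLinear:
    """Hypothetical toy layer illustrating merge/unmerge semantics (not PEFT code)."""

    def __init__(self, W, A, B, scaling):
        self.W = W.copy()        # base weight, modified in place while merged
        self.A, self.B = A, B    # adapter matrices are kept even after merging
        self.scaling = scaling
        self.merged = False

    def delta(self):
        return self.scaling * (self.B @ self.A)

    def merge(self):
        if not self.merged:
            self.W += self.delta()
            self.merged = True

    def unmerge(self):
        if self.merged:
            self.W -= self.delta()
            self.merged = False

    def forward(self, x):
        out = self.W @ x
        if not self.merged:      # the adapter path is only needed while unmerged
            out = out + self.delta() @ x
        return out

rng = np.random.default_rng(2)
W0 = rng.standard_normal((6, 4))
layer = ToyLoraLinear(W0, rng.standard_normal((2, 4)), rng.standard_normal((6, 2)), scaling=0.5)
x = rng.standard_normal(4)

before = layer.forward(x)
layer.merge()                    # adapter folded into W: one matmul at inference
after_merge = layer.forward(x)
layer.unmerge()                  # W restored, up to floating-point rounding
print(np.allclose(before, after_merge), np.allclose(layer.W, W0))
```

Since unmerging subtracts the same product that merging added, the restored weight matches the original only up to floating-point rounding, which is why the comparison uses np.allclose.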

Common LoRA parameters in PEFT

As with other methods supported by PEFT, to fine-tune a model using LoRA, you need to:

- Instantiate a base model.

- Create a configuration (LoraConfig) where you define LoRA-specific parameters.

- Wrap the base model with get_peft_model() to get a trainable PeftModel.

- Train the PeftModel as you normally would train the base model.
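The four steps above can be mirrored on a toy problem to show what "train as you normally would" means when only the adapter is trainable. This is a hypothetical NumPy sketch, not the PEFT API. The synthetic task (a target whose ideal weight change is exactly rank r), the learning rate and the hand-derived gradients are all assumptions for the demo. The frozen matrix W is never updated; gradient descent touches only A and B.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d, k, r = 64, 8, 6, 2
lora_alpha = 2
scaling = lora_alpha / r

# Step 1, "base model": a frozen weight matrix.
W = rng.standard_normal((d, k))
W_frozen_copy = W.copy()

# Synthetic task whose ideal update is exactly rank r (assumption for the demo).
delta_true = 0.5 * rng.standard_normal((d, r)) @ rng.standard_normal((r, k))
X = rng.standard_normal((n, k))
Y = X @ (W + delta_true).T

# Steps 2-3, "configure and wrap": create the trainable low-rank matrices.
A = 0.5 * rng.standard_normal((r, k))
B = np.zeros((d, r))

def loss_and_grads(A, B):
    U = X @ A.T                          # (n, r)
    H = X @ W.T + scaling * U @ B.T      # (n, d): frozen path + LoRA path
    R = H - Y
    loss = np.sum(R ** 2) / n
    G = 2.0 * R / n                      # dLoss/dH
    grad_B = scaling * G.T @ U           # (d, r)
    grad_A = scaling * B.T @ G.T @ X     # (r, k)
    return loss, grad_A, grad_B

# Step 4, "train": an ordinary gradient-descent loop that updates only A and B.
lr = 0.01
losses = []
for step in range(500):
    loss, grad_A, grad_B = loss_and_grads(A, B)
    losses.append(loss)
    A -= lr * grad_A
    B -= lr * grad_B

print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
print("W unchanged:", np.array_equal(W, W_frozen_copy))
```

Note that with B initialized to zero, the gradient for A is zero on the first step; B moves first, after which both matrices are updated together.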

LoraConfig allows you to control how LoRA is applied to the base model through the following parameters:

- r: the rank of the update matrices, expressed in int. Lower rank results in smaller update matrices with fewer trainable parameters.

- target_modules: the modules (for example, attention blocks) to apply the LoRA update matrices to.

- lora_alpha: LoRA scaling factor.

- bias: specifies if the bias parameters should be trained. Can be 'none', 'all' or 'lora_only'.

- use_rslora: when set to True, uses Rank-Stabilized LoRA, which sets the adapter scaling factor to lora_alpha/math.sqrt(r), since it has been shown to work better. Otherwise, it uses the original default value of lora_alpha/r.

- modules_to_save: list of modules apart from LoRA layers to be set as trainable and saved in the final checkpoint. These typically include the model's custom head that is randomly initialized for the fine-tuning task.

- layers_to_transform: list of layers to be transformed by LoRA. If not specified, all layers in target_modules are transformed.

- layers_pattern: pattern to match layer names in target_modules, if layers_to_transform is specified. By default, PeftModel looks at common layer patterns (layers, h, blocks, etc.); use this parameter for exotic and custom models.

- rank_pattern: the mapping from layer names or regexp expressions to ranks which differ from the default rank specified by r.

- alpha_pattern: the mapping from layer names or regexp expressions to alphas which differ from the default alpha specified by lora_alpha.
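As a rough illustration, a LoraConfig combining several of these parameters might look like the following. The values are arbitrary, and the module names q_proj and v_proj are assumptions that only match architectures which name their attention projections that way; inspecting the base model's named_modules() is one way to find the right names. The rank_pattern and alpha_pattern entries are a hypothetical override showing how one module type could get a different rank and alpha.

```python
from peft import LoraConfig

# Module names such as "q_proj"/"v_proj" are assumptions: they depend on the
# architecture of the base model being adapted.
config = LoraConfig(
    r=8,                                  # rank of the update matrices
    lora_alpha=16,                        # scaling is lora_alpha / r = 2.0 here
    target_modules=["q_proj", "v_proj"],  # where to inject the LoRA update matrices
    lora_dropout=0.05,
    bias="none",                          # do not train any bias parameters
    use_rslora=False,                     # True would use lora_alpha / math.sqrt(r)
    modules_to_save=None,                 # e.g. a freshly initialized classification head
    rank_pattern={"v_proj": 16},          # hypothetical override: larger rank for v_proj
    alpha_pattern={"v_proj": 32},         # matching alpha override for v_proj
)
print(config.r, config.lora_alpha)
```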

LoRA examples

For an example of LoRA method application to various downstream tasks, please refer to the following guides:

While the original paper focuses on language models, the technique can be applied to any dense layers in deep learning models. As such, you can leverage this technique with diffusion models. See the DreamBooth fine-tuning with LoRA task guide for an example.

Initialization options

The initialization of LoRA weights is controlled by the parameter init_lora_weights of the LoraConfig. By default, PEFT initializes LoRA weights the same way as the reference implementation, i.e. using Kaiming-uniform for weight A and initializing weight B as zeros, resulting in an identity transform.

It is also possible to pass init_lora_weights="gaussian". As the name suggests, this results in initializing weight A with a Gaussian distribution (weight B is still zeros). This corresponds to the way that diffusers initializes LoRA weights.
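The two initialization schemes can be imitated in NumPy to see why both start from an identity transform. This is a hedged sketch, not PEFT's code, and init_lora is a hypothetical helper. For the default scheme it assumes Kaiming-uniform in the style of torch.nn.init.kaiming_uniform_ with a=math.sqrt(5), which reduces to a uniform range of plus or minus 1/sqrt(fan_in). For the "gaussian" scheme it assumes a standard deviation of 1/r for A; the PEFT source is the authority on the exact value. In both cases B is zero, so B @ A is zero and the adapted layer initially equals the base layer.

```python
import math
import numpy as np

rng = np.random.default_rng(0)

def init_lora(d, k, r, mode="default"):
    """Hypothetical re-creation of the two init schemes (assumptions noted inline)."""
    if mode == "default":
        # Assumed Kaiming-uniform with a=sqrt(5): U(-1/sqrt(fan_in), 1/sqrt(fan_in)),
        # where fan_in = k for an r x k matrix A.
        bound = 1.0 / math.sqrt(k)
        A = rng.uniform(-bound, bound, size=(r, k))
    elif mode == "gaussian":
        # Assumption: standard deviation of 1/r for A.
        A = rng.normal(0.0, 1.0 / r, size=(r, k))
    else:
        raise ValueError(f"unknown mode: {mode}")
    B = np.zeros((d, r))   # B starts at zero in both schemes
    return A, B

for mode in ("default", "gaussian"):
    A, B = init_lora(d=16, k=8, r=4, mode=mode)
    print(mode, "initial update is zero:", np.allclose(B @ A, 0.0))
```

Because only A is random, the choice between the two schemes affects how training proceeds, not the model's behavior at step zero.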

When quantizing the base model, e.g. for QLoRA training, consider using the LoftQ initialization, which has been shown to improve the performance with quantization. The idea is that the LoRA weights are initialized such that the quantization error is minimized. To use this option, do not quantize the base model. Instead, proceed as follows:

```python
from transformers import AutoModelForCausalLM
from peft import LoftQConfig, LoraConfig, get_peft_model

base_model = AutoModelForCausalLM.from_pretrained(...)  # don't quantize here
loftq_config = LoftQConfig(loftq_bits=4, ...)  # set 4bit quantization
lora_config = LoraConfig(..., init_lora_weights="loftq", loftq_config=loftq_config)
peft_model = get_peft_model(base_model, lora_config)
```

There is also an option to set init_lora_weights=False. When choosing this option, the LoRA weights are initialized such that they do not result in an identity transform. This is useful for debugging and testing purposes and should not be used otherwise.

Finally, the LoRA architecture scales each adapter during every forward pass by a fixed scalar, which is set at initialization and depends on the rank r. Although the original LoRA method uses the scalar lora_alpha/r, the Rank-Stabilized LoRA research shows that using lora_alpha/math.sqrt(r) instead stabilizes the adapters and unlocks the increased performance potential from higher ranks. Set use_rslora=True to use the rank-stabilized scaling lora_alpha/math.sqrt(r).
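The difference between the two scaling rules is easy to see numerically. The helper lora_scaling below is hypothetical and simply mirrors the two formulas above, with lora_alpha=16 as an arbitrary example value. With the original rule the scalar shrinks in proportion to 1/r, so a higher-rank adapter's update is damped much more strongly; the rank-stabilized rule shrinks only as 1/sqrt(r).

```python
import math

def lora_scaling(lora_alpha: float, r: int, use_rslora: bool = False) -> float:
    """Scalar applied to B @ A: lora_alpha / r, or lora_alpha / sqrt(r) with rsLoRA."""
    return lora_alpha / math.sqrt(r) if use_rslora else lora_alpha / r

for r in (4, 16, 64, 256):
    print(f"r={r:>3}  original: {lora_scaling(16, r):<7}  rslora: {lora_scaling(16, r, use_rslora=True)}")
```

Going from r=4 to r=256 divides the original scalar by 64 but the rank-stabilized scalar only by 8.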