license: apache-2.0
task_categories:
- tabular-regression
- tabular-classification
- question-answering
language:
- en
tags:
- user modelling
- trust
size_categories:
- 10K<n<100K
configs:
- config_name: data
  data_files: data.jsonl

This dataset is a slightly edited version of the one released on GitHub. It contains the user interactions, such as the users' bet values and answer correctness. Please contact the authors if you have any questions.
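The `configs` entry points at a single `data.jsonl` file, which stores one JSON record per line. Below is a minimal sketch for reading it with only the Python standard library. It assumes you have a local copy of the file. The card does not list the field names, so the code does not rely on any particular keys. `load_jsonl` is a hypothetical helper name.

```python
import json
from pathlib import Path


def load_jsonl(path):
    """Read a JSON Lines file into a list of dicts, skipping blank lines."""
    records = []
    with Path(path).open(encoding="utf-8") as handle:
        for line in handle:
            line = line.strip()
            if line:  # tolerate empty lines, e.g. a trailing newline at EOF
                records.append(json.loads(line))
    return records
```

For example, `rows = load_jsonl("data.jsonl")` followed by `rows[0].keys()` shows which fields each interaction record actually carries. If you prefer the Hugging Face `datasets` library, `load_dataset("json", data_files="data.jsonl")` reads the same file.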

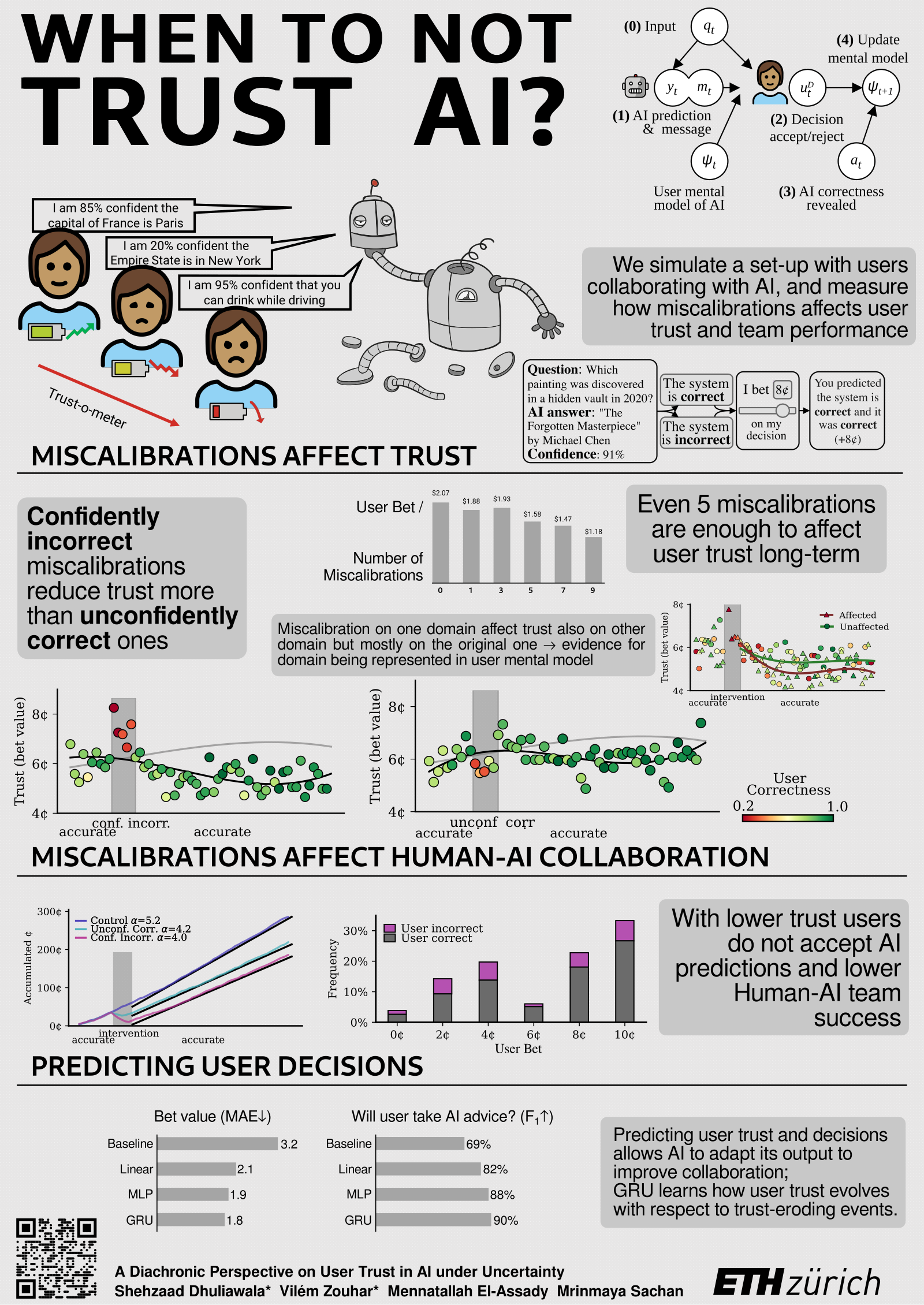

A Diachronic Perspective on User Trust in AI under Uncertainty

Abstract: In a human-AI collaboration, users build a mental model of the AI system based on its veracity and how it presents its decision, e.g. its presentation of system confidence and an explanation of the output. However, modern NLP systems are often uncalibrated, resulting in confidently incorrect predictions that undermine user trust. In order to build trustworthy AI, we must understand how user trust is developed and how it can be regained after potential trust-eroding events. We study the evolution of user trust in response to these trust-eroding events using a betting game as the users interact with the AI. We find that even a few incorrect instances with inaccurate confidence estimates can substantially damage user trust and performance, with very slow recovery. We also show that this degradation in trust can reduce the success of human-AI collaboration and that different types of miscalibration (unconfidently correct and confidently incorrect) have different negative effects on user trust. Our findings highlight the importance of calibration in user-facing AI applications and shed light on what aspects help users decide whether to trust the system.
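The abstract distinguishes two kinds of miscalibration. The following is a minimal sketch of how a single instance could be labelled. It assumes each instance has an AI confidence in [0, 1] and a boolean correctness flag. It also uses a hypothetical threshold of 0.5 to decide what counts as "confident", which is not a value taken from the paper. `miscalibration_type` is an illustrative name, not part of the dataset.

```python
from typing import Optional


def miscalibration_type(confidence: float, correct: bool,
                        threshold: float = 0.5) -> Optional[str]:
    """Label one AI prediction by whether its confidence matches its correctness.

    `threshold` is a hypothetical cut-off separating "confident" from
    "unconfident"; the paper's own criteria may differ.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must lie in [0, 1]")
    confident = confidence >= threshold
    if confident and not correct:
        return "confidently incorrect"
    if not confident and correct:
        return "unconfidently correct"
    return None  # stated confidence is consistent with the outcome
```

For example, `miscalibration_type(0.9, False)` yields `"confidently incorrect"`, and `miscalibration_type(0.2, True)` yields `"unconfidently correct"`.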

This work was presented at EMNLP 2023; the paper is available in the ACL Anthology at the URL given in the citation below. It was written by Shehzaad Dhuliawala, Vilém Zouhar, Mennatallah El-Assady, and Mrinmaya Sachan of the Department of Computer Science, ETH Zurich.

@inproceedings{dhuliawala-etal-2023-diachronic,
    title = "A Diachronic Perspective on User Trust in {AI} under Uncertainty",
    author = "Dhuliawala, Shehzaad and
      Zouhar, Vil{\'e}m and
      El-Assady, Mennatallah and
      Sachan, Mrinmaya",
    booktitle = "Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing",
    month = dec,
    year = "2023",
    address = "Singapore",
    publisher = "Association for Computational Linguistics",
    url = "https://aclanthology.org/2023.emnlp-main.339",
    doi = "10.18653/v1/2023.emnlp-main.339",
    pages = "5567--5580"
}

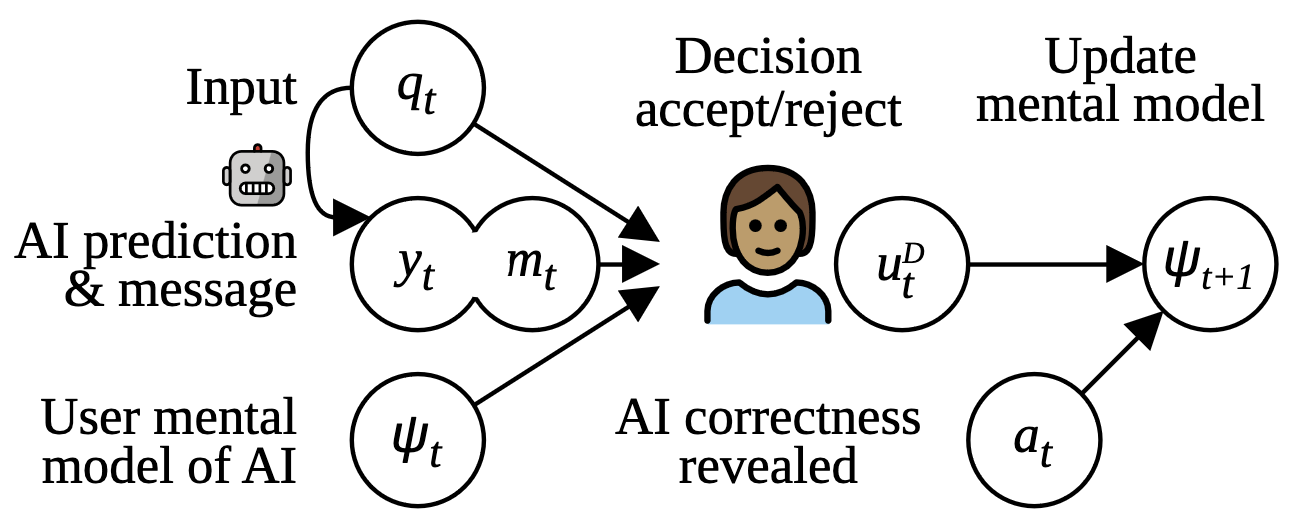

Figure 1: Diachronic view of a typical human-AI collaborative setting.

At each timestep $t$, the user relies on their prior mental model $\psi_t$ to accept or reject the AI system's answer $y_t$. The answer is accompanied by a message $m_t$ conveying the AI's confidence. The user then updates their mental model of the AI system to $\psi_{t+1}$. If the answer is rejected, the user invokes a fallback process to provide a different answer.
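The following toy simulation illustrates the loop in Figure 1. It is not the model from the paper. Purely for illustration, it makes three assumptions, each with hypothetical parameters:

- The mental model ψ_t is reduced to a single trust score in [0, 1].
- The user accepts y_t when trust multiplied by the stated confidence m_t reaches a threshold (default 0.4).
- After each step, the user learns whether y_t was correct, and trust moves toward 1 or 0 at a fixed rate (default 0.2).

`Step` and `simulate_trust` are illustrative names.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Step:
    confidence: float  # m_t: the AI's stated confidence in its answer y_t
    correct: bool      # whether y_t was correct (assumed revealed after each step)


def simulate_trust(steps: List[Step], trust: float = 0.5,
                   threshold: float = 0.4, rate: float = 0.2) -> List[Tuple[bool, float]]:
    """Toy version of the Figure 1 loop with psi_t reduced to a scalar trust score.

    Returns, for each step, whether the AI answer was accepted and the updated trust.
    """
    history = []
    for step in steps:
        # Accept y_t if trust scaled by the stated confidence is high enough;
        # otherwise the user would fall back to answering on their own.
        accepted = trust * step.confidence >= threshold
        # Update psi_t -> psi_{t+1}: exponential moving average toward 1 after a
        # correct answer and toward 0 after an incorrect one.
        target = 1.0 if step.correct else 0.0
        trust = trust + rate * (target - trust)
        history.append((accepted, trust))
    return history
```

With the default parameters, one confidently incorrect answer (`Step(0.9, False)`) at the start lowers trust from 0.5 to 0.4. This is enough that the next answer at the same confidence is rejected, because 0.4 × 0.9 = 0.36 < 0.4. The study itself measures trust through a betting game rather than through a fixed update rule like this one.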

Resources