url | repository_url | labels_url | comments_url | events_url | html_url | id | node_id | number | title | user | labels | state | locked | assignee | assignees | milestone | comments | created_at | updated_at | closed_at | author_association | active_lock_reason | draft | pull_request | body | reactions | timeline_url | performed_via_github_app | is_pull_request |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/datasets/issues/3591 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3591/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3591/comments | https://api.github.com/repos/huggingface/datasets/issues/3591/events | https://github.com/huggingface/datasets/pull/3591 | 1,106,928,613 | PR_kwDODunzps4xNDoB | 3,591 | Add support for time, date, duration, and decimal dtypes | {

"login": "mariosasko",

"id": 47462742,

"node_id": "MDQ6VXNlcjQ3NDYyNzQy",

"avatar_url": "https://avatars.githubusercontent.com/u/47462742?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/mariosasko",

"html_url": "https://github.com/mariosasko",

"followers_url": "https://api.github.com/users/mariosasko/followers",

"following_url": "https://api.github.com/users/mariosasko/following{/other_user}",

"gists_url": "https://api.github.com/users/mariosasko/gists{/gist_id}",

"starred_url": "https://api.github.com/users/mariosasko/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mariosasko/subscriptions",

"organizations_url": "https://api.github.com/users/mariosasko/orgs",

"repos_url": "https://api.github.com/users/mariosasko/repos",

"events_url": "https://api.github.com/users/mariosasko/events{/privacy}",

"received_events_url": "https://api.github.com/users/mariosasko/received_events",

"type": "User",

"site_admin": false

} | [] | open | false | null | [] | null | [] | 1,642,513,565,000 | 1,642,513,565,000 | null | CONTRIBUTOR | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3591",

"html_url": "https://github.com/huggingface/datasets/pull/3591",

"diff_url": "https://github.com/huggingface/datasets/pull/3591.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3591.patch",

"merged_at": null

} | Add support for the pyarrow time (maps to `datetime.time` in Python), date (maps to `datetime.date` in Python), duration (maps to `datetime.timedelta` in Python), and decimal (maps to `decimal.Decimal` in Python) dtypes. This should be helpful when writing scripts for time-series datasets. | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3591/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3591/timeline | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3590 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3590/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3590/comments | https://api.github.com/repos/huggingface/datasets/issues/3590/events | https://github.com/huggingface/datasets/pull/3590 | 1,106,784,860 | PR_kwDODunzps4xMlGg | 3,590 | Update README.md | {

"login": "borgr",

"id": 6416600,

"node_id": "MDQ6VXNlcjY0MTY2MDA=",

"avatar_url": "https://avatars.githubusercontent.com/u/6416600?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/borgr",

"html_url": "https://github.com/borgr",

"followers_url": "https://api.github.com/users/borgr/followers",

"following_url": "https://api.github.com/users/borgr/following{/other_user}",

"gists_url": "https://api.github.com/users/borgr/gists{/gist_id}",

"starred_url": "https://api.github.com/users/borgr/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/borgr/subscriptions",

"organizations_url": "https://api.github.com/users/borgr/orgs",

"repos_url": "https://api.github.com/users/borgr/repos",

"events_url": "https://api.github.com/users/borgr/events{/privacy}",

"received_events_url": "https://api.github.com/users/borgr/received_events",

"type": "User",

"site_admin": false

} | [] | open | false | null | [] | null | [] | 1,642,504,973,000 | 1,642,504,973,000 | null | NONE | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3590",

"html_url": "https://github.com/huggingface/datasets/pull/3590",

"diff_url": "https://github.com/huggingface/datasets/pull/3590.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3590.patch",

"merged_at": null

} | Update license and little things concerning ANLI | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3590/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3590/timeline | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3589 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3589/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3589/comments | https://api.github.com/repos/huggingface/datasets/issues/3589/events | https://github.com/huggingface/datasets/pull/3589 | 1,106,766,114 | PR_kwDODunzps4xMhGp | 3,589 | Pin torchmetrics to fix the COMET test | {

"login": "lhoestq",

"id": 42851186,

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/lhoestq",

"html_url": "https://github.com/lhoestq",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [] | 1,642,503,829,000 | 1,642,503,896,000 | 1,642,503,895,000 | MEMBER | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3589",

"html_url": "https://github.com/huggingface/datasets/pull/3589",

"diff_url": "https://github.com/huggingface/datasets/pull/3589.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3589.patch",

"merged_at": 1642503895000

} | Torchmetrics 0.7.0 got released and has issues with `transformers` (see https://github.com/PyTorchLightning/metrics/issues/770)

I'm pinning it to 0.6.0 in the CI, since 0.7.0 makes the COMET metric test fail. COMET requires torchmetrics==0.6.0 anyway. | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3589/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3589/timeline | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3588 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3588/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3588/comments | https://api.github.com/repos/huggingface/datasets/issues/3588/events | https://github.com/huggingface/datasets/pull/3588 | 1,106,749,000 | PR_kwDODunzps4xMdiC | 3,588 | Update README.md | {

"login": "borgr",

"id": 6416600,

"node_id": "MDQ6VXNlcjY0MTY2MDA=",

"avatar_url": "https://avatars.githubusercontent.com/u/6416600?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/borgr",

"html_url": "https://github.com/borgr",

"followers_url": "https://api.github.com/users/borgr/followers",

"following_url": "https://api.github.com/users/borgr/following{/other_user}",

"gists_url": "https://api.github.com/users/borgr/gists{/gist_id}",

"starred_url": "https://api.github.com/users/borgr/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/borgr/subscriptions",

"organizations_url": "https://api.github.com/users/borgr/orgs",

"repos_url": "https://api.github.com/users/borgr/repos",

"events_url": "https://api.github.com/users/borgr/events{/privacy}",

"received_events_url": "https://api.github.com/users/borgr/received_events",

"type": "User",

"site_admin": false

} | [] | open | false | null | [] | null | [] | 1,642,502,775,000 | 1,642,502,775,000 | null | NONE | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3588",

"html_url": "https://github.com/huggingface/datasets/pull/3588",

"diff_url": "https://github.com/huggingface/datasets/pull/3588.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3588.patch",

"merged_at": null

} | Adding information from the git repo and paper that were missing | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3588/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3588/timeline | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3587 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3587/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3587/comments | https://api.github.com/repos/huggingface/datasets/issues/3587/events | https://github.com/huggingface/datasets/issues/3587 | 1,106,719,182 | I_kwDODunzps5B9zHO | 3,587 | No module named 'fsspec.archive' | {

"login": "shuuchen",

"id": 13246825,

"node_id": "MDQ6VXNlcjEzMjQ2ODI1",

"avatar_url": "https://avatars.githubusercontent.com/u/13246825?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/shuuchen",

"html_url": "https://github.com/shuuchen",

"followers_url": "https://api.github.com/users/shuuchen/followers",

"following_url": "https://api.github.com/users/shuuchen/following{/other_user}",

"gists_url": "https://api.github.com/users/shuuchen/gists{/gist_id}",

"starred_url": "https://api.github.com/users/shuuchen/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/shuuchen/subscriptions",

"organizations_url": "https://api.github.com/users/shuuchen/orgs",

"repos_url": "https://api.github.com/users/shuuchen/repos",

"events_url": "https://api.github.com/users/shuuchen/events{/privacy}",

"received_events_url": "https://api.github.com/users/shuuchen/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1935892857,

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug",

"name": "bug",

"color": "d73a4a",

"default": true,

"description": "Something isn't working"

}

] | closed | false | null | [] | null | [] | 1,642,501,021,000 | 1,642,501,990,000 | 1,642,501,990,000 | NONE | null | null | null | ## Describe the bug

Cannot import datasets after installation.

## Steps to reproduce the bug

```shell

$ python

Python 3.9.7 (default, Sep 16 2021, 13:09:58)

[GCC 7.5.0] :: Anaconda, Inc. on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import datasets

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/home/shuchen/miniconda3/envs/hf/lib/python3.9/site-packages/datasets/__init__.py", line 34, in <module>

from .arrow_dataset import Dataset, concatenate_datasets

File "/home/shuchen/miniconda3/envs/hf/lib/python3.9/site-packages/datasets/arrow_dataset.py", line 61, in <module>

from .arrow_writer import ArrowWriter, OptimizedTypedSequence

File "/home/shuchen/miniconda3/envs/hf/lib/python3.9/site-packages/datasets/arrow_writer.py", line 28, in <module>

from .features import (

File "/home/shuchen/miniconda3/envs/hf/lib/python3.9/site-packages/datasets/features/__init__.py", line 2, in <module>

from .audio import Audio

File "/home/shuchen/miniconda3/envs/hf/lib/python3.9/site-packages/datasets/features/audio.py", line 7, in <module>

from ..utils.streaming_download_manager import xopen

File "/home/shuchen/miniconda3/envs/hf/lib/python3.9/site-packages/datasets/utils/streaming_download_manager.py", line 18, in <module>

from ..filesystems import COMPRESSION_FILESYSTEMS

File "/home/shuchen/miniconda3/envs/hf/lib/python3.9/site-packages/datasets/filesystems/__init__.py", line 6, in <module>

from . import compression

File "/home/shuchen/miniconda3/envs/hf/lib/python3.9/site-packages/datasets/filesystems/compression.py", line 5, in <module>

from fsspec.archive import AbstractArchiveFileSystem

ModuleNotFoundError: No module named 'fsspec.archive'

```
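
A quick way to check whether the installed `fsspec` provides the `archive` submodule, as a minimal sketch. The helper name `has_module` is hypothetical, and the idea that an outdated `fsspec` is the cause is an assumption, not something confirmed above.

```python
import importlib.util


def has_module(name: str) -> bool:
    """Return True if the dotted module `name` can be located."""
    try:
        # find_spec imports the parent package first, then looks up the submodule.
        return importlib.util.find_spec(name) is not None
    except ModuleNotFoundError:
        # Raised when a parent package (e.g. `fsspec` itself) is not installed.
        return False


print("fsspec.archive available:", has_module("fsspec.archive"))
```

If this prints `False` while `fsspec` itself imports fine, upgrading `fsspec` in the environment may be worth trying.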

| {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3587/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3587/timeline | null | false |

https://api.github.com/repos/huggingface/datasets/issues/3586 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3586/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3586/comments | https://api.github.com/repos/huggingface/datasets/issues/3586/events | https://github.com/huggingface/datasets/issues/3586 | 1,106,455,672 | I_kwDODunzps5B8yx4 | 3,586 | Revisit `enable/disable_` toggle function prefix | {

"login": "jaketae",

"id": 25360440,

"node_id": "MDQ6VXNlcjI1MzYwNDQw",

"avatar_url": "https://avatars.githubusercontent.com/u/25360440?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/jaketae",

"html_url": "https://github.com/jaketae",

"followers_url": "https://api.github.com/users/jaketae/followers",

"following_url": "https://api.github.com/users/jaketae/following{/other_user}",

"gists_url": "https://api.github.com/users/jaketae/gists{/gist_id}",

"starred_url": "https://api.github.com/users/jaketae/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/jaketae/subscriptions",

"organizations_url": "https://api.github.com/users/jaketae/orgs",

"repos_url": "https://api.github.com/users/jaketae/repos",

"events_url": "https://api.github.com/users/jaketae/events{/privacy}",

"received_events_url": "https://api.github.com/users/jaketae/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1935892871,

"node_id": "MDU6TGFiZWwxOTM1ODkyODcx",

"url": "https://api.github.com/repos/huggingface/datasets/labels/enhancement",

"name": "enhancement",

"color": "a2eeef",

"default": true,

"description": "New feature or request"

}

] | open | false | null | [] | null | [] | 1,642,478,995,000 | 1,642,478,995,000 | null | CONTRIBUTOR | null | null | null | As discussed in https://github.com/huggingface/transformers/pull/15167, we should revisit the `enable/disable_` toggle function prefix, potentially in favor of `set_enabled_`. Concretely, this translates to

- De-deprecating `disable_progress_bar()`

- Adding `enable_progress_bar()`

- On the caching side, adding `enable_caching` and `disable_caching`

Additional decisions have to be made with regard to the existing `set_enabled_X` functions; that is, whether to keep them as is or deprecate them in favor of the aforementioned functions.
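
To make the naming options concrete, here is a minimal sketch of how the two styles could coexist. The module-level flag and the names `is_progress_bar_enabled` and `set_progress_bar_enabled` are hypothetical illustrations, not the actual `datasets` implementation.

```python
# Hypothetical module-level flag; not the real `datasets` internals.
_progress_bar_enabled = True


def enable_progress_bar() -> None:
    """Turn progress bars on."""
    global _progress_bar_enabled
    _progress_bar_enabled = True


def disable_progress_bar() -> None:
    """Turn progress bars off."""
    global _progress_bar_enabled
    _progress_bar_enabled = False


def is_progress_bar_enabled() -> bool:
    """Report the current setting."""
    return _progress_bar_enabled


def set_progress_bar_enabled(enabled: bool) -> None:
    """Setter-style variant kept as a thin wrapper around the toggles."""
    if enabled:
        enable_progress_bar()
    else:
        disable_progress_bar()
```

Keeping the setter as a wrapper would allow a deprecation warning to be added to it later without changing the behavior of the toggles.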

cc @mariosasko @lhoestq | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3586/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3586/timeline | null | false |

https://api.github.com/repos/huggingface/datasets/issues/3585 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3585/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3585/comments | https://api.github.com/repos/huggingface/datasets/issues/3585/events | https://github.com/huggingface/datasets/issues/3585 | 1,105,821,470 | I_kwDODunzps5B6X8e | 3,585 | Datasets streaming + map doesn't work for `Audio` | {

"login": "patrickvonplaten",

"id": 23423619,

"node_id": "MDQ6VXNlcjIzNDIzNjE5",

"avatar_url": "https://avatars.githubusercontent.com/u/23423619?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/patrickvonplaten",

"html_url": "https://github.com/patrickvonplaten",

"followers_url": "https://api.github.com/users/patrickvonplaten/followers",

"following_url": "https://api.github.com/users/patrickvonplaten/following{/other_user}",

"gists_url": "https://api.github.com/users/patrickvonplaten/gists{/gist_id}",

"starred_url": "https://api.github.com/users/patrickvonplaten/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/patrickvonplaten/subscriptions",

"organizations_url": "https://api.github.com/users/patrickvonplaten/orgs",

"repos_url": "https://api.github.com/users/patrickvonplaten/repos",

"events_url": "https://api.github.com/users/patrickvonplaten/events{/privacy}",

"received_events_url": "https://api.github.com/users/patrickvonplaten/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1935892857,

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug",

"name": "bug",

"color": "d73a4a",

"default": true,

"description": "Something isn't working"

},

{

"id": 1935892865,

"node_id": "MDU6TGFiZWwxOTM1ODkyODY1",

"url": "https://api.github.com/repos/huggingface/datasets/labels/duplicate",

"name": "duplicate",

"color": "cfd3d7",

"default": true,

"description": "This issue or pull request already exists"

}

] | open | false | null | [

{

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

},

{

"login": "lhoestq",

"id": 42851186,

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/lhoestq",

"html_url": "https://github.com/lhoestq",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"type": "User",

"site_admin": false

}

] | null | [

"This seems related to https://github.com/huggingface/datasets/issues/3505."

] | 1,642,424,142,000 | 1,642,424,757,000 | null | MEMBER | null | null | null | ## Describe the bug

When using audio datasets in streaming mode, applying a `map(...)` before iterating leads to an error as the key `array` does not exist anymore.

## Steps to reproduce the bug

```python

from datasets import load_dataset

ds = load_dataset("common_voice", "en", streaming=True, split="train")

def map_fn(batch):

print("audio keys", batch["audio"].keys())

batch["audio"] = batch["audio"]["array"][:100]

return batch

ds = ds.map(map_fn)

sample = next(iter(ds))

```

I think the audio is somehow decoded before `.map(...)` is actually called.

## Expected results

IMO, the above code snippet should work.

## Actual results

```bash

audio keys dict_keys(['path', 'bytes'])

Traceback (most recent call last):

File "./run_audio.py", line 15, in <module>

sample = next(iter(ds))

File "/home/patrick/python_bin/datasets/iterable_dataset.py", line 341, in __iter__

for key, example in self._iter():

File "/home/patrick/python_bin/datasets/iterable_dataset.py", line 338, in _iter

yield from ex_iterable

File "/home/patrick/python_bin/datasets/iterable_dataset.py", line 192, in __iter__

yield key, self.function(example)

File "./run_audio.py", line 9, in map_fn

batch["input"] = batch["audio"]["array"][:100]

KeyError: 'array'

```
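
In the meantime, the map function can guard against undecoded samples. This is a minimal sketch, assuming an undecoded sample carries only the `path` and `bytes` keys printed above. `safe_map_fn` is a hypothetical name, and the function leaves such samples untouched instead of decoding them.

```python
def safe_map_fn(batch):
    """Truncate the decoded audio array when present; otherwise pass through."""
    audio = batch["audio"]
    if "array" in audio:
        # Decoded sample: keep only the first 100 values, as in the snippet above.
        batch["audio"] = audio["array"][:100]
    # Undecoded sample (only `path` and `bytes`): returned unchanged.
    return batch
```

This only avoids the `KeyError`; it does not address why the sample arrives without the `array` key.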

## Environment info

<!-- You can run the command `datasets-cli env` and copy-and-paste its output below. -->

- `datasets` version: 1.17.1.dev0

- Platform: Linux-5.3.0-64-generic-x86_64-with-glibc2.17

- Python version: 3.8.12

- PyArrow version: 6.0.1

| {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3585/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3585/timeline | null | false |

https://api.github.com/repos/huggingface/datasets/issues/3584 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3584/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3584/comments | https://api.github.com/repos/huggingface/datasets/issues/3584/events | https://github.com/huggingface/datasets/issues/3584 | 1,105,231,768 | I_kwDODunzps5B4H-Y | 3,584 | https://huggingface.co/datasets/huggingface/transformers-metadata | {

"login": "ecankirkic",

"id": 37082592,

"node_id": "MDQ6VXNlcjM3MDgyNTky",

"avatar_url": "https://avatars.githubusercontent.com/u/37082592?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/ecankirkic",

"html_url": "https://github.com/ecankirkic",

"followers_url": "https://api.github.com/users/ecankirkic/followers",

"following_url": "https://api.github.com/users/ecankirkic/following{/other_user}",

"gists_url": "https://api.github.com/users/ecankirkic/gists{/gist_id}",

"starred_url": "https://api.github.com/users/ecankirkic/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/ecankirkic/subscriptions",

"organizations_url": "https://api.github.com/users/ecankirkic/orgs",

"repos_url": "https://api.github.com/users/ecankirkic/repos",

"events_url": "https://api.github.com/users/ecankirkic/events{/privacy}",

"received_events_url": "https://api.github.com/users/ecankirkic/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 3470211881,

"node_id": "LA_kwDODunzps7O1zsp",

"url": "https://api.github.com/repos/huggingface/datasets/labels/dataset-viewer",

"name": "dataset-viewer",

"color": "E5583E",

"default": false,

"description": "Related to the dataset viewer on huggingface.co"

}

] | open | false | null | [] | null | [] | 1,642,378,694,000 | 1,642,411,314,000 | null | NONE | null | null | null | ## Dataset viewer issue for '*name of the dataset*'

**Link:** *link to the dataset viewer page*

*short description of the issue*

Am I the one who added this dataset ? Yes-No

| {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3584/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3584/timeline | null | false |

https://api.github.com/repos/huggingface/datasets/issues/3583 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3583/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3583/comments | https://api.github.com/repos/huggingface/datasets/issues/3583/events | https://github.com/huggingface/datasets/issues/3583 | 1,105,195,144 | I_kwDODunzps5B3_CI | 3,583 | Add The Medical Segmentation Decathlon Dataset | {

"login": "omarespejel",

"id": 4755430,

"node_id": "MDQ6VXNlcjQ3NTU0MzA=",

"avatar_url": "https://avatars.githubusercontent.com/u/4755430?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/omarespejel",

"html_url": "https://github.com/omarespejel",

"followers_url": "https://api.github.com/users/omarespejel/followers",

"following_url": "https://api.github.com/users/omarespejel/following{/other_user}",

"gists_url": "https://api.github.com/users/omarespejel/gists{/gist_id}",

"starred_url": "https://api.github.com/users/omarespejel/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/omarespejel/subscriptions",

"organizations_url": "https://api.github.com/users/omarespejel/orgs",

"repos_url": "https://api.github.com/users/omarespejel/repos",

"events_url": "https://api.github.com/users/omarespejel/events{/privacy}",

"received_events_url": "https://api.github.com/users/omarespejel/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 2067376369,

"node_id": "MDU6TGFiZWwyMDY3Mzc2MzY5",

"url": "https://api.github.com/repos/huggingface/datasets/labels/dataset%20request",

"name": "dataset request",

"color": "e99695",

"default": false,

"description": "Requesting to add a new dataset"

}

] | open | false | null | [] | null | [] | 1,642,369,345,000 | 1,642,369,345,000 | null | NONE | null | null | null | ## Adding a Dataset

- **Name:** *The Medical Segmentation Decathlon Dataset*

- **Description:** The underlying data set was designed to explore the axis of difficulties typically encountered when dealing with medical images, such as small data sets, unbalanced labels, multi-site data, and small objects.

- **Paper:** [link to the dataset paper if available](https://arxiv.org/abs/2106.05735)

- **Data:** http://medicaldecathlon.com/

- **Motivation:** Hugging Face seeks to democratize ML for society. One of the growing niches within ML is the ML + Medicine community. Key data sets will help increase the supply of HF resources for starting an initial community.

(cc @osanseviero @abidlabs )

Instructions to add a new dataset can be found [here](https://github.com/huggingface/datasets/blob/master/ADD_NEW_DATASET.md).

| {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3583/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3583/timeline | null | false |

https://api.github.com/repos/huggingface/datasets/issues/3582 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3582/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3582/comments | https://api.github.com/repos/huggingface/datasets/issues/3582/events | https://github.com/huggingface/datasets/issues/3582 | 1,104,877,303 | I_kwDODunzps5B2xb3 | 3,582 | conll 2003 dataset source url is no longer valid | {

"login": "rcanand",

"id": 303900,

"node_id": "MDQ6VXNlcjMwMzkwMA==",

"avatar_url": "https://avatars.githubusercontent.com/u/303900?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/rcanand",

"html_url": "https://github.com/rcanand",

"followers_url": "https://api.github.com/users/rcanand/followers",

"following_url": "https://api.github.com/users/rcanand/following{/other_user}",

"gists_url": "https://api.github.com/users/rcanand/gists{/gist_id}",

"starred_url": "https://api.github.com/users/rcanand/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/rcanand/subscriptions",

"organizations_url": "https://api.github.com/users/rcanand/orgs",

"repos_url": "https://api.github.com/users/rcanand/repos",

"events_url": "https://api.github.com/users/rcanand/events{/privacy}",

"received_events_url": "https://api.github.com/users/rcanand/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1935892857,

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug",

"name": "bug",

"color": "d73a4a",

"default": true,

"description": "Something isn't working"

},

{

"id": 2067388877,

"node_id": "MDU6TGFiZWwyMDY3Mzg4ODc3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/dataset%20bug",

"name": "dataset bug",

"color": "2edb81",

"default": false,

"description": "A bug in a dataset script provided in the library"

}

] | open | false | null | [] | null | [

"I came to open the same issue."

] | 1,642,287,857,000 | 1,642,425,282,000 | null | NONE | null | null | null | ## Describe the bug

Loading the `conll2003` dataset fails because the source file was removed (just yesterday, 1/14/2022) from the location the loading script downloads it from.

## Steps to reproduce the bug

```python

from datasets import load_dataset

load_dataset("conll2003")

```

## Expected results

The dataset should load.

## Actual results

It is looking for the dataset at `https://github.com/davidsbatista/NER-datasets/raw/master/CONLL2003/train.txt` but it was removed from there yesterday (see [commit](https://github.com/davidsbatista/NER-datasets/commit/9d8f45cc7331569af8eb3422bbe1c97cbebd5690) that removed the file and related [issue](https://github.com/davidsbatista/NER-datasets/issues/8)).

- We should replace this with an alternate valid location.

- This dataset is also referenced in the Hugging Face course chapter 7 [colab notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/master/course/chapter7/section2_pt.ipynb), which is broken as well.

```python

FileNotFoundError Traceback (most recent call last)

<ipython-input-4-27c956bec93c> in <module>()

1 from datasets import load_dataset

2

----> 3 raw_datasets = load_dataset("conll2003")

11 frames

/usr/local/lib/python3.7/dist-packages/datasets/utils/file_utils.py in get_from_cache(url, cache_dir, force_download, proxies, etag_timeout, resume_download, user_agent, local_files_only, use_etag, max_retries, use_auth_token, ignore_url_params)

610 )

611 elif response is not None and response.status_code == 404:

--> 612 raise FileNotFoundError(f"Couldn't find file at {url}")

613 _raise_if_offline_mode_is_enabled(f"Tried to reach {url}")

614 if head_error is not None:

FileNotFoundError: Couldn't find file at https://github.com/davidsbatista/NER-datasets/raw/master/CONLL2003/train.txt

```

## Environment info

<!-- You can run the command `datasets-cli env` and copy-and-paste its output below. -->

- `datasets` version:

- Platform:

- Python version:

- PyArrow version:

| {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3582/reactions",

"total_count": 1,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 1,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3582/timeline | null | false |

https://api.github.com/repos/huggingface/datasets/issues/3581 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3581/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3581/comments | https://api.github.com/repos/huggingface/datasets/issues/3581/events | https://github.com/huggingface/datasets/issues/3581 | 1,104,857,822 | I_kwDODunzps5B2sre | 3,581 | Unable to create a dataset from a parquet file in S3 | {

"login": "regCode",

"id": 18012903,

"node_id": "MDQ6VXNlcjE4MDEyOTAz",

"avatar_url": "https://avatars.githubusercontent.com/u/18012903?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/regCode",

"html_url": "https://github.com/regCode",

"followers_url": "https://api.github.com/users/regCode/followers",

"following_url": "https://api.github.com/users/regCode/following{/other_user}",

"gists_url": "https://api.github.com/users/regCode/gists{/gist_id}",

"starred_url": "https://api.github.com/users/regCode/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/regCode/subscriptions",

"organizations_url": "https://api.github.com/users/regCode/orgs",

"repos_url": "https://api.github.com/users/regCode/repos",

"events_url": "https://api.github.com/users/regCode/events{/privacy}",

"received_events_url": "https://api.github.com/users/regCode/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1935892857,

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug",

"name": "bug",

"color": "d73a4a",

"default": true,

"description": "Something isn't working"

}

] | open | false | null | [] | null | [] | 1,642,282,456,000 | 1,642,282,456,000 | null | NONE | null | null | null | ## Describe the bug

Trying to create a dataset from a parquet file in S3 by passing an open `s3fs` file object to `Dataset.from_parquet` raises an `AttributeError`.

## Steps to reproduce the bug

```python

import s3fs

from datasets import Dataset

s3 = s3fs.S3FileSystem(anon=False)

with s3.open(PATH_LTR_TOY_CLEAN_DATASET, 'rb') as s3file:

    dataset = Dataset.from_parquet(s3file)

```

## Expected results

A new Dataset object

## Actual results

```AttributeError: 'S3File' object has no attribute 'decode'```

```

AttributeError Traceback (most recent call last)

<command-2452877612515691> in <module>

5

6 with s3.open(PATH_LTR_TOY_CLEAN_DATASET, 'rb') as s3file:

----> 7 dataset = Dataset.from_parquet(s3file)

/databricks/python/lib/python3.8/site-packages/datasets/arrow_dataset.py in from_parquet(path_or_paths, split, features, cache_dir, keep_in_memory, columns, **kwargs)

907 from .io.parquet import ParquetDatasetReader

908

--> 909 return ParquetDatasetReader(

910 path_or_paths,

911 split=split,

/databricks/python/lib/python3.8/site-packages/datasets/io/parquet.py in __init__(self, path_or_paths, split, features, cache_dir, keep_in_memory, **kwargs)

28 path_or_paths = path_or_paths if isinstance(path_or_paths, dict) else {self.split: path_or_paths}

29 hash = _PACKAGED_DATASETS_MODULES["parquet"][1]

---> 30 self.builder = Parquet(

31 cache_dir=cache_dir,

32 data_files=path_or_paths,

/databricks/python/lib/python3.8/site-packages/datasets/builder.py in __init__(self, cache_dir, name, hash, base_path, info, features, use_auth_token, namespace, data_files, data_dir, **config_kwargs)

246

247 if data_files is not None and not isinstance(data_files, DataFilesDict):

--> 248 data_files = DataFilesDict.from_local_or_remote(

249 sanitize_patterns(data_files), base_path=base_path, use_auth_token=use_auth_token

250 )

/databricks/python/lib/python3.8/site-packages/datasets/data_files.py in from_local_or_remote(cls, patterns, base_path, allowed_extensions, use_auth_token)

576 for key, patterns_for_key in patterns.items():

577 out[key] = (

--> 578 DataFilesList.from_local_or_remote(

579 patterns_for_key,

580 base_path=base_path,

/databricks/python/lib/python3.8/site-packages/datasets/data_files.py in from_local_or_remote(cls, patterns, base_path, allowed_extensions, use_auth_token)

544 ) -> "DataFilesList":

545 base_path = base_path if base_path is not None else str(Path().resolve())

--> 546 data_files = resolve_patterns_locally_or_by_urls(base_path, patterns, allowed_extensions)

547 origin_metadata = _get_origin_metadata_locally_or_by_urls(data_files, use_auth_token=use_auth_token)

548 return cls(data_files, origin_metadata)

/databricks/python/lib/python3.8/site-packages/datasets/data_files.py in resolve_patterns_locally_or_by_urls(base_path, patterns, allowed_extensions)

191 data_files = []

192 for pattern in patterns:

--> 193 if is_remote_url(pattern):

194 data_files.append(Url(pattern))

195 else:

/databricks/python/lib/python3.8/site-packages/datasets/utils/file_utils.py in is_remote_url(url_or_filename)

115

116 def is_remote_url(url_or_filename: str) -> bool:

--> 117 parsed = urlparse(url_or_filename)

118 return parsed.scheme in ("http", "https", "s3", "gs", "hdfs", "ftp")

119

/usr/lib/python3.8/urllib/parse.py in urlparse(url, scheme, allow_fragments)

370 Note that we don't break the components up in smaller bits

371 (e.g. netloc is a single string) and we don't expand % escapes."""

--> 372 url, scheme, _coerce_result = _coerce_args(url, scheme)

373 splitresult = urlsplit(url, scheme, allow_fragments)

374 scheme, netloc, url, query, fragment = splitresult

/usr/lib/python3.8/urllib/parse.py in _coerce_args(*args)

122 if str_input:

123 return args + (_noop,)

--> 124 return _decode_args(args) + (_encode_result,)

125

126 # Result objects are more helpful than simple tuples

/usr/lib/python3.8/urllib/parse.py in _decode_args(args, encoding, errors)

106 def _decode_args(args, encoding=_implicit_encoding,

107 errors=_implicit_errors):

--> 108 return tuple(x.decode(encoding, errors) if x else '' for x in args)

109

110 def _coerce_args(*args):

/usr/lib/python3.8/urllib/parse.py in <genexpr>(.0)

106 def _decode_args(args, encoding=_implicit_encoding,

107 errors=_implicit_errors):

--> 108 return tuple(x.decode(encoding, errors) if x else '' for x in args)

109

110 def _coerce_args(*args):

AttributeError: 'S3File' object has no attribute 'decode'

```
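
The failure can be reproduced without S3. The following is a minimal sketch, not the library's code: `FakeS3File` is a hypothetical stand-in for the `s3fs` file handle, `describe` is a helper added only for illustration, and `is_remote_url` repeats the two lines shown in the traceback. It suggests that the check assumes a string path and breaks on any file-like object.

```python
from urllib.parse import urlparse


class FakeS3File:
    """Hypothetical stand-in for an open s3fs file handle (neither str nor bytes)."""


def is_remote_url(url_or_filename):
    # Same two lines as datasets/utils/file_utils.py in the traceback.
    parsed = urlparse(url_or_filename)
    return parsed.scheme in ("http", "https", "s3", "gs", "hdfs", "ftp")


def describe(obj):
    """Return the result of the check, or the error message if the check raises."""
    try:
        return is_remote_url(obj)
    except AttributeError as err:
        return f"AttributeError: {err}"


print(describe("s3://my-bucket/data.parquet"))  # a string path reaches the scheme check
print(describe("data.parquet"))                 # a local string path has no scheme
print(describe(FakeS3File()))                   # a file object fails inside urlparse
```

A string path gets through this particular check, while a file object does not. Whether a plain `s3://` string then loads end to end depends on the installed `datasets` version and is not verified here.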

## Environment info

<!-- You can run the command `datasets-cli env` and copy-and-paste its output below. -->

- `datasets` version: 1.17.0

- Platform: Ubuntu 20.04.3 LTS

- Python version: 3.8.10

- PyArrow version: 6.0.1

| {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3581/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3581/timeline | null | false |

https://api.github.com/repos/huggingface/datasets/issues/3580 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3580/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3580/comments | https://api.github.com/repos/huggingface/datasets/issues/3580/events | https://github.com/huggingface/datasets/issues/3580 | 1,104,663,242 | I_kwDODunzps5B19LK | 3,580 | Bug in wiki bio load | {

"login": "tuhinjubcse",

"id": 3104771,

"node_id": "MDQ6VXNlcjMxMDQ3NzE=",

"avatar_url": "https://avatars.githubusercontent.com/u/3104771?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/tuhinjubcse",

"html_url": "https://github.com/tuhinjubcse",

"followers_url": "https://api.github.com/users/tuhinjubcse/followers",

"following_url": "https://api.github.com/users/tuhinjubcse/following{/other_user}",

"gists_url": "https://api.github.com/users/tuhinjubcse/gists{/gist_id}",

"starred_url": "https://api.github.com/users/tuhinjubcse/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/tuhinjubcse/subscriptions",

"organizations_url": "https://api.github.com/users/tuhinjubcse/orgs",

"repos_url": "https://api.github.com/users/tuhinjubcse/repos",

"events_url": "https://api.github.com/users/tuhinjubcse/events{/privacy}",

"received_events_url": "https://api.github.com/users/tuhinjubcse/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 2067388877,

"node_id": "MDU6TGFiZWwyMDY3Mzg4ODc3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/dataset%20bug",

"name": "dataset bug",

"color": "2edb81",

"default": false,

"description": "A bug in a dataset script provided in the library"

}

] | open | false | null | [] | null | [] | 1,642,241,073,000 | 1,642,425,303,000 | null | NONE | null | null | null |

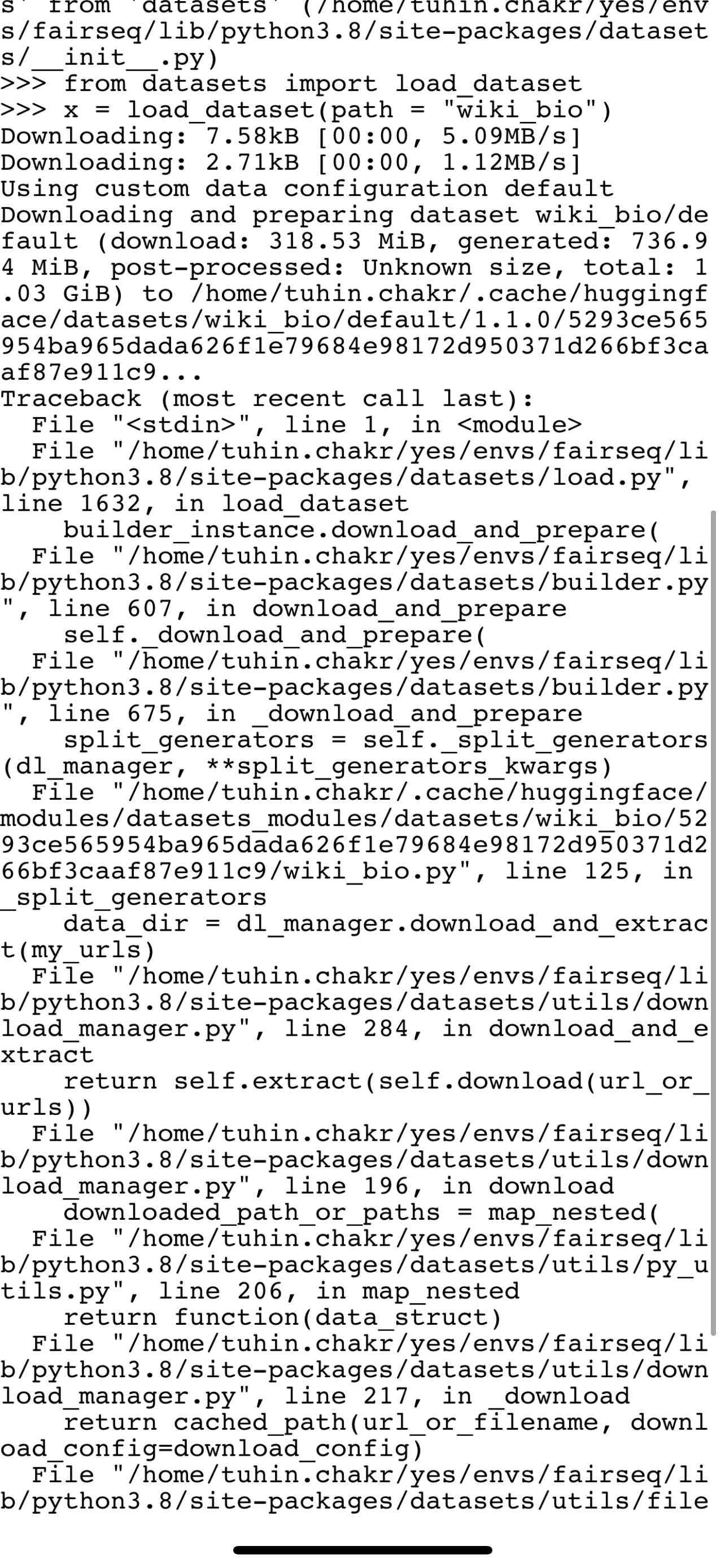

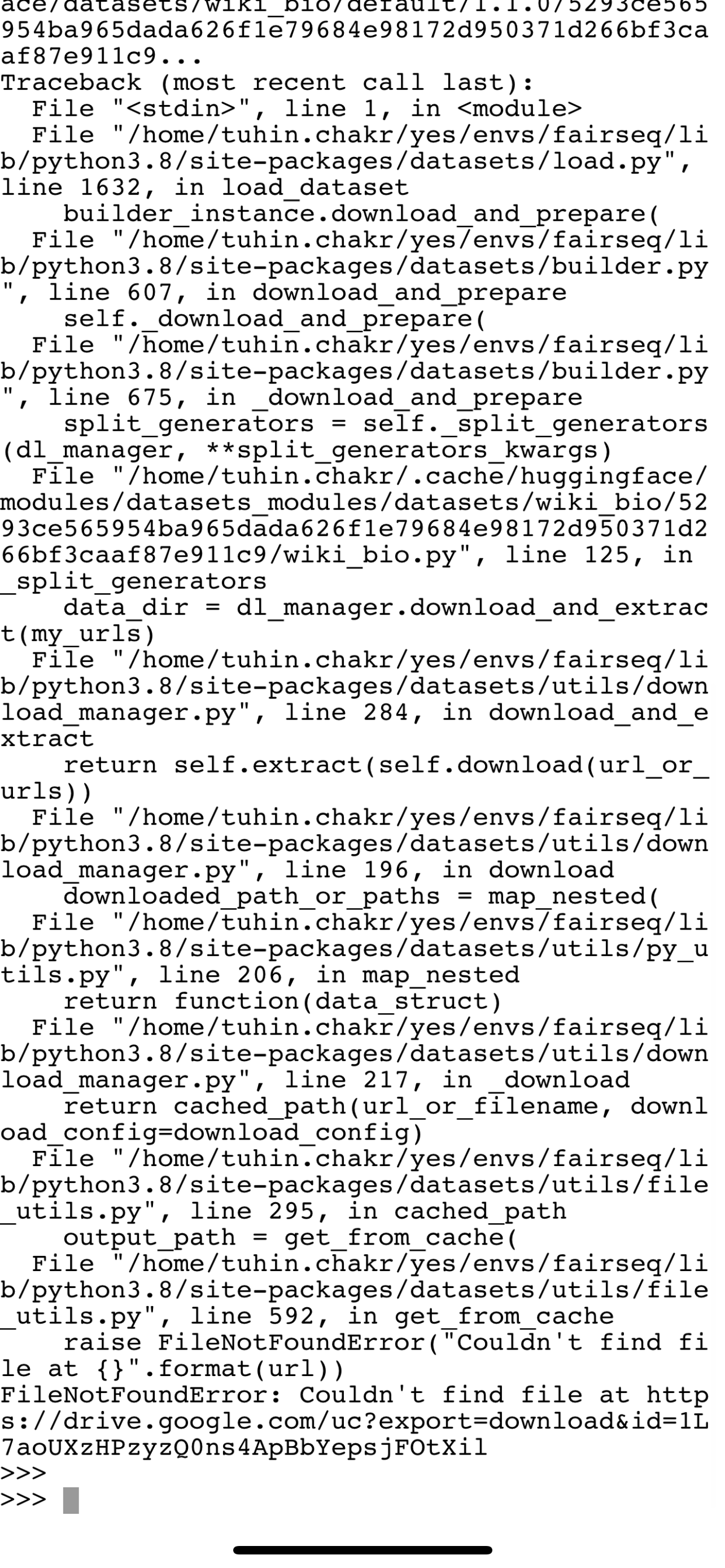

`wiki_bio` is failing to load because of a broken Drive link. Can someone fix this?

a | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3580/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3580/timeline | null | false |

https://api.github.com/repos/huggingface/datasets/issues/3579 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3579/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3579/comments | https://api.github.com/repos/huggingface/datasets/issues/3579/events | https://github.com/huggingface/datasets/pull/3579 | 1,103,451,118 | PR_kwDODunzps4xBmY4 | 3,579 | Add Text2log Dataset | {

"login": "apergo-ai",

"id": 68908804,

"node_id": "MDQ6VXNlcjY4OTA4ODA0",

"avatar_url": "https://avatars.githubusercontent.com/u/68908804?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/apergo-ai",

"html_url": "https://github.com/apergo-ai",

"followers_url": "https://api.github.com/users/apergo-ai/followers",

"following_url": "https://api.github.com/users/apergo-ai/following{/other_user}",

"gists_url": "https://api.github.com/users/apergo-ai/gists{/gist_id}",

"starred_url": "https://api.github.com/users/apergo-ai/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/apergo-ai/subscriptions",

"organizations_url": "https://api.github.com/users/apergo-ai/orgs",

"repos_url": "https://api.github.com/users/apergo-ai/repos",

"events_url": "https://api.github.com/users/apergo-ai/events{/privacy}",

"received_events_url": "https://api.github.com/users/apergo-ai/received_events",

"type": "User",

"site_admin": false

} | [] | open | false | null | [] | null | [] | 1,642,157,101,000 | 1,642,157,101,000 | null | CONTRIBUTOR | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3579",

"html_url": "https://github.com/huggingface/datasets/pull/3579",

"diff_url": "https://github.com/huggingface/datasets/pull/3579.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3579.patch",

"merged_at": null

} | Adds the text2log dataset, used for training models that translate sentences into first-order logic (FOL). | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3579/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3579/timeline | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3578 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3578/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3578/comments | https://api.github.com/repos/huggingface/datasets/issues/3578/events | https://github.com/huggingface/datasets/issues/3578 | 1,103,403,287 | I_kwDODunzps5BxJkX | 3,578 | label information get lost after parquet serialization | {

"login": "Tudyx",

"id": 56633664,

"node_id": "MDQ6VXNlcjU2NjMzNjY0",

"avatar_url": "https://avatars.githubusercontent.com/u/56633664?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Tudyx",

"html_url": "https://github.com/Tudyx",

"followers_url": "https://api.github.com/users/Tudyx/followers",

"following_url": "https://api.github.com/users/Tudyx/following{/other_user}",

"gists_url": "https://api.github.com/users/Tudyx/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Tudyx/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Tudyx/subscriptions",

"organizations_url": "https://api.github.com/users/Tudyx/orgs",

"repos_url": "https://api.github.com/users/Tudyx/repos",

"events_url": "https://api.github.com/users/Tudyx/events{/privacy}",

"received_events_url": "https://api.github.com/users/Tudyx/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1935892857,

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug",

"name": "bug",

"color": "d73a4a",

"default": true,

"description": "Something isn't working"

}

] | open | false | null | [] | null | [] | 1,642,155,038,000 | 1,642,155,038,000 | null | NONE | null | null | null | ## Describe the bug

In the *dataset_info.json* file, information about the label gets lost after the dataset is serialized to parquet.

## Steps to reproduce the bug

```python

from datasets import load_dataset

# normal save

dataset = load_dataset('glue', 'sst2', split='train')

dataset.save_to_disk("normal_save")

# save after parquet serialization

dataset.to_parquet("glue-sst2-train.parquet")

dataset = load_dataset("parquet", data_files='glue-sst2-train.parquet')

dataset.save_to_disk("save_after_parquet")

```

## Expected results

I expected the label information to be kept in the *dataset_info.json* file even after parquet serialization.

## Actual results

With the normal save I got:

```json

"label": {

"num_classes": 2,

"names": [

"negative",

"positive"

],

"names_file": null,

"id": null,

"_type": "ClassLabel"

},

```

And after parquet serialization I got:

```json

"label": {

"dtype": "int64",

"id": null,

"_type": "Value"

},

```
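
The difference between the two files can be checked mechanically. This is a small sketch using only the two `label` entries quoted in this report; the helper name `changed_feature_types` and the constants are made up for illustration, and the sketch assumes every feature entry is a flat dict with a `_type` key, as in the snippets shown here.

```python
import json

# The "label" entries from the report, wrapped in braces to form valid JSON.
NORMAL_SAVE = json.loads("""
{"label": {"num_classes": 2, "names": ["negative", "positive"],
           "names_file": null, "id": null, "_type": "ClassLabel"}}
""")
AFTER_PARQUET = json.loads("""
{"label": {"dtype": "int64", "id": null, "_type": "Value"}}
""")


def changed_feature_types(before, after):
    """Map each shared column name to (old _type, new _type) when they differ."""
    return {
        name: (before[name]["_type"], after[name]["_type"])
        for name in before
        if name in after and before[name]["_type"] != after[name]["_type"]
    }


print(changed_feature_types(NORMAL_SAVE, AFTER_PARQUET))
```

For the two snippets in this report, the only changed column is `label`, which goes from `ClassLabel` to `Value`, so the class names are no longer recorded.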

## Environment info

<!-- You can run the command `datasets-cli env` and copy-and-paste its output below. -->

- `datasets` version: 1.17.0

- Platform: ubuntu 20.04

- Python version: 3.8.10

- PyArrow version: 6.0.1

| {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3578/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3578/timeline | null | false |

https://api.github.com/repos/huggingface/datasets/issues/3577 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3577/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3577/comments | https://api.github.com/repos/huggingface/datasets/issues/3577/events | https://github.com/huggingface/datasets/issues/3577 | 1,102,598,241 | I_kwDODunzps5BuFBh | 3,577 | Add The Mexican Emotional Speech Database (MESD) | {

"login": "omarespejel",

"id": 4755430,

"node_id": "MDQ6VXNlcjQ3NTU0MzA=",

"avatar_url": "https://avatars.githubusercontent.com/u/4755430?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/omarespejel",

"html_url": "https://github.com/omarespejel",

"followers_url": "https://api.github.com/users/omarespejel/followers",

"following_url": "https://api.github.com/users/omarespejel/following{/other_user}",

"gists_url": "https://api.github.com/users/omarespejel/gists{/gist_id}",

"starred_url": "https://api.github.com/users/omarespejel/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/omarespejel/subscriptions",

"organizations_url": "https://api.github.com/users/omarespejel/orgs",

"repos_url": "https://api.github.com/users/omarespejel/repos",

"events_url": "https://api.github.com/users/omarespejel/events{/privacy}",

"received_events_url": "https://api.github.com/users/omarespejel/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 2067376369,

"node_id": "MDU6TGFiZWwyMDY3Mzc2MzY5",

"url": "https://api.github.com/repos/huggingface/datasets/labels/dataset%20request",

"name": "dataset request",

"color": "e99695",

"default": false,

"description": "Requesting to add a new dataset"

}

] | open | false | null | [] | null | [] | 1,642,117,776,000 | 1,642,117,776,000 | null | NONE | null | null | null | ## Adding a Dataset

- **Name:** *The Mexican Emotional Speech Database (MESD)*

- **Description:** *Contains 864 voice recordings with six different prosodies: anger, disgust, fear, happiness, neutral, and sadness. Furthermore, three voice categories are included: female adult, male adult, and child.*

- **Paper:** *[Paper](https://ieeexplore.ieee.org/abstract/document/9629934/authors#authors)*

- **Data:** *[link to the Github repository or current dataset location](https://data.mendeley.com/datasets/cy34mh68j9/3)*

- **Motivation:** *Would add Spanish speech data to the HF datasets :)*

Instructions to add a new dataset can be found [here](https://github.com/huggingface/datasets/blob/master/ADD_NEW_DATASET.md).

| {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3577/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3577/timeline | null | false |

https://api.github.com/repos/huggingface/datasets/issues/3576 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3576/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3576/comments | https://api.github.com/repos/huggingface/datasets/issues/3576/events | https://github.com/huggingface/datasets/pull/3576 | 1,102,059,651 | PR_kwDODunzps4w8sUm | 3,576 | Add PASS dataset | {

"login": "mariosasko",

"id": 47462742,

"node_id": "MDQ6VXNlcjQ3NDYyNzQy",

"avatar_url": "https://avatars.githubusercontent.com/u/47462742?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/mariosasko",

"html_url": "https://github.com/mariosasko",

"followers_url": "https://api.github.com/users/mariosasko/followers",

"following_url": "https://api.github.com/users/mariosasko/following{/other_user}",

"gists_url": "https://api.github.com/users/mariosasko/gists{/gist_id}",

"starred_url": "https://api.github.com/users/mariosasko/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mariosasko/subscriptions",

"organizations_url": "https://api.github.com/users/mariosasko/orgs",

"repos_url": "https://api.github.com/users/mariosasko/repos",

"events_url": "https://api.github.com/users/mariosasko/events{/privacy}",

"received_events_url": "https://api.github.com/users/mariosasko/received_events",

"type": "User",

"site_admin": false

} | [] | open | false | null | [] | null | [] | 1,642,094,167,000 | 1,642,094,167,000 | null | CONTRIBUTOR | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3576",

"html_url": "https://github.com/huggingface/datasets/pull/3576",

"diff_url": "https://github.com/huggingface/datasets/pull/3576.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3576.patch",

"merged_at": null

} | This PR adds the PASS dataset.

Closes #3043 | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3576/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3576/timeline | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3575 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3575/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3575/comments | https://api.github.com/repos/huggingface/datasets/issues/3575/events | https://github.com/huggingface/datasets/pull/3575 | 1,101,947,955 | PR_kwDODunzps4w8Usm | 3,575 | Add Arrow type casting to struct for Image and Audio + Support nested casting | {

"login": "lhoestq",

"id": 42851186,

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/lhoestq",

"html_url": "https://github.com/lhoestq",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"type": "User",

"site_admin": false

} | [] | open | false | null | [] | null | [

"Regarding the tests, I'm just missing the FixedSizeListType type casting for ListArray objects; I will do it tomorrow, as well as adding new tests + docstrings\r\n\r\nand also adding soundfile in the CI",

"While writing some tests I noticed that the ExtensionArray can't be directly concatenated - maybe we can get rid of the extension types/arrays and only keep their storages in native arrow types.\r\n\r\nIn this case the `cast_storage` functions should be the responsibility of the Image and Audio classes directly. And therefore we would need to never cast to a pyarrow type again, but to an HF feature, since they'd end up being the ones able to tell what's castable or not. This is fine in my opinion but let me know what you think. I can take care of this on Monday, I think",

"Alright I got rid of all the extension type stuff, I'm writing the new tests now :)"

] | 1,642,088,219,000 | 1,642,505,921,000 | null | MEMBER | null | true | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3575",

"html_url": "https://github.com/huggingface/datasets/pull/3575",

"diff_url": "https://github.com/huggingface/datasets/pull/3575.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3575.patch",

"merged_at": null

} | ## Intro

1. Currently, it's not possible to have nested features containing Audio or Image.

2. Moreover, one can keep an Arrow array as a StringArray to store paths to images, but such arrays can't be directly concatenated to another image array if it's stored as another Arrow type (typically, a StructType).

3. Allowing several Arrow types for a single HF feature type also leads to bugs like this one #3497

4. Issues like #3247 are quite frequent and happen when Arrow fails to reorder StructArrays.

5. Casting Audio feature type is blocking preparation for the ASR task template: https://github.com/huggingface/datasets/pull/3364

All those issues are linked together by the fact that:

- we are limited by the Arrow type casting which is lacking features for nested types.

- and especially for Audio and Image: they are not robust enough for concatenation and feature inference.

## Proposed solution

To fix 1 and 4 I implemented nested array type casting (which is missing in PyArrow).

To fix 2, 3 and 5 while having a simple implementation for nested array type casting, I changed the storage type of Audio and Image to always be a StructType. Also casting from StringType is directly implemented via a new function `cast_storage` that is defined individually for Audio and Image. I also added nested decoding.

## Implementation details

### I. Better Arrow data type casting for nested data structures

I implemented new functions `array_cast` and `table_cast` that do the exact same as `pyarrow.Array.cast` or `pyarrow.Table.cast` but support nested struct casting and array re-ordering.

These functions can be used on PyArrow objects, and are already integrated in our own `datasets.table.Table.cast` functions. So one can do `my_dataset.data.cast(pyarrow_schema_with_custom_hf_types)` directly.

### II. New image and audio extension types with custom casting

I used PyArrow extension types to be able to define what casting is allowed or not. For example both StringType->ImageExtensionType and StructType->ImageExtensionType are allowed, via the `cast_storage` method.

I factorized all the PyArrow + Pandas extension stuff in the `base_extension.py` file. This aims at separating the front-facing API code of `datasets` from the Arrow back-end which requires advanced knowledge.

### III. Nested feature decoding

I added a new function `decode_nested_example` to decode image and audio data in nested data structures. For optimization's sake, this function is only called if a column has at least one feature that requires decoding.
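
To illustrate the idea, here is a pure-Python sketch of nested decoding. It is not the PR's `decode_nested_example`: the function names and the schema representation are assumptions made for this example only. A schema leaf is a callable when the value needs decoding and a dtype string otherwise.

```python
def requires_decoding(feature):
    """Return True if any leaf of the (possibly nested) schema is a decoder."""
    if isinstance(feature, dict):
        return any(requires_decoding(sub) for sub in feature.values())
    if isinstance(feature, list):
        return requires_decoding(feature[0])
    return callable(feature)


def decode_nested_sketch(feature, value):
    """Walk the schema and the value together, decoding only the flagged leaves."""
    if value is None:
        return None
    if isinstance(feature, dict):
        return {key: decode_nested_sketch(sub, value[key]) for key, sub in feature.items()}
    if isinstance(feature, list):
        return [decode_nested_sketch(feature[0], item) for item in value]
    return feature(value) if callable(feature) else value


# Hypothetical schema: str.upper stands in for a real audio decoder.
schema = {"id": "int64", "clips": [{"audio": str.upper, "label": "string"}]}
example = {"id": 1, "clips": [{"audio": "a.wav", "label": "x"}]}

if requires_decoding(schema):  # skip the walk when nothing needs decoding
    example = decode_nested_sketch(schema, example)
print(example)
```

The `requires_decoding` guard mirrors the optimization described above: the recursive walk only runs for columns that contain at least one decodable feature.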

## Alternative considered

The casting to struct type could have been done directly with python objects using some Audio and Image methods, but bringing arrow data to python objects is expensive. The Audio and Image types could also have been able to convert the arrow data directly, but this is not convenient to use when casting a full Arrow Table with nested fields. Therefore I decided to keep the Arrow data casting logic in Arrow extension types.

## Future work

This work can be used to allow the ArrayND feature types to be nested too (see issue #887)

## TODO

- [ ] fix current tests

- [ ] add new tests

- [ ] docstrings/comments | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3575/reactions",

"total_count": 1,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 1,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3575/timeline | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3574 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3574/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3574/comments | https://api.github.com/repos/huggingface/datasets/issues/3574/events | https://github.com/huggingface/datasets/pull/3574 | 1,101,781,401 | PR_kwDODunzps4w7vu6 | 3,574 | Fix qa4mre tags | {

"login": "lhoestq",

"id": 42851186,

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/lhoestq",

"html_url": "https://github.com/lhoestq",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [] | 1,642,082,219,000 | 1,642,082,582,000 | 1,642,082,581,000 | MEMBER | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3574",

"html_url": "https://github.com/huggingface/datasets/pull/3574",

"diff_url": "https://github.com/huggingface/datasets/pull/3574.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3574.patch",

"merged_at": 1642082581000

} | The YAML tags were invalid. I also fixed the dataset mirroring logging that failed because of this issue [here](https://github.com/huggingface/datasets/actions/runs/1690109581) | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3574/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3574/timeline | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3573 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3573/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3573/comments | https://api.github.com/repos/huggingface/datasets/issues/3573/events | https://github.com/huggingface/datasets/pull/3573 | 1,101,157,676 | PR_kwDODunzps4w5oE_ | 3,573 | Add Mauve metric | {

"login": "jthickstun",

"id": 2321244,

"node_id": "MDQ6VXNlcjIzMjEyNDQ=",

"avatar_url": "https://avatars.githubusercontent.com/u/2321244?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/jthickstun",

"html_url": "https://github.com/jthickstun",

"followers_url": "https://api.github.com/users/jthickstun/followers",

"following_url": "https://api.github.com/users/jthickstun/following{/other_user}",

"gists_url": "https://api.github.com/users/jthickstun/gists{/gist_id}",

"starred_url": "https://api.github.com/users/jthickstun/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/jthickstun/subscriptions",

"organizations_url": "https://api.github.com/users/jthickstun/orgs",

"repos_url": "https://api.github.com/users/jthickstun/repos",

"events_url": "https://api.github.com/users/jthickstun/events{/privacy}",

"received_events_url": "https://api.github.com/users/jthickstun/received_events",

"type": "User",

"site_admin": false

} | [] | open | false | null | [] | null | [] | 1,642,045,968,000 | 1,642,181,538,000 | null | NONE | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3573",

"html_url": "https://github.com/huggingface/datasets/pull/3573",

"diff_url": "https://github.com/huggingface/datasets/pull/3573.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3573.patch",

"merged_at": null

} | Add support for the [Mauve](https://github.com/krishnap25/mauve) metric introduced in this [paper](https://arxiv.org/pdf/2102.01454.pdf) (NeurIPS 2021). | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3573/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3573/timeline | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3572 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3572/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3572/comments | https://api.github.com/repos/huggingface/datasets/issues/3572/events | https://github.com/huggingface/datasets/issues/3572 | 1,100,634,244 | I_kwDODunzps5BmliE | 3,572 | ConnectionError: Couldn't reach https://storage.googleapis.com/ai4bharat-public-indic-nlp-corpora/evaluations/wikiann-ner.tar.gz (error 403) | {

"login": "sahoodib",

"id": 79107194,

"node_id": "MDQ6VXNlcjc5MTA3MTk0",

"avatar_url": "https://avatars.githubusercontent.com/u/79107194?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/sahoodib",

"html_url": "https://github.com/sahoodib",

"followers_url": "https://api.github.com/users/sahoodib/followers",

"following_url": "https://api.github.com/users/sahoodib/following{/other_user}",

"gists_url": "https://api.github.com/users/sahoodib/gists{/gist_id}",

"starred_url": "https://api.github.com/users/sahoodib/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/sahoodib/subscriptions",

"organizations_url": "https://api.github.com/users/sahoodib/orgs",

"repos_url": "https://api.github.com/users/sahoodib/repos",

"events_url": "https://api.github.com/users/sahoodib/events{/privacy}",

"received_events_url": "https://api.github.com/users/sahoodib/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 2067388877,

"node_id": "MDU6TGFiZWwyMDY3Mzg4ODc3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/dataset%20bug",

"name": "dataset bug",

"color": "2edb81",

"default": false,

"description": "A bug in a dataset script provided in the library"

}

] | open | false | null | [] | null | [] | 1,642,010,376,000 | 1,642,425,328,000 | null | NONE | null | null | null | ## Adding a Dataset

- **Name:** *IndicGLUE*

- **Description:** *natural language understanding benchmark for Indian languages*

- **Paper:** *(https://indicnlp.ai4bharat.org/home/)*

- **Data:** *https://huggingface.co/datasets/indic_glue#data-fields*

- **Motivation:** *I am trying to train my model on Indian languages*

When I try to load the dataset, it fails with the above error. | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3572/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3572/timeline | null | false |

https://api.github.com/repos/huggingface/datasets/issues/3571 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3571/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3571/comments | https://api.github.com/repos/huggingface/datasets/issues/3571/events | https://github.com/huggingface/datasets/pull/3571 | 1,100,519,604 | PR_kwDODunzps4w3fVQ | 3,571 | Add missing tasks to MuchoCine dataset | {

"login": "mariosasko",

"id": 47462742,

"node_id": "MDQ6VXNlcjQ3NDYyNzQy",

"avatar_url": "https://avatars.githubusercontent.com/u/47462742?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/mariosasko",

"html_url": "https://github.com/mariosasko",

"followers_url": "https://api.github.com/users/mariosasko/followers",

"following_url": "https://api.github.com/users/mariosasko/following{/other_user}",

"gists_url": "https://api.github.com/users/mariosasko/gists{/gist_id}",

"starred_url": "https://api.github.com/users/mariosasko/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mariosasko/subscriptions",

"organizations_url": "https://api.github.com/users/mariosasko/orgs",

"repos_url": "https://api.github.com/users/mariosasko/repos",

"events_url": "https://api.github.com/users/mariosasko/events{/privacy}",

"received_events_url": "https://api.github.com/users/mariosasko/received_events",

"type": "User",

"site_admin": false

} | [] | open | false | null | [] | null | [] | 1,642,003,652,000 | 1,642,003,652,000 | null | CONTRIBUTOR | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3571",

"html_url": "https://github.com/huggingface/datasets/pull/3571",

"diff_url": "https://github.com/huggingface/datasets/pull/3571.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3571.patch",

"merged_at": null

} | Addresses the 2nd bullet point in #2520.

I'm also removing the licensing information, because I couldn't verify that it is correct. | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3571/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3571/timeline | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3570 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3570/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3570/comments | https://api.github.com/repos/huggingface/datasets/issues/3570/events | https://github.com/huggingface/datasets/pull/3570 | 1,100,480,791 | PR_kwDODunzps4w3Xez | 3,570 | Add the KMWP dataset (extension of #3564) | {

"login": "sooftware",

"id": 42150335,

"node_id": "MDQ6VXNlcjQyMTUwMzM1",

"avatar_url": "https://avatars.githubusercontent.com/u/42150335?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/sooftware",

"html_url": "https://github.com/sooftware",

"followers_url": "https://api.github.com/users/sooftware/followers",

"following_url": "https://api.github.com/users/sooftware/following{/other_user}",

"gists_url": "https://api.github.com/users/sooftware/gists{/gist_id}",

"starred_url": "https://api.github.com/users/sooftware/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/sooftware/subscriptions",

"organizations_url": "https://api.github.com/users/sooftware/orgs",

"repos_url": "https://api.github.com/users/sooftware/repos",

"events_url": "https://api.github.com/users/sooftware/events{/privacy}",

"received_events_url": "https://api.github.com/users/sooftware/received_events",

"type": "User",

"site_admin": false

} | [] | open | false | null | [] | null | [] | 1,642,001,588,000 | 1,642,174,881,000 | null | NONE | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3570",

"html_url": "https://github.com/huggingface/datasets/pull/3570",

"diff_url": "https://github.com/huggingface/datasets/pull/3570.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3570.patch",

"merged_at": null

} | New pull request for #3564 (Add the KMWP dataset) | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3570/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3570/timeline | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3569 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3569/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3569/comments | https://api.github.com/repos/huggingface/datasets/issues/3569/events | https://github.com/huggingface/datasets/pull/3569 | 1,100,478,994 | PR_kwDODunzps4w3XGo | 3,569 | Add the DKTC dataset (Extension of #3564) | {

"login": "sooftware",

"id": 42150335,

"node_id": "MDQ6VXNlcjQyMTUwMzM1",

"avatar_url": "https://avatars.githubusercontent.com/u/42150335?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/sooftware",

"html_url": "https://github.com/sooftware",

"followers_url": "https://api.github.com/users/sooftware/followers",

"following_url": "https://api.github.com/users/sooftware/following{/other_user}",

"gists_url": "https://api.github.com/users/sooftware/gists{/gist_id}",

"starred_url": "https://api.github.com/users/sooftware/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/sooftware/subscriptions",

"organizations_url": "https://api.github.com/users/sooftware/orgs",

"repos_url": "https://api.github.com/users/sooftware/repos",

"events_url": "https://api.github.com/users/sooftware/events{/privacy}",

"received_events_url": "https://api.github.com/users/sooftware/received_events",

"type": "User",

"site_admin": false

} | [] | open | false | null | [] | null | [

"I reflect your comment! @lhoestq ",

"Wait, the format of the data just changed, so I'll take it into consideration and commit it.",

"I update the code according to the dataset structure change.",

"Thanks ! I think the dummy data are not valid yet - the dummy train.csv file only contains a partial example (the quotes `\"` start but never end).",

"> Thanks ! I think the dummy data are not valid yet - the dummy train.csv file only contains a partial example (the quotes `\"` start but never end).\r\n\r\nHi! @lhoestq There is a problem. \r\n<img src=\"https://user-images.githubusercontent.com/42150335/149804142-3800e635-f5a0-44d9-9694-0c2b0c05f16b.png\" width=500>\r\n \r\nAs shown in the picture above, the conversation is divided into \"\\n\" in the \"conversion\" column. \r\nThat's why there's an error in the file path that only saved only five lines like below. \r\n\r\n```\r\n'idx', 'class', 'conversation'\r\n'0', '협박 대화', '\"지금 너 스스로를 죽여달라고 애원하는 것인가?'\r\n아닙니다. 죄송합니다.'\r\n죽을 거면 혼자 죽지 우리까지 사건에 휘말리게 해? 진짜 죽여버리고 싶게.'\r\n정말 잘못했습니다.\r\n```\r\n \r\nIn fact, these five lines are all one line. \r\n \r\n\r\n"

] | 1,642,001,489,000 | 1,642,436,184,000 | null | NONE | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3569",

"html_url": "https://github.com/huggingface/datasets/pull/3569",