
Dataset: Low-to-High-Resolution Weather Forecasting using Topography

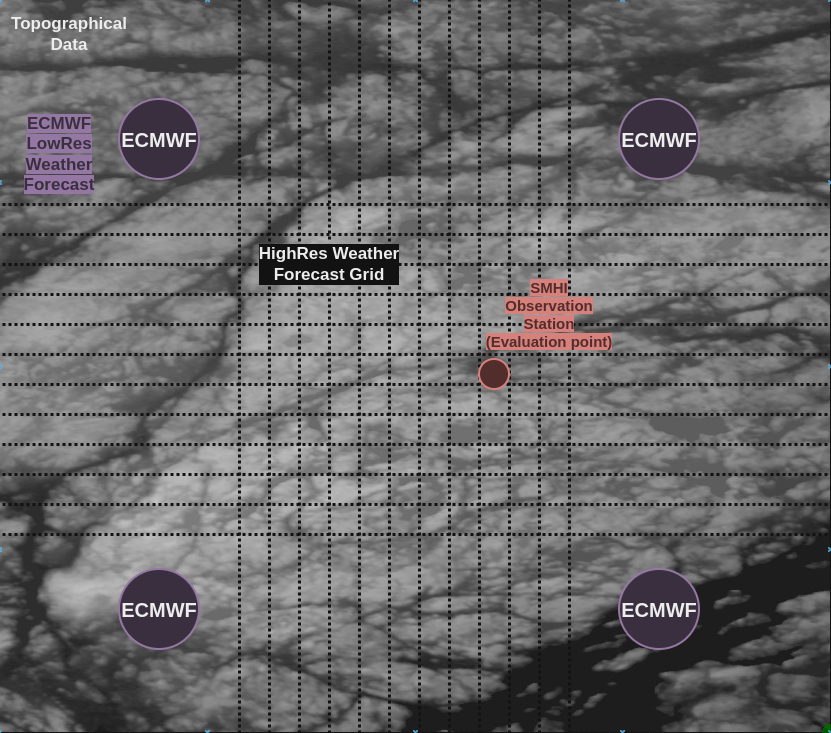

The dataset is intended and structured for the problem of transforming/interpolating low-resolution weather forecasts into higher resolution using topography data.

The dataset consists of 3 different types of data (as illustrated above):

- Historical weather observation data (SMHI)
  - Historical weather observation data from selected SMHI observation stations (evaluation points)
- Historical low-resolution weather forecasts (ECMWF)
  - For a given SMHI station: historical, (relatively) low-resolution ECMWF weather forecasts from the 4 nearest ECMWF grid points
- Topography/elevation data (Copernicus DEM GLO-30)
  - Topography/elevation data around a given SMHI station, on the grid enclosed by the 4 ECMWF points
  - Mean elevation around each of the 4 nearest ECMWF grid points for a given SMHI station (mean over +/-0.05 arc degree in each direction from the grid point center)
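The windowed mean elevation described in the last item could be computed along the following lines. This is a minimal sketch on synthetic data, not the code used to build the dataset; the function name `mean_elevation_around` and the array layout (a 2-D elevation array indexed by latitude, then longitude) are assumptions made for illustration.

```python
import numpy as np


def mean_elevation_around(elevation, lats, lons, lat0, lon0, half_width=0.05):
    """NaN-aware mean of `elevation` within +/- half_width degrees of (lat0, lon0).

    elevation: 2-D array with shape (len(lats), len(lons)).
    """
    lat_mask = np.abs(lats - lat0) <= half_width
    lon_mask = np.abs(lons - lon0) <= half_width
    window = elevation[np.ix_(lat_mask, lon_mask)]
    return float(np.nanmean(window))


# Synthetic 0.3 x 0.3 degree tile: elevation rises linearly with latitude
lats = np.linspace(60.0, 60.3, 301)
lons = np.linspace(15.0, 15.3, 301)
elevation = np.tile((lats - 60.0)[:, None] * 1000.0, (1, lons.size))
elevation[0, 0] = np.nan  # missing values are ignored by nanmean

center_mean = mean_elevation_around(elevation, lats, lons, 60.15, 15.15)
print(center_mean)
```

Using `np.nanmean` rather than `np.mean` keeps missing elevation values from turning the whole window mean into NaN.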

The dataset is meant to facilitate the following modeling pipeline:

- Weather forecasts for a set of 4 neighboring ECMWF points are combined with topography/elevation data and turned into a higher-resolution forecast grid (matching the resolution of the topography data)

- SMHI weather observation data serves as a sparse evaluation point for the produced higher-resolution forecasts
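As a point of reference for the pipeline above, a simple non-learned baseline is bilinear interpolation of the 4 corner forecasts onto the fine grid, optionally followed by an elevation correction for temperature. The sketch below is only an illustration, not the method used in the OWGRE project. The function names, the reading of the `corner_index` labels (`llc`, `lrc`, `ulc`, `urc`) as lower-left, lower-right, upper-left and upper-right corners, and the constant lapse rate of 6.5 K per km are all assumptions.

```python
import numpy as np

LAPSE_RATE_K_PER_M = 0.0065  # ASSUMED constant lapse rate (6.5 K per km)


def bilinear_from_corners(llc, lrc, ulc, urc, n_lat, n_lon):
    """Bilinearly interpolate 4 corner values onto an (n_lat, n_lon) grid.

    Row 0 is the lower (southern) edge, column 0 the left (western) edge.
    """
    y = np.linspace(0.0, 1.0, n_lat)[:, None]  # 0 at lower edge, 1 at upper
    x = np.linspace(0.0, 1.0, n_lon)[None, :]  # 0 at left edge, 1 at right
    return (
        llc * (1 - x) * (1 - y)
        + lrc * x * (1 - y)
        + ulc * (1 - x) * y
        + urc * x * y
    )


def downscale_temperature(corner_temps, corner_elevs, fine_elev):
    """Interpolate temperature, then shift it by the elevation difference
    between the fine topography and the interpolated coarse elevation."""
    n_lat, n_lon = fine_elev.shape
    temp = bilinear_from_corners(*corner_temps, n_lat, n_lon)
    coarse_elev = bilinear_from_corners(*corner_elevs, n_lat, n_lon)
    return temp - LAPSE_RATE_K_PER_M * (fine_elev - coarse_elev)


# Synthetic example, corners ordered (llc, lrc, ulc, urc)
corner_temps = (270.0, 272.0, 274.0, 276.0)  # K
corner_elevs = (100.0, 100.0, 100.0, 100.0)  # m, mean elevation per corner
fine_elev = np.full((5, 5), 100.0)
fine_elev[2, 2] = 300.0  # a 200 m hill in the middle of the chunk

fine_temp = downscale_temperature(corner_temps, corner_elevs, fine_elev)
print(fine_temp)
```

The grid cell under the hill comes out colder than its purely interpolated value, which is the kind of topography-driven detail the coarse forecast cannot resolve. A learned model would replace `downscale_temperature`, and the SMHI observation at the station location would be compared against the corresponding fine-grid cell.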

License and terms

The terms of usage of the source data and their corresponding licenses:

- SMHI weather observation data: (Creative Commons Attribution 4.0)

- ECMWF historical weather forecasts:

- Copernicus DEM GLO-30:

Acknowledgment

The dataset has been developed as part of the OWGRE project, funded within the ERA-Net SES Joint Call 2020 for transnational research, development and demonstration projects.

Data details & samples

SMHI weather observation data

SMHI weather observation data is stored as CSV files, one file per weather parameter and weather observation station. See the sample below:

Datum;Tid (UTC);Lufttemperatur;Kvalitet

2020-01-01;06:00:00;-2.2;G

2020-01-01;12:00:00;-2.7;G

2020-01-01;18:00:00;0.2;G

2020-01-02;06:00:00;0.3;G

2020-01-02;12:00:00;4.3;G

2020-01-02;18:00:00;4.9;G

2020-01-03;06:00:00;6.0;G

2020-01-03;12:00:00;2.7;G

2020-01-03;18:00:00;1.7;G

2020-01-04;06:00:00;-4.6;G

2020-01-04;12:00:00;0.6;G

2020-01-04;18:00:00;-5.9;G

2020-01-05;06:00:00;-7.9;G

2020-01-05;12:00:00;-3.1;G
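The sample above uses `;` as the separator and Swedish column headers (`Datum` = date, `Tid (UTC)` = time, `Lufttemperatur` = air temperature, `Kvalitet` = quality). A minimal sketch of parsing it into a time-indexed frame follows; the English column names are chosen here for illustration and are not part of the dataset.

```python
import io

import pandas as pd

sample = """Datum;Tid (UTC);Lufttemperatur;Kvalitet
2020-01-01;06:00:00;-2.2;G
2020-01-01;12:00:00;-2.7;G
2020-01-01;18:00:00;0.2;G
"""

df = pd.read_csv(io.StringIO(sample), sep=';')

# Combine the date and time columns into one timezone-aware UTC timestamp
df['time'] = pd.to_datetime(df['Datum'] + ' ' + df['Tid (UTC)'], utc=True)

# Rename to English (illustrative names) and index by timestamp
df = (
    df.rename(columns={'Lufttemperatur': 'air_temperature', 'Kvalitet': 'quality'})
      .drop(columns=['Datum', 'Tid (UTC)'])
      .set_index('time')
)
print(df)
```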

ECMWF historical weather forecasts

Historical ECMWF weather forecasts contain a number of forecasted weather variables at the 4 nearest grid points around each SMHI observation station:

<xarray.Dataset>

Dimensions: (reference_time: 2983, valid_time: 54,

corner_index: 4, station_index: 275)

Coordinates:

* reference_time (reference_time) datetime64[ns] 2020-01-01 ... 20...

latitude (corner_index, station_index) float64 55.3 ... 68.7

longitude (corner_index, station_index) float64 ...

point (corner_index, station_index) int64 ...

* valid_time (valid_time) int32 0 1 2 3 4 5 ... 48 49 50 51 52 53

* station_index (station_index) int64 0 1 2 3 4 ... 271 272 273 274

* corner_index (corner_index) <U3 'llc' 'lrc' 'ulc' 'urc'

station_names (station_index) <U29 ...

station_ids (station_index) int64 ...

Data variables:

PressureReducedMSL (reference_time, valid_time, corner_index, station_index) float32 ...

RelativeHumidity (reference_time, valid_time, corner_index, station_index) float32 ...

SolarDownwardRadiation (reference_time, valid_time, corner_index, station_index) float64 ...

Temperature (reference_time, valid_time, corner_index, station_index) float32 ...

WindDirection:10 (reference_time, valid_time, corner_index, station_index) float32 ...

WindSpeed:10 (reference_time, valid_time, corner_index, station_index) float32 ...

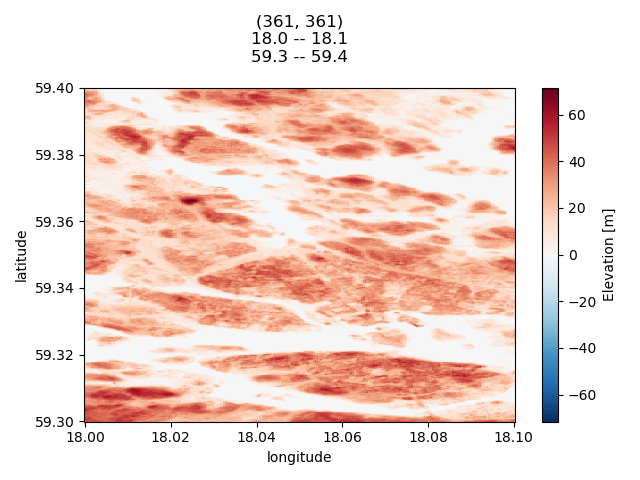

Topography data

The topography data is provided in chunks cut around each of the SMHI stations. The corners of each chunk correspond to ECMWF forecast grid points.

Each chunk consists of approximately 361 x 361 points, spanning 0.1° x 0.1°. (Some of the values across longitudes are NaN since apparently the Earth is not square [citation needed]).
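The numbers above imply a grid spacing of about one arc second. The short calculation below works this out and shows one way to measure how much of a chunk is NaN; the conversion of roughly 111 km per degree of latitude is an approximation, and the small array stands in for a real chunk.

```python
import numpy as np

n_points = 361
extent_deg = 0.1

spacing_deg = extent_deg / (n_points - 1)  # 361 points give 360 intervals
spacing_arcsec = spacing_deg * 3600        # about 1 arc second
spacing_m = spacing_deg * 111_000          # approximate north-south distance in meters

# Stand-in for a topography chunk whose last longitude column is missing
chunk = np.arange(12, dtype=float).reshape(3, 4)
chunk[:, -1] = np.nan
valid_fraction = np.mean(~np.isnan(chunk))

print(spacing_arcsec, spacing_m, valid_fraction)
```

When aggregating over a chunk, NaN-aware reductions such as `np.nanmean` avoid propagating the missing values.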

Loading the data

The dependencies can be installed through conda or mamba in the following way:

mamba create -n ourenv python pandas xarray dask netCDF4

Below, for a given SMHI weather observation station, we read the following data:

- weather observations

- historical ECMWF weather forecasts

- topography/elevation

import pickle

import pandas as pd
import xarray as xr

smhi_weather_observation_station_index = 153

# Look up the SMHI station id that corresponds to the station index
smhi_weather_observation_station_id = pd.read_csv(
    './smhi_weather_observation_stations.csv',
    index_col='station_index',
).loc[smhi_weather_observation_station_index, 'id']  # 102540

weather_parameter = 1  # temperature

# NOTE: Need to unzip the file first!
smhi_observation_data = pd.read_csv(
    './weather_observations/smhi_observations_from_2020/'
    f'parameter_{weather_parameter}'
    f'/smhi_weather_param_{weather_parameter}_station_{smhi_weather_observation_station_id}.csv',
    sep=';',
)
print('SMHI observation data:')
print(smhi_observation_data)

# ECMWF forecasts at the 4 grid points around the selected station
ecmwf_data = xr.open_dataset(
    './ecmwf_historical_weather_forecasts/ECMWF_HRES-reindexed.nc'
).sel(station_index=smhi_weather_observation_station_index)
print('ECMWF data:')
print(ecmwf_data)

# Topography chunk enclosed by the same 4 ECMWF grid points
topography_data = xr.open_dataset(
    'topography/sweden_chunks_copernicus-dem-30m'
    f'/topography_chunk_station_index-{smhi_weather_observation_station_index}.nc'
)
print('Topography chunk:')
print(topography_data)

# Mean elevations around the ECMWF grid points; the context manager closes the file
with open('./topography/ecmwf_grid_mean_elevations.pkl', 'rb') as f:
    mean_elevations = pickle.load(f)
print('Mean elevation:')
print(mean_elevations[0])
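To use the observations as evaluation points, forecasts have to be matched to observation timestamps. The sketch below does this on synthetic data with pandas. It assumes that `valid_time` is a lead time in hours relative to `reference_time`, which is not stated here and should be checked against the file's metadata. The column names are hypothetical, and both series are assumed to share the same unit.

```python
import pandas as pd

# Synthetic forecast for one reference time and one grid point
forecast = pd.DataFrame({
    'reference_time': pd.to_datetime(['2020-01-01 00:00'] * 3, utc=True),
    'valid_time': [6, 12, 18],  # ASSUMED: lead time in hours
    'forecast_temp': [-1.5, -2.0, 0.5],
})
# Absolute timestamp each forecast value refers to
forecast['time'] = forecast['reference_time'] + pd.to_timedelta(
    forecast['valid_time'], unit='h'
)

# Synthetic observations at the station
observed = pd.DataFrame({
    'time': pd.to_datetime(
        ['2020-01-01 06:00', '2020-01-01 12:00', '2020-01-01 18:00'], utc=True
    ),
    'observed_temp': [-2.2, -2.7, 0.2],
})

# Keep only timestamps present in both, then compute the mean absolute error
merged = forecast.merge(observed, on='time', how='inner')
mae = (merged['forecast_temp'] - merged['observed_temp']).abs().mean()
print(merged[['time', 'forecast_temp', 'observed_temp']])
print('MAE:', mae)
```

With the real data, the forecast column would come from the downscaled grid cell at the station location, and any unit mismatch between forecasts and observations would need to be resolved before computing errors.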
