---
license: other
license_name: idl-train
license_link: LICENSE
task_categories:
- image-to-text
size_categories:
- 10M<n<100M
---

Dataset Card for Industry Documents Library (IDL)

Dataset Description

- Point of Contact from curators: Kate Tasker, UCSF

- Point of Contact Hugging Face: Pablo Montalvo

Dataset Summary

Industry Documents Library (IDL) is a document dataset filtered from the UCSF Industry Documents Library, with 19 million pages kept as valid samples. Each document consists of a PDF, a TIFF image rendering the same contents, a JSON file containing extensive Textract OCR annotations from the idl_data project, and a .ocr file containing the original, older OCR annotation. Each PDF contains between 1 and 3,000 pages.

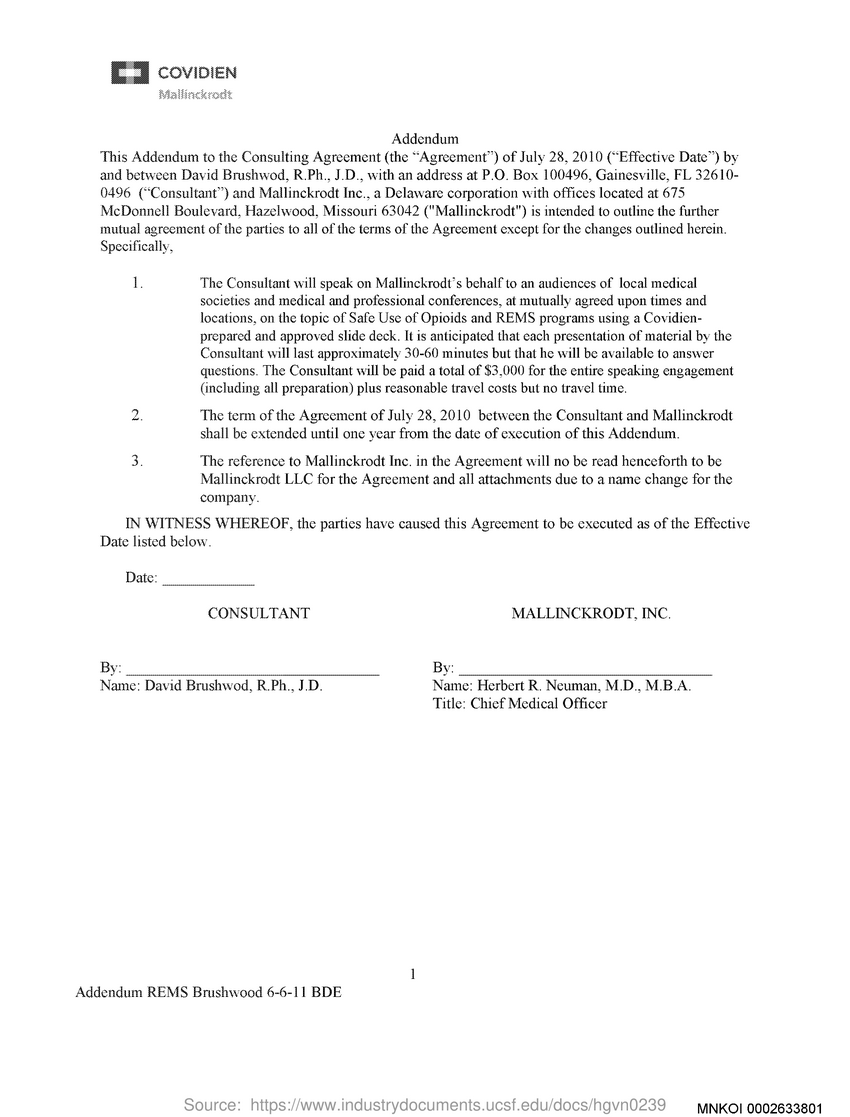

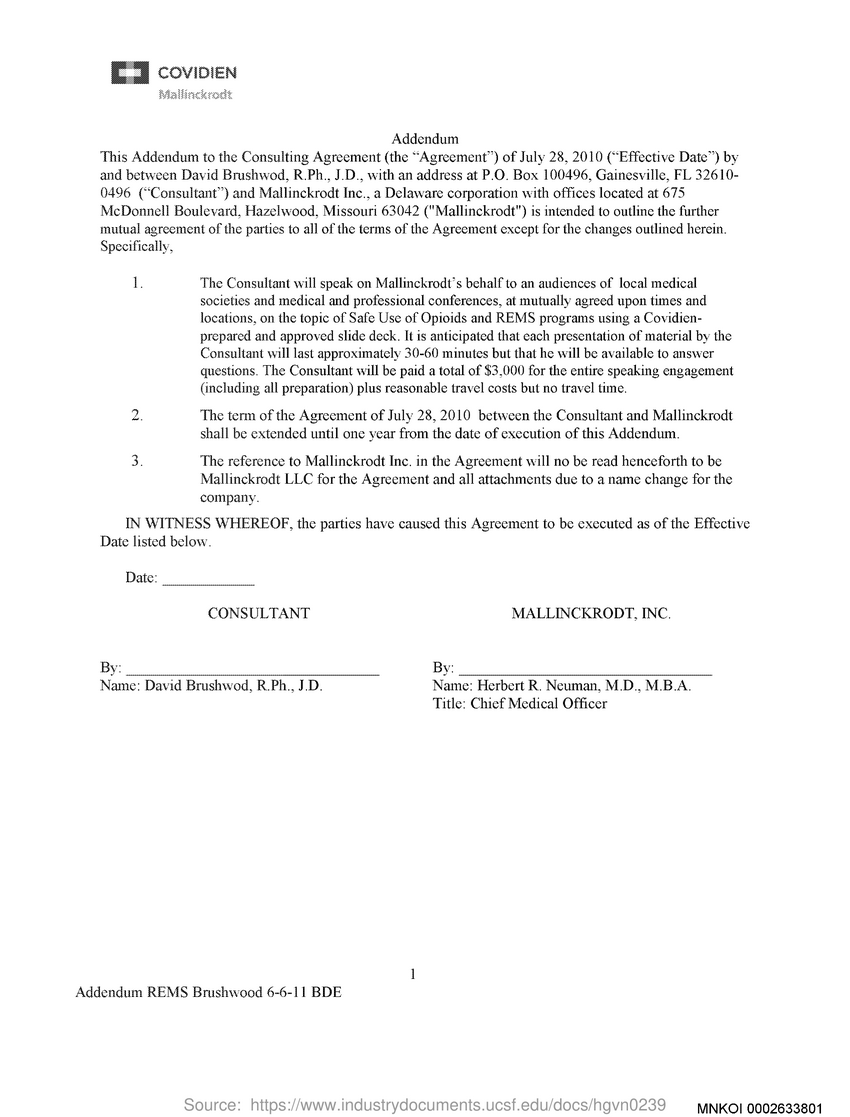

An example page of one pdf document from the Industry Documents Library.

This instance of IDL is in webdataset .tar format.
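In the WebDataset layout, each shard is a plain tar archive in which consecutive files sharing a basename form one sample (its `__key__`), and the extension becomes the field name (`pdf`, `tif`, `json`, `ocr`). The following is a minimal standard-library sketch of that grouping, assuming the key is everything before the first dot of the file's basename. `split_key_ext` and `iter_samples` are hypothetical helpers, not part of the webdataset library, whose own handling may differ in details.

```python
import io
import tarfile
from typing import Dict, Iterator, Tuple


def split_key_ext(name: str) -> Tuple[str, str]:
    """Split 'dir/doc.json' into ('dir/doc', 'json') at the first dot of the basename."""
    head, _, base = name.rpartition("/")
    stem, _, ext = base.partition(".")
    return (f"{head}/{stem}" if head else stem), ext


def iter_samples(fileobj) -> Iterator[Dict[str, object]]:
    """Yield one dict per sample, grouping consecutive tar members that share a key."""
    current: Dict[str, object] = {}
    # Stream mode: members are read in order, without seeking
    with tarfile.open(fileobj=fileobj, mode="r|*") as tar:
        for member in tar:
            if not member.isfile():
                continue
            key, ext = split_key_ext(member.name)
            if current and current["__key__"] != key:
                yield current
                current = {}
            current["__key__"] = key
            current[ext] = tar.extractfile(member).read()
    if current:
        yield current


if __name__ == "__main__":
    # Build a tiny in-memory shard with two samples, then read it back
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tar:
        for name, data in [("doc1.pdf", b"%PDF-1.4"), ("doc1.json", b"{}"), ("doc2.pdf", b"%PDF-1.7")]:
            info = tarfile.TarInfo(name)
            info.size = len(data)
            tar.addfile(info, io.BytesIO(data))
    buf.seek(0)
    for sample in iter_samples(buf):
        print(sample["__key__"], sorted(k for k in sample if k != "__key__"))
```

Grouping only consecutive members mirrors the WebDataset convention that all files of a sample are stored next to each other, so a shard can be read as a stream.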

Usage with chug

Check out chug, our optimized library for sharded dataset loading!

```python
import chug

task_cfg = chug.DataTaskDocReadCfg(page_sampling='all')
data_cfg = chug.DataCfg(
    source='pixparse/idl-wds',
    split='train',
    batch_size=None,
    format='hfids',
    num_workers=0,
)
data_loader = chug.create_loader(
    data_cfg,
    task_cfg,
)
sample = next(iter(data_loader))
```

Usage with datasets

This dataset can also be used with the webdataset library or with current releases of Hugging Face datasets.

Here is an example using the "streaming" parameter. We do, however, recommend downloading the dataset to save bandwidth.

```python
from datasets import load_dataset

dataset = load_dataset('pixparse/idl-wds', streaming=True)
print(next(iter(dataset['train'])).keys())
# dict_keys(['__key__', '__url__', 'json', 'ocr', 'pdf', 'tif'])
```

For faster downloads, you can use the huggingface_hub library directly. Make sure hf_transfer is installed before downloading, and check that you have enough local disk space.

```python
import os

# Enable the hf_transfer backend; this must be set before huggingface_hub is imported
os.environ["HF_HUB_ENABLE_HF_TRANSFER"] = "1"

from huggingface_hub import HfApi, logging

# logging.set_verbosity_debug()

hf = HfApi()
hf.snapshot_download("pixparse/idl-wds", repo_type="dataset", local_dir_use_symlinks=False)
```

Further, a metadata file, _pdfa-english-train-info-minimal.json, contains the list of samples per shard (identified by their shared basename, with a .json or .pdf extension), as well as the count of files per shard.

Words and lines document metadata

We first obtained the raw data from the IDL API and combined it with the idl_data annotations. This information was then reshaped into lines organized in reading order, stored under the key lines. Non-reshaped word and bounding box information is kept under the word key, should users want to apply their own heuristic.

We obtain an approximate reading order by looking for frequency peaks in the leftmost x-coordinate of words. A frequency peak means that many lines start at the same horizontal position. We then keep track of the x-coordinate of each column identified this way. If fewer than two peaks are found, the document is treated as single-column and read in plain order. The code used to detect columns is reproduced below.

```python
import numpy as np
import scipy.ndimage
import scipy.signal


def get_columnar_separators(page, min_prominence=0.3, num_bins=10, kernel_width=1):
    """
    Identifies the x-coordinates that best separate columns by analyzing the derivative of a histogram
    of the 'left' values (xmin) of bounding boxes.

    Args:
        page (dict): Page data with 'bbox' containing bounding boxes of words.
        min_prominence (float): The required prominence of peaks in the histogram.
        num_bins (int): Number of bins to use for the histogram.
        kernel_width (int): The width of the Gaussian kernel used for smoothing the histogram.

    Returns:
        separators (list): The x-coordinates that separate the columns, if any.
    """
    try:
        left_values = [b[0] for b in page['bbox']]
        hist, bin_edges = np.histogram(left_values, bins=num_bins)
        # Cast to float so the Gaussian smoothing is not truncated to integer counts
        hist = scipy.ndimage.gaussian_filter1d(hist.astype(float), kernel_width)
        # Pad both ends with the minimum so that border bins can still be detected as peaks
        min_val = min(hist)
        hist = np.insert(hist, [0, len(hist)], min_val)
        bin_width = bin_edges[1] - bin_edges[0]
        bin_edges = np.insert(bin_edges, [0, len(bin_edges)], [bin_edges[0] - bin_width, bin_edges[-1] + bin_width])
        peaks, _ = scipy.signal.find_peaks(hist, prominence=min_prominence * np.max(hist))
        derivatives = np.diff(hist)
        separators = []
        if len(peaks) > 1:
            # This finds the index of the maximum derivative value between peaks
            # which indicates peaks after trough --> column
            for i in range(len(peaks) - 1):
                peak_left = peaks[i]
                peak_right = peaks[i + 1]
                max_deriv_index = np.argmax(derivatives[peak_left:peak_right]) + peak_left
                separator_x = bin_edges[max_deriv_index + 1]
                separators.append(separator_x)
    except Exception:
        separators = []
    return separators
```

This way, columnar documents can be separated more reliably. This is a basic heuristic, but it should improve the overall readability of the documents.
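Once separators are known, one plausible way to turn them into a reading order is to assign each line to a column by its left x-coordinate, sort each column top to bottom, and read the columns left to right. The sketch below assumes exactly that; `reading_order` is a hypothetical helper that takes the output of get_columnar_separators as input, and the ordering actually used to build the lines key may differ, for example in how full-width lines spanning several columns are handled.

```python
import bisect
from typing import List, Sequence


def reading_order(bboxes: Sequence[Sequence[float]], separators: Sequence[float]) -> List[int]:
    """Return line indices in approximate reading order.

    bboxes: [left, top, width, height] boxes in relative page coordinates.
    separators: x-coordinates between columns (may be empty for single-column pages).
    """
    seps = sorted(separators)

    def sort_key(i: int):
        left, top = bboxes[i][0], bboxes[i][1]
        column = bisect.bisect_right(seps, left)  # 0 = leftmost column
        return (column, top, left)

    return sorted(range(len(bboxes)), key=sort_key)


if __name__ == "__main__":
    boxes = [
        [0.10, 0.10, 0.30, 0.02],  # left column, first line
        [0.55, 0.10, 0.30, 0.02],  # right column, first line
        [0.10, 0.20, 0.30, 0.02],  # left column, second line
        [0.55, 0.20, 0.30, 0.02],  # right column, second line
    ]
    print(reading_order(boxes, []))     # [0, 1, 2, 3]: row by row
    print(reading_order(boxes, [0.5]))  # [0, 2, 1, 3]: left column first
```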

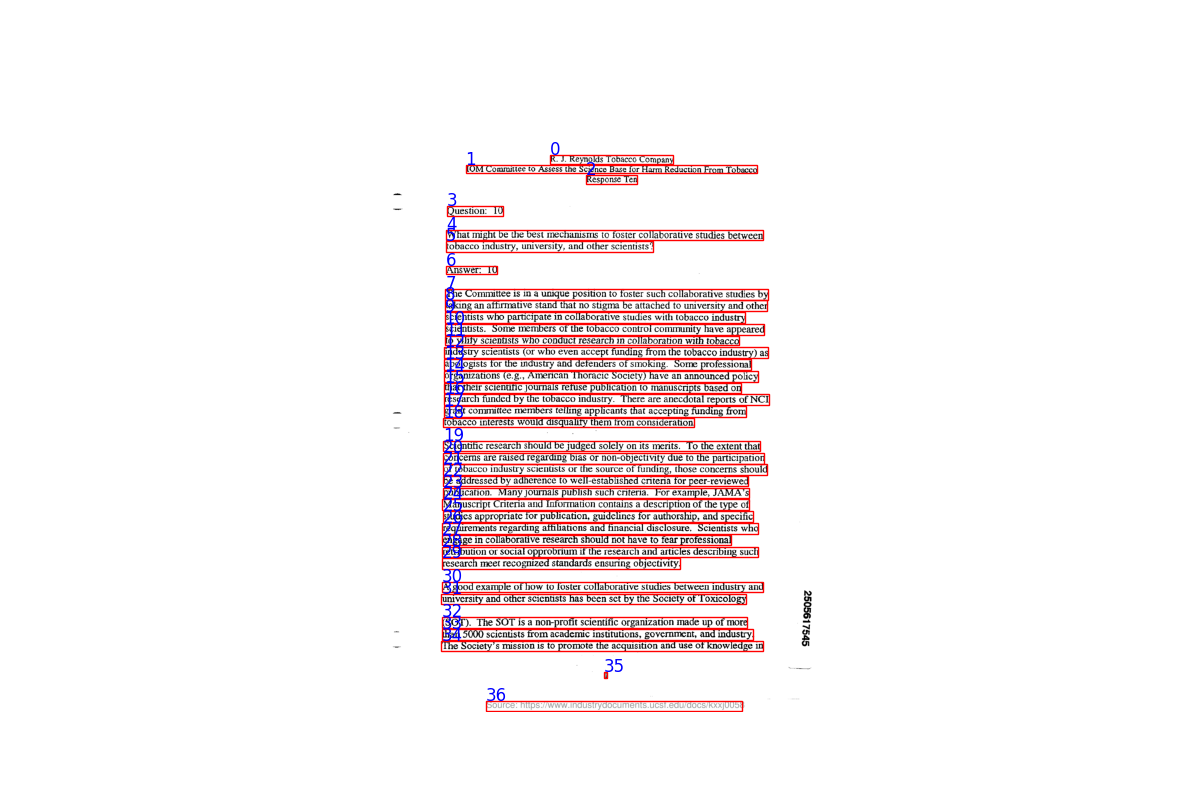

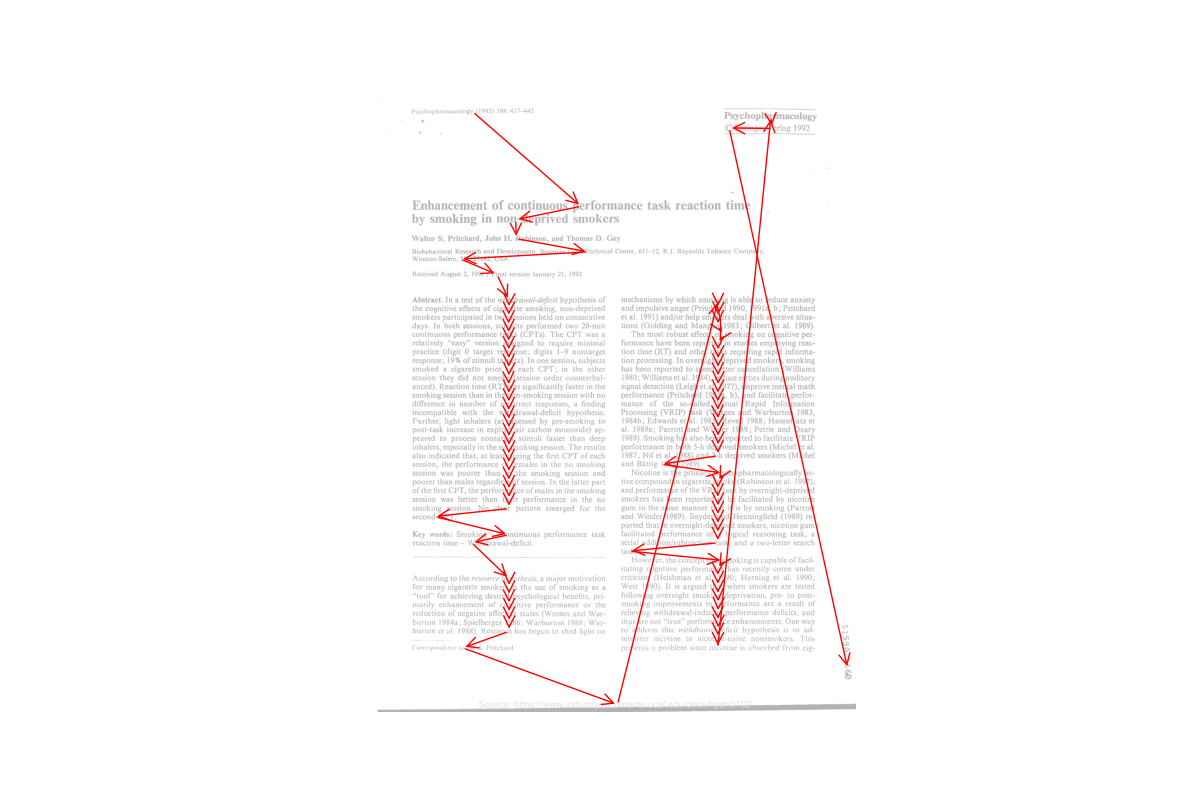

Standard reading order for a single-column document. On the left, bounding boxes are ordered, and on the right a rendition of the corresponding reading order is given.

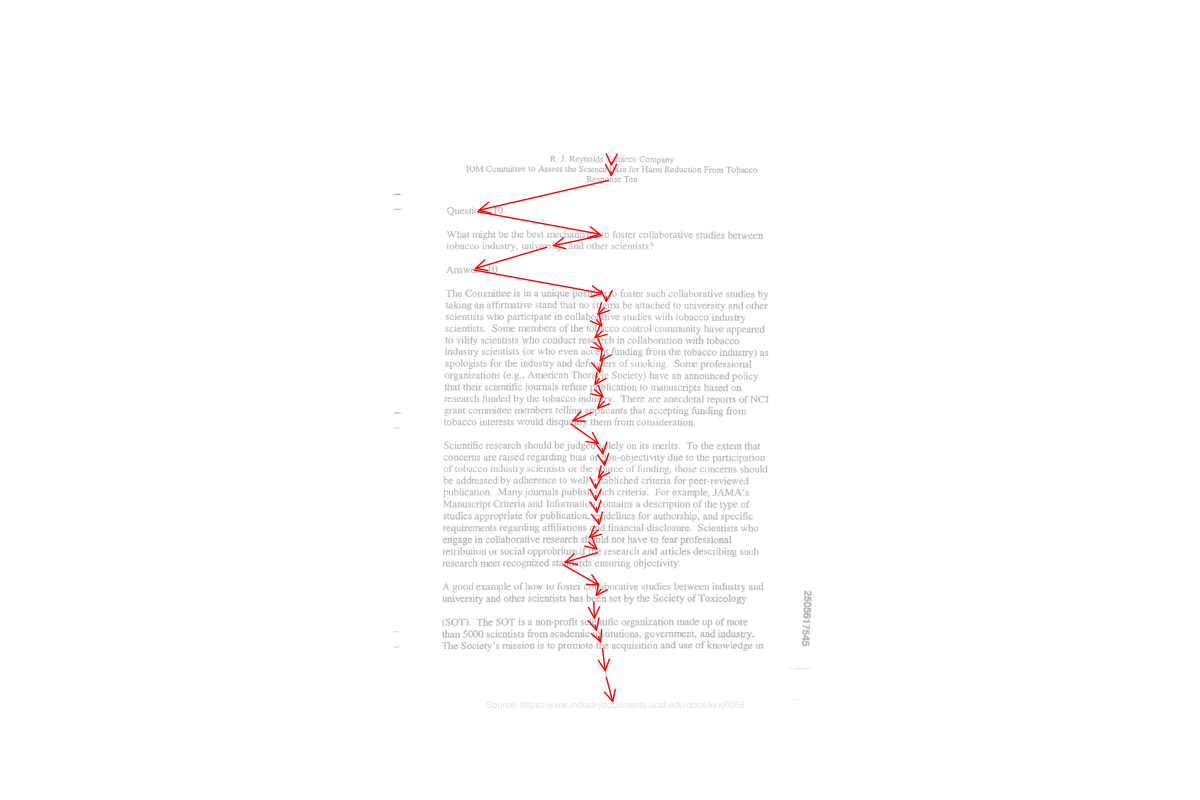

Heuristic-driven columnar reading order for a two-column document. On the left, bounding boxes are ordered, and on the right a rendition of the corresponding reading order is given. Some inaccuracies remain, but the overall reading order is preserved.

We store per-shard statistics on the number of pages and the number of valid samples. A valid sample is one that can be encoded and then decoded; we ran this check on every sample.
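The card does not specify which encoder and decoder were used for this check. As an illustration only, the sketch below assumes that "encoded then decoded" means writing a sample's fields into a tar archive and reading them back unchanged, plus two basic sanity checks (the JSON parses and has a `pages` list, the PDF bytes start with a `%PDF-` header). `roundtrip_tar` and `is_valid_sample` are hypothetical helpers.

```python
import io
import json
import tarfile
from typing import Dict


def roundtrip_tar(sample: Dict[str, bytes], key: str) -> Dict[str, bytes]:
    """Encode the fields as '<key>.<ext>' tar members in memory, then decode them back."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tar:
        for ext, data in sample.items():
            info = tarfile.TarInfo(f"{key}.{ext}")
            info.size = len(data)
            tar.addfile(info, io.BytesIO(data))
    buf.seek(0)
    with tarfile.open(fileobj=buf, mode="r") as tar:
        return {m.name.split(".", 1)[1]: tar.extractfile(m).read() for m in tar.getmembers()}


def is_valid_sample(sample: Dict[str, bytes], key: str = "sample") -> bool:
    """Hypothetical check: fields survive the round trip, the JSON parses and has a
    'pages' list, and the PDF bytes start with a PDF header."""
    try:
        if roundtrip_tar(sample, key) != sample:
            return False
        meta = json.loads(sample["json"].decode("utf-8"))
        return (
            isinstance(meta, dict)
            and isinstance(meta.get("pages"), list)
            and sample["pdf"].startswith(b"%PDF-")
        )
    except (KeyError, ValueError, tarfile.TarError):
        # Missing fields, invalid UTF-8 or JSON, or a broken archive
        return False
```

A real pipeline would more likely try to open the PDF and TIFF with an actual decoder; the header check here only stands in for that step.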

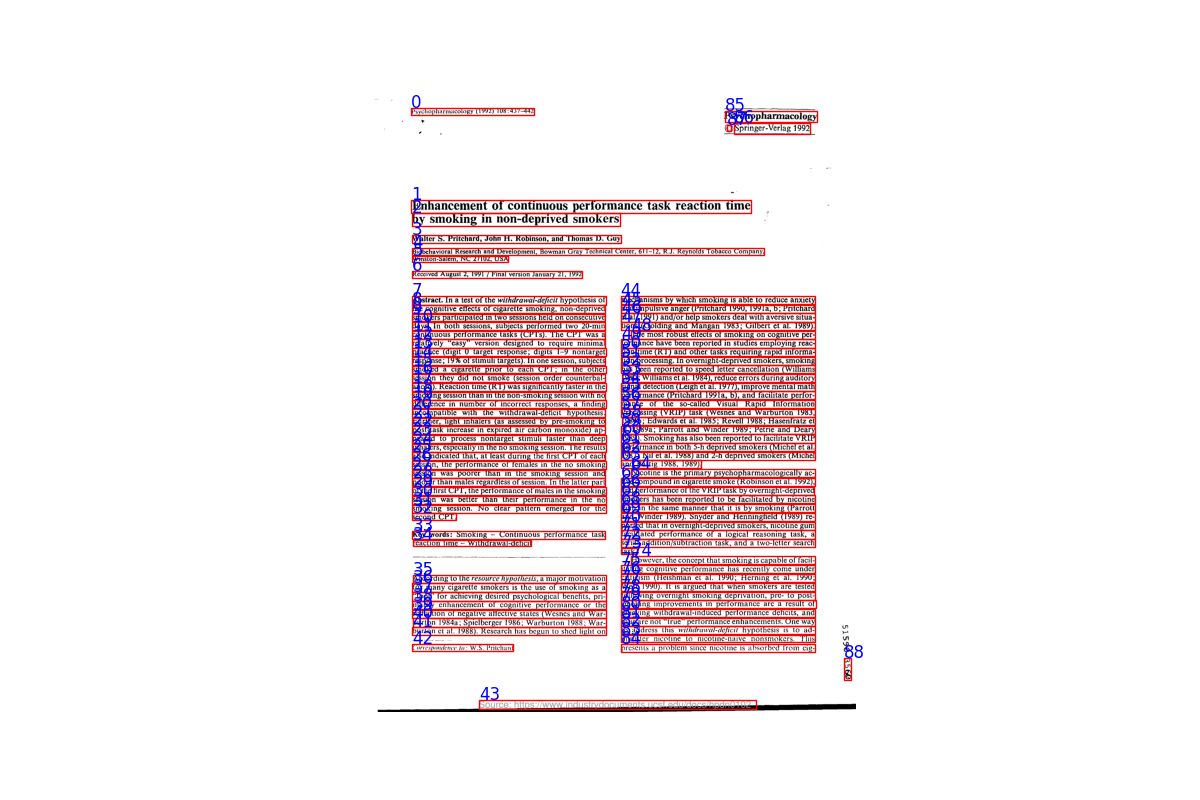

Data, metadata and statistics.

An example page of one pdf document from the Industry Documents Library.

The metadata for each document is formatted as follows: each PDF is paired with a JSON file with the structure below. Entries have been shortened for readability.

```json
{
  "pages": [
    {
      "text": [
        "COVIDIEN",
        "Mallinckrodt",
        "Addendum",
        "This Addendum to the Consulting Agreement (the \"Agreement\") of July 28, 2010 (\"Effective Date\") by",
        "and between David Brushwod, R.Ph., J.D., with an address at P.O. Box 100496, Gainesville, FL 32610-"
      ],
      "bbox": [
        [0.185964, 0.058857, 0.092199, 0.011457],
        [0.186465, 0.079529, 0.087209, 0.009247],
        [0.459241, 0.117854, 0.080015, 0.011332],
        [0.117109, 0.13346, 0.751004, 0.014365],
        [0.117527, 0.150306, 0.750509, 0.012954]
      ],
      "poly": [
        [{"X": 0.185964, "Y": 0.058857}, {"X": 0.278163, "Y": 0.058857}, {"X": 0.278163, "Y": 0.070315}, {"X": 0.185964, "Y": 0.070315}],
        [{"X": 0.186465, "Y": 0.079529}, {"X": 0.273673, "Y": 0.079529}, {"X": 0.273673, "Y": 0.088777}, {"X": 0.186465, "Y": 0.088777}],
        [{"X": 0.459241, "Y": 0.117854}, {"X": 0.539256, "Y": 0.117854}, {"X": 0.539256, "Y": 0.129186}, {"X": 0.459241, "Y": 0.129186}],
        [{"X": 0.117109, "Y": 0.13346}, {"X": 0.868113, "Y": 0.13346}, {"X": 0.868113, "Y": 0.147825}, {"X": 0.117109, "Y": 0.147825}],
        [{"X": 0.117527, "Y": 0.150306}, {"X": 0.868036, "Y": 0.150306}, {"X": 0.868036, "Y": 0.163261}, {"X": 0.117527, "Y": 0.163261}]
      ],
      "score": [
        0.9939, 0.5704, 0.9961, 0.9898, 0.9935
      ]
    }
  ]
}
```

The top-level key, pages, is a list of every page in the document; the example above shows only one page. text is a list of the lines on the page, and each line's bounding box is stored at the same index in bbox. bbox contains the bounding box coordinates in (left, top, width, height) format, relative to the page size. poly is the corresponding polygon, given as its four corner points.

score is the Textract confidence score for each line.
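Because bbox is (left, top, width, height) relative to the page, the matching poly corners can be recomputed from it, and the box can be scaled to the pixel size of a rendered page. A minimal sketch; `bbox_to_poly` and `bbox_to_pixels` are hypothetical helpers, and the corner order (top-left, top-right, bottom-right, bottom-left) is taken from the example above.

```python
from typing import Dict, List, Sequence, Tuple


def bbox_to_poly(bbox: Sequence[float]) -> List[Dict[str, float]]:
    """Corners of a relative [left, top, width, height] box, in the order used by 'poly'."""
    left, top, width, height = bbox
    right, bottom = left + width, top + height
    return [
        {"X": left, "Y": top},
        {"X": right, "Y": top},
        {"X": right, "Y": bottom},
        {"X": left, "Y": bottom},
    ]


def bbox_to_pixels(bbox: Sequence[float], page_width: int, page_height: int) -> Tuple[int, int, int, int]:
    """Convert a relative box to absolute (x0, y0, x1, y1) pixel coordinates."""
    left, top, width, height = bbox
    return (
        round(left * page_width),
        round(top * page_height),
        round((left + width) * page_width),
        round((top + height) * page_height),
    )
```

Applied to the first line of the example ("COVIDIEN"), bbox_to_poly reproduces the listed polygon up to rounding in the sixth decimal.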

Data Splits

Train

idl-train-*.tar: downloaded on 2023/12/16

- 3,000 shards, 3,144,726 samples, 19,174,595 pages
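Dividing the split statistics gives rough per-shard and per-document averages, which may help when deciding how many shards to stream or download. This is only arithmetic on the figures above:

```python
SHARDS = 3_000
SAMPLES = 3_144_726
PAGES = 19_174_595

samples_per_shard = SAMPLES / SHARDS  # ~1048.2 documents per shard
pages_per_shard = PAGES / SHARDS      # ~6391.5 pages per shard
pages_per_sample = PAGES / SAMPLES    # ~6.1 pages per document on average

print(f"{samples_per_shard:.1f} {pages_per_shard:.1f} {pages_per_sample:.2f}")
```

Since a single PDF can hold up to 3,000 pages, individual shards may deviate considerably from these averages.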

Additional Information

Dataset Curators

Pablo Montalvo, Ross Wightman

Licensing Information

While the Industry Documents Library is a public archive of documents and audiovisual materials, companies or individuals hold the rights to the information they created, meaning material cannot be “substantially” reproduced in books or other media without the copyright holder’s permission.

The use of copyrighted material, including reproduction, is governed by United States copyright law (Title 17, United States Code). The law may permit the “fair use” of a copyrighted work, including the making of a photocopy, “for purposes such as criticism, comment, news reporting, teaching (including multiple copies for classroom use), scholarship or research.” 17 U.S.C. § 107.

The Industry Documents Library makes its collections available under court-approved agreements with the rightsholders or under the fair use doctrine, depending on the collection.

According to the US Copyright Office, when determining whether a particular use comes under “fair use” you must consider the following:

- the purpose and character of the use, including whether it is of commercial nature or for nonprofit educational purposes;
- the nature of the copyrighted work itself;
- how much of the work you are using in relation to the copyrighted work as a whole (1 page of a 1000 page work or 1 print advertisement vs. an entire 30 second advertisement);
- the effect of the use upon the potential market for or value of the copyrighted work. (For additional information see the US Copyright Office Fair Use Index).

Each user of this website is responsible for ensuring compliance with applicable copyright laws. Persons obtaining, or later using, a copy of copyrighted material in excess of “fair use” may become liable for copyright infringement. By accessing this website, the user agrees to hold harmless the University of California, its affiliates and their directors, officers, employees and agents from all claims and expenses, including attorneys’ fees, arising out of the use of this website by the user.

For more in-depth information on copyright and fair use, visit the Stanford University Libraries’ Copyright and Fair Use website.

If you hold copyright to a document or documents in our collections and have concerns about our inclusion of this material, please see the IDL Take-Down Policy or contact us with any questions.

According to the Industry Documents Library API, the files in this dataset have the following permission counts, showing that all of them are now public (none are currently "confidential" or "privileged", only formerly so).

```python
{'public/no restrictions': 3005133,
 'public/formerly confidential': 264978,
 'public/formerly privileged': 30063,
 'public/formerly privileged/formerly confidential': 669,
 'public/formerly confidential/formerly privileged': 397,
}
```
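The statement that every file is now public can be checked mechanically from these counts. The sketch below assumes that each permission string is a slash-separated list of statuses and that a file is currently restricted only if some status is exactly "confidential" or "privileged", without the "formerly" prefix. `currently_restricted` is a hypothetical helper.

```python
PERMISSIONS = {
    'public/no restrictions': 3005133,
    'public/formerly confidential': 264978,
    'public/formerly privileged': 30063,
    'public/formerly privileged/formerly confidential': 669,
    'public/formerly confidential/formerly privileged': 397,
}


def currently_restricted(permission: str) -> bool:
    """True if any status is 'confidential' or 'privileged' without a 'formerly' prefix."""
    return any(status in ("confidential", "privileged") for status in permission.split("/"))


total = sum(PERMISSIONS.values())
restricted = sum(n for p, n in PERMISSIONS.items() if currently_restricted(p))
print(total, restricted)  # 3301240 0
```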