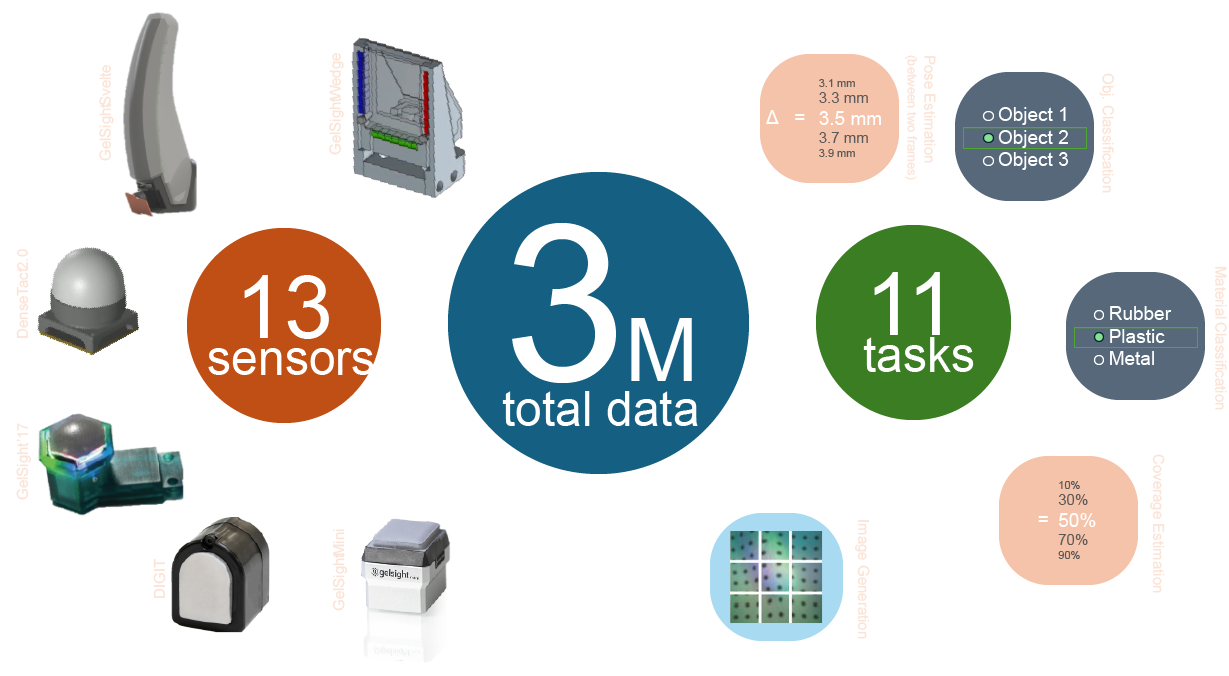

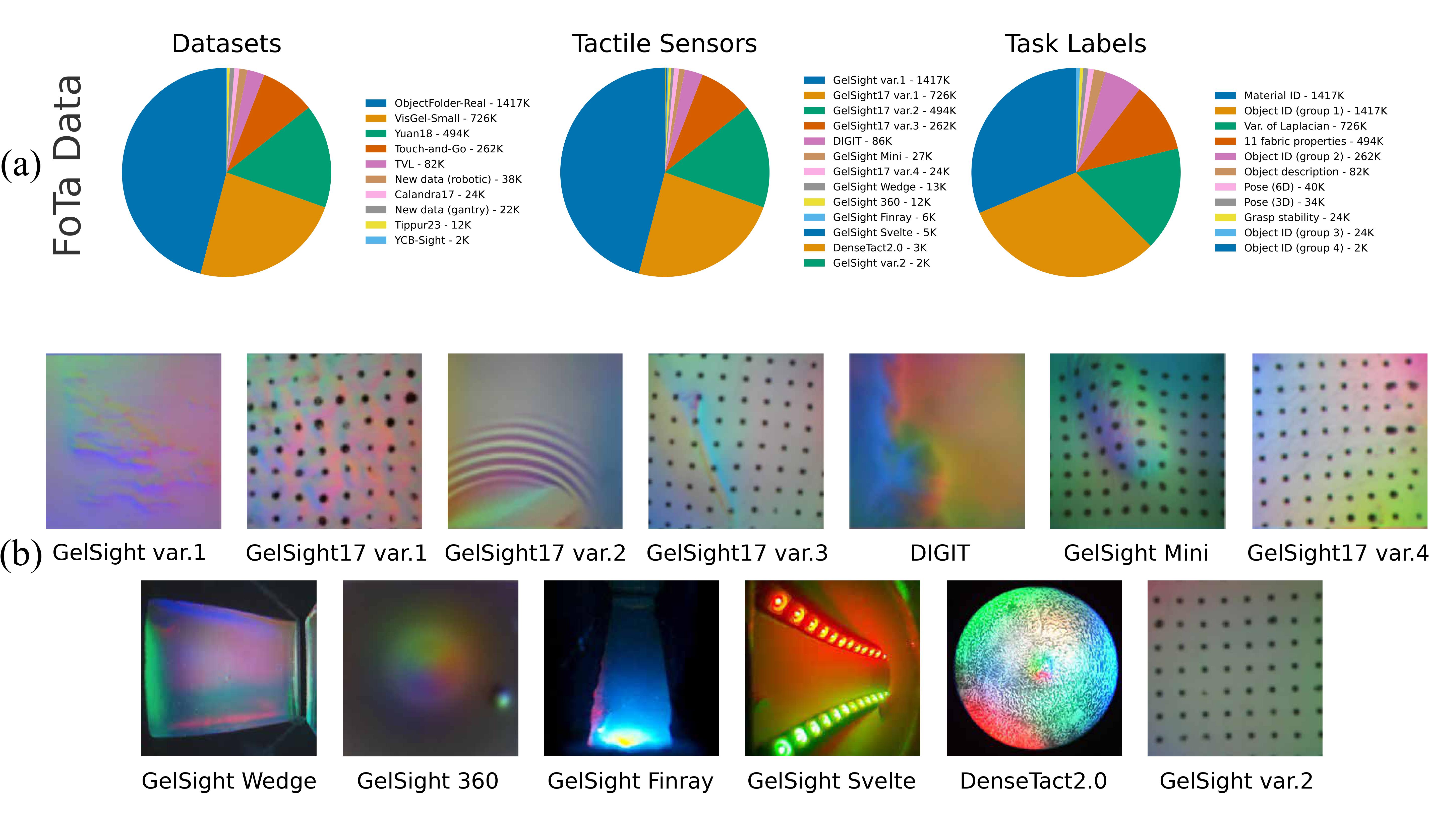

Foundation Tactile (FoTa) - a multi-sensor multi-task large dataset for tactile sensing

This repository stores the FoTa dataset and the pretrained checkpoints of Transferable Tactile Transformers (T3).

Paper | Code | Colab

Jialiang (Alan) Zhao, Yuxiang Ma, Lirui Wang, and Edward H. Adelson

MIT CSAIL

Overview

FoTa was released with Transferable Tactile Transformers (T3) as a large dataset for tactile representation learning. It aggregates some of the largest open-source tactile datasets, and it is released in a unified WebDataset format.

FoTa contains over 3 million tactile images collected from 13 camera-based tactile sensors across 11 tasks.

File structure

After downloading and unzipping, the file structure of FoTa looks like:

    dataset_1
    |---- train
    |     |---- count.txt
    |     |---- data_000000.tar
    |     |---- data_000001.tar
    |     |---- ...
    |---- val
          |---- count.txt
          |---- data_000000.tar
          |---- ...
    dataset_2
    :
    dataset_n

Each .tar file is one shard of a dataset. At runtime, the WebDataset (wds) API automatically loads, shuffles, and unpacks all shards on demand.
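To get a feel for the layout before handing it to WebDataset, the shards of one split can be listed with the standard library alone. This is a minimal sketch, not part of the FoTa or T3 code: the helper names `list_shards` and `read_count_file` are hypothetical, and because the format of count.txt is not described here, its text is returned unparsed.

```python
from pathlib import Path


def list_shards(dataset_dir, split="train"):
    """Return the sorted data_*.tar shard paths for one split of one sub-dataset."""
    split_dir = Path(dataset_dir) / split
    return sorted(split_dir.glob("data_*.tar"))


def read_count_file(dataset_dir, split="train"):
    """Return the raw text of count.txt for a split, or None if it is missing.

    The content format is an unknown here, so nothing is parsed.
    """
    count_path = Path(dataset_dir) / split / "count.txt"
    if not count_path.is_file():
        return None
    return count_path.read_text().strip()
```

For example, `list_shards("dataset_1", "val")` would return the validation shards of `dataset_1` in name order, assuming the directory layout shown above.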

A convenient property of .tar shards, compared with packing all raw data into array files (e.g. .npz or zarr), is that their contents can be inspected without writing any code.

Simply double-click any .tar file to check its contents.

You never need to unpack a .tar manually, since wds does that automatically, but it helps to understand the layout inside a shard:

    data_000000.tar
    |---- file_name_1.jpg
    |---- file_name_1.json
    :
    |---- file_name_n.jpg
    |---- file_name_n.json

The .jpg files are tactile images, and the .json files store task-specific labels.
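The pairing of each image with its label file can be illustrated with Python's `tarfile` module. This is only a sketch of the idea of grouping files that share a base name. It is not the WebDataset implementation, and the names `iter_samples` and `make_demo_shard`, the `demo_label` field, and the placeholder image bytes are all made up for illustration.

```python
import io
import json
import os
import tarfile


def iter_samples(shard):
    """Yield (key, jpg_bytes, label_dict) for each .jpg/.json pair in a shard.

    `shard` is either a path to a .tar file or a binary file object.
    """
    if isinstance(shard, (str, os.PathLike)):
        tar = tarfile.open(shard)
    else:
        tar = tarfile.open(fileobj=shard)
    with tar:
        pending = {}  # key -> {extension: raw bytes} for incomplete samples
        for member in tar:
            if not member.isfile():
                continue
            key, _, ext = member.name.rpartition(".")
            pending.setdefault(key, {})[ext] = tar.extractfile(member).read()
            entry = pending[key]
            if "jpg" in entry and "json" in entry:
                del pending[key]
                yield key, entry["jpg"], json.loads(entry["json"])


def make_demo_shard():
    """Build a tiny in-memory shard with fake image bytes and made-up labels."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tar:
        for i in (1, 2):
            files = (
                (f"file_name_{i}.jpg", f"not-a-real-jpeg-{i}".encode()),
                (f"file_name_{i}.json", json.dumps({"demo_label": i}).encode()),
            )
            for name, payload in files:
                info = tarfile.TarInfo(name)
                info.size = len(payload)
                tar.addfile(info, io.BytesIO(payload))
    buf.seek(0)
    return buf


if __name__ == "__main__":
    for key, jpg_bytes, label in iter_samples(make_demo_shard()):
        print(key, len(jpg_bytes), label)
```

For training, the wds loader remains the intended path. A reader like this is mainly useful for spot-checking the labels stored in a single shard.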

For more details on the methods described in the paper, check out our GitHub repository and Colab tutorial.

Getting started

Check out our Colab for a step-by-step tutorial!

Download and unpack

Download the dataset either through the web interface or with the Python API. To use the Python API, first install the client library:

pip install huggingface_hub

Then, inside a Python script or an IPython session, run the following:

from huggingface_hub import snapshot_download

snapshot_download(repo_id="alanz-mit/FoundationTactile", repo_type="dataset", local_dir=".", local_dir_use_symlinks=False)

The dataset is split into multiple .zip parts. To merge the parts into a single archive and unpack it:

cd dataset

zip -s 0 FoTa_dataset.zip --out unsplit_FoTa_dataset.zip

unzip unsplit_FoTa_dataset.zip
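The same two steps can be scripted from Python. The sketch below assumes the Info-ZIP `zip` command-line tool is installed; the helper names `build_merge_command` and `merge_and_extract` are hypothetical. The merge step is needed first because Python's `zipfile` module cannot open archives that span multiple parts.

```python
import shutil
import subprocess
import zipfile


def build_merge_command(split_zip, merged_zip):
    """Build the same command as above: zip -s 0 <split_zip> --out <merged_zip>."""
    return ["zip", "-s", "0", str(split_zip), "--out", str(merged_zip)]


def merge_and_extract(split_zip, merged_zip, out_dir="."):
    """Merge a split archive into one .zip file, then extract it into out_dir."""
    if shutil.which("zip") is None:
        raise RuntimeError("the 'zip' command-line tool was not found on PATH")
    # Step 1: join all parts into a single, non-split archive.
    subprocess.run(build_merge_command(split_zip, merged_zip), check=True)
    # Step 2: extract the merged archive (equivalent to running unzip).
    with zipfile.ZipFile(merged_zip) as archive:
        archive.extractall(out_dir)
```

Run from inside the `dataset` directory, `merge_and_extract("FoTa_dataset.zip", "unsplit_FoTa_dataset.zip")` mirrors the shell commands above.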

Citation

@article{zhao2024transferable,
    title={Transferable Tactile Transformers for Representation Learning Across Diverse Sensors and Tasks},
    author={Jialiang Zhao and Yuxiang Ma and Lirui Wang and Edward H. Adelson},
    year={2024},
    eprint={2406.13640},
    archivePrefix={arXiv},
}

MIT License.
