
| bbox (dict) | label (string) | color (sequence) | occlusion_percentage (float64) |
|---|---|---|---|
| {"xMax": 276, "xMin": 0, "yMax": 684, "yMin": 373} | car | [142, 48, 13, 255] | 0.04 |
| {"xMax": 1095, "xMin": 997, "yMax": 703, "yMin": 439} | person | [114, 47, 11, 255] | 0.62 |
| {"xMax": 310, "xMin": 285, "yMax": 535, "yMin": 455} | person | [208, 151, 11, 255] | 28.36 |
| {"xMax": 412, "xMin": 335, "yMax": 521, "yMin": 475} | car | [211, 40, 13, 255] | 62.02 |
| {"xMax": 561, "xMin": 524, "yMax": 558, "yMin": 470} | person | [230, 218, 11, 255] | 94.82 |
| {"xMax": 1641, "xMin": 1620, "yMax": 470, "yMin": 416} | person | [231, 63, 11, 255] | 91.05 |
| {"xMax": 1403, "xMin": 1380, "yMax": 496, "yMin": 428} | person | [119, 136, 11, 255] | 7.68 |
| {"xMax": 203, "xMin": 81, "yMax": 747, "yMin": 465} | person | [187, 28, 11, 255] | 54.14 |
| {"xMax": 1235, "xMin": 1142, "yMax": 515, "yMin": 453} | car | [235, 52, 13, 255] | 98.47 |
| {"xMax": 1460, "xMin": 1411, "yMax": 556, "yMin": 395} | person | [210, 195, 11, 255] | 14.37 |
| {"xMax": 1267, "xMin": 1078, "yMax": 640, "yMin": 482} | motorcycle | [25, 32, 17, 255] | 51.17 |
| {"xMax": 1955, "xMin": 1760, "yMax": 480, "yMin": 389} | car | [212, 140, 13, 255] | 99.27 |
| {"xMax": 1092, "xMin": 841, "yMax": 684, "yMin": 497} | motorcycle | [2, 120, 17, 255] | 43.66 |
| {"xMax": 971, "xMin": 680, "yMax": 719, "yMin": 519} | motorcycle | [164, 116, 17, 255] | 0 |
| {"xMax": 1290, "xMin": 1255, "yMax": 519, "yMin": 464} | motorcycle | [96, 224, 17, 255] | 58.6 |
| {"xMax": 1289, "xMin": 1253, "yMax": 505, "yMin": 440} | rider | [96, 224, 12, 255] | 47.1 |
| {"xMax": 1249, "xMin": 1236, "yMax": 493, "yMin": 451} | person | [24, 187, 11, 255] | 7.99 |
| {"xMax": 1759, "xMin": 1704, "yMax": 513, "yMin": 375} | person | [140, 4, 11, 255] | 0 |
| {"xMax": 1388, "xMin": 1332, "yMax": 487, "yMin": 450} | car | [3, 220, 13, 255] | 66.86 |
| {"xMax": 1717, "xMin": 1677, "yMax": 512, "yMin": 386} | person | [233, 8, 11, 255] | 7.86 |
| {"xMax": 1553, "xMin": 1539, "yMax": 474, "yMin": 427} | person | [93, 179, 11, 255] | 59.26 |
| {"xMax": 1198, "xMin": 959, "yMax": 659, "yMin": 482} | motorcycle | [141, 203, 17, 255] | 44.02 |
| {"xMax": 1581, "xMin": 1450, "yMax": 531, "yMin": 465} | car | [142, 48, 13, 255] | 81.05 |
| {"xMax": 901, "xMin": 784, "yMax": 634, "yMin": 532} | car | [95, 124, 13, 255] | 0.53 |
| {"xMax": 1778, "xMin": 1665, "yMax": 667, "yMin": 346} | person | [45, 55, 11, 255] | 0.02 |
| {"xMax": 1463, "xMin": 1432, "yMax": 517, "yMin": 492} | motorcycle | [71, 112, 17, 255] | 69.75 |
| {"xMax": 1136, "xMin": 1124, "yMax": 546, "yMin": 517} | person | [1, 175, 11, 255] | 46.49 |
| {"xMax": 1414, "xMin": 1281, "yMax": 534, "yMin": 485} | car | [50, 144, 13, 255] | 81.35 |
| {"xMax": 1350, "xMin": 1336, "yMax": 534, "yMin": 489} | person | [26, 132, 11, 255] | 57.71 |
| {"xMax": 698, "xMin": 670, "yMax": 611, "yMin": 565} | person | [43, 110, 11, 255] | 45.83 |
| {"xMax": 761, "xMin": 738, "yMax": 609, "yMin": 562} | person | [182, 194, 11, 255] | 76.72 |
| {"xMax": 1282, "xMin": 1272, "yMax": 534, "yMin": 500} | person | [162, 71, 11, 255] | 50.5 |
| {"xMax": 870, "xMin": 842, "yMax": 617, "yMin": 535} | person | [252, 30, 11, 255] | 86.49 |
| {"xMax": 675, "xMin": 617, "yMax": 633, "yMin": 589} | motorcycle | [2, 120, 17, 255] | 83.47 |
| {"xMax": 705, "xMin": 644, "yMax": 631, "yMin": 587} | motorcycle | [164, 116, 17, 255] | 95.73 |
| {"xMax": 1207, "xMin": 1171, "yMax": 543, "yMin": 512} | car | [165, 216, 13, 255] | 29.28 |
| {"xMax": 1349, "xMin": 1313, "yMax": 578, "yMin": 466} | person | [2, 120, 11, 255] | 60.54 |
| {"xMax": 472, "xMin": 0, "yMax": 969, "yMin": 212} | train | [210, 195, 16, 255] | 0.12 |
| {"xMax": 1400, "xMin": 1360, "yMax": 586, "yMin": 458} | person | [229, 118, 11, 255] | 28.08 |
| {"xMax": 945, "xMin": 903, "yMax": 605, "yMin": 525} | person | [159, 26, 11, 255] | 0.56 |
| {"xMax": 1020, "xMin": 1007, "yMax": 565, "yMin": 533} | person | [73, 56, 11, 255] | 77.45 |
| {"xMax": 656, "xMin": 636, "yMax": 632, "yMin": 564} | person | [71, 112, 11, 255] | 51.24 |
| {"xMax": 1470, "xMin": 1437, "yMax": 565, "yMin": 456} | person | [49, 44, 11, 255] | 0 |
| {"xMax": 1170, "xMin": 1121, "yMax": 577, "yMin": 497} | person | [20, 198, 11, 255] | 0.09 |
| {"xMax": 1162, "xMin": 1029, "yMax": 582, "yMin": 522} | car | [118, 36, 13, 255] | 9.39 |
| {"xMax": 966, "xMin": 838, "yMax": 588, "yMin": 540} | car | [189, 228, 13, 255] | 83.85 |
| {"xMax": 1036, "xMin": 1028, "yMax": 559, "yMin": 532} | person | [70, 167, 11, 255] | 48.57 |
| {"xMax": 1087, "xMin": 1048, "yMax": 548, "yMin": 445} | person | [211, 40, 11, 255] | 6.81 |
| {"xMax": 604, "xMin": 523, "yMax": 583, "yMin": 355} | person | [118, 36, 11, 255] | 0.26 |
| {"xMax": 2047, "xMin": 2024, "yMax": 675, "yMin": 574} | person | [239, 75, 11, 255] | 0.46 |
| {"xMax": 1071, "xMin": 1041, "yMax": 545, "yMin": 451} | person | [141, 203, 11, 255] | 76.19 |
| {"xMax": 1141, "xMin": 1117, "yMax": 540, "yMin": 473} | person | [253, 130, 11, 255] | 2.57 |
| {"xMax": 1159, "xMin": 1136, "yMax": 532, "yMin": 480} | person | [137, 214, 11, 255] | 61.57 |
| {"xMax": 1552, "xMin": 1482, "yMax": 564, "yMin": 531} | car | [142, 48, 13, 255] | 85.19 |
| {"xMax": 1383, "xMin": 1270, "yMax": 569, "yMin": 438} | train | [16, 15, 16, 255] | 60.87 |
| {"xMax": 1481, "xMin": 1470, "yMax": 564, "yMin": 522} | person | [229, 118, 11, 255] | 0.31 |
| {"xMax": 162, "xMin": 0, "yMax": 868, "yMin": 55} | person | [234, 208, 11, 255] | 5.58 |
| {"xMax": 1751, "xMin": 1709, "yMax": 620, "yMin": 534} | person | [226, 119, 11, 255] | 90.81 |
| {"xMax": 1614, "xMin": 1596, "yMax": 589, "yMin": 531} | person | [95, 124, 11, 255] | 6.51 |
| {"xMax": 1123, "xMin": 1107, "yMax": 521, "yMin": 475} | person | [164, 116, 11, 255] | 88.64 |
| {"xMax": 1503, "xMin": 1492, "yMax": 565, "yMin": 525} | person | [49, 44, 11, 255] | 36.02 |
| {"xMax": 1599, "xMin": 1489, "yMax": 602, "yMin": 528} | car | [234, 208, 13, 255] | 0.61 |
| {"xMax": 1414, "xMin": 1403, "yMax": 546, "yMin": 517} | person | [138, 59, 11, 255] | 43.05 |
| {"xMax": 1387, "xMin": 1132, "yMax": 652, "yMin": 451} | car | [73, 56, 13, 255] | 0.31 |
| {"xMax": 1936, "xMin": 1669, "yMax": 681, "yMin": 534} | car | [55, 71, 13, 255] | 0.27 |
| {"xMax": 1458, "xMin": 1442, "yMax": 559, "yMin": 517} | person | [2, 120, 11, 255] | 18.48 |
| {"xMax": 1421, "xMin": 1414, "yMax": 547, "yMin": 520} | person | [26, 132, 11, 255] | 49.61 |
| {"xMax": 1802, "xMin": 1739, "yMax": 630, "yMin": 477} | person | [137, 214, 11, 255] | 39.22 |
| {"xMax": 1238, "xMin": 1229, "yMax": 560, "yMin": 533} | person | [72, 212, 11, 255] | 56.82 |
| {"xMax": 1437, "xMin": 1223, "yMax": 634, "yMin": 403} | train | [210, 195, 16, 255] | 0.55 |
| {"xMax": 1644, "xMin": 1624, "yMax": 577, "yMin": 500} | person | [141, 203, 11, 255] | 21.3 |
| {"xMax": 1177, "xMin": 1163, "yMax": 573, "yMin": 527} | person | [102, 51, 11, 255] | 73.18 |
| {"xMax": 1007, "xMin": 978, "yMax": 603, "yMin": 522} | person | [234, 208, 11, 255] | 0 |
| {"xMax": 1548, "xMin": 1532, "yMax": 558, "yMin": 513} | person | [222, 102, 11, 255] | 64.16 |
| {"xMax": 1125, "xMin": 1014, "yMax": 622, "yMin": 527} | car | [234, 208, 13, 255] | 0.52 |
| {"xMax": 1490, "xMin": 1439, "yMax": 562, "yMin": 525} | car | [222, 102, 13, 255] | 99.59 |
| {"xMax": 1630, "xMin": 1601, "yMax": 575, "yMin": 496} | person | [211, 40, 11, 255] | 26.9 |
| {"xMax": 1182, "xMin": 1164, "yMax": 573, "yMin": 524} | person | [253, 23, 11, 255] | 38.99 |
| {"xMax": 711, "xMin": 641, "yMax": 658, "yMin": 516} | person | [45, 55, 11, 255] | 0 |
| {"xMax": 1519, "xMin": 1506, "yMax": 552, "yMin": 518} | person | [158, 100, 11, 255] | 38.46 |
| {"xMax": 779, "xMin": 742, "yMax": 639, "yMin": 525} | person | [95, 124, 11, 255] | 0 |
| {"xMax": 1533, "xMin": 1525, "yMax": 553, "yMin": 518} | person | [28, 238, 11, 255] | 85.71 |
| {"xMax": 1239, "xMin": 1167, "yMax": 588, "yMin": 526} | car | [73, 56, 13, 255] | 0.58 |
| {"xMax": 1510, "xMin": 1433, "yMax": 572, "yMin": 518} | car | [212, 183, 13, 255] | 1.69 |
| {"xMax": 1520, "xMin": 1513, "yMax": 549, "yMin": 521} | person | [49, 44, 11, 255] | 57.58 |
| {"xMax": 1673, "xMin": 1636, "yMax": 596, "yMin": 497} | person | [253, 130, 11, 255] | 0 |
| {"xMax": 1231, "xMin": 1218, "yMax": 567, "yMin": 530} | person | [155, 96, 11, 255] | 90.2 |
| {"xMax": 2015, "xMin": 1940, "yMax": 689, "yMin": 484} | person | [113, 202, 11, 255] | 0.08 |
| {"xMax": 1002, "xMin": 982, "yMax": 595, "yMin": 533} | person | [118, 36, 11, 255] | 68.46 |
| {"xMax": 1632, "xMin": 1618, "yMax": 561, "yMin": 512} | person | [25, 143, 11, 255] | 90.09 |
| {"xMax": 1492, "xMin": 1476, "yMax": 520, "yMin": 475} | person | [1, 175, 11, 255] | 86.39 |
| {"xMax": 615, "xMin": 389, "yMax": 604, "yMin": 470} | motorcycle | [164, 116, 17, 255] | 69.38 |
| {"xMax": 1423, "xMin": 1409, "yMax": 516, "yMin": 477} | person | [209, 95, 11, 255] | 83.2 |
| {"xMax": 697, "xMin": 674, "yMax": 512, "yMin": 438} | person | [165, 216, 11, 255] | 24.2 |
| {"xMax": 406, "xMin": 360, "yMax": 510, "yMin": 419} | person | [136, 114, 11, 255] | 30.07 |
| {"xMax": 503, "xMin": 301, "yMax": 581, "yMin": 453} | motorcycle | [141, 203, 17, 255] | 75.3 |
| {"xMax": 228, "xMin": 125, "yMax": 652, "yMin": 341} | person | [71, 112, 11, 255] | 10.45 |
| {"xMax": 1645, "xMin": 1636, "yMax": 523, "yMin": 487} | person | [255, 75, 11, 255] | 29.27 |
| {"xMax": 1429, "xMin": 1168, "yMax": 1023, "yMin": 14} | person | [252, 30, 11, 255] | 0 |
| {"xMax": 1616, "xMin": 1604, "yMax": 518, "yMin": 484} | person | [183, 38, 11, 255] | 83.21 |

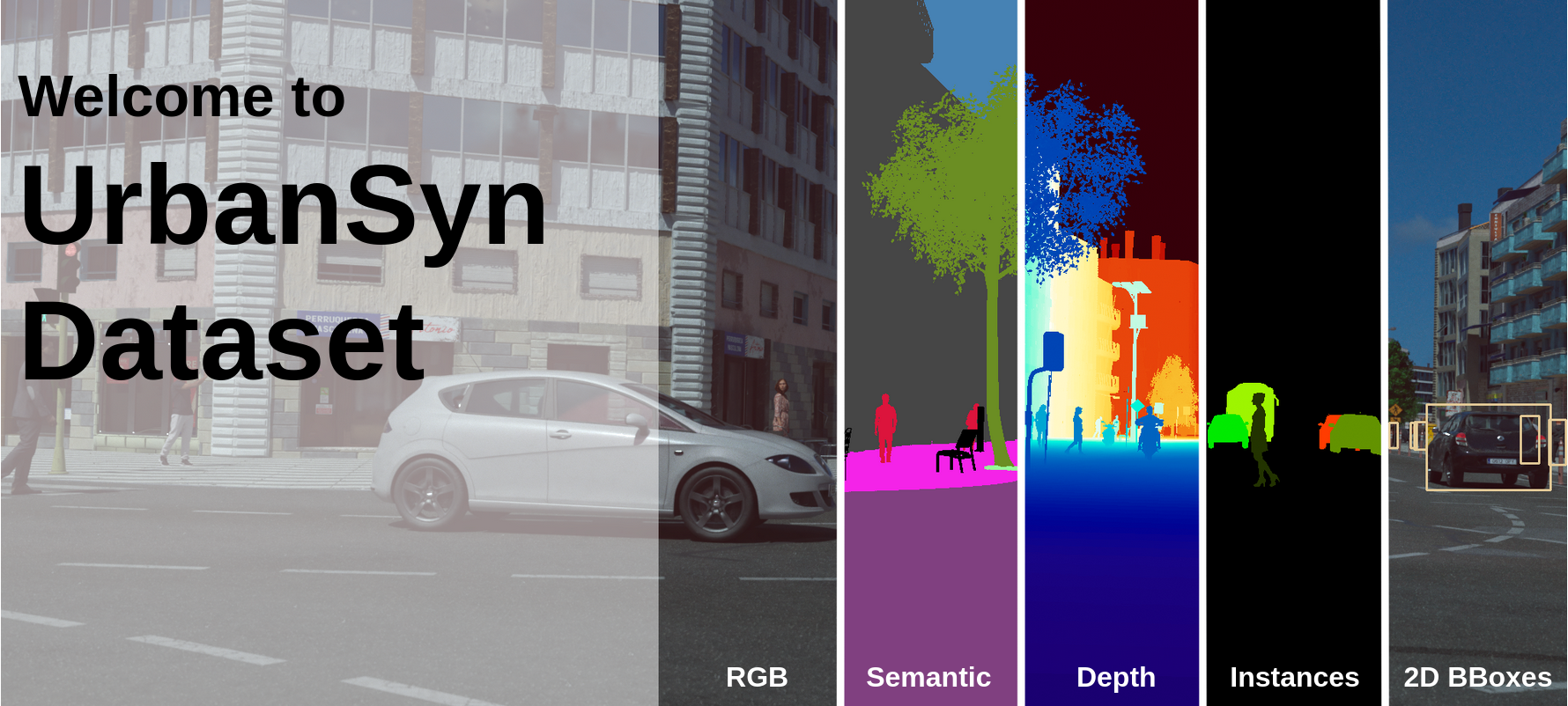

UrbanSyn Dataset

UrbanSyn is an open synthetic dataset featuring photorealistic driving scenes. It contains ground-truth annotations for semantic segmentation, scene depth, panoptic instance segmentation, and 2-D bounding boxes. Website: https://urbansyn.org

Overview

UrbanSyn is a diverse, compact, and photorealistic dataset that provides more than 7.5k synthetic annotated images. It was born to address the synth-to-real domain gap, contributing to unprecedented synthetic-only baselines used by domain adaptation (DA) methods.

- Reduce the synth-to-real domain gap

The UrbanSyn dataset helps to reduce the domain gap by contributing to unprecedented synthetic-only baselines used by domain adaptation (DA) methods.

- Ground-truth annotations

UrbanSyn comes with photorealistic color images, per-pixel semantic segmentation, depth, instance panoptic segmentation, and 2-D bounding boxes.

- Open for research and commercial purposes

UrbanSyn may be used for research and commercial purposes. It is released publicly under the Creative Commons Attribution-Commercial-ShareAlike 4.0 license.

- High degree of photorealism

UrbanSyn features highly realistic and curated driving scenarios leveraging procedurally-generated content and high-quality curated assets. To achieve UrbanSyn photorealism we leverage industry-standard unbiased path-tracing and AI-based denoising techniques.

White Paper

When using or referring to the UrbanSyn dataset in your research, please cite our white paper:

@misc{gomez2023one,

title={All for One, and One for All: UrbanSyn Dataset, the third Musketeer of Synthetic Driving Scenes},

author={Jose L. Gómez and Manuel Silva and Antonio Seoane and Agnès Borrás and Mario Noriega and Germán Ros and Jose A. Iglesias-Guitian and Antonio M. López},

year={2023},

eprint={2312.12176},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

Terms of Use

The UrbanSyn Dataset is provided by the Computer Vision Center (UAB) and CITIC (University of A Coruña).

UrbanSyn may be used for research and commercial purposes, and it is subject to the Creative Commons Attribution-Commercial-ShareAlike 4.0. A summary of the CC-BY-SA 4.0 licensing terms can be found [here].

Due to constraints from our asset providers for UrbanSyn, we prohibit the use of generative AI technologies for reverse engineering any assets or creating content for stock media platforms based on the UrbanSyn dataset.

While we strive to generate precise data, all information is presented 'as is' without any express or implied warranties. We explicitly disclaim all representations and warranties regarding the validity, scope, accuracy, completeness, safety, or utility of the licensed content, including any implied warranties of merchantability, fitness for a particular purpose, or otherwise.

Acknowledgements

Funded by Grant agreement PID2020-115734RB-C21 "SSL-ADA" and Grant agreement PID2020-115734RB-C22 "PGAS-ADA"

For more information about our team members and how to contact us, visit our website https://urbansyn.org

Folder structure and content

rgb: contains RGB images with a resolution of 2048x1024 in PNG format.

ss and ss_colour: contain the pixel-level semantic segmentation labels in grayscale (value = class ID) and colour (value = class RGB), respectively, in PNG format. We follow the 19 training classes defined in Cityscapes:

| name | trainId | color |
|---|---|---|
| 'road' | 0 | (128, 64, 128) |
| 'sidewalk' | 1 | (244, 35, 232) |
| 'building' | 2 | (70, 70, 70) |
| 'wall' | 3 | (102, 102, 156) |
| 'fence' | 4 | (190, 153, 153) |
| 'pole' | 5 | (153, 153, 153) |
| 'traffic light' | 6 | (250, 170, 30) |
| 'traffic sign' | 7 | (220, 220, 0) |
| 'vegetation' | 8 | (107, 142, 35) |
| 'terrain' | 9 | (152, 251, 152) |
| 'sky' | 10 | (70, 130, 180) |
| 'person' | 11 | (220, 20, 60) |
| 'rider' | 12 | (255, 0, 0) |
| 'car' | 13 | (0, 0, 142) |
| 'truck' | 14 | (0, 0, 70) |
| 'bus' | 15 | (0, 60, 100) |
| 'train' | 16 | (0, 80, 100) |
| 'motorcycle' | 17 | (0, 0, 230) |
| 'bicycle' | 18 | (119, 11, 32) |
| 'unlabeled' | 19 | (0, 0, 0) |

panoptic: contains the instance segmentation of the dynamic objects of the image in PNG format. Each instance is encoded using the RGB channels, where RG corresponds to the instance number and B to the class ID. Dynamic objects are Person, Rider, Car, Truck, Bus, Train, Motorcycle and Bicycle.

bbox2d: contains the 2D bounding boxes and instance information for all the dynamic objects in the image that are up to 110 meters from the camera and bigger than 150 pixels. We provide the annotations in a JSON file with the following structure:

- bbox: provides the bounding box, determined by the top-left corner (xMin, yMin) and the bottom-right corner (xMax, yMax).

- color: corresponds to the colour of the instance in the panoptic instance segmentation map inside the panoptic folder.

- label: defines the class name.

- occlusion_percentage: provides the occlusion percentage of the object, where 0 means not occluded and 100 means fully occluded.

depth: contains the depth map of the image in EXR format.
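As a rough illustration of the bbox2d annotation structure described above, the following Python sketch parses a few annotations and derives box sizes and class IDs. It assumes that each JSON file holds a top-level list of objects and that a fourth colour value, when present, is an alpha channel; neither is stated in this card. The helper names (parse_annotations, visible) and the 50% threshold are hypothetical. Because the card does not say how R and G combine into a single instance number, the sketch keeps them as a pair.

```python
import json

# Train IDs of the dynamic classes, copied from the class table above.
DYNAMIC_TRAIN_IDS = {
    "person": 11, "rider": 12, "car": 13, "truck": 14,
    "bus": 15, "train": 16, "motorcycle": 17, "bicycle": 18,
}

def parse_annotations(text):
    """Parse one bbox2d JSON document (assumed: a top-level list of objects)."""
    records = []
    for item in json.loads(text):
        box = item["bbox"]
        # Panoptic encoding: R and G identify the instance, B is the class ID.
        # Any fourth value (assumed to be alpha) is ignored.
        r, g, b = item["color"][:3]
        records.append({
            "label": item["label"],
            "width": box["xMax"] - box["xMin"],
            "height": box["yMax"] - box["yMin"],
            "instance_key": (r, g),
            "class_id": b,
            "occlusion": item["occlusion_percentage"],
        })
    return records

def visible(records, max_occlusion=50.0):
    """Keep objects whose occlusion percentage is at most max_occlusion."""
    return [rec for rec in records if rec["occlusion"] <= max_occlusion]

# Three annotations in the documented structure (values taken from the dataset preview).
SAMPLE = """[
  {"bbox": {"xMax": 276, "xMin": 0, "yMax": 684, "yMin": 373},
   "label": "car", "color": [142, 48, 13, 255], "occlusion_percentage": 0.04},
  {"bbox": {"xMax": 1289, "xMin": 1253, "yMax": 505, "yMin": 440},
   "label": "rider", "color": [96, 224, 12, 255], "occlusion_percentage": 47.1},
  {"bbox": {"xMax": 1955, "xMin": 1760, "yMax": 480, "yMin": 389},
   "label": "car", "color": [212, 140, 13, 255], "occlusion_percentage": 99.27}
]"""

records = parse_annotations(SAMPLE)
for rec in visible(records):
    print(rec["label"], rec["width"], rec["height"], rec["class_id"])
```

In these samples the blue channel of each colour equals the train ID of the label, which is consistent with the panoptic encoding described above. The same check can serve as a sanity test when reading real files.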

Download locally with huggingface_hub library

You can download the dataset in Python this way:

from huggingface_hub import snapshot_download

snapshot_download(repo_id="UrbanSyn/UrbanSyn", repo_type="dataset")

More information about how to download and additional options can be found here.
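If only some of the folders are needed, a partial download may be preferable. The sketch below uses the allow_patterns and local_dir parameters of snapshot_download. The helper names folder_patterns and download_subset are hypothetical, and the sketch assumes that the repository's top-level folder names match those listed under "Folder structure and content" (for example rgb and bbox2d).

```python
def folder_patterns(folders):
    """Build glob patterns that match every file inside the given top-level folders."""
    return [f"{name}/*" for name in folders]

def download_subset(folders, local_dir=None):
    """Download only the selected folders of the UrbanSyn dataset repository.

    Returns the local path of the downloaded snapshot.
    """
    # Imported here so the pattern helper can be used without the library installed.
    from huggingface_hub import snapshot_download

    return snapshot_download(
        repo_id="UrbanSyn/UrbanSyn",
        repo_type="dataset",
        allow_patterns=folder_patterns(folders),
        local_dir=local_dir,  # None keeps the files in the default cache
    )

# Example (requires network access):
# path = download_subset(["rgb", "bbox2d"], local_dir="urbansyn_subset")
```

Restricting the patterns this way avoids fetching the larger modalities, such as the EXR depth maps, when they are not needed.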
