
Dataset Card for FLAIR land-cover semantic segmentation

Context & Data

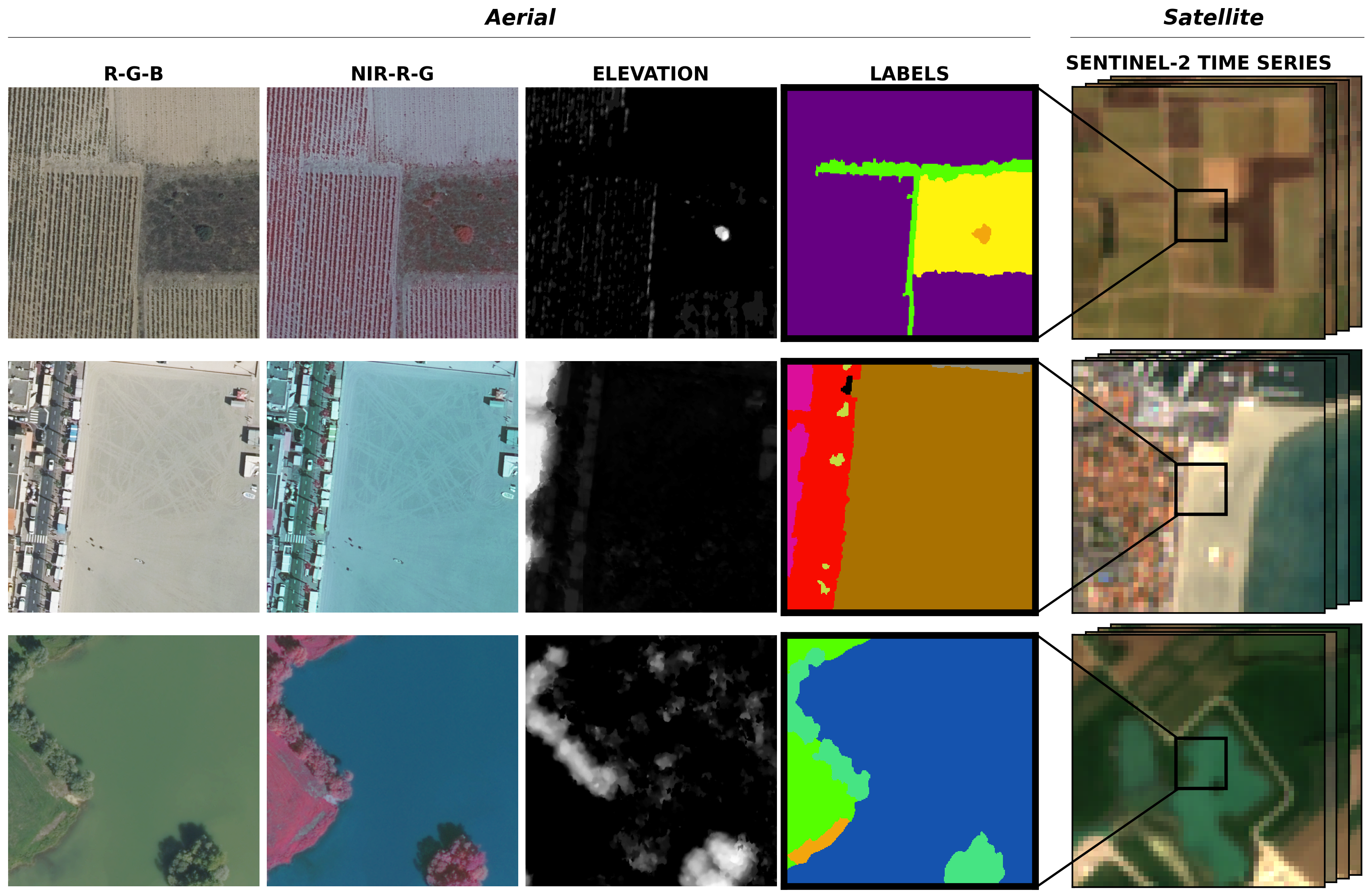

The FLAIR (#1 and #2) dataset is sampled countrywide and comprises over 20 billion annotated pixels of very high resolution aerial imagery at 0.2 m spatial resolution, acquired over three years and in different months (spatio-temporal domains). Aerial imagery patches consist of 5 channels (RGB, near-infrared, elevation) and have corresponding annotations (19 semantic classes, or 13 for the baselines). Furthermore, to integrate broader spatial context and temporal information, one-year time series of high-resolution Sentinel-2 satellite imagery with 10 spectral bands are also provided. More than 50,000 Sentinel-2 acquisitions at 10 m spatial resolution are available.

The dataset covers 55 distinct spatial domains, encompassing 974 areas spanning 980 km². This dataset provides a robust foundation for advancing land cover mapping techniques.

We sample two test sets that differ in their input data and in the semantic classes they emphasize. The first test set (flair#1-test) uses very high resolution aerial imagery only and samples primarily anthropized land cover classes.

In contrast, the second test set (flair#2-test) combines aerial and satellite imagery and has more natural classes with temporal variations represented.

| Class | Train/val (%) | Test flair#1 (%) | Test flair#2 (%) | Class | Train/val (%) | Test flair#1 (%) | Test flair#2 (%) |
|---|---|---|---|---|---|---|---|
| (1) Building | 8.14 | 8.6 | 3.26 | (11) Agricultural Land | 10.98 | 6.95 | 18.19 |
| (2) Pervious surface | 8.25 | 7.34 | 3.82 | (12) Plowed land | 3.88 | 2.25 | 1.81 |
| (3) Impervious surface | 13.72 | 14.98 | 5.87 | (13) Swimming pool | 0.01 | 0.04 | 0.02 |
| (4) Bare soil | 3.47 | 4.36 | 1.6 | (14) Snow | 0.15 | - | - |
| (5) Water | 4.88 | 5.98 | 3.17 | (15) Clear cut | 0.15 | 0.01 | 0.82 |
| (6) Coniferous | 2.74 | 2.39 | 10.24 | (16) Mixed | 0.05 | - | 0.12 |
| (7) Deciduous | 15.38 | 13.91 | 24.79 | (17) Ligneous | 0.01 | 0.03 | - |
| (8) Brushwood | 6.95 | 6.91 | 3.81 | (18) Greenhouse | 0.12 | 0.2 | 0.15 |
| (9) Vineyard | 3.13 | 3.87 | 2.55 | (19) Other | 0.14 | 0.- | 0.04 |
| (10) Herbaceous vegetation | 17.84 | 22.17 | 19.76 | | | | |
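The card mentions 19 annotated classes but only 13 for the baselines, without spelling out the reduction here. The sketch below assumes, as an illustration only, that every label above 12 is folded into a single thirteenth "other" class; the function name `to_baseline_class` is hypothetical and the mapping should be checked against the baseline repositories before use. The percentages are the train/val figures from the table above.

```python
# ASSUMPTION: labels 13..19 are merged into one "other" class (index 13).
# This mapping is not stated in the card; verify it against the baseline code.
N_BASELINE_CLASSES = 13

# Train/val pixel share (%) of the rare classes 13..19, copied from the table.
TRAIN_VAL_RARE_SHARE = {
    13: 0.01,  # Swimming pool
    14: 0.15,  # Snow
    15: 0.15,  # Clear cut
    16: 0.05,  # Mixed
    17: 0.01,  # Ligneous
    18: 0.12,  # Greenhouse
    19: 0.14,  # Other
}


def to_baseline_class(label: int) -> int:
    """Map a 1-based label in 1..19 to a 1-based label in 1..13."""
    if not 1 <= label <= 19:
        raise ValueError(f"label out of range: {label}")
    return min(label, N_BASELINE_CLASSES)


# Share of train/val pixels that would end up in the merged class.
merged_share = round(sum(TRAIN_VAL_RARE_SHARE.values()), 2)

print([to_baseline_class(c) for c in (1, 12, 13, 19)])
print(f"merged 'other' share of train/val pixels: {merged_share} %")
```

Under this assumption the merged class stays well below 1 % of the train/val pixels, which is one plausible reason for grouping these classes.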

Dataset Structure

The FLAIR dataset consists of a total of 93 462 patches: 61 712 patches for the train/val dataset, 15 700 patches for flair#1-test and 16 050 patches for flair#2-test.

Each patch includes a high-resolution aerial image (512x512) at 0.2 m, a yearly satellite image time series (40x40 by default, but wider areas are provided) with a spatial resolution of 10 m and associated cloud and snow masks (available in train/val and flair#2-test), and pixel-precise elevation and land cover annotations at 0.2 m resolution (512x512).
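The patch counts, sizes and resolutions quoted above can be cross-checked against the headline figures of the card (over 20 billion annotated pixels, about 980 km²). The following is a small arithmetic sketch that uses only numbers stated in this card; the variable names are illustrative.

```python
# Figures quoted in the dataset card.
PATCHES = {"train/val": 61_712, "flair#1-test": 15_700, "flair#2-test": 16_050}
AERIAL_SIZE_PX = 512   # aerial patch side, in pixels
AERIAL_GSD_M = 0.2     # aerial ground sampling distance, in metres
SAT_SIZE_PX = 40       # default Sentinel-2 patch side, in pixels
SAT_GSD_M = 10.0       # Sentinel-2 ground sampling distance, in metres

total_patches = sum(PATCHES.values())

# Ground footprint of one patch side, in metres.
aerial_side_m = AERIAL_SIZE_PX * AERIAL_GSD_M
sat_side_m = SAT_SIZE_PX * SAT_GSD_M

# Every aerial pixel carries an annotation, so pixels = patches * 512 * 512.
annotated_pixels = total_patches * AERIAL_SIZE_PX ** 2

# Total annotated ground area, converted from m^2 to km^2.
area_km2 = total_patches * aerial_side_m ** 2 / 1e6

print(f"total patches:     {total_patches}")
print(f"aerial footprint:  {aerial_side_m:.1f} m per side")
print(f"default S2 window: {sat_side_m:.0f} m per side")
print(f"annotated pixels:  {annotated_pixels / 1e9:.1f} billion")
print(f"annotated area:    {area_km2:.0f} km^2")
```

The default Sentinel-2 window (400 m per side) is therefore roughly four times wider than the aerial patch (102.4 m per side), which is how the satellite series supplies broader spatial context.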

Band order

Aerial patches (5 channels):

- 1. Red

- 2. Green

- 3. Blue

- 4. NIR

- 5. nDSM

Sentinel-2 patches (10 bands):

- 1. Blue (B2 490nm)

- 2. Green (B3 560nm)

- 3. Red (B4 665nm)

- 4. Red-Edge (B5 705nm)

- 5. Red-Edge2 (B6 740nm)

- 6. Red-Edge3 (B7 783nm)

- 7. NIR (B8 842nm)

- 8. NIR-Red-Edge (B8a 865nm)

- 9. SWIR (B11 1610nm)

- 10. SWIR2 (B12 2190nm)
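The two band orders listed above (five aerial channels, then ten Sentinel-2 bands) differ, notably in that red comes first in the aerial patches but third in the satellite patches. The sketch below encodes both orders as zero-based indices and uses them to compute NDVI, a standard vegetation index chosen here purely as an illustration. The enum names, the zero-based convention and the representation of a pixel as a plain tuple are assumptions, and the pixel values are made up.

```python
from enum import IntEnum


class AerialBand(IntEnum):
    """Zero-based channel index in an aerial patch (card order minus one)."""
    RED = 0
    GREEN = 1
    BLUE = 2
    NIR = 3
    NDSM = 4


class SentinelBand(IntEnum):
    """Zero-based band index in a Sentinel-2 patch (card order minus one)."""
    BLUE = 0        # B2
    GREEN = 1       # B3
    RED = 2         # B4
    RED_EDGE = 3    # B5
    RED_EDGE2 = 4   # B6
    RED_EDGE3 = 5   # B7
    NIR = 6         # B8
    NIR_RED_EDGE = 7  # B8a
    SWIR = 8        # B11
    SWIR2 = 9       # B12


def ndvi(nir: float, red: float) -> float:
    """Normalized difference vegetation index; 0.0 when both inputs are 0."""
    total = nir + red
    return 0.0 if total == 0 else (nir - red) / total


# Synthetic pixels, one value per band, in the orders listed in the card.
aerial_pixel = (60, 90, 50, 180, 12)
sentinel_pixel = (400, 600, 1000, 1500, 2500, 2800, 3000, 3100, 2000, 1200)

aerial_ndvi = ndvi(aerial_pixel[AerialBand.NIR], aerial_pixel[AerialBand.RED])
sentinel_ndvi = ndvi(sentinel_pixel[SentinelBand.NIR],
                     sentinel_pixel[SentinelBand.RED])

print(f"aerial NDVI:   {aerial_ndvi:.2f}")
print(f"sentinel NDVI: {sentinel_ndvi:.2f}")
```

Naming the indices once avoids silently reading the blue band as red when code written for one source is reused on the other.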

Annotations

Each pixel has been manually annotated by photo-interpretation of the 20 cm resolution aerial imagery, carried out by a team supervised by geography experts from the IGN. Movable objects like cars or boats are annotated according to their underlying cover.

Data Splits

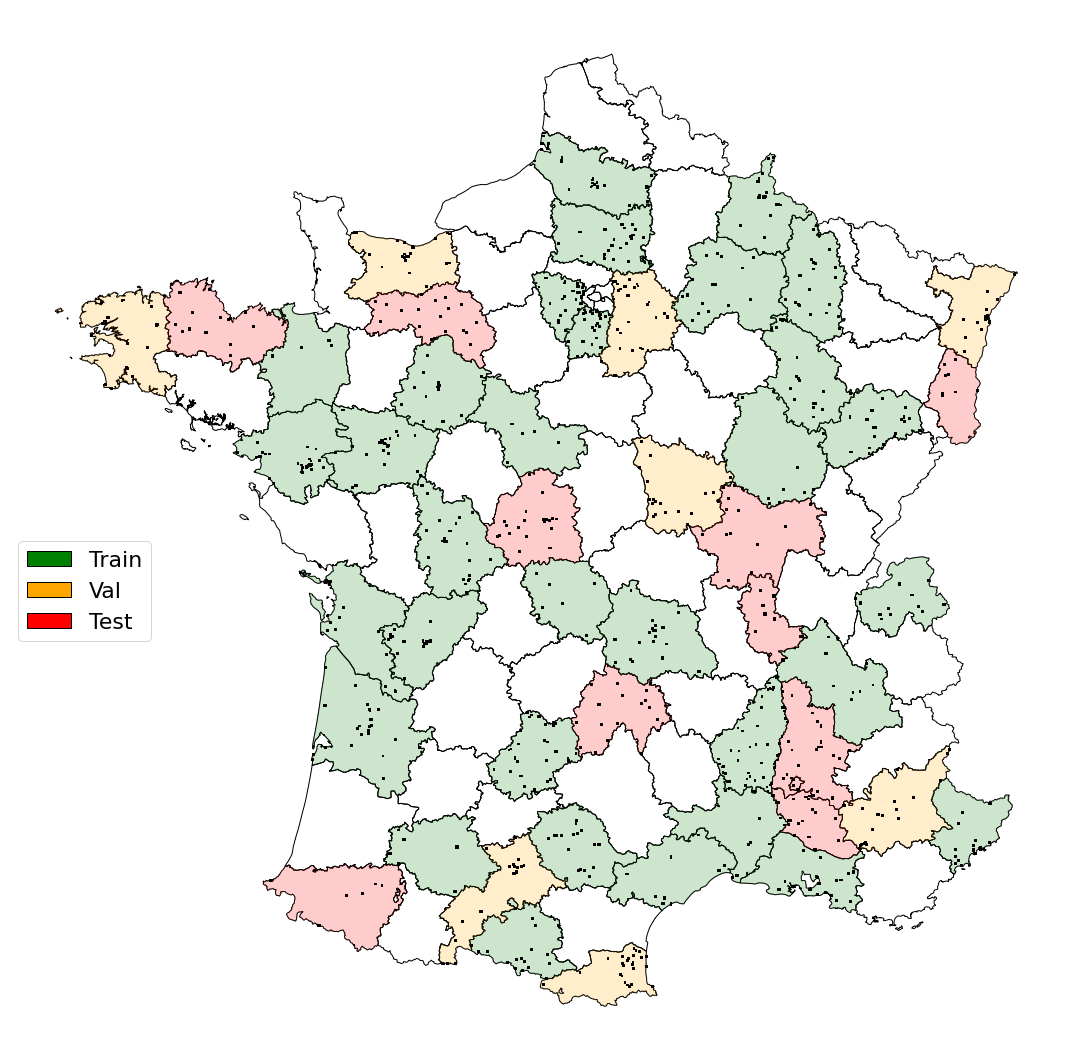

The dataset is made up of 55 distinct spatial domains, aligned with the administrative boundaries of the French départements.

For our experiments, we designate 32 domains for training and 8 for validation, and reserve 10 domains each for the official flair#1-test and flair#2-test sets.

Some domains appear in both the flair#1-test and flair#2-test sets, but the two sets cover different areas within those domains.

This arrangement ensures a balanced distribution of semantic classes, radiometric attributes, bioclimatic conditions, and acquisition times across each set.

Consequently, every split accurately reflects the landscape diversity inherent to metropolitan France.

The patches also come with metadata that permits alternative splitting schemes.

Official domain split:

| Split | Domains |
|---|---|
| TRAIN | D006, D007, D008, D009, D013, D016, D017, D021, D023, D030, D032, D033, D034, D035, D038, D041, D044, D046, D049, D051, D052, D055, D060, D063, D070, D072, D074, D078, D080, D081, D086, D091 |
| VALIDATION | D004, D014, D029, D031, D058, D066, D067, D077 |
| TEST-flair#1 | D012, D022, D026, D064, D068, D071, D075, D076, D083, D085 |
| TEST-flair#2 | D015, D022, D026, D036, D061, D064, D068, D069, D071, D084 |
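The official split can be checked mechanically: the four lists should add up to the 55 domains mentioned earlier, with train and validation kept apart from both test sets. A minimal sketch, with the domain identifiers copied from the table above; the dictionary keys and the helper `splits_for` are illustrative names.

```python
SPLITS = {
    "train": (
        "D006 D007 D008 D009 D013 D016 D017 D021 D023 D030 D032 D033 "
        "D034 D035 D038 D041 D044 D046 D049 D051 D052 D055 D060 D063 "
        "D070 D072 D074 D078 D080 D081 D086 D091"
    ).split(),
    "val": "D004 D014 D029 D031 D058 D066 D067 D077".split(),
    "flair#1-test": "D012 D022 D026 D064 D068 D071 D075 D076 D083 D085".split(),
    "flair#2-test": "D015 D022 D026 D036 D061 D064 D068 D069 D071 D084".split(),
}

sets = {name: set(domains) for name, domains in SPLITS.items()}

# Distinct domains over all four lists.
all_domains = set().union(*sets.values())

# Domains that appear in both test sets (different areas within each domain).
shared_test = sorted(sets["flair#1-test"] & sets["flair#2-test"])
test_union = sets["flair#1-test"] | sets["flair#2-test"]


def splits_for(domain: str) -> list[str]:
    """Return every split whose domain list contains the given domain."""
    return [name for name, members in sets.items() if domain in members]


print({name: len(members) for name, members in sets.items()})
print("distinct domains:", len(all_domains))
print("shared by both test sets:", shared_test)
print("D022 belongs to:", splits_for("D022"))
```

The counts come out as 32, 8, 10 and 10, and because five domains are shared by the two test sets, the distinct total is 55.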

Baseline code

Flair #1 (aerial only)

The baselines use a U-Net architecture with a pre-trained ResNet34 encoder from the PyTorch segmentation models library. This architecture allows the integration of patch-wise metadata and employs commonly used image data augmentation techniques.

Flair#1 code repository 📁 : https://github.com/IGNF/FLAIR-1

Link to the paper : https://arxiv.org/pdf/2211.12979.pdf

Please include a citation to the following article if you use the FLAIR#1 dataset:

@article{ign2022flair1,

doi = {10.13140/RG.2.2.30183.73128/1},

url = {https://arxiv.org/pdf/2211.12979.pdf},

author = {Garioud, Anatol and Peillet, Stéphane and Bookjans, Eva and Giordano, Sébastien and Wattrelos, Boris},

title = {FLAIR #1: semantic segmentation and domain adaptation dataset},

publisher = {arXiv},

year = {2022}

}

Flair #2 (aerial and satellite)

We propose the U-T&T model, a two-branch architecture that combines spatial and temporal information from very high-resolution aerial images and high-resolution satellite images into a single output. The U-Net architecture is employed for the spatial/texture branch, using a ResNet34 backbone model pre-trained on ImageNet. For the spatio-temporal branch, the U-TAE architecture incorporates a Temporal self-Attention Encoder (TAE) to explore the spatial and temporal characteristics of the Sentinel-2 time series data, applying attention masks at different resolutions during decoding. This model allows for the fusion of learned information from both sources, enhancing the representation of mono-date and time series data.

U-T&T code repository 📁 : https://github.com/IGNF/FLAIR-2

Link to the paper : https://arxiv.org/abs/2310.13336

HF_data_path : " " # Path to unzipped FLAIR HF dataset

domains_train : ["D006_2020","D007_2020","D008_2019","D009_2019","D013_2020","D016_2020","D017_2018","D021_2020","D023_2020","D030_2021","D032_2019","D033_2021","D034_2021","D035_2020","D038_2021","D041_2021","D044_2020","D046_2019","D049_2020","D051_2019","D052_2019","D055_2018","D060_2021","D063_2019","D070_2020","D072_2019","D074_2020","D078_2021","D080_2021","D081_2020","D086_2020","D091_2021"]

domains_val : ["D004_2021","D014_2020","D029_2021","D031_2019","D058_2020","D066_2021","D067_2021","D077_2021"]

domains_test : ["D015_2020","D022_2021","D026_2020","D036_2020","D061_2020","D064_2021","D068_2021","D069_2020","D071_2020","D084_2021"]
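The configuration keys above identify each domain with a suffix that appears to be an acquisition year (for example `D015_2020`). The sketch below splits such keys and checks that `domains_test` matches the TEST-flair#2 row of the official split; reading the suffix as a year is an assumption, and `parse_domain_key` is a hypothetical helper, not part of the FLAIR code base.

```python
from collections import Counter

# Copied from the `domains_test` entry of the configuration above.
DOMAINS_TEST = [
    "D015_2020", "D022_2021", "D026_2020", "D036_2020", "D061_2020",
    "D064_2021", "D068_2021", "D069_2020", "D071_2020", "D084_2021",
]

# TEST-flair#2 row of the official domain split.
FLAIR2_TEST_DOMAINS = [
    "D015", "D022", "D026", "D036", "D061",
    "D064", "D068", "D069", "D071", "D084",
]


def parse_domain_key(key: str) -> tuple[str, int]:
    """Split 'D015_2020' into ('D015', 2020); the suffix is assumed to be a year."""
    domain, year = key.split("_")
    return domain, int(year)


parsed = [parse_domain_key(key) for key in DOMAINS_TEST]
domains = [domain for domain, _ in parsed]
per_year = Counter(year for _, year in parsed)

print("matches TEST-flair#2:", domains == FLAIR2_TEST_DOMAINS)
print("domains per year:", dict(sorted(per_year.items())))
```

The same helper can be applied to `domains_train` and `domains_val` to group patches by year when building an alternative split.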

Please include a citation to the following article if you use the FLAIR#2 dataset:

@inproceedings{garioud2023flair,

title={FLAIR: a Country-Scale Land Cover Semantic Segmentation Dataset From Multi-Source Optical Imagery},

author={Anatol Garioud and Nicolas Gonthier and Loic Landrieu and Apolline De Wit and Marion Valette and Marc Poupée and Sébastien Giordano and Boris Wattrelos},

year={2023},

booktitle={Advances in Neural Information Processing Systems (NeurIPS) 2023},

doi={10.48550/arXiv.2310.13336},

}

CodaLab challenges

The FLAIR dataset was used for two challenges organized by IGN in 2023 on the CodaLab platform.

Challenge FLAIR#1 : https://codalab.lisn.upsaclay.fr/competitions/8769

Challenge FLAIR#2 : https://codalab.lisn.upsaclay.fr/competitions/13447

flair#1-test | The podium:

🥇 businiao - 0.65920

🥈 Breizhchess - 0.65600

🥉 wangzhiyu918 - 0.64930

flair#2-test | The podium:

🥇 strakajk - 0.64130

🥈 Breizhchess - 0.63550

🥉 qwerty64 - 0.63510

Acknowledgment

This work was performed using HPC/AI resources from GENCI-IDRIS (Grant 2022-A0131013803). This work was supported by the project "Copernicus / FPCUP" of the European Union, by the French Space Agency (CNES) and by Connect by CNES.

Contact

If you have any questions, issues or feedback, you can contact us at: flair@ign.fr

Dataset license

The "OPEN LICENCE 2.0/LICENCE OUVERTE" is a licence created by the French government specifically to facilitate the dissemination of open data by public administrations.

This licence is governed by French law.

This licence has been designed to be compatible with any free licence that at least requires an acknowledgement of authorship, and specifically with the previous version of this licence as well as with the following licences: United Kingdom’s “Open Government Licence” (OGL), Creative Commons’ “Creative Commons Attribution” (CC-BY) and Open Knowledge Foundation’s “Open Data Commons Attribution” (ODC-BY).
