
Dataset Card for "argmicro"

Dataset Summary

The arg-microtexts corpus features 112 short argumentative texts. All texts were originally written in German and have been professionally translated to English.

Based on Freeman’s theory of the macro-structure of arguments (1991; 2011) and Toulmin’s (2003) diagramming techniques, ArgMicro consists of pro (proponent) and opp (opponent) components and six types of relations: seg (segment), add (addition), exa (example), reb (rebut), sup (support), and und (undercut). It also introduces segment-based spans, which include non-argumentative parts, so that the annotation covers the whole text.

Supported Tasks and Leaderboards

- Tasks: Structure Prediction, Relation Identification, Central Claim Identification, Role Classification, Function Classification

- Leaderboards: [More Information Needed]

Languages

German, with English translation (by a professional translator).

Dataset Structure

Data Instances

- Size of downloaded dataset files: 2.89 MB

{
  "id": "micro_b001",
  "topic_id": "waste_separation",
  "stance": 1,
  "text": "Yes, it's annoying and cumbersome to separate your rubbish properly all the time. Three different bin bags stink away in the kitchen and have to be sorted into different wheelie bins. But still Germany produces way too much rubbish and too many resources are lost when what actually should be separated and recycled is burnt. We Berliners should take the chance and become pioneers in waste separation!",
  "edus": {
    "id": ["e1", "e2", "e3", "e4", "e5"],
    "start": [0, 82, 184, 232, 326],
    "end": [81, 183, 231, 325, 402]
  },
  "adus": {
    "id": ["a1", "a2", "a3", "a4", "a5"],
    "type": [0, 0, 1, 1, 1]
  },
  "edges": {
    "id": ["c1", "c10", "c2", "c3", "c4", "c6", "c7", "c8", "c9"],
    "src": ["a1", "e5", "a2", "a3", "a4", "e1", "e2", "e3", "e4"],
    "trg": ["a5", "a5", "a1", "c1", "c3", "a1", "a2", "a3", "a4"],
    "type": [4, 0, 1, 5, 3, 0, 0, 0, 0]
  }
}

Data Fields

- id: the instance id of the document, a string feature
- topic_id: the topic of the document, a string feature (see list of topics)
- stance: the index of stance on the topic, an int feature (see stance labels)
- text: the text content of the document, a string feature
- edus: elementary discourse units; a segmented span of text (see the authors' further explanation)
  - id: the instance id of EDUs, a list of string feature
  - start: the indices indicating the inclusive start of the spans, a list of int feature
  - end: the indices indicating the exclusive end of the spans, a list of int feature
- adus: argumentative discourse units; argumentatively relevant claims built on EDUs (see the authors' further explanation)
  - id: the instance id of ADUs, a list of string feature
  - type: the indices indicating the ADU type, a list of int feature (see type list)
- edges: the relations between adus or adus and other edges (see the authors' further explanation)
  - id: the instance id of edges, a list of string feature
  - src: the id of adus indicating the source element in a relation, a list of string feature
  - trg: the id of adus or edges indicating the target element in a relation, a list of string feature
  - type: the indices indicating the edge type, a list of int feature (see type list)
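Because start is inclusive and end is exclusive, the offsets can be used directly as a Python slice on text. The following is a minimal sketch of that lookup; the helper name edu_texts and the toy instance are hypothetical and only mirror the field layout described above, they are not part of the dataset or its loader.

```python
from typing import Dict


def edu_texts(instance: dict) -> Dict[str, str]:
    """Map each EDU id to the text it covers (start inclusive, end exclusive)."""
    text = instance["text"]
    edus = instance["edus"]
    return {
        edu_id: text[start:end]
        for edu_id, start, end in zip(edus["id"], edus["start"], edus["end"])
    }


# Toy instance with the same layout as the card's example (not taken from the corpus).
toy = {
    "text": "Cats are great. Dogs too.",
    "edus": {"id": ["e1", "e2"], "start": [0, 16], "end": [15, 25]},
}

spans = edu_texts(toy)
print(spans)
```

The one-character gap between consecutive spans (end 15, next start 16) corresponds to the separating space, which matches the pattern of the offsets in the instance shown under Data Instances.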

Data Splits

| | train |
|---|---|
| No. of instances | 112 |
| No. of sentences/instance (on average) | 5.1 |

Data Labels

Stance

| Stance | Count | Percentage |
|---|---|---|
| pro | 46 | 41.1 % |
| con | 42 | 37.5 % |
| unclear | 1 | 0.9 % |
| UNDEFINED | 23 | 20.5 % |

- pro: yes, in favour of the proposed issue
- con: no, against the proposed issue
- unclear: the position of the author is unclear
- UNDEFINED: no stance label assigned

See stance types.
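The percentages in the stance table follow from the counts and the corpus size of 112. As a quick consistency check, the sketch below recomputes them; the helper name percentages is hypothetical, and the counts are copied from the table above.

```python
def percentages(counts: dict) -> dict:
    """Return each label's share of the total, in percent, rounded to one decimal."""
    total = sum(counts.values())
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


# Counts copied from the stance table.
stance_counts = {"pro": 46, "con": 42, "unclear": 1, "UNDEFINED": 23}
stance_total = sum(stance_counts.values())
stance_pct = percentages(stance_counts)
print(stance_total, stance_pct)
```

The same helper can be applied to the ADU and relation counts further down.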

ADUs

| ADUs | Count | Percentage |
|---|---|---|
| pro | 451 | 78.3 % |
| opp | 125 | 21.7 % |

- pro: proponent, who presents and defends his claims
- opp: opponent, who critically questions the proponent in a regimented fashion (Peldszus, 2015, p.5)

Relations

| Relations | Count | Percentage |
|---|---|---|
| support: sup | 281 | 55.2 % |
| support: exa | 9 | 1.8 % |
| attack: und | 65 | 12.8 % |
| attack: reb | 110 | 21.6 % |
| other: joint | 44 | 8.6 % |

- sup: support (ADU->ADU)
- exa: support by example (ADU->ADU)
- add: additional source, for combined/convergent arguments with multiple premises, i.e., linked support, convergent support, serial support (ADU->ADU)
- reb: rebutting attack (ADU->ADU)
  - definition: "targeting another node and thereby challenging its acceptability"
- und: undercutting attack (ADU->Edge)
  - definition: "targeting an edge and thereby challenging the acceptability of the inference from the source to the target node" (P&S, 2016; EN annotation guideline)
- joint: combines text segments if one does not express a complete proposition on its own, or if the author divides a clause/sentence into parts, using punctuation
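Since a relation can target either an ADU or another edge, it can help to check what each trg id refers to before interpreting an edge. The sketch below groups edge ids by target kind using only the id lists; the function name group_edges_by_target_kind is hypothetical, and the data is the graph part of the micro_b001 instance shown under Data Instances. It deliberately avoids decoding the integer type indices, because the index-to-label mapping is not stated in this card.

```python
from typing import Dict, List


def group_edges_by_target_kind(instance: dict) -> Dict[str, List[str]]:
    """Split edge ids by what their target id refers to: an ADU or another edge."""
    adu_ids = set(instance["adus"]["id"])
    edge_ids = set(instance["edges"]["id"])
    groups: Dict[str, List[str]] = {"adu": [], "edge": [], "unknown": []}
    for edge_id, trg in zip(instance["edges"]["id"], instance["edges"]["trg"]):
        if trg in adu_ids:
            groups["adu"].append(edge_id)
        elif trg in edge_ids:
            groups["edge"].append(edge_id)
        else:
            # Target id not found among ADUs or edges of this instance.
            groups["unknown"].append(edge_id)
    return groups


# Graph part of the micro_b001 instance from "Data Instances".
instance = {
    "adus": {"id": ["a1", "a2", "a3", "a4", "a5"]},
    "edges": {
        "id": ["c1", "c10", "c2", "c3", "c4", "c6", "c7", "c8", "c9"],
        "src": ["a1", "e5", "a2", "a3", "a4", "e1", "e2", "e3", "e4"],
        "trg": ["a5", "a5", "a1", "c1", "c3", "a1", "a2", "a3", "a4"],
    },
}

groups = group_edges_by_target_kind(instance)
print(groups["edge"])  # edges whose target is itself an edge
```

In this instance, c3 and c4 point at other edges, while the remaining edges point at ADUs.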

See other corpus statistics in Peldszus (2015), Section 5.

Example

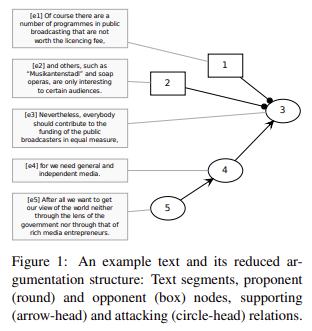

(Peldszus & Stede, 2015, p. 940, Figure 1)

Dataset Creation

This section is composed of information and excerpts provided in Peldszus (2015).

Curation Rationale

"Argumentation can, for theoretical purposes, be studied on the basis of carefully constructed examples that illustrate specific phenomena...[We] address this need by making a resource publicly available that is designed to fill a particular gap." (pp. 2-3)

Source Data

23 texts were written by the authors as a “proof of concept” for the idea. These texts have also been used as examples in teaching and testing argumentation analysis with students.

90 texts were collected in a controlled text generation experiment, in which normal competent language users wrote short texts of controlled linguistic and rhetorical complexity.

Initial Data Collection and Normalization

"Our contribution is a collection of 112 “microtexts” that have been written in response to trigger questions, mostly in the form of “Should one do X”. The texts are short but at the same time “complete” in that they provide a standpoint and a justification, by necessity in a fairly dense form." (p.2)

"The probands were asked to first gather a list with the pros and cons of the trigger question, then take stance for one side and argue for it on the basis of their reflection in a short argumentative text. Each text was to fulfill three requirements: It should be about five segments long; all segments should be argumentatively relevant, either formulating the main claim of the text, supporting the main claim or another segment, or attacking the main claim or another segment. Also, the probands were asked that at least one possible objection to the claim should be considered in the text. Finally, the text should be written in such a way that it would be understandable without having its trigger question as a headline." (p.3)

"[A]ll texts have been corrected for spelling and grammar errors...Their segmentation was corrected when necessary...some modifications in the remaining segments to maintain text coherence, which we made as minimal as possible." (p.4)

"We thus constrained the translation to preserve the segmentation of the text on the one hand (effectively ruling out phrasal translations of clause-type segments) and to preserve its linearization on the other hand (disallowing changes to the order of appearance of arguments)." (p.5)

Who are the source language producers?

The texts with ids b001-b064 and k001-k031 have been collected in a controlled text generation experiment from 23 subjects discussing various controversial issues from a fixed list. All probands were native speakers of German, of varying age, education and profession.

The texts with ids d01-d23 have been written by Andreas Peldszus, the author.

Annotations

Annotation process

All texts are annotated with argumentation structures, following the scheme proposed in Peldszus & Stede (2013). For inter-annotator-agreement scores see Peldszus (2014). The (German) annotation guidelines are published in Peldszus, Warzecha, Stede (2016). See the annotation guidelines (de, en), and the annotation schemes.

"[T]he markup of argumentation structures in the full corpus was done by one expert annotator. All annotations have been checked, controversial instances have been discussed in a reconciliation phase by two or more expert annotators...The annotation of the corpus was originally done manually on paper. In follow-up annotations, we used GraPAT (Sonntag & Stede, 2014)." (p.7)

Who are the annotators?

[More Information Needed]

Personal and Sensitive Information

[More Information Needed]

Considerations for Using the Data

Social Impact of Dataset

"Automatic argumentation recognition has many possible applications, including improving document summarization (Teufel and Moens, 2002), retrieval capabilities of legal databases (Palau and Moens, 2011), opinion mining for commercial purposes, or also as a tool for assessing public opinion on political questions.

"...[W]e suggest there is yet one resource missing that could facilitate the development of automatic argumentation recognition systems: Short texts with explicit argumentation, little argumentatively irrelevant material, less rhetorical gimmicks (or even deception), in clean written language." (Peldszus, 2014, p. 88)

Discussion of Biases

[More Information Needed]

Other Known Limitations

[More Information Needed]

Additional Information

Dataset Curators

[More Information Needed]

Licensing Information

The arg-microtexts corpus is released under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License (see license agreement).

Citation Information

@inproceedings{peldszus2015annotated,
  title={An annotated corpus of argumentative microtexts},
  author={Peldszus, Andreas and Stede, Manfred},
  booktitle={Argumentation and Reasoned Action: Proceedings of the 1st European Conference on Argumentation, Lisbon},
  volume={2},
  pages={801--815},
  year={2015}
}

@inproceedings{peldszus2014towards,
  title={Towards segment-based recognition of argumentation structure in short texts},
  author={Peldszus, Andreas},
  booktitle={Proceedings of the First Workshop on Argumentation Mining},
  pages={88--97},
  year={2014}
}

Contributions

Thanks to @idalr for adding this dataset.
