MiniGPT-4 checkpoint aligned with @panopstor's FF7R dataset (link in the EveryDream Discord). It produces captions that are more useful for building Stable Diffusion (SD) training datasets than MiniGPT-4's default output.

The easiest way to use this is to launch a Docker instance for oobabooga/text-generation-webui (e.g. TheBloke/runpod-pytorch-runclick) and follow the instructions for MiniGPT-4 here. For now, you'll need to manually edit this line in minigpt_pipeline.py so that it points to the .pth file in this repo instead of the default checkpoint.
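If you'd rather script the edit than make it by hand, the helper below is a minimal sketch, assuming the default checkpoint appears in minigpt_pipeline.py as a literal path string. The function name `repoint_checkpoint` and both paths are hypothetical: copy the exact default path from your copy of minigpt_pipeline.py, and use wherever you saved this repo's .pth file. It is not part of text-generation-webui or MiniGPT-4.

```python
from pathlib import Path


def repoint_checkpoint(pipeline_file: str, default_ckpt: str, new_ckpt: str) -> int:
    """Hypothetical helper: swap the default checkpoint path for a new one.

    `default_ckpt` must match the path string exactly as it appears in the
    file. Returns how many occurrences were replaced.
    """
    path = Path(pipeline_file)
    source = path.read_text(encoding="utf-8")
    count = source.count(default_ckpt)
    if count == 0:
        raise ValueError(f"{default_ckpt!r} not found in {pipeline_file}")
    # Save an untouched copy next to the original before rewriting it.
    Path(str(path) + ".bak").write_text(source, encoding="utf-8")
    path.write_text(source.replace(default_ckpt, new_ckpt), encoding="utf-8")
    return count


# Example usage (both paths are placeholders, not real defaults):
# repoint_checkpoint(
#     "minigpt_pipeline.py",
#     "path/to/default_checkpoint.pth",
#     "path/to/this_repo_checkpoint.pth",
# )
```

The helper refuses to write anything if the default path isn't found, so a typo in the path string can't silently leave the pipeline loading the wrong checkpoint.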

Dataset

Adapted from @panopstor's FF7R dataset (zip here).

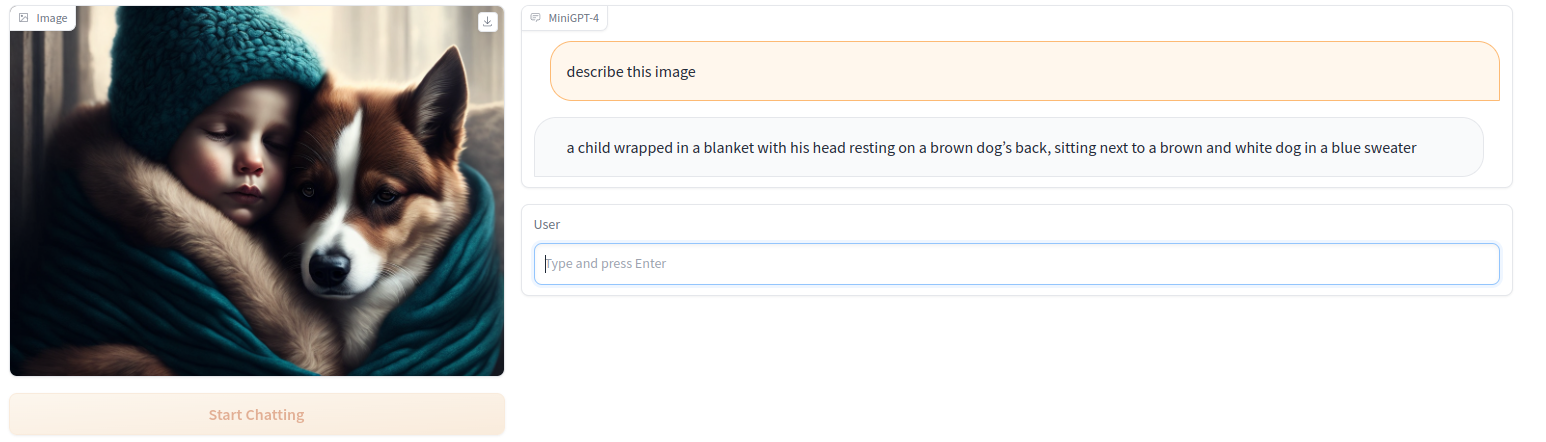

Sample output:
