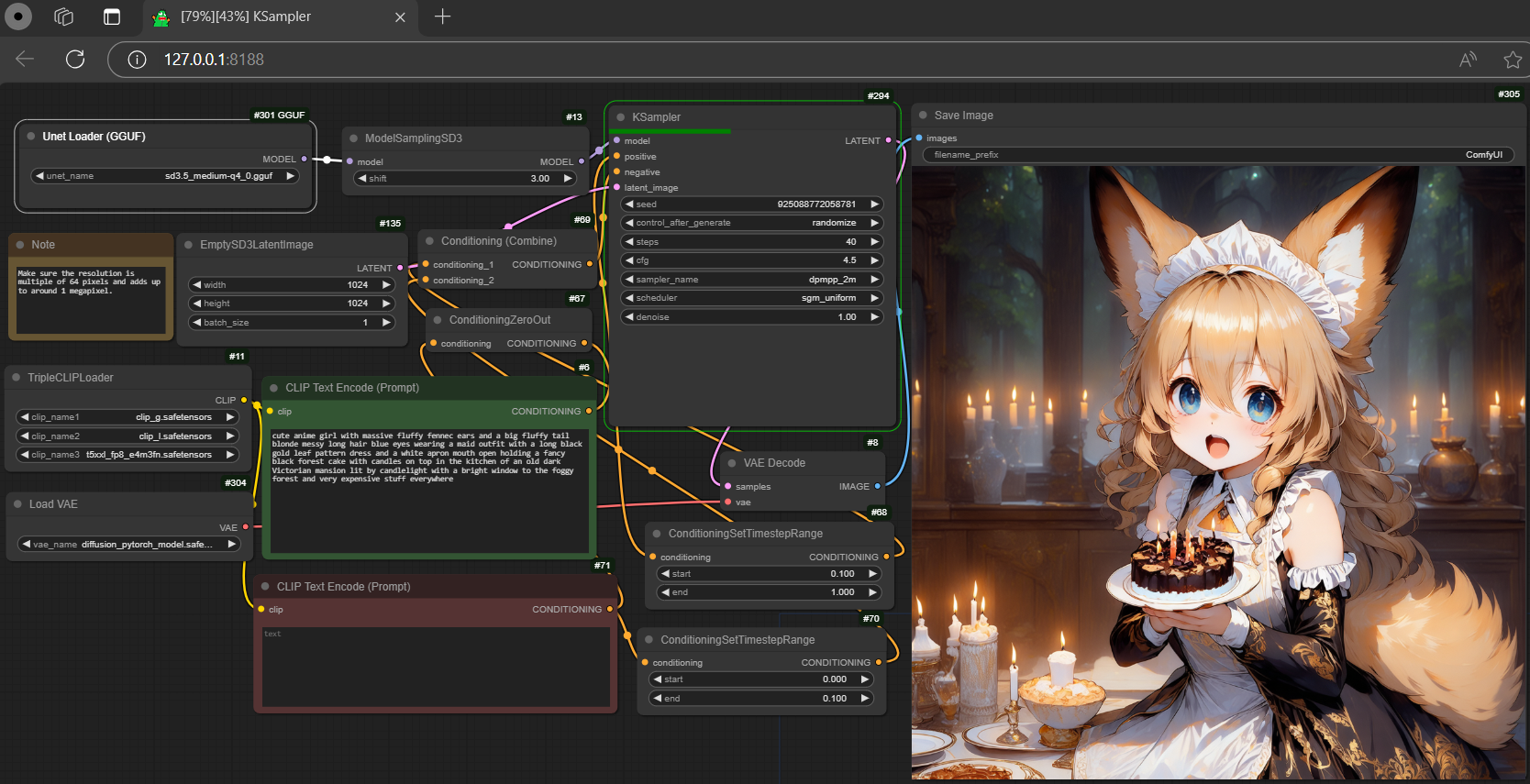

GGUF quantized version of Stable Diffusion 3.5 Medium

Setup (once)

- drag sd3.5_medium-q5_0.gguf (2.02GB) to > ./ComfyUI/models/unet

- drag clip_g.safetensors (1.39GB) to > ./ComfyUI/models/clip

- drag clip_l.safetensors (246MB) to > ./ComfyUI/models/clip

- drag t5xxl_fp8_e4m3fn.safetensors (4.89GB) to > ./ComfyUI/models/clip

- drag diffusion_pytorch_model.safetensors (168MB) to > ./ComfyUI/models/vae
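
If you prefer a script to dragging files by hand, the placement above can be sketched roughly as follows. This is only a minimal sketch: the file names and target folders are copied from the list above, while `place_models`, `download_dir` and `comfy_root` are hypothetical names made up for illustration and are not part of ComfyUI or the gguf-comfy pack.

```python
import shutil
from pathlib import Path

# File name -> folder relative to the ComfyUI root, as given in the setup list.
DESTINATIONS = {
    "sd3.5_medium-q5_0.gguf": "models/unet",
    "clip_g.safetensors": "models/clip",
    "clip_l.safetensors": "models/clip",
    "t5xxl_fp8_e4m3fn.safetensors": "models/clip",
    "diffusion_pytorch_model.safetensors": "models/vae",
}


def place_models(download_dir, comfy_root):
    """Move each listed file found in download_dir into its ComfyUI folder.

    Hypothetical helper for illustration only.
    Returns the names of listed files that were not found in download_dir.
    """
    download_dir, comfy_root = Path(download_dir), Path(comfy_root)
    missing = []
    for name, subdir in DESTINATIONS.items():
        src = download_dir / name
        if not src.is_file():
            missing.append(name)  # not downloaded yet, skip it
            continue
        target_dir = comfy_root / subdir
        target_dir.mkdir(parents=True, exist_ok=True)
        shutil.move(str(src), str(target_dir / name))
    return missing
```

Calling something like `place_models("Downloads", "./ComfyUI")` would move whatever is already downloaded and return the names still missing, so it can be run again after the remaining downloads finish.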

Run it straight (no installation needed)

- run the .bat file in the main directory (assuming you are using the gguf-comfy pack below)

- drag the workflow json file (see below) to > your browser

- generate your first picture with sd3, awesome!

Workflows

- example workflow for gguf (if it doesn't work, upgrade your pack: ggc y) 👻

- example workflow for the original safetensors 🎃

Bug reports (or brief review)

- t/q1_0 and t/q2_0: not working at the moment (invalid GGMLQ type error)

- q2_k is super fast but not usable; it might still be good for medical research or abstract painters

- the q3 family is fast; finger issues are easy to spot, but picture quality is surprisingly good

- btw, the q3 family is usable; it just needs some effort on the positive/negative prompt(s); good for users of old machines

- some quantized models have exactly the same file size, but they are different files (their SHA256 hashes differ); all of them are kept here (in the full set); see who can deal with it

- q4 and above should be no problem for general-to-high quality production (the demo picture shown above was generated with just Q4_0, 1.74GB); the sd team is pretty considerate; you might not think this model is useful if you have good hardware, but imagine being able to run it on merely an ancient CPU and you will probably appreciate their great effort; good job folks, thumbs up 👍
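
To check the same-size observation yourself, a rough sketch like the one below groups downloaded files by byte size and prints a SHA256 digest for each file in a group. It only uses the Python standard library; `sha256_of` and `same_size_groups` are hypothetical helper names for illustration, and the `*.gguf` pattern is an assumption about how the files are named locally.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Return the SHA256 hex digest of a file, read in chunks to limit memory use."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def same_size_groups(folder, pattern="*.gguf"):
    """Map each byte size shared by two or more files to {file name: SHA256 digest}.

    Hypothetical helper for illustration only.
    """
    by_size = {}
    for path in sorted(Path(folder).glob(pattern)):
        by_size.setdefault(path.stat().st_size, []).append(path)
    return {
        size: {p.name: sha256_of(p) for p in paths}
        for size, paths in by_size.items()
        if len(paths) > 1
    }


if __name__ == "__main__":
    # Assumed location; adjust to wherever the quantized files were saved.
    for size, hashes in same_size_groups("./ComfyUI/models/unet").items():
        print(f"{size} bytes:")
        for name, digest in hashes.items():
            print(f"  {digest}  {name}")
```

Files that share a size but show different digests are different files, which matches the note above.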

Upper tier options

References

- base model from stabilityai

- comfyui from comfyanonymous

- gguf node from city96

- gguf-comfy pack
