Vision transformer pre-trained with MAE on Formula 1 racing dataset

Vision transformer baze-sized (ViT base) feature model. Pre-trained with Masked Autoencoder (MAE) Self-Supervised approach on the custom Formula 1 racing dataset from Constructor SportsTech, allowes the extraction of features that are more efficient for use in Computer Vision tasks in the areas of racing and Formula 1 then features pre-trained on standard ImageNet-1K. This ViT model is ready for use in Transformers library realization of MAE.

Model Details

- Model type: feature backbone

- Image size: 224 x 224

- Original MAE repo: https://github.com/facebookresearch/mae

- Original paper: Masked Autoencoders Are Scalable Vision Learners (https://arxiv.org/abs/2111.06377)

Training Procedure

F1 ViT-base MAE was pre-trained on the custom dataset containing more than 1 million Formula 1 images from seasons 2021, 2022, 2023 with both racing and non racing scenes. The traing was performed on a cluster of 8 A100 80GB GPUs provided by Nebius who invited us to technical preview of their platform.

Training Hyperparameters

- Masking proportion during pre-training: 75 %

- Normalized pixels during pre-training: False

- Epochs: 500

- Batch size: 4096

- Learning rate: 3e-3

- Warmup: 40 epochs

- Optimizer: AdamW

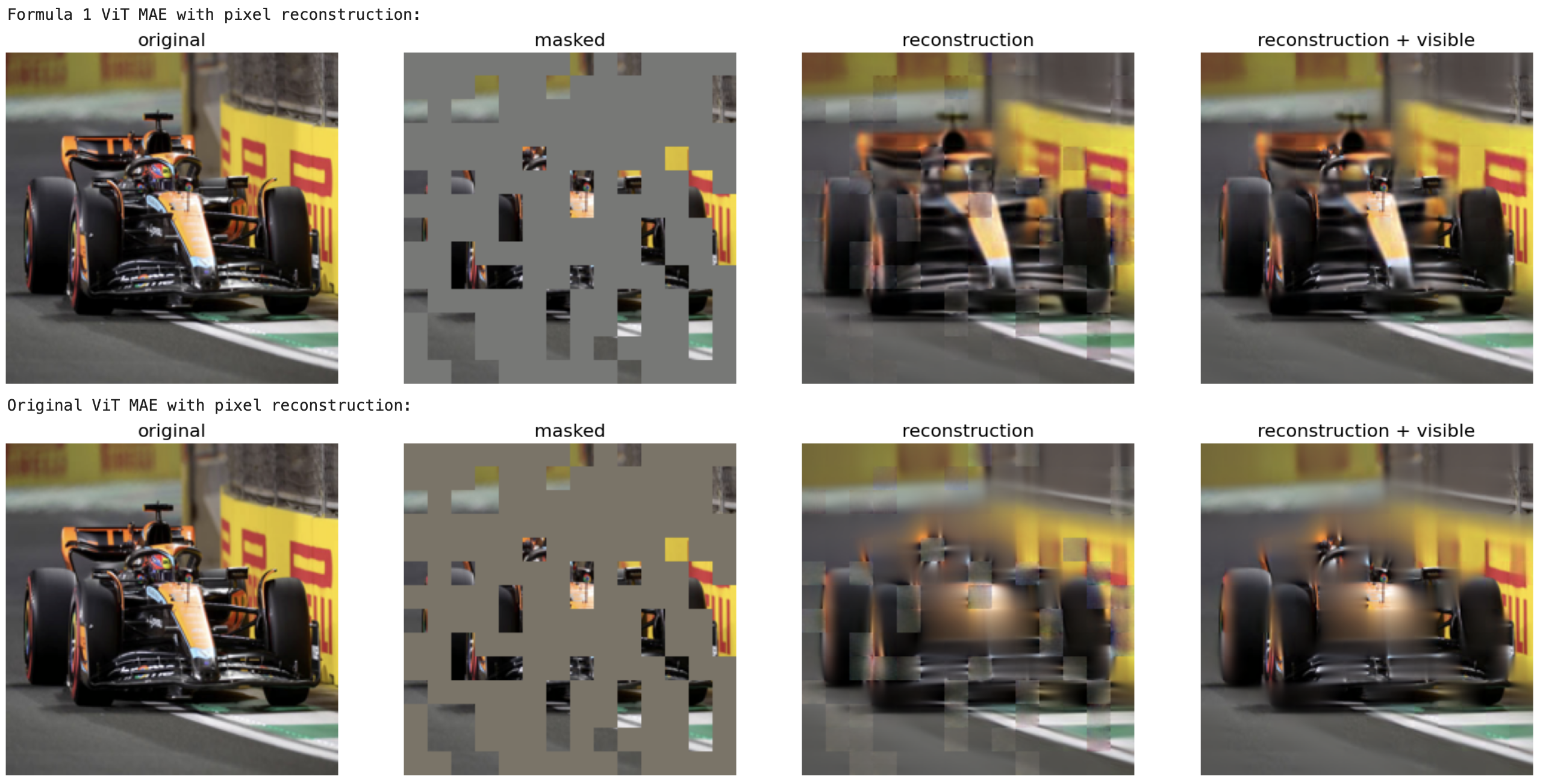

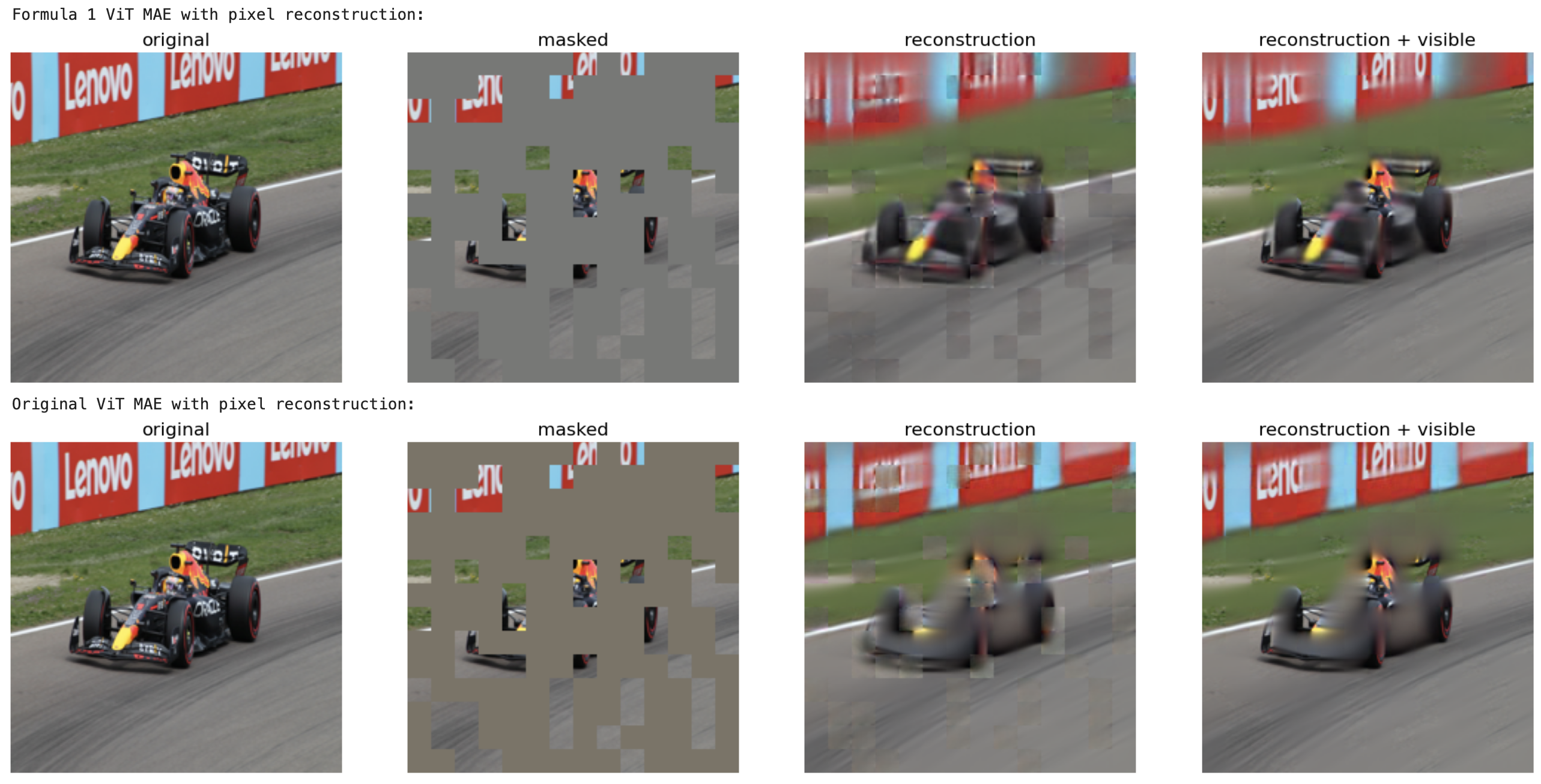

Comparison with ViT-base MAE pre-trained on ImageNet-1K

Comparison of F1 ViT-base MAE and original ViT-base MAE pre-trained on ImageNet-1K by reconstruction results on images from Formula 1 domain. Top is F1 ViT-base MAE reconstruction output, bottom is original ViT-base MAE.

How to use

Usage is the same as in Transformers library realization of MAE.

from transformers import AutoImageProcessor, ViTMAEForPreTraining

from PIL import Image

import requests

url = 'https://huggingface.co/andrewbo29/vit-mae-base-formula1/blob/main/racing_example.jpg'

image = Image.open(requests.get(url, stream=True).raw)

processor = AutoImageProcessor.from_pretrained('andrewbo29/vit-mae-base-formula1')

model = ViTMAEForPreTraining.from_pretrained('andrewbo29/vit-mae-base-formula1')

inputs = processor(images=image, return_tensors="pt")

outputs = model(**inputs)

loss = outputs.loss

mask = outputs.mask

ids_restore = outputs.ids_restore

BibTeX entry and citation info

@article{DBLP:journals/corr/abs-2111-06377,

author = {Kaiming He and

Xinlei Chen and

Saining Xie and

Yanghao Li and

Piotr Doll{\'{a}}r and

Ross B. Girshick},

title = {Masked Autoencoders Are Scalable Vision Learners},

journal = {CoRR},

volume = {abs/2111.06377},

year = {2021},

url = {https://arxiv.org/abs/2111.06377},

eprinttype = {arXiv},

eprint = {2111.06377},

timestamp = {Tue, 16 Nov 2021 12:12:31 +0100},

biburl = {https://dblp.org/rec/journals/corr/abs-2111-06377.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

- Downloads last month

- 6