pegasus-large-cnn-quantized

This model is a fine-tuned version of google/pegasus-large on the CNN/DailyMail dataset.

Model description

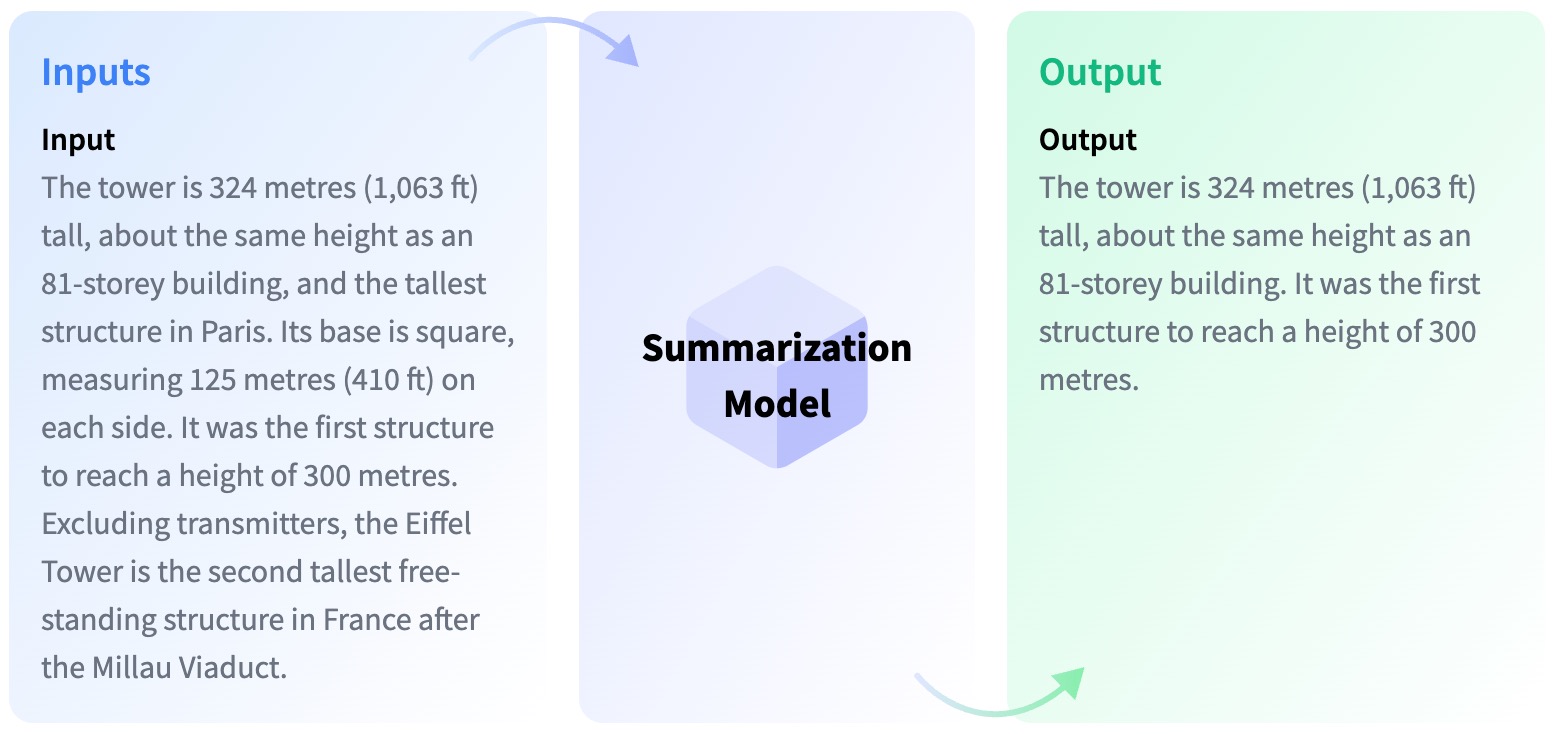

Online news reading has become one of the most popular ways to consume the latest news. News aggregation websites such as Google News and Yahoo News make it easy to find the latest news by providing thousands of stories from hundreds of publishers. Because people have limited time, reading every article is not feasible. The success of zero-shot and few-shot prompting with models like GPT-3 has led to a paradigm shift in NLP. In the era of ChatGPT, we conducted experiments using various large language models (LLMs) such as BART and PEGASUS to improve the quality and coherence of the generated news summaries.

This is a quantized model fine-tuned on the CNN/DailyMail dataset.

Training procedure

Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 4

- eval_batch_size: 4

- seed: 123

- optimizer: AdamW with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5.0
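
To make the linear schedule concrete, the sketch below computes how many optimizer steps a run with these settings would take and what the learning rate would be at a given step. It is an illustration only, not the training script behind this model: the helper names `total_training_steps` and `linear_lr` are hypothetical, and the code assumes a single device, no gradient accumulation, and zero warmup steps, none of which are stated in this card. The example dataset size of 1000 is a placeholder, not the size of the data actually used.

```python
import math


def total_training_steps(num_examples, batch_size=4, num_epochs=5):
    """Optimizer steps for a run, assuming one device and no gradient accumulation."""
    steps_per_epoch = math.ceil(num_examples / batch_size)
    return steps_per_epoch * num_epochs


def linear_lr(step, total_steps, base_lr=5e-5, warmup_steps=0):
    """Learning rate at `step` under linear decay to zero.

    warmup_steps=0 is an assumption; the card does not list a warmup setting.
    """
    if step < warmup_steps:
        # Linear ramp from 0 up to base_lr during warmup.
        return base_lr * (step / max(1, warmup_steps))
    # Linear decay from base_lr down to 0 over the remaining steps.
    remaining = max(0, total_steps - step)
    return base_lr * (remaining / max(1, total_steps - warmup_steps))


if __name__ == "__main__":
    # Placeholder dataset size, for illustration only.
    total = total_training_steps(num_examples=1000)
    for step in (0, total // 2, total):
        print(step, linear_lr(step, total))
```

Under these assumptions the rate starts at 5e-05, falls to half that value midway through training, and reaches zero at the final step.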

Framework versions

- Transformers 4.29.0

- PyTorch 1.13.1+cu117

- Datasets 2.12.0

- Tokenizers 0.13.3
