Emojich

generate emojis from text

The model was trained by Sber AI.

- Task: text2image generation
- Num Parameters: 1.3 B
- Training Data Volume: 120 million text-image pairs & 2749 text-emoji pairs

Model Description

😋 Emojich is a 1.3-billion-parameter model from the GPT-3-like family. It generates emoji-style images with the brain of ◾ Malevich.

Fine-tuning stage:

The main goal of fine-tuning is to preserve the generalization ability of the ruDALL-E Malevich (XL) model on text-to-emoji tasks. ruDALL-E Malevich is a large multimodal pretrained transformer that works with both images and texts. Freezing the feedforward and self-attention layers of a pretrained transformer has been shown to perform well when transferring between modalities. However, the model is also prone to overfitting the text modality and losing generalization. To deal with this, the coefficient for the image-codebook part of the weighted cross-entropy loss is increased to 10^3.
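The loss reweighting and layer freezing described above can be sketched in plain Python. This is a minimal illustration, not the Emojich training code: it assumes every sequence position is tagged as either a text or an image token, and it assumes the two per-modality mean losses are combined as (L_text + w * L_image) / (w + 1) with w = 10^3, since the exact normalization is not stated here. The `trainable_parameter_names` helper and the parameter names it matches are hypothetical; they only show the idea of excluding self-attention and feedforward weights from training.

```python
import math
from typing import List, Sequence

IMAGE_LOSS_WEIGHT = 1e3  # coefficient for the image-codebook part of the loss


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """Negative log-likelihood of `target` under softmax(logits), computed stably."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_z - logits[target]


def weighted_text_image_loss(
    logits: Sequence[Sequence[float]],
    targets: Sequence[int],
    is_image: Sequence[bool],
    image_weight: float = IMAGE_LOSS_WEIGHT,
) -> float:
    """Weighted mix of mean text-token and mean image-token cross-entropy.

    Assumption: the mix is (L_text + w * L_image) / (w + 1).
    """
    text_losses: List[float] = []
    image_losses: List[float] = []
    for row, target, img in zip(logits, targets, is_image):
        (image_losses if img else text_losses).append(cross_entropy(row, target))
    if not text_losses or not image_losses:
        raise ValueError("need at least one text token and one image token")
    loss_text = sum(text_losses) / len(text_losses)
    loss_image = sum(image_losses) / len(image_losses)
    return (loss_text + image_weight * loss_image) / (image_weight + 1)


# Hypothetical substrings marking self-attention and feedforward weights.
FROZEN_PATTERNS = ("attention", "mlp")


def trainable_parameter_names(
    names: Sequence[str], frozen_patterns: Sequence[str] = FROZEN_PATTERNS
) -> List[str]:
    """Keep only parameters whose names match none of the frozen patterns."""
    return [n for n in names if not any(p in n for p in frozen_patterns)]


if __name__ == "__main__":
    # Two text positions and one image position over a toy 3-token vocabulary.
    logits = [[2.0, 0.5, 0.1], [0.2, 1.5, 0.3], [0.1, 0.2, 3.0]]
    targets = [0, 1, 2]
    is_image = [False, False, True]
    print(weighted_text_image_loss(logits, targets, is_image))

    names = [
        "layers.0.attention.query_key_value.weight",
        "layers.0.mlp.dense_h_to_4h.weight",
        "layers.0.input_layernorm.weight",
        "text_embeddings.weight",
    ]
    print(trainable_parameter_names(names))
```

With w = 10^3, the image-token term dominates the combined loss, which is the intended pressure against overfitting the text modality. In a PyTorch setting, the same selection would typically be applied by setting `requires_grad = False` on the frozen parameters.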

The full version of the training code is available on Kaggle:

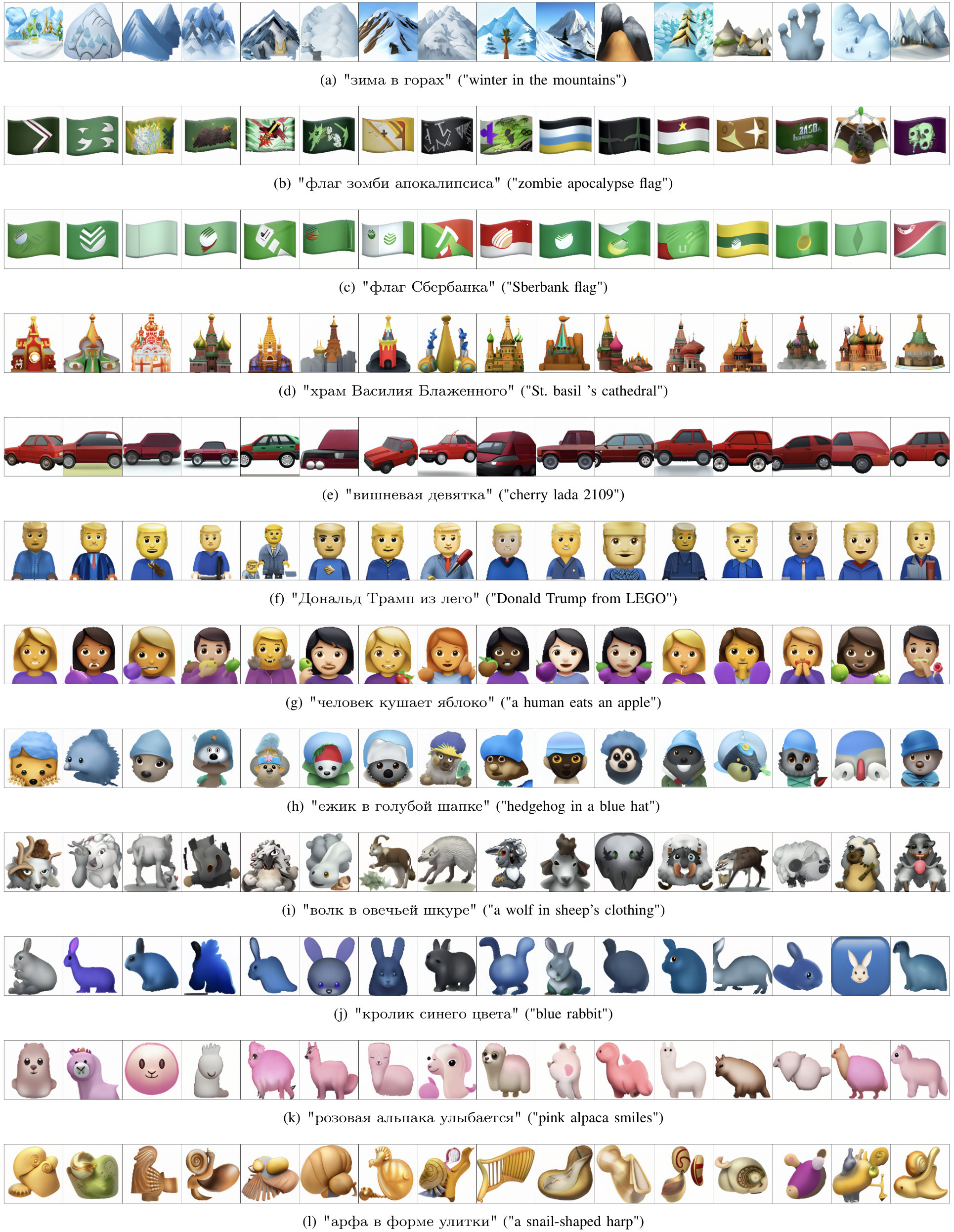

Examples of generated emojis

All examples were generated automatically (without manual cherry-picking) with the following hyperparameters: seed 42, batch size 16, top-k 2048, top-p 0.995, temperature 1.0, on an A100 GPU. To get better emojis, use more attempts (~512) and select the best one manually.
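The sampling hyperparameters listed above (top-k, top-p, temperature) can be illustrated with a minimal pure-Python sketch of temperature-scaled top-k/top-p (nucleus) filtering for a single next-token step. It is not the ruDALL-E sampling code: applying top-k before top-p and renormalizing between the two is an assumption modeled on common implementations, and `top_k_top_p_distribution` and `sample_token` are hypothetical names. Seeding Python's `random.Random(42)` only makes this toy sampler deterministic; it does not reproduce the published examples.

```python
import math
import random
from typing import Dict, Sequence


def top_k_top_p_distribution(
    logits: Sequence[float], top_k: int, top_p: float, temperature: float = 1.0
) -> Dict[int, float]:
    """Return the renormalized distribution after temperature, top-k and top-p filtering."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    z = sum(exps)
    probs = [e / z for e in exps]

    # Token ids sorted from most to least likely.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    # Top-k: keep at most k candidates (k <= 0 disables the filter).
    if top_k > 0:
        order = order[:top_k]

    # Top-p (nucleus): keep the smallest prefix whose renormalized mass reaches top_p.
    mass = sum(probs[i] for i in order)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i] / mass
        if cumulative >= top_p:
            break

    total = sum(probs[i] for i in kept)
    return {i: probs[i] / total for i in kept}


def sample_token(
    logits: Sequence[float],
    rng: random.Random,
    top_k: int = 2048,
    top_p: float = 0.995,
    temperature: float = 1.0,
) -> int:
    """Draw one token id from the filtered distribution."""
    dist = top_k_top_p_distribution(logits, top_k, top_p, temperature)
    ids = list(dist)
    return rng.choices(ids, weights=[dist[i] for i in ids], k=1)[0]


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed for a deterministic toy run
    toy_logits = [2.0, 1.0, 0.0, -1.0]
    print(top_k_top_p_distribution(toy_logits, top_k=2, top_p=0.995))
    print([sample_token(toy_logits, rng) for _ in range(8)])
```

Temperature 1.0 leaves the logits unchanged, so with top-k 2048 and top-p 0.995 the filters only trim the low-probability tail of each step's distribution; most of the diversity across the ~512 attempts comes from the sampling itself.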

Remember, great artists became "great" after creating just one masterpiece.