license: apache-2.0

language:

- en

- az

- sw

- af

- ar

- ba

- be

- bxr

- bg

- bn

- cv

- hy

- da

- de

- el

- es

- eu

- fa

- fi

- fr

- he

- hi

- hu

- kk

- id

- it

- ja

- ka

- ky

- ko

- lt

- lv

- mn

- ml

- os

- mr

- ms

- my

- nl

- ro

- pl

- pt

- sah

- ru

- tg

- sv

- ta

- te

- tk

- th

- tr

- tl

- tt

- tyv

- uk

- ur

- vi

- uz

- yo

- zh

- xal

pipeline_tag: text-generation

tags:

- PyTorch

- Transformers

- gpt3

- gpt2

- Deepspeed

- Megatron

datasets:

- mc4

- wikipedia

thumbnail: https://github.com/sberbank-ai/mgpt

Multilingual GPT model

We introduce a family of autoregressive GPT-like models with 1.3 billion parameters, trained on 60 languages from 25 language families using Wikipedia and the Colossal Clean Crawled Corpus.

We reproduce the GPT-3 architecture using GPT-2 sources and the sparse attention mechanism. The Deepspeed and Megatron frameworks allow us to effectively parallelize the training and inference steps. The resulting models perform on par with the recently released XGLM models while covering more languages and enhancing NLP possibilities for low-resource languages.
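Since the card is tagged for text generation with Transformers, a minimal generation sketch might look like the following. The Hub identifier `sberbank-ai/mGPT`, the helper name `generate_text`, and the sampling settings are assumptions made for illustration; none of them is stated in this card.

```python
# Assumed Hub identifier; this card does not state it, so adjust as needed.
MODEL_ID = "sberbank-ai/mGPT"


def generate_text(prompt, model_id=MODEL_ID, max_new_tokens=30):
    """Return `prompt` plus a sampled continuation from a causal language model."""
    # Imported lazily so this module loads even without torch/transformers installed.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)
    model.eval()

    # Tokenize the prompt into PyTorch tensors.
    inputs = tokenizer(prompt, return_tensors="pt")

    # Sample a continuation; top_p=0.95 is an illustrative choice, not a recommendation.
    with torch.no_grad():
        output_ids = model.generate(
            **inputs,
            max_new_tokens=max_new_tokens,
            do_sample=True,
            top_p=0.95,
        )
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


# Example call (downloads the weights on first use):
# print(generate_text("The capital of France is"))
```

Because the model is multilingual, the same helper can be called with a prompt in any of the supported languages.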

Code

The source code for the mGPT XL model is available on GitHub.

Paper

mGPT: Few-Shot Learners Go Multilingual

@misc{https://doi.org/10.48550/arxiv.2204.07580,

doi = {10.48550/ARXIV.2204.07580},

url = {https://arxiv.org/abs/2204.07580},

author = {Shliazhko, Oleh and Fenogenova, Alena and Tikhonova, Maria and Mikhailov, Vladislav and Kozlova, Anastasia and Shavrina, Tatiana},

keywords = {Computation and Language (cs.CL), Artificial Intelligence (cs.AI), FOS: Computer and information sciences, I.2; I.2.7, 68-06, 68-04, 68T50, 68T01},

title = {mGPT: Few-Shot Learners Go Multilingual},

publisher = {arXiv},

year = {2022},

copyright = {Creative Commons Attribution 4.0 International}

}

Languages

The model supports 60 languages:

ISO codes:

az, sw, af, ar, ba, be, bxr, bg, bn, cv, hy, da, de, el, es, eu, fa, fi, fr, he, hi, hu, kk, id, it, ja, ka, ky, ko, lt, lv, mn, ml, os, mr, ms, my, nl, ro, pl, pt, sah, ru, tg, sv, ta, te, tk, th, tr, tl, tt, tyv, uk, en, ur, vi, uz, yo, zh, xal

Languages:

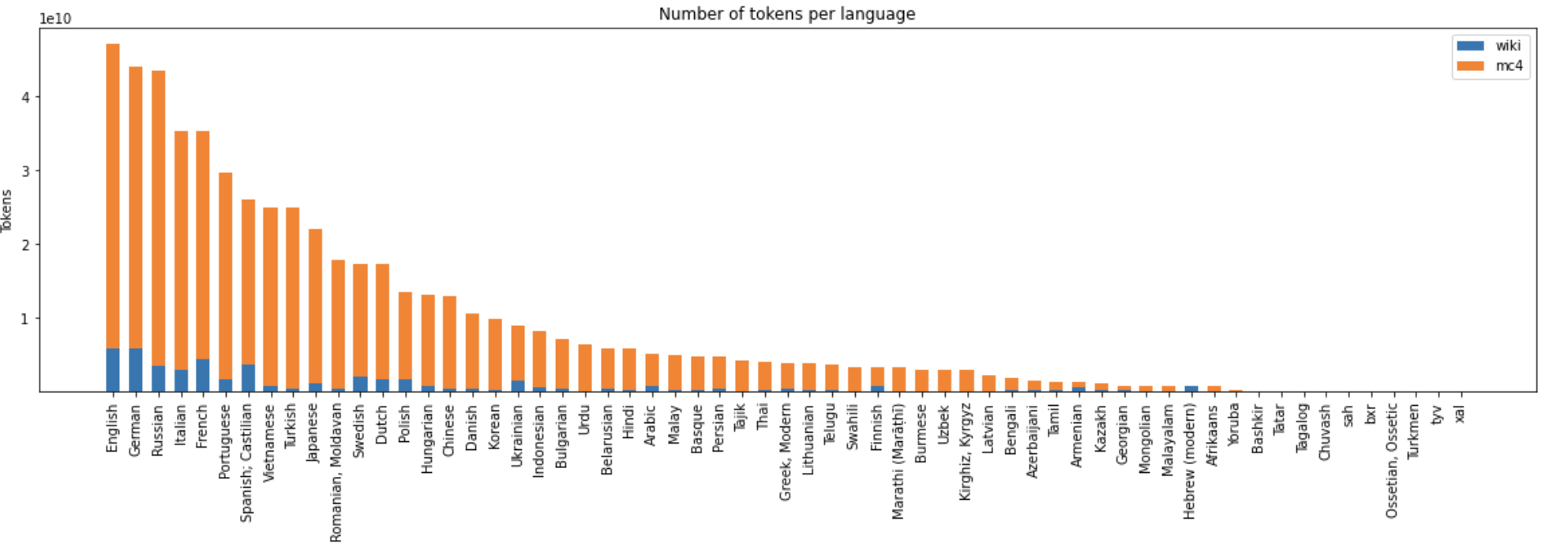

Training Data Statistics

- Size: 488 billion UTF characters

"General training corpus statistics"

Details

The model was trained with a sequence length of 512 using the Megatron and Deepspeed libraries by the SberDevices team on a dataset of 600 GB of text in 60 languages. The model has seen 440 billion BPE tokens in total.

Total training time was around 12 days on 256 Nvidia V100 GPUs.
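The figures above imply some rough derived quantities, worked out in the short sketch below. It assumes that every training sequence held the full 512 tokens and that all 256 GPUs ran for the whole 12 days; since the training time is given as "around 12 days", the results are estimates only. The function name `training_estimates` is hypothetical.

```python
# Figures quoted in the Details section of this card.
TOKENS_SEEN = 440e9      # BPE tokens seen during training
SEQUENCE_LENGTH = 512    # tokens per training sequence
TRAINING_DAYS = 12       # "around 12 days", so results are approximate
NUM_GPUS = 256           # Nvidia V100 GPUs


def training_estimates(tokens=TOKENS_SEEN, seq_len=SEQUENCE_LENGTH,
                       days=TRAINING_DAYS, gpus=NUM_GPUS):
    """Derive back-of-the-envelope training statistics from the quoted figures."""
    seconds = days * 24 * 60 * 60
    return {
        # Assumes every sequence is filled to the full sequence length.
        "sequences": tokens / seq_len,
        # Assumes all GPUs were busy for the entire training period.
        "gpu_hours": days * 24 * gpus,
        "tokens_per_second": tokens / seconds,
        "tokens_per_second_per_gpu": tokens / seconds / gpus,
    }


if __name__ == "__main__":
    for name, value in training_estimates().items():
        print(f"{name}: {value:,.0f}")
```

Under these assumptions the run covers roughly 859 million sequences and 73,728 GPU-hours, at an aggregate throughput of about 424 thousand tokens per second, or about 1.7 thousand tokens per second per GPU.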