File size: 5,192 Bytes

adf14b0 b767fe7 adf14b0 b767fe7 |

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 |

---

license: apache-2.0

inference: true

tags:

- vicuna

---

**This model is a 8bit quantization of Vicuna 13B.**

- 13B parameters

- Group size: 128

- wbits: 8

- true-sequential: yes

- act-order: yes

- 8-bit quantized - Read more about this here: https://github.com/ggerganov/llama.cpp/pull/951

- Conversion process: Llama 13B -> Llama 13B HF -> Vicuna13B-v1.1 HF -> Vicuna13B-v1.1-8bit-128g

<br>

<br>

# Basic installation procedure

- It was a nightmare, I will only detail briefly what you'll need. WSL was quite painful to sort out.

- I will not provide installation support, sorry.

- You can certainly use llama.cpp and other loaders that support 8bit quantization, I just chose oobabooga/text-generation-webui.

- You will likely face many bugs until text-generation-webui loads, ranging between missing PATH or env variables to having to manually pip uninstall/install packages.

- The notes below will likely become outdated once both text-generation-webui and GPTQ-for-LLaMa receive the appropriate bug fixes.

- If this model produces very slow answers (1 token/s), it means you are not using Cuda for bitsandbytes or that your hardware needs an upgrade.

- If this model produces answers with weird characters, it means you are not using the correct version of qwopqwop200/GPTQ-for-LLaMa as mentioned below.

- If this model produces answers that are out of topic or if it talks to itself, it means you are not using the correct checkout 508de42 of qwopqwop200/GPTQ-for-LLaMa as mentioned below.

Cuda (Slow tokens/s):

```

git clone https://github.com/oobabooga/text-generation-webui

cd text-generation-webui

pip install -r requirements.txt

mkdir repositories

cd repositories

git clone https://github.com/qwopqwop200/GPTQ-for-LLaMa.git -b cuda # Make sure you obtain the qwopqwop200 version, not the oobabooga one! (because "act-order: yes")

cd GPTQ-for-LLaMa

pip install -r requirements.txt

python setup_cuda.py install

```

Triton (Fast tokens/s) - Works on Windows with WSL (what I've used) or Linux:

```

git clone https://github.com/oobabooga/text-generation-webui

cd text-generation-webui

git fetch origin pull/1229/head:triton # This is the version that supports Triton - https://github.com/oobabooga/text-generation-webui/pull/1229

git checkout triton

pip install -r requirements.txt

mkdir repositories

cd repositories

git clone https://github.com/qwopqwop200/GPTQ-for-LLaMa.git # -b cuda

cd GPTQ-for-LLaMa

git checkout 508de42 # Before qwopqwop200 broke everything... - https://github.com/qwopqwop200/GPTQ-for-LLaMa/issues/183

pip install -r requirements.txt

```

<br>

<br>

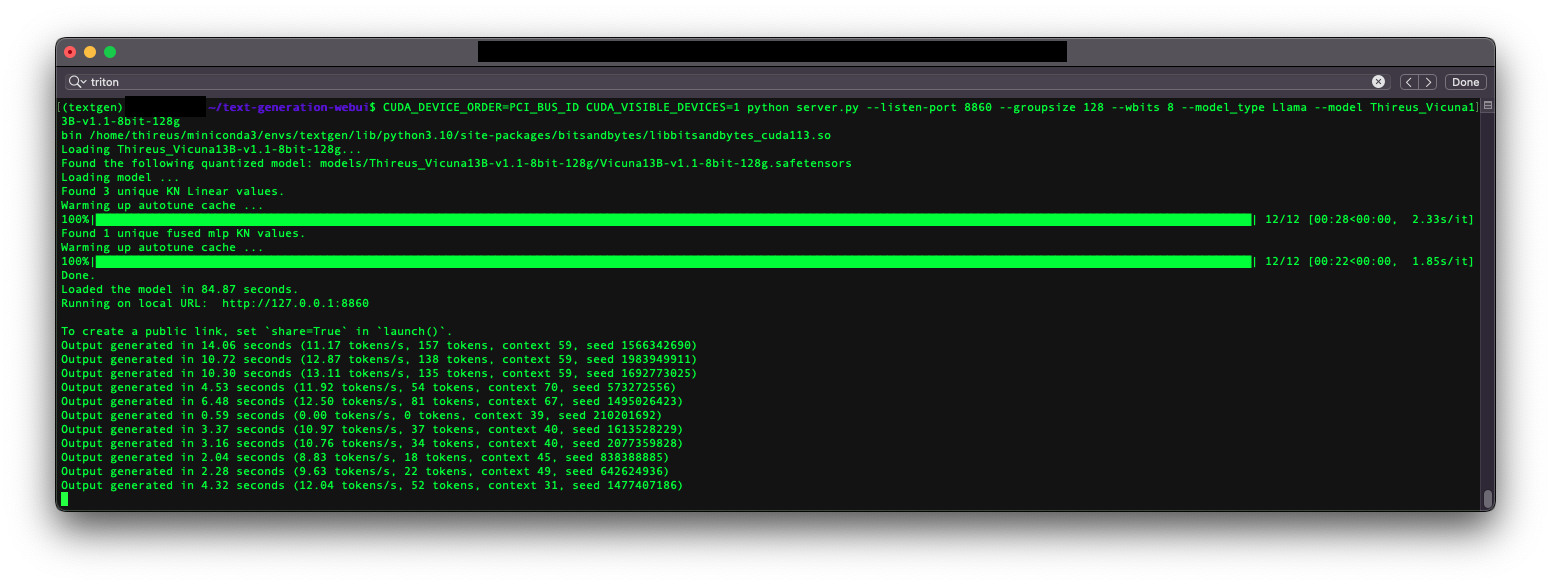

# Testbench detail and results

- Latest version of oobabooga + https://github.com/oobabooga/text-generation-webui/pull/1229

- NVIDIA GTX 3090

- 32BG DDR4

- i9-7980XE OC @4.6Ghz

- 11 tokens/s on average with Triton

- Preliminary observations: better results than --load-in-8bits (To Be Confirmed)

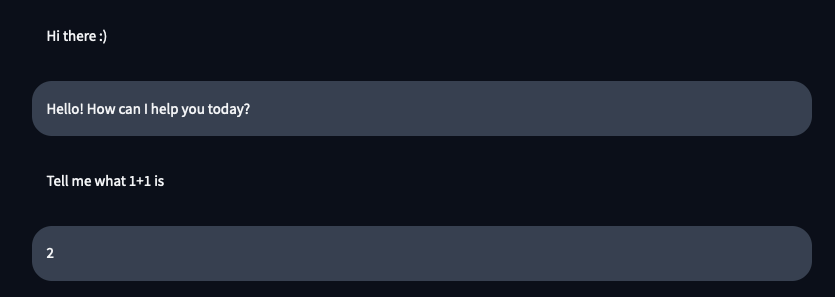

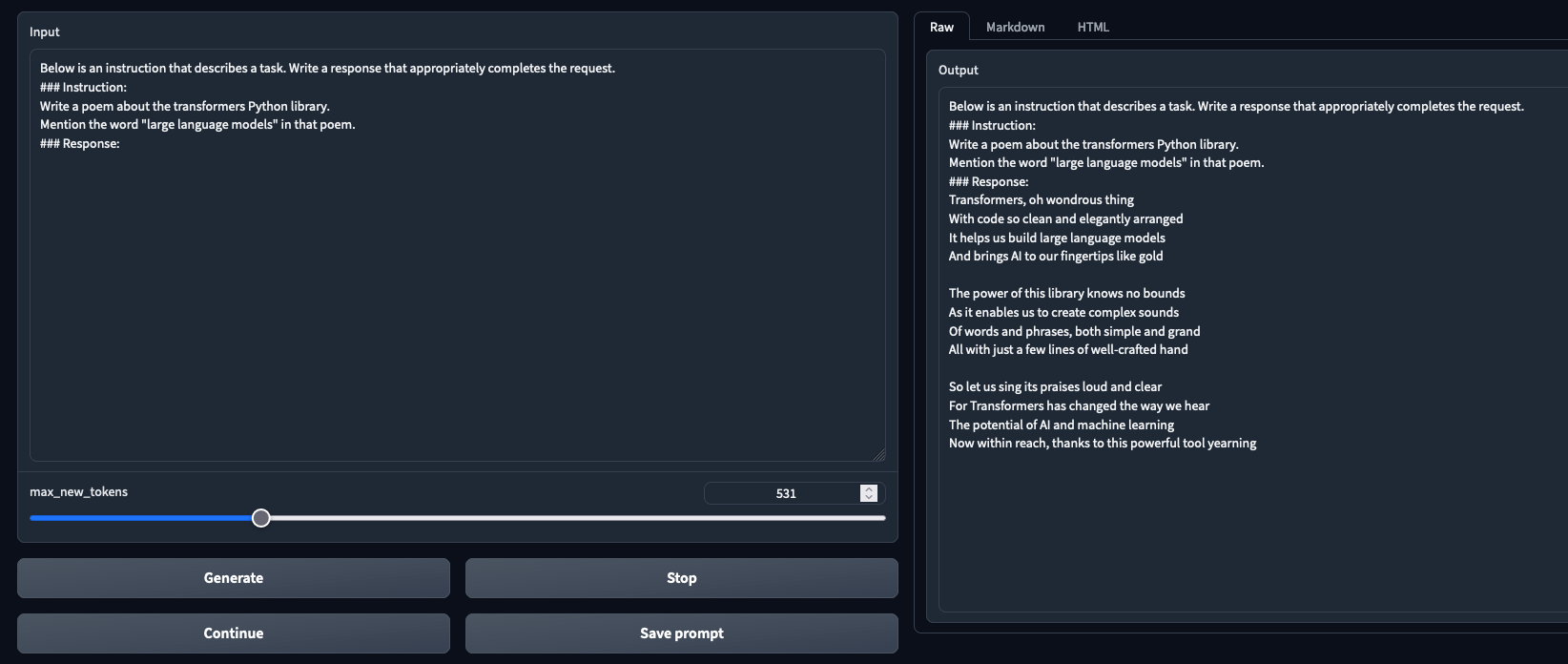

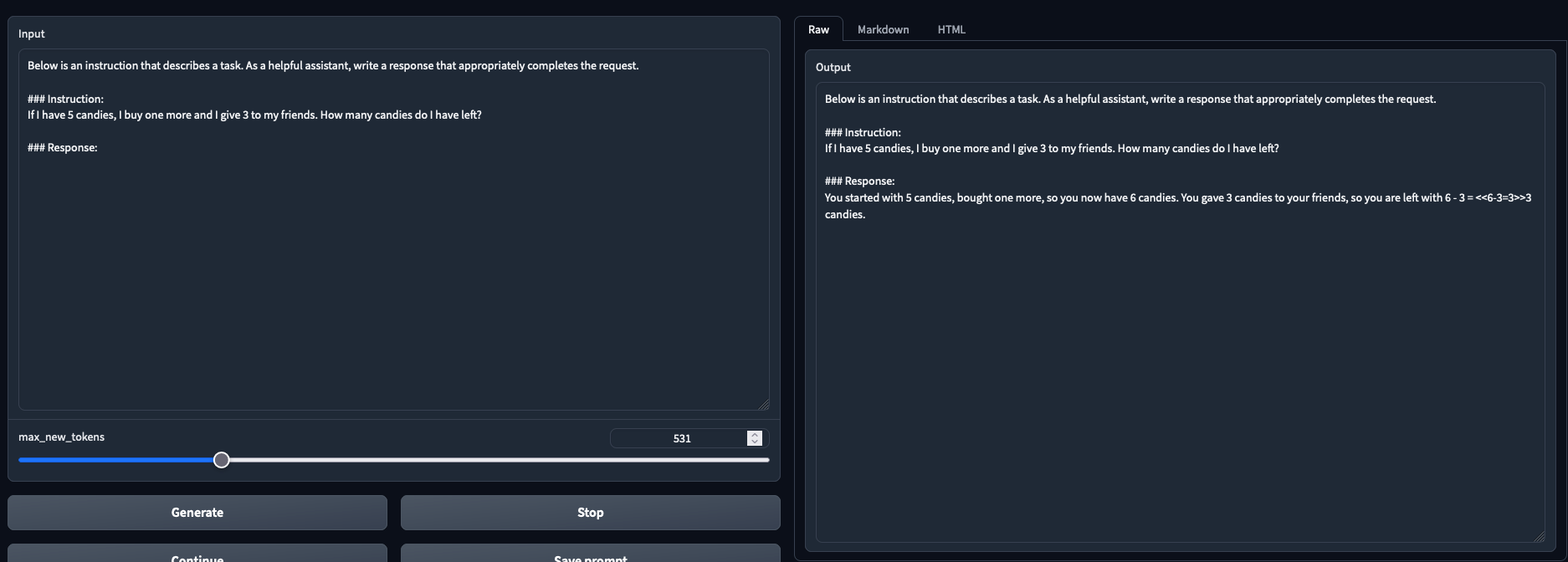

- Tested and working in both chat mode and text generation mode

<br>

<br>

# Vicuna Model Card

## Model details

**Model type:**

Vicuna is an open-source chatbot trained by fine-tuning LLaMA on user-shared conversations collected from ShareGPT.

It is an auto-regressive language model, based on the transformer architecture.

**Model date:**

Vicuna was trained between March 2023 and April 2023.

**Organizations developing the model:**

The Vicuna team with members from UC Berkeley, CMU, Stanford, and UC San Diego.

**Paper or resources for more information:**

https://vicuna.lmsys.org/

**License:**

Apache License 2.0

**Where to send questions or comments about the model:**

https://github.com/lm-sys/FastChat/issues

## Intended use

**Primary intended uses:**

The primary use of Vicuna is research on large language models and chatbots.

**Primary intended users:**

The primary intended users of the model are researchers and hobbyists in natural language processing, machine learning, and artificial intelligence.

## Training dataset

70K conversations collected from ShareGPT.com.

## Evaluation dataset

A preliminary evaluation of the model quality is conducted by creating a set of 80 diverse questions and utilizing GPT-4 to judge the model outputs. See https://vicuna.lmsys.org/ for more details.

## Major updates of weights v1.1

- Refactor the tokenization and separator. In Vicuna v1.1, the separator has been changed from `"###"` to the EOS token `"</s>"`. This change makes it easier to determine the generation stop criteria and enables better compatibility with other libraries.

- Fix the supervised fine-tuning loss computation for better model quality. |