Upload folder using huggingface_hub #4

Opened by sharpenb

Files changed:

- README.md +6 -5
- config.json +1 -1
- model/optimized_model.pkl +2 -2
- model/smash_config.json +1 -1
- plots.png +0 -0

README.md (CHANGED)

@@ -19,17 +19,18 @@ metrics:
 </div>
 <!-- header end -->
 
-# Simply make AI models cheaper, smaller, faster, and greener!
-
 [](https://twitter.com/PrunaAI)
 [](https://github.com/PrunaAI)
 [](https://www.linkedin.com/company/93832878/admin/feed/posts/?feedType=following)
+[](https://discord.gg/CP4VSgck)
+
+# Simply make AI models cheaper, smaller, faster, and greener!
 
 - Give a thumbs up if you like this model!
 - Contact us and tell us which model to compress next [here](https://www.pruna.ai/contact).
 - Request access to easily compress your *own* AI models [here](https://z0halsaff74.typeform.com/pruna-access?typeform-source=www.pruna.ai).
 - Read the documentations to know more [here](https://pruna-ai-pruna.readthedocs-hosted.com/en/latest/)
--
+- Join Pruna AI community on Discord [here](https://discord.gg/CP4VSgck) to share feedback/suggestions or get help.
 
 ## Results
 
@@ -76,9 +77,9 @@ You can run the smashed model with these steps:
 
 The configuration info are in `config.json`.
 
-## License
+## Credits & License
 
-We follow the same license as the original model. Please check the license of the original model nitrosocke/Arcane-Diffusion before using this model.
+We follow the same license as the original model. Please check the license of the original model nitrosocke/Arcane-Diffusion before using this model which provided the base model.
 
 ## Want to compress other models?
 

config.json (CHANGED)

@@ -1 +1 @@
-{"pruners": "None", "pruning_ratio":
+{"pruners": "None", "pruning_ratio": "None", "factorizers": "None", "quantizers": "None", "n_quantization_bits": 32, "output_deviation": 0.0, "compilers": "['step_caching', 'tiling', 'diffusers2']", "static_batch": true, "static_shape": false, "controlnet": "None", "unet_dim": 4, "device": "cuda", "batch_size": 1, "max_batch_size": 1, "image_height": 512, "image_width": 512, "version": "1.5", "task": "txt2img", "weight_name": "None", "model_name": "nitrosocke/Arcane-Diffusion", "save_load_fn": "stable_fast"}
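The new `config.json` stores some values as strings rather than native JSON types: `"None"` appears where a null would be expected, and `compilers` holds a Python-style list literal. The sketch below parses the line from this PR and decodes only those two patterns, leaving strings such as `"1.5"` and `"cuda"` untouched. It assumes (this is not documented in the PR) that these are the only string encodings in use; `load_config` and `decode_value` are hypothetical helper names, not part of any Pruna API.

```python
import ast
import json

# New config.json line from this PR, copied verbatim.
CONFIG_JSON = """{"pruners": "None", "pruning_ratio": "None", "factorizers": "None", "quantizers": "None", "n_quantization_bits": 32, "output_deviation": 0.0, "compilers": "['step_caching', 'tiling', 'diffusers2']", "static_batch": true, "static_shape": false, "controlnet": "None", "unet_dim": 4, "device": "cuda", "batch_size": 1, "max_batch_size": 1, "image_height": 512, "image_width": 512, "version": "1.5", "task": "txt2img", "weight_name": "None", "model_name": "nitrosocke/Arcane-Diffusion", "save_load_fn": "stable_fast"}"""


def decode_value(value):
    """Decode the string encodings assumed to be used in this config."""
    if value == "None":
        return None  # assumption: the string "None" means "not set"
    if isinstance(value, str) and value.startswith("["):
        # e.g. "['step_caching', 'tiling', 'diffusers2']" -> Python list
        return ast.literal_eval(value)
    return value  # everything else ("cuda", "1.5", ints, bools) is kept as-is


def load_config(text):
    """Parse a config.json string and decode its string-encoded values."""
    return {key: decode_value(value) for key, value in json.loads(text).items()}


config = load_config(CONFIG_JSON)
print(config["compilers"])  # ['step_caching', 'tiling', 'diffusers2']
print(config["pruners"])    # None
```

Decoding only these two patterns, instead of running `ast.literal_eval` on every string, avoids turning `"version": "1.5"` into a float.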

model/optimized_model.pkl (CHANGED)

@@ -1,3 +1,3 @@
 version https://git-lfs.github.com/spec/v1
-oid sha256:
-size
+oid sha256:754b139ac9927a861d41a31375d2c230c64b8ba5e68837256087cc6ecc83f475
+size 2743420938
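`model/optimized_model.pkl` is tracked with Git LFS, so the diff only touches its pointer file: the spec `version`, the `oid` (the SHA-256 digest of the file contents), and the `size` in bytes. A minimal sketch of building such a pointer and checking a downloaded blob against it follows. The helper names are hypothetical, and the sketch mirrors only the three-line layout shown in this diff, not every rule of the Git LFS pointer specification.

```python
import hashlib

LFS_SPEC = "https://git-lfs.github.com/spec/v1"


def make_pointer(data: bytes) -> str:
    """Build a pointer with the same three lines as the one in this diff."""
    oid = hashlib.sha256(data).hexdigest()
    return f"version {LFS_SPEC}\noid sha256:{oid}\nsize {len(data)}\n"


def parse_pointer(text: str) -> dict:
    """Split each 'key value' line of a pointer file into a dict entry."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    return fields


def matches_pointer(data: bytes, pointer_text: str) -> bool:
    """Check a blob's SHA-256 digest and byte length against a pointer."""
    fields = parse_pointer(pointer_text)
    algo, _, digest = fields["oid"].partition(":")
    return (
        algo == "sha256"
        and hashlib.sha256(data).hexdigest() == digest
        and len(data) == int(fields["size"])
    )


# The new pointer for model/optimized_model.pkl from this PR.
NEW_POINTER = (
    "version https://git-lfs.github.com/spec/v1\n"
    "oid sha256:754b139ac9927a861d41a31375d2c230c64b8ba5e68837256087cc6ecc83f475\n"
    "size 2743420938\n"
)

print(parse_pointer(NEW_POINTER)["size"])  # 2743420938
```

The new `size` of 2743420938 bytes puts the pickled model at roughly 2.7 GB, which is why it lives in LFS rather than in the Git history itself.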

model/smash_config.json (CHANGED)

@@ -1 +1 @@
-{"api_key": "pruna_c4c77860c62a2965f6bc281841ee1d7bd3", "verify_url": "http://johnrachwan.pythonanywhere.com", "smash_config": {"pruners": "None", "pruning_ratio":
+{"api_key": "pruna_c4c77860c62a2965f6bc281841ee1d7bd3", "verify_url": "http://johnrachwan.pythonanywhere.com", "smash_config": {"pruners": "None", "pruning_ratio": "None", "factorizers": "None", "quantizers": "None", "n_quantization_bits": 32, "output_deviation": 0.0, "compilers": "['step_caching', 'tiling', 'diffusers2']", "static_batch": true, "static_shape": false, "controlnet": "None", "unet_dim": 4, "device": "cuda", "cache_dir": ".models/optimized_model", "batch_size": 1, "max_batch_size": 1, "image_height": 512, "image_width": 512, "version": "1.5", "task": "txt2img", "weight_name": "None", "model_name": "nitrosocke/Arcane-Diffusion", "save_load_fn": "stable_fast"}}

|

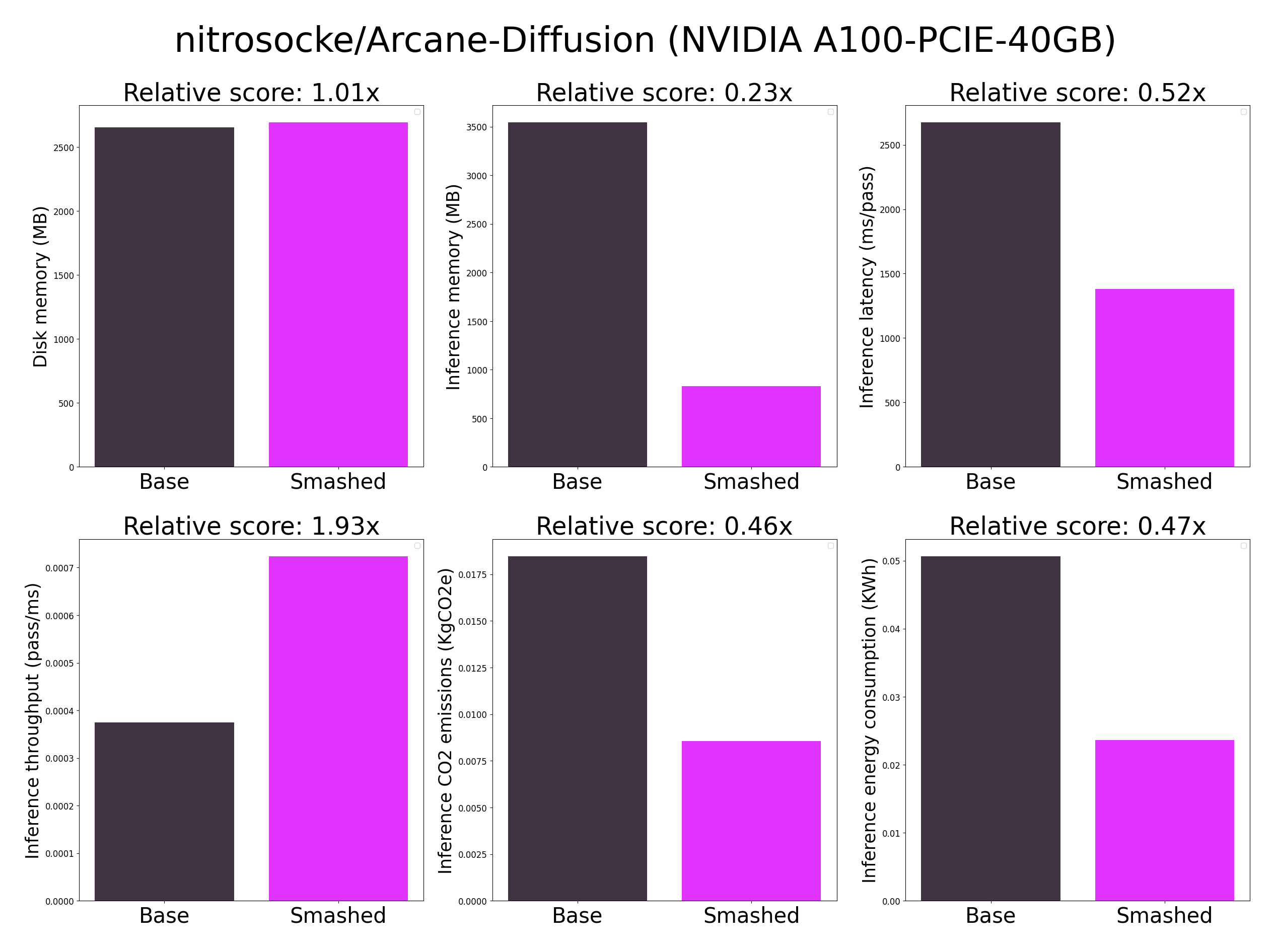

plots.png

CHANGED

|

|