Update README.md (#2, opened by danelcsb)

README.md

CHANGED

|

@@ -1,6 +1,9 @@

|

|

| 1 |

---

|

| 2 |

library_name: transformers

|

| 3 |

-

|

|

|

|

|

|

|

|

|

|

| 4 |

---

|

| 5 |

|

| 6 |

# Model Card for Model ID

|

|

@@ -13,7 +16,22 @@ tags: []

|

|

| 13 |

|

| 14 |

### Model Description

|

| 15 |

|

| 16 |

-


| 17 |

|

| 18 |

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

|

| 19 |

|

|

@@ -29,8 +47,8 @@ This is the model card of a 🤗 transformers model that has been pushed on the

|

|

| 29 |

|

| 30 |

<!-- Provide the basic links for the model. -->

|

| 31 |

|

| 32 |

-

- **Repository:**

|

| 33 |

-

- **Paper [optional]:**

|

| 34 |

- **Demo [optional]:** [More Information Needed]

|

| 35 |

|

| 36 |

## Uses

|

|

@@ -41,7 +59,7 @@ This is the model card of a 🤗 transformers model that has been pushed on the

|

|

| 41 |

|

| 42 |

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

|

| 43 |

|

| 44 |

-

[

|

| 45 |

|

| 46 |

### Downstream Use [optional]

|

| 47 |

|

|

@@ -71,7 +89,40 @@ Users (both direct and downstream) should be made aware of the risks, biases and

|

|

| 71 |

|

| 72 |

Use the code below to get started with the model.

|

| 73 |

|

| 74 |

-


| 75 |

|

| 76 |

## Training Details

|

| 77 |

|

|

@@ -79,21 +130,29 @@ Use the code below to get started with the model.

|

|

| 79 |

|

| 80 |

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

|

| 81 |

|

| 82 |

-

[

|

| 83 |

|

| 84 |

### Training Procedure

|

| 85 |

|

| 86 |

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

|

| 87 |

|

| 88 |

-


| 89 |

|

| 90 |

-

|

| 91 |

|

|

|

|

| 92 |

|

| 93 |

#### Training Hyperparameters

|

| 94 |

|

| 95 |

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

|

| 96 |

|

|

|

|

|

|

|

| 97 |

#### Speeds, Sizes, Times [optional]

|

| 98 |

|

| 99 |

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

|

|

@@ -104,6 +163,8 @@ Use the code below to get started with the model.

|

|

| 104 |

|

| 105 |

<!-- This section describes the evaluation protocols and provides the results. -->

|

| 106 |

|

|

|

|

|

|

|

| 107 |

### Testing Data, Factors & Metrics

|

| 108 |

|

| 109 |

#### Testing Data

|

|

@@ -154,7 +215,7 @@ Carbon emissions can be estimated using the [Machine Learning Impact calculator]

|

|

| 154 |

|

| 155 |

### Model Architecture and Objective

|

| 156 |

|

| 157 |

-

[

|

| 158 |

|

| 159 |

### Compute Infrastructure

|

| 160 |

|

|

@@ -174,7 +235,16 @@ Carbon emissions can be estimated using the [Machine Learning Impact calculator]

|

|

| 174 |

|

| 175 |

**BibTeX:**

|

| 176 |

|

| 177 |

-


| 178 |

|

| 179 |

**APA:**

|

| 180 |

|

|

@@ -192,7 +262,7 @@ Carbon emissions can be estimated using the [Machine Learning Impact calculator]

|

|

| 192 |

|

| 193 |

## Model Card Authors [optional]

|

| 194 |

|

| 195 |

-

[

|

| 196 |

|

| 197 |

## Model Card Contact

|

| 198 |

|

|

|

|

| 1 |

---

|

| 2 |

library_name: transformers

|

| 3 |

+

license: apache-2.0

|

| 4 |

+

language:

|

| 5 |

+

- en

|

| 6 |

+

pipeline_tag: object-detection

|

| 7 |

---

|

| 8 |

|

| 9 |

# Model Card for Model ID

|

|

|

|

| 16 |

|

| 17 |

### Model Description

|

| 18 |

|

| 19 |

+

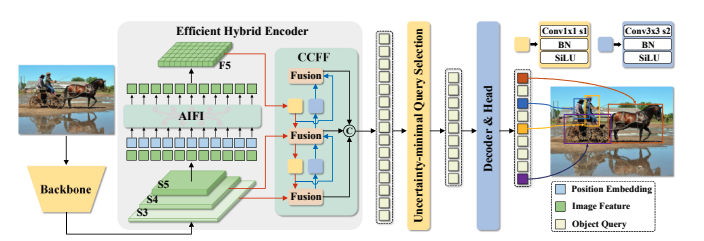

The YOLO series has become the most popular framework for real-time object detection due to its reasonable trade-off between speed and accuracy.

|

| 20 |

+

However, we observe that the speed and accuracy of YOLOs are negatively affected by the NMS.

|

| 21 |

+

Recently, end-to-end Transformer-based detectors (DETRs) have provided an alternative to eliminating NMS.

|

| 22 |

+

Nevertheless, the high computational cost limits their practicality and hinders them from fully exploiting the advantage of excluding NMS.

|

| 23 |

+

In this paper, we propose the Real-Time DEtection TRansformer (RT-DETR), the first real-time end-to-end object detector to our best knowledge that addresses the above dilemma.

|

| 24 |

+

We build RT-DETR in two steps, drawing on the advanced DETR:

|

| 25 |

+

first we focus on maintaining accuracy while improving speed, followed by maintaining speed while improving accuracy.

|

| 26 |

+

Specifically, we design an efficient hybrid encoder to expeditiously process multi-scale features by decoupling intra-scale interaction and cross-scale fusion to improve speed.

|

| 27 |

+

Then, we propose the uncertainty-minimal query selection to provide high-quality initial queries to the decoder, thereby improving accuracy.

|

| 28 |

+

In addition, RT-DETR supports flexible speed tuning by adjusting the number of decoder layers to adapt to various scenarios without retraining.

|

| 29 |

+

Our RT-DETR-R50 / R101 achieves 53.1% / 54.3% AP on COCO and 108 / 74 FPS on T4 GPU, outperforming previously advanced YOLOs in both speed and accuracy.

|

| 30 |

+

We also develop scaled RT-DETRs that outperform the lighter YOLO detectors (S and M models).

|

| 31 |

+

Furthermore, RT-DETR-R50 outperforms DINO-R50 by 2.2% AP in accuracy and about 21 times in FPS.

|

| 32 |

+

After pre-training with Objects365, RT-DETR-R50 / R101 achieves 55.3% / 56.2% AP. The project page: this [https URL](https://zhao-yian.github.io/RTDETR/).
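
For readers unfamiliar with NMS, the post-processing step that the description above says RT-DETR removes, the following is a minimal pure-Python sketch of greedy NMS. It is illustrative only and is not taken from the RT-DETR or YOLO code bases; the function names `iou` and `greedy_nms`, the toy boxes, and the 0.5 IoU threshold are assumptions made for this example.

```python
from typing import List, Tuple

# Boxes are assumed to be (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes: List[Box], scores: List[float],
               iou_threshold: float = 0.5) -> List[int]:
    """Return indices of the boxes kept by greedy NMS, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with any kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


# Toy example: the second box overlaps the first heavily and is suppressed.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
kept = greedy_nms(boxes, scores, iou_threshold=0.5)
print(kept)  # [0, 2]
```

The result depends on the hand-chosen `iou_threshold`, and the loop is an extra sequential step after the network's forward pass. These are the costs that an end-to-end detector avoids by predicting a final set of boxes directly.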

|

| 33 |

+

|

| 34 |

+

|

| 35 |

|

| 36 |

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

|

| 37 |

|

|

|

|

| 47 |

|

| 48 |

<!-- Provide the basic links for the model. -->

|

| 49 |

|

| 50 |

+

- **Repository:** https://github.com/lyuwenyu/RT-DETR

|

| 51 |

+

- **Paper [optional]:** https://arxiv.org/abs/2304.08069

|

| 52 |

- **Demo [optional]:** [More Information Needed]

|

| 53 |

|

| 54 |

## Uses

|

|

|

|

| 59 |

|

| 60 |

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

|

| 61 |

|

| 62 |

+

You can use the raw model for object detection. See the [model hub](https://huggingface.co/models?search=rtdetr) for all available RT-DETR models.

|

| 63 |

|

| 64 |

### Downstream Use [optional]

|

| 65 |

|

|

|

|

| 89 |

|

| 90 |

Use the code below to get started with the model.

|

| 91 |

|

| 92 |

```
import torch
import requests

from PIL import Image
from transformers import RTDetrForObjectDetection, RTDetrImageProcessor

# Download a sample image from the COCO val2017 set.
url = 'http://images.cocodataset.org/val2017/000000039769.jpg'
image = Image.open(requests.get(url, stream=True).raw)

# Load the image processor and the model from the Hub.
image_processor = RTDetrImageProcessor.from_pretrained("PekingU/rtdetr_r50vd")
model = RTDetrForObjectDetection.from_pretrained("PekingU/rtdetr_r50vd")

inputs = image_processor(images=image, return_tensors="pt")

# Run inference without tracking gradients.
with torch.no_grad():
    outputs = model(**inputs)

# Convert raw outputs to boxes in the original image size; PIL's size is (width, height), so reverse it.
results = image_processor.post_process_object_detection(outputs, target_sizes=torch.tensor([image.size[::-1]]), threshold=0.3)

for result in results:
    for score, label_id, box in zip(result["scores"], result["labels"], result["boxes"]):
        score, label = score.item(), label_id.item()
        box = [round(i, 2) for i in box.tolist()]
        print(f"{model.config.id2label[label]}: {score:.2f} {box}")
```

This should output:

```
sofa: 0.97 [0.14, 0.38, 640.13, 476.21]
cat: 0.96 [343.38, 24.28, 640.14, 371.5]
cat: 0.96 [13.23, 54.18, 318.98, 472.22]
remote: 0.95 [40.11, 73.44, 175.96, 118.48]
remote: 0.92 [333.73, 76.58, 369.97, 186.99]
```
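
The printed detections can be post-processed with plain Python. The sketch below copies the five detections from the sample output above into a literal list, so that it runs without downloading the model. It assumes the boxes are in `(x_min, y_min, x_max, y_max)` pixel coordinates; the helper names `filter_by_score` and `box_size` and the 0.95 cut-off are hypothetical and chosen only for illustration.

```python
from collections import Counter

# Detections copied from the sample output: (label, score, box).
# Boxes are assumed to be [x_min, y_min, x_max, y_max] in pixels.
detections = [
    ("sofa", 0.97, [0.14, 0.38, 640.13, 476.21]),
    ("cat", 0.96, [343.38, 24.28, 640.14, 371.5]),
    ("cat", 0.96, [13.23, 54.18, 318.98, 472.22]),
    ("remote", 0.95, [40.11, 73.44, 175.96, 118.48]),
    ("remote", 0.92, [333.73, 76.58, 369.97, 186.99]),
]


def filter_by_score(dets, min_score):
    """Keep only detections whose score is at least min_score."""
    return [d for d in dets if d[1] >= min_score]


def box_size(box):
    """Return (width, height) of an [x_min, y_min, x_max, y_max] box."""
    x_min, y_min, x_max, y_max = box
    return x_max - x_min, y_max - y_min


# A stricter cut-off than the threshold=0.3 used in the snippet above.
confident = filter_by_score(detections, min_score=0.95)
counts = Counter(label for label, _, _ in confident)

for label, score, box in confident:
    width, height = box_size(box)
    print(f"{label}: score={score:.2f}, size={width:.1f}x{height:.1f} px")
print(dict(counts))
```

Raising the cut-off trades recall for precision: here it drops the lower-scoring `remote` detection while keeping the other four.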

|

| 126 |

|

| 127 |

## Training Details

|

| 128 |

|

|

|

|

| 130 |

|

| 131 |

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

|

| 132 |

|

| 133 |

+

The RT-DETR model was trained on [COCO 2017 object detection](https://cocodataset.org/#download), a dataset consisting of 118k annotated images for training and 5k for validation.

|

| 134 |

|

| 135 |

### Training Procedure

|

| 136 |

|

| 137 |

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

|

| 138 |

|

| 139 |

We conduct experiments on COCO [20] and Objects365 [35], where RT-DETR is trained on COCO train2017 and validated on the COCO val2017 dataset. We report the standard COCO metrics, including AP (averaged over uniformly sampled IoU thresholds ranging from 0.50 to 0.95 with a step size of 0.05), AP50, AP75, as well as AP at different scales: APS, APM, APL.
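
As a rough illustration of the metric definitions above, the sketch below builds the IoU threshold grid used for AP and assigns a box to the small, medium, or large bucket behind APS, APM and APL. It is a simplified sketch rather than the official COCO evaluation code: the function names are hypothetical, and the buckets use the bounding-box area as a stand-in for the annotation area, with the usual COCO boundaries of 32² and 96² pixels.

```python
def coco_iou_thresholds():
    """IoU thresholds 0.50, 0.55, ..., 0.95 (ten values).

    Built from integers to avoid floating-point accumulation error.
    """
    return [t / 100 for t in range(50, 100, 5)]


def size_bucket(box):
    """Classify an (x_min, y_min, x_max, y_max) box as small/medium/large.

    Assumption: box area in pixels stands in for the COCO annotation area.
    """
    x_min, y_min, x_max, y_max = box
    area = (x_max - x_min) * (y_max - y_min)
    if area < 32 ** 2:
        return "small"
    if area < 96 ** 2:
        return "medium"
    return "large"


def mean_over_thresholds(ap_per_threshold):
    """AP is the mean of the per-threshold AP values."""
    return sum(ap_per_threshold) / len(ap_per_threshold)


thresholds = coco_iou_thresholds()
print(thresholds[0], thresholds[5], thresholds[-1])  # 0.5 0.75 0.95
print(size_bucket((0, 0, 20, 20)), size_bucket((0, 0, 100, 100)))  # small large
```

In this grid, AP50 and AP75 correspond to the single thresholds at indices 0 and 5, while the headline AP averages over all ten.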

|

| 145 |

|

| 146 |

+

#### Preprocessing [optional]

|

| 147 |

|

| 148 |

+

Images are resized to 640x640 pixels and their pixel values are rescaled.

|

| 149 |

|

| 150 |

#### Training Hyperparameters

|

| 151 |

|

| 152 |

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

|

| 153 |

|

| 154 |

+

|

| 155 |

+

|

| 156 |

#### Speeds, Sizes, Times [optional]

|

| 157 |

|

| 158 |

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

|

|

|

|

| 163 |

|

| 164 |

<!-- This section describes the evaluation protocols and provides the results. -->

|

| 165 |

|

| 166 |

+

This model achieves an AP (average precision) of 53.1 on COCO 2017 validation. For more details on the evaluation results, see Table 2 of the original paper.

|

| 167 |

+

|

| 168 |

### Testing Data, Factors & Metrics

|

| 169 |

|

| 170 |

#### Testing Data

|

|

|

|

| 215 |

|

| 216 |

### Model Architecture and Objective

|

| 217 |

|

| 218 |

+

|

| 219 |

|

| 220 |

### Compute Infrastructure

|

| 221 |

|

|

|

|

| 235 |

|

| 236 |

**BibTeX:**

|

| 237 |

|

| 238 |

```bibtex
@misc{lv2023detrs,
      title={DETRs Beat YOLOs on Real-time Object Detection},
      author={Yian Zhao and Wenyu Lv and Shangliang Xu and Jinman Wei and Guanzhong Wang and Qingqing Dang and Yi Liu and Jie Chen},
      year={2023},
      eprint={2304.08069},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
}
```

|

| 248 |

|

| 249 |

**APA:**

|

| 250 |

|

|

|

|

| 262 |

|

| 263 |

## Model Card Authors [optional]

|

| 264 |

|

| 265 |

+

[Sangbum Choi](https://huggingface.co/danelcsb)

|

| 266 |

|

| 267 |

## Model Card Contact

|

| 268 |

|