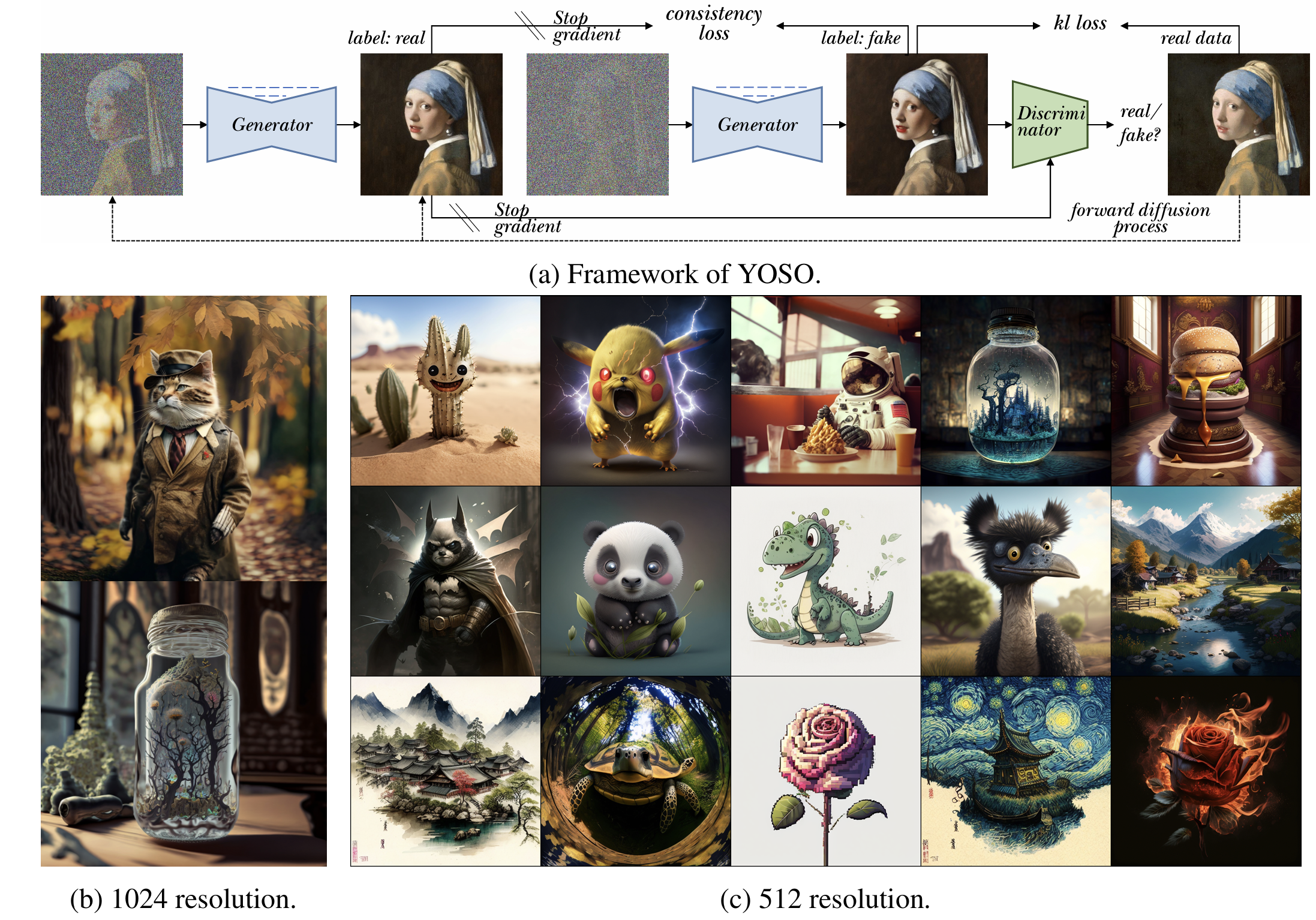

You Only Sample Once (YOSO)

YOSO was proposed in "You Only Sample Once: Taming One-Step Text-to-Image Synthesis by Self-Cooperative Diffusion GANs" by Yihong Luo, Xiaolong Chen, Xinghua Qu, and Jing Tang.

Official repository of this paper: YOSO.

This model is fine-tuned from PixArt-XL-2-512x512 to perform text-to-image generation in a single inference step.

We want to highlight that YOSO-PixArt was originally trained at 512×512 resolution. However, we found that a YOSO model capable of generating 1024×1024 samples can be constructed by merging it with PixArt-XL-2-1024-MS (Section 6.3.1 of the paper). The strong performance of this merged model indicates that YOSO generalizes robustly.
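The card does not spell out how the merge is performed. One common way to merge checkpoints is task arithmetic: add the weight change produced by fine-tuning at 512 resolution (YOSO-512 minus PixArt-512) onto the 1024 base model. The sketch below is a hypothetical illustration of that generic technique, not necessarily the procedure in Section 6.3.1; the function name `merge_task_vector` and the `alpha` scale are our own. It assumes shared keys have matching shapes and keeps any parameters that exist only in the 1024 model unchanged.

```python
from typing import Any, Dict, Mapping


def merge_task_vector(
    base_hi: Mapping[str, Any],
    base_lo: Mapping[str, Any],
    tuned_lo: Mapping[str, Any],
    alpha: float = 1.0,
) -> Dict[str, Any]:
    """Hypothetical merge: base_hi + alpha * (tuned_lo - base_lo), key by key.

    Works with any values supporting + and - (floats, torch tensors, ...).
    Keys missing from either low-resolution checkpoint are copied from
    base_hi as-is.
    """
    merged: Dict[str, Any] = {}
    for name, w_hi in base_hi.items():
        if name in base_lo and name in tuned_lo:
            # Apply the fine-tuning delta learned at low resolution.
            merged[name] = w_hi + alpha * (tuned_lo[name] - base_lo[name])
        else:
            # Parameter only exists in the high-resolution base model.
            merged[name] = w_hi
    return merged
```

With PyTorch modules, one would presumably pass each model's `state_dict()` and load the result back with `load_state_dict`; this usage is likewise an assumption rather than the authors' documented workflow.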

Usage

```python
import torch
from diffusers import PixArtAlphaPipeline, LCMScheduler, Transformer2DModel

# Load the YOSO transformer constructed for 1024x1024 generation.
transformer = Transformer2DModel.from_pretrained(
    "Luo-Yihong/yoso_pixart1024", torch_dtype=torch.float16
)

# Reuse the PixArt-alpha text encoder, tokenizer and VAE,
# swapping in the YOSO transformer.
pipe = PixArtAlphaPipeline.from_pretrained(
    "PixArt-alpha/PixArt-XL-2-512x512",
    transformer=transformer,
    torch_dtype=torch.float16,
    use_safetensors=True,
)
pipe = pipe.to("cuda")

# One-step sampling uses the LCM scheduler with v-prediction.
pipe.scheduler = LCMScheduler.from_config(
    pipe.scheduler.config, prediction_type="v_prediction"
)

generator = torch.manual_seed(318)
imgs = pipe(
    prompt="Pirate ship trapped in a cosmic maelstrom nebula, rendered in cosmic beach whirlpool engine, volumetric lighting, spectacular, ambient lights, light pollution, cinematic atmosphere, art nouveau style, illustration art artwork by SenseiJaye, intricate detail.",
    num_inference_steps=1,  # a single sampling step
    num_images_per_prompt=1,
    generator=generator,
    guidance_scale=1.0,  # 1.0 disables classifier-free guidance
).images

imgs[0]  # displays in a notebook; in a script use imgs[0].save("yoso.png")
```
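The snippet switches the LCM scheduler to v-prediction. The scalar sketch below illustrates, under simplifying assumptions, what a single v-prediction step computes: the clean sample is recovered as x0 = sqrt(ᾱ_t)·x_t − sqrt(1 − ᾱ_t)·v, then combined with x_t through the consistency-model boundary scalings c_skip and c_out. The constants (sigma_data = 0.5, timestep_scaling = 10) are our reading of the defaults in diffusers' `LCMScheduler`, and the function names are hypothetical; in the real pipeline these operations act element-wise on latent tensors.

```python
import math


def x0_from_v(x_t: float, v: float, alpha_bar: float) -> float:
    """Recover the clean sample from a v-prediction."""
    return math.sqrt(alpha_bar) * x_t - math.sqrt(1.0 - alpha_bar) * v


def lcm_boundary_scalings(t: int, timestep_scaling: float = 10.0,
                          sigma_data: float = 0.5):
    """Consistency-model boundary scalings (assumed LCMScheduler defaults)."""
    s = t * timestep_scaling
    c_skip = sigma_data ** 2 / (s ** 2 + sigma_data ** 2)
    c_out = s / math.sqrt(s ** 2 + sigma_data ** 2)
    return c_skip, c_out


def one_step_denoise(x_t: float, v: float, alpha_bar: float, t: int) -> float:
    """Hypothetical single-step output: c_out * x0_pred + c_skip * x_t."""
    x0 = x0_from_v(x_t, v, alpha_bar)
    c_skip, c_out = lcm_boundary_scalings(t)
    return c_out * x0 + c_skip * x_t
```

At a large timestep such as t = 999, c_skip is nearly 0 and c_out nearly 1, so the one-step output is essentially the model's x0 prediction.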

BibTeX

```bibtex
@misc{luo2024sample,
  title={You Only Sample Once: Taming One-Step Text-to-Image Synthesis by Self-Cooperative Diffusion GANs},
  author={Yihong Luo and Xiaolong Chen and Xinghua Qu and Jing Tang},
  year={2024},
  eprint={2403.12931},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}
```
