---
license: bigscience-openrail-m
metrics:
- code_eval
library_name: transformers
tags:
- code
---

MoTCoder: Elevating Large Language Models with Modular Thought for Challenging Programming Tasks

This is the official model repository of MoTCoder: Elevating Large Language Models with Modular Thought for Challenging Programming Tasks.

Performance

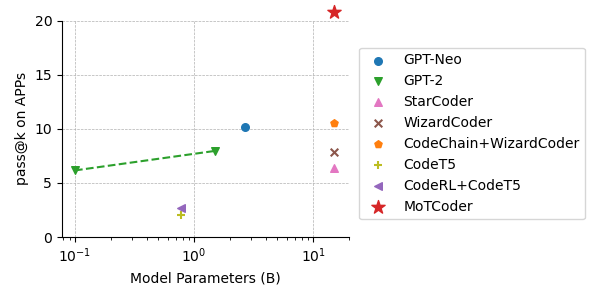

Performance on APPS

| Model | Size | Pass@k | Introductory | Interview | Competition | All |
|---|---|---|---|---|---|---|
| GPT-Neo | 2.7B | 1 | 3.90 | 0.57 | 0.00 | 1.12 |
| GPT-Neo | 2.7B | 5 | 5.50 | 0.80 | 0.00 | 1.58 |
| Codex | 12B | 1 | 4.14 | 0.14 | 0.02 | 0.92 |
| Codex | 12B | 5 | 9.65 | 0.51 | 0.09 | 2.25 |
| Codex | 12B | 1000 | 25.02 | 3.70 | 3.23 | 7.87 |
| AlphaCode | 1B | 1000 | 17.67 | 5.24 | 7.06 | 8.09 |
| AlphaCode (Filtered 1k) | 1B | 5 | 14.36 | 5.63 | 4.58 | 7.17 |
| AlphaCode (Filtered 10k) | 1B | 5 | 18.18 | 8.21 | 6.65 | 9.89 |
| AlphaCode (Filtered 50k) | 1B | 5 | 20.36 | 9.66 | 7.75 | 11.42 |
| StarCoder | 15B | 1 | 7.25 | 6.89 | 4.08 | 6.40 |
| WizardCoder | 15B | 1 | 26.04 | 4.21 | 0.81 | 7.90 |
| CodeLlama | 7B | 5 | 10.76 | 2.01 | 0.77 | 3.51 |
| CodeLlama | 7B | 10 | 15.59 | 3.12 | 1.41 | 5.27 |
| CodeLlama | 7B | 100 | 33.52 | 9.40 | 7.13 | 13.77 |
| CodeLlama | 13B | 5 | 23.74 | 5.63 | 2.05 | 8.54 |
| CodeLlama | 13B | 10 | 30.19 | 8.12 | 3.35 | 11.58 |
| CodeLlama | 13B | 100 | 48.99 | 18.40 | 11.98 | 23.23 |
| CodeLlama | 34B | 5 | 32.81 | 8.75 | 2.88 | 12.39 |
| CodeLlama | 34B | 10 | 38.97 | 12.16 | 4.69 | 16.03 |
| CodeLlama | 34B | 100 | 56.32 | 24.31 | 15.39 | 28.93 |
| CodeLlama-Python | 7B | 5 | 12.72 | 4.18 | 1.31 | 5.31 |
| CodeLlama-Python | 7B | 10 | 18.50 | 6.25 | 2.24 | 7.90 |
| CodeLlama-Python | 7B | 100 | 38.26 | 14.94 | 9.12 | 18.44 |
| CodeLlama-Python | 13B | 5 | 26.33 | 7.06 | 2.79 | 10.06 |
| CodeLlama-Python | 13B | 10 | 32.77 | 10.03 | 4.33 | 13.44 |
| CodeLlama-Python | 13B | 100 | 51.60 | 21.46 | 14.60 | 26.12 |
| CodeLlama-Python | 34B | 5 | 28.94 | 7.80 | 3.45 | 11.16 |
| CodeLlama-Python | 34B | 10 | 35.91 | 11.12 | 5.53 | 14.96 |
| CodeLlama-Python | 34B | 100 | 54.92 | 23.90 | 16.81 | 28.69 |
| CodeLlama-Instruct | 7B | 5 | 12.85 | 2.07 | 1.13 | 4.04 |
| CodeLlama-Instruct | 7B | 10 | 17.86 | 3.12 | 1.95 | 5.83 |
| CodeLlama-Instruct | 7B | 100 | 35.37 | 9.44 | 8.45 | 14.43 |
| CodeLlama-Instruct | 13B | 5 | 24.01 | 6.93 | 2.39 | 9.44 |
| CodeLlama-Instruct | 13B | 10 | 30.27 | 9.58 | 3.83 | 12.57 |
| CodeLlama-Instruct | 13B | 100 | 48.73 | 19.55 | 13.12 | 24.10 |
| CodeLlama-Instruct | 34B | 5 | 31.56 | 7.86 | 3.21 | 11.67 |
| CodeLlama-Instruct | 34B | 10 | 37.80 | 11.08 | 5.12 | 15.23 |
| CodeLlama-Instruct | 34B | 100 | 55.72 | 22.80 | 16.38 | 28.10 |
| CoTCode | 15B | 1 | 33.80 | 19.70 | 11.09 | 20.80 |
| code-davinci-002 | - | 1 | 29.30 | 6.40 | 2.50 | 10.20 |
| GPT3.5 | - | 1 | 48.00 | 19.42 | 5.42 | 22.33 |
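
The table reports pass@k, but this card does not say how the numbers were computed. As a rough illustration only, the sketch below uses the unbiased pass@k estimator that is common in code-generation evaluation, for a single problem. The function name `pass_at_k` and its arguments (`n` generated samples, `c` of them passing all tests) are assumptions made for this example, not part of this repository.

```python
import math


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k for one problem.

    n: number of generated samples (assumed name)
    c: number of samples that pass every test case (assumed name)
    k: budget of samples the metric is allowed to draw
    """
    if not (0 <= c <= n) or not (1 <= k <= n):
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    # If fewer than k samples are wrong, every draw of k contains a correct one.
    if n - c < k:
        return 1.0
    # 1 - P(all k drawn samples are wrong) = 1 - C(n - c, k) / C(n, k)
    return 1.0 - math.comb(n - c, k) / math.comb(n, k)


if __name__ == "__main__":
    # Hypothetical counts for one problem: 10 samples, 3 of them correct.
    print(pass_at_k(n=10, c=3, k=1))
    print(pass_at_k(n=10, c=3, k=5))
```

A benchmark-level score would then be the mean of this per-problem value over all problems in a difficulty split, reported as a percentage.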

Performance on CodeContests

| Model | Size | Revision | Val pass@1 | Val pass@5 | Test pass@1 | Test pass@5 | Average pass@1 | Average pass@5 |
|---|---|---|---|---|---|---|---|---|
| code-davinci-002 | - | - | - | - | 1.00 | - | 1.00 | - |
| code-davinci-002 + CodeT | - | 5 | - | - | 3.20 | - | 3.20 | - |
| WizardCoder | 15B | - | 1.11 | 3.18 | 1.98 | 3.27 | 1.55 | 3.23 |
| WizardCoder + CodeChain | 15B | 5 | 2.35 | 3.29 | 2.48 | 3.30 | 2.42 | 3.30 |
| CoTCode | 15B | - | 2.39 | 7.69 | 6.18 | 12.73 | 4.29 | 10.21 |
| GPT3.5 | - | - | 6.81 | 16.23 | 5.82 | 11.16 | 6.32 | 13.70 |