license: other
language:
- en

UPDATE: There's a Llama 2 sequel now! Check it out here!

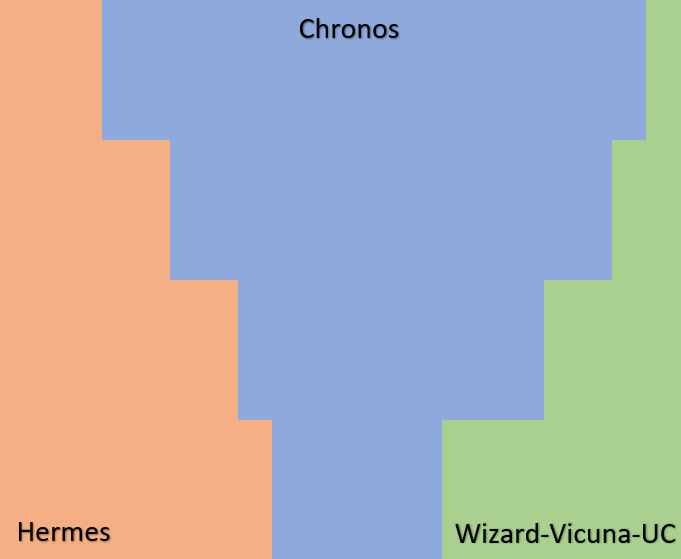

An experiment with gradient merges using the following script, with Chronos as its primary model, augmented by Hermes and Wizard-Vicuna Uncensored.

Quantized models are available from TheBloke: GGML - GPTQ (You're the best!)

Model details

Chronos is a wonderfully verbose model, though it definitely seems to lack in the logic department. Hermes and Wizard-Vicuna Uncensored have been merged in gradually, primarily in the higher layers (10+), in an attempt to rectify some of this behaviour.
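A gradient merge blends the models with a ratio that changes from layer to layer instead of using one global ratio. The sketch below illustrates the idea under loudly stated assumptions: plain linear interpolation per tensor, a hypothetical ramp that is 0 below layer 10 and rises to 0.5 at the last layer, and an even split of that share between Hermes and Wizard-Vicuna Uncensored. These numbers are placeholders, not the ratios actually used for MythoLogic, and the function names are hypothetical. Lists of floats stand in for real tensors; Llama 13B models have 40 decoder layers.

```python
import re
from typing import Dict, List, Optional

# Plain lists of floats stand in for real weight tensors so the sketch has no dependencies.
Tensor = List[float]
StateDict = Dict[str, Tensor]

# Matches Hugging Face Llama parameter names such as "model.layers.12.mlp.up_proj.weight".
LAYER_RE = re.compile(r"\.layers\.(\d+)\.")


def layer_index(name: str) -> Optional[int]:
    """Return the decoder-layer index encoded in a parameter name, or None."""
    match = LAYER_RE.search(name)
    return int(match.group(1)) if match else None


def secondary_share(layer: int, num_layers: int, start: int = 10, peak: float = 0.5) -> float:
    """Hypothetical gradient: 0.0 below `start`, rising linearly to `peak` at the last layer."""
    if layer < start:
        return 0.0
    return peak * (layer - start) / max(num_layers - 1 - start, 1)


def gradient_merge(primary: StateDict, secondaries: List[StateDict], num_layers: int) -> StateDict:
    """Blend each tensor as (1 - share) * primary + share * mean(secondaries)."""
    merged: StateDict = {}
    for name, base in primary.items():
        idx = layer_index(name)
        # Tensors outside the decoder layers (embeddings, final norm, lm_head) stay as the primary's.
        share = secondary_share(idx, num_layers) if idx is not None else 0.0
        weight = share / len(secondaries)  # the share is split evenly between the secondary models
        merged[name] = [
            (1.0 - share) * x + weight * sum(s[name][i] for s in secondaries)
            for i, x in enumerate(base)
        ]
    return merged


if __name__ == "__main__":
    # Toy one-element "tensors": the primary is all 1.0, both secondaries are all 0.0.
    keys = ["model.layers.0.mlp.up_proj.weight", "model.layers.39.mlp.up_proj.weight", "lm_head.weight"]
    chronos = {k: [1.0] for k in keys}
    hermes = {k: [0.0] for k in keys}
    wizard = {k: [0.0] for k in keys}
    print(gradient_merge(chronos, [hermes, wizard], num_layers=40))
```

With these toy inputs, layer 0 stays pure primary (1.0), layer 39 is an even mix of primary and secondaries (0.5), and `lm_head` is untouched. In practice the same loop would run over real tensors loaded from each checkpoint.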

The main objective was to create an all-round model with improved story generation and roleplaying capabilities.

Below is an illustration to showcase a rough approximation of the gradients I used to create MythoLogic:

Prompt Format

This model primarily uses Alpaca formatting, so for optimal performance, use:

<System prompt/Character Card>

### Instruction:
Your instruction or question here.
For roleplay purposes, I suggest the following: Write <CHAR NAME>'s next reply in a chat between <YOUR NAME> and <CHAR NAME>. Write a single reply only.

### Response:
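For scripted use, the template can be filled in programmatically. This is a minimal sketch: the helper names are hypothetical, and the exact whitespace (a blank line before each `###` header and a trailing newline after `### Response:`) is an assumption based on common Alpaca usage rather than something this card specifies.

```python
def roleplay_instruction(char_name: str, user_name: str) -> str:
    """The roleplay instruction suggested above, with its placeholders filled in."""
    return (
        f"Write {char_name}'s next reply in a chat between {user_name} and {char_name}. "
        "Write a single reply only."
    )


def build_alpaca_prompt(system: str, instruction: str) -> str:
    """Assemble an Alpaca-style prompt; the model's output should follow '### Response:'."""
    # Assumed spacing: blank line between sections, trailing newline after the response header.
    return f"{system}\n\n### Instruction:\n{instruction}\n\n### Response:\n"


if __name__ == "__main__":
    card = "Aria is a cheerful ship's navigator."  # hypothetical character card
    print(build_alpaca_prompt(card, roleplay_instruction("Aria", "Sam")))
```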

Open LLM Leaderboard Evaluation Results

Detailed results can be found here

| Metric | Value |
|---|---|
| Avg. | 47.23 |
| ARC (25-shot) | 58.45 |
| HellaSwag (10-shot) | 81.56 |
| MMLU (5-shot) | 49.36 |
| TruthfulQA (0-shot) | 49.47 |
| Winogrande (5-shot) | 75.61 |
| GSM8K (5-shot) | 8.64 |
| DROP (3-shot) | 7.53 |
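The "Avg." row is consistent with an unweighted mean of the seven benchmark scores; this quick check, using the values from the table, reproduces it.

```python
# Benchmark scores from the leaderboard table.
scores = {
    "ARC (25-shot)": 58.45,
    "HellaSwag (10-shot)": 81.56,
    "MMLU (5-shot)": 49.36,
    "TruthfulQA (0-shot)": 49.47,
    "Winogrande (5-shot)": 75.61,
    "GSM8K (5-shot)": 8.64,
    "DROP (3-shot)": 7.53,
}

# Unweighted mean: every benchmark counts equally.
average = sum(scores.values()) / len(scores)
print(f"{average:.2f}")  # → 47.23
```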