bge-small-en-v1.5-quant

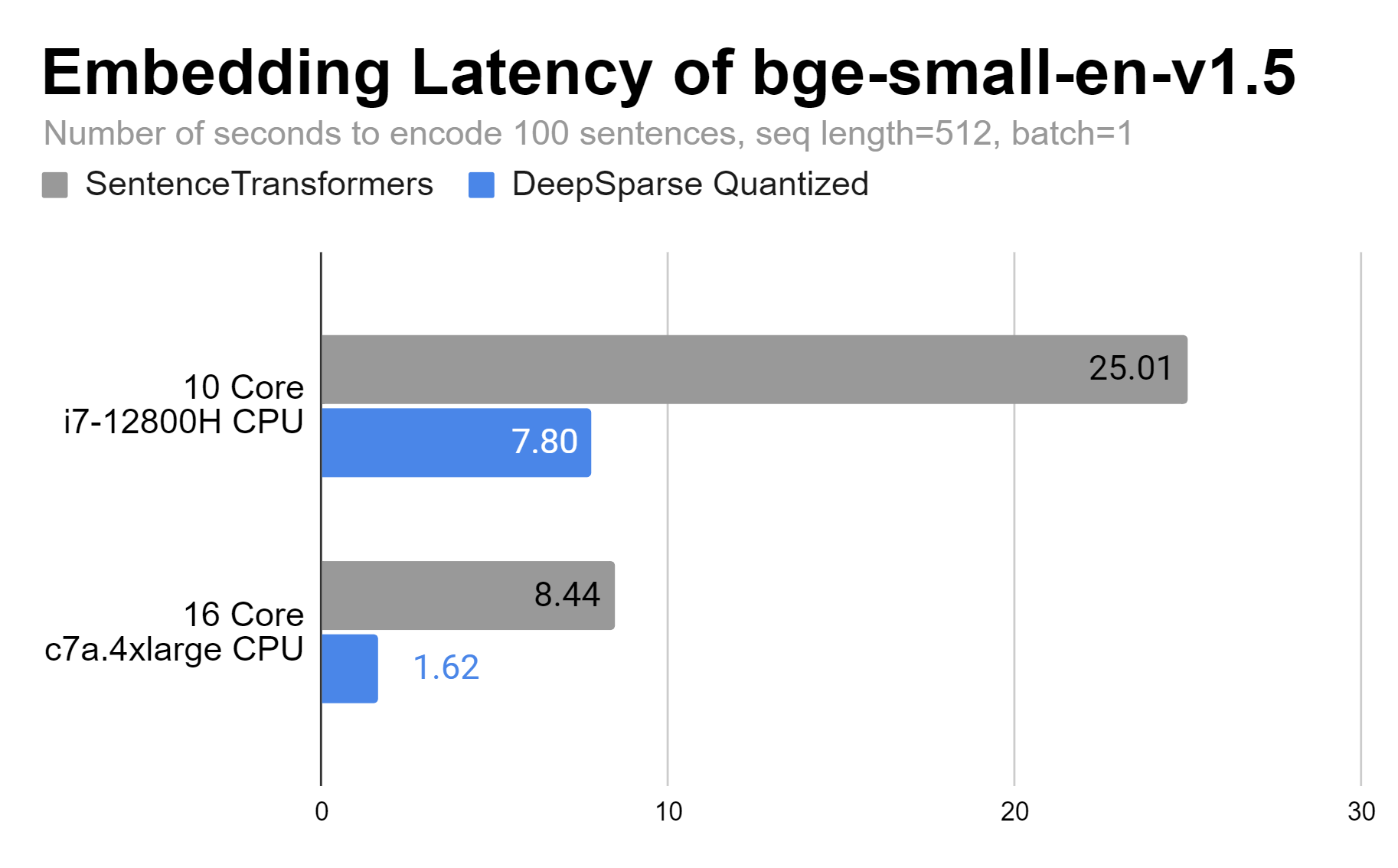

DeepSparse is able to improve latency performance on a 10 core laptop by 3X and up to 5X on a 16 core AWS instance.

Usage

This is the quantized (INT8) ONNX variant of the bge-small-en-v1.5 embeddings model accelerated with Sparsify for quantization and DeepSparseSentenceTransformers for inference.

pip install -U deepsparse-nightly[sentence_transformers]

from deepsparse.sentence_transformers import DeepSparseSentenceTransformer

model = DeepSparseSentenceTransformer('neuralmagic/bge-small-en-v1.5-quant', export=False)

# Our sentences we like to encode

sentences = ['This framework generates embeddings for each input sentence',

'Sentences are passed as a list of string.',

'The quick brown fox jumps over the lazy dog.']

# Sentences are encoded by calling model.encode()

embeddings = model.encode(sentences)

# Print the embeddings

for sentence, embedding in zip(sentences, embeddings):

print("Sentence:", sentence)

print("Embedding:", embedding.shape)

print("")

For general questions on these models and sparsification methods, reach out to the engineering team on our community Slack.

- Downloads last month

- 1,272

Inference Providers

NEW

This model is not currently available via any of the supported Inference Providers.

Spaces using neuralmagic/bge-small-en-v1.5-quant 4

Evaluation results

- accuracy on MTEB AmazonCounterfactualClassification (en)test set self-reported74.194

- ap on MTEB AmazonCounterfactualClassification (en)test set self-reported37.562

- f1 on MTEB AmazonCounterfactualClassification (en)test set self-reported68.470

- accuracy on MTEB AmazonPolarityClassificationtest set self-reported91.894

- ap on MTEB AmazonPolarityClassificationtest set self-reported88.646

- f1 on MTEB AmazonPolarityClassificationtest set self-reported91.872

- accuracy on MTEB AmazonReviewsClassification (en)test set self-reported46.718

- f1 on MTEB AmazonReviewsClassification (en)test set self-reported46.258

- map_at_1 on MTEB ArguAnatest set self-reported34.424

- map_at_10 on MTEB ArguAnatest set self-reported49.630