Update README.md


README.md


|

@@ -15,18 +15,17 @@ TF-ID (Table/Figure IDentifier) is a family of object detection models finetuned

|

|

| 15 |

| Model | Model size | Model Description |

|

| 16 |

| ------- | ------------- | ------------- |

|

| 17 |

| TF-ID-base[[HF]](https://huggingface.co/yifeihu/TF-ID-base) | 0.23B | Extract tables/figures and their caption text

|

| 18 |

-

| TF-ID-large[[HF]](https://huggingface.co/yifeihu/TF-ID-large) | 0.77B | Extract tables/figures and their caption text

|

| 19 |

| TF-ID-base-no-caption[[HF]](https://huggingface.co/yifeihu/TF-ID-base-no-caption) | 0.23B | Extract tables/figures without caption text

|

| 20 |

-

| TF-ID-large-no-caption[[HF]](https://huggingface.co/yifeihu/TF-ID-large-no-caption) | 0.77B | Extract tables/figures without caption text

|

| 21 |

All TF-ID models are finetuned from [microsoft/Florence-2](https://huggingface.co/microsoft/Florence-2-large-ft) checkpoints.

|

| 22 |

|

| 23 |

-

The models were finetuned with papers from Hugging Face Daily Papers. All bounding boxes are manually annotated and checked by humans.

|


| 24 |

|

| 25 |

-

|

| 26 |

-

|

| 27 |

-

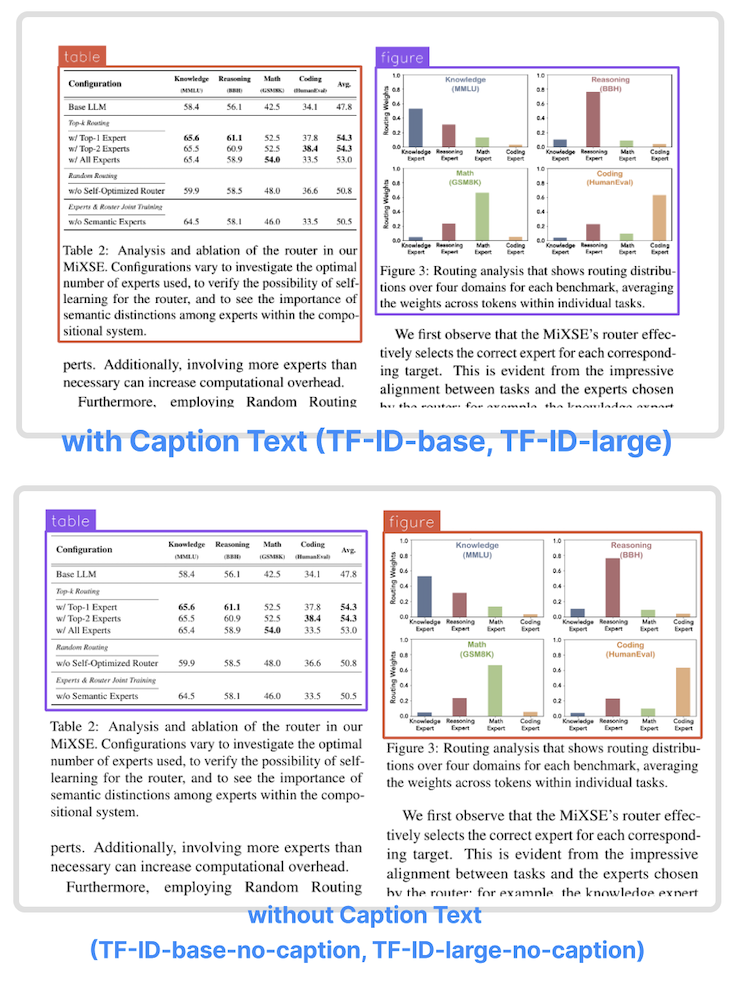

TF-ID-base and TF-ID-large draw bounding boxes around tables/figures and their caption text.

|

| 28 |

-

|

| 29 |

-

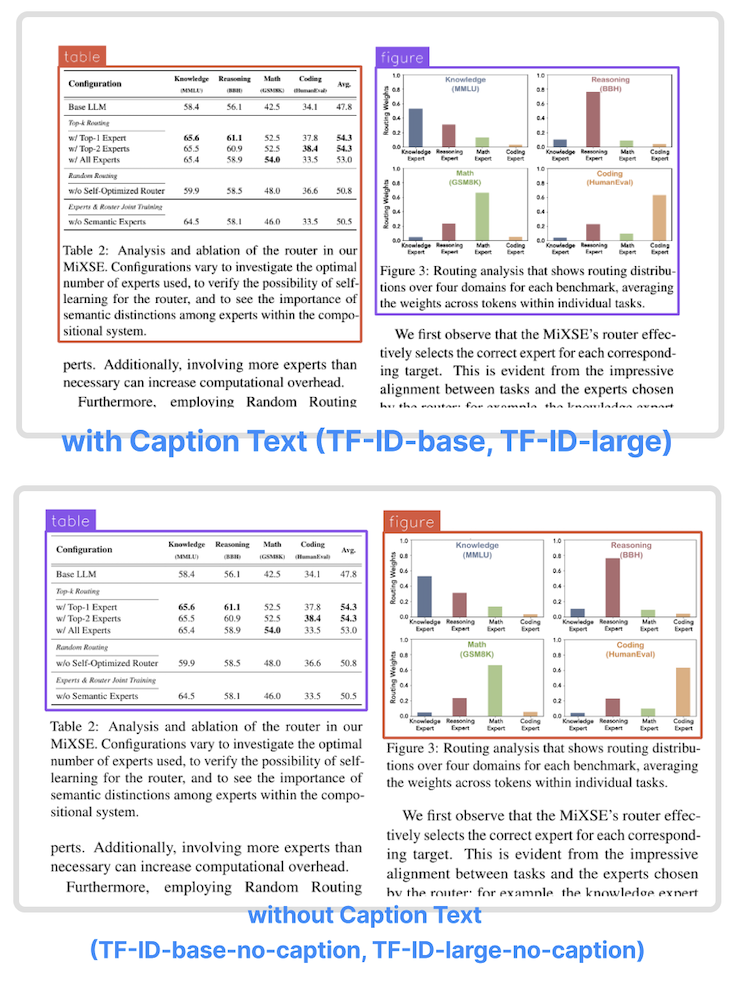

TF-ID-base-no-caption and TF-ID-large-no-caption draw bounding boxes around tables/figures without their caption text.

|

| 30 |

|

| 31 |

|

| 32 |

|

|

@@ -34,6 +33,10 @@ Object Detection results format:

|

|

| 34 |

{'\<OD>': {'bboxes': [[x1, y1, x2, y2], ...],

|

| 35 |

'labels': ['label1', 'label2', ...]} }
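The result format above can be turned into integer pixel boxes with a few lines of standard-library Python. The sketch below is only an illustration: the `Detection` class, the `to_detections` helper, the example coordinates, and the `"table"`/`"figure"` label strings are assumptions, not part of the TF-ID code. It assumes the dictionary key is the task prompt `<OD>` and that each box is `[x1, y1, x2, y2]` in pixels, as shown in the format.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Detection:
    """One detected region (hypothetical helper type, not part of TF-ID)."""
    label: str
    box: Tuple[int, int, int, int]  # (left, upper, right, lower) in pixels

    @property
    def area(self) -> int:
        left, upper, right, lower = self.box
        return (right - left) * (lower - upper)


def to_detections(parsed_answer: Dict, page_size: Tuple[int, int],
                  task: str = "<OD>") -> List[Detection]:
    """Round each box to whole pixels, clamp it to the page, drop empty boxes."""
    width, height = page_size
    result = parsed_answer[task]
    detections = []
    for (x1, y1, x2, y2), label in zip(result["bboxes"], result["labels"]):
        left = max(0, min(width, round(x1)))
        upper = max(0, min(height, round(y1)))
        right = max(0, min(width, round(x2)))
        lower = max(0, min(height, round(y2)))
        if right > left and lower > upper:
            detections.append(Detection(label, (left, upper, right, lower)))
    return detections


# Made-up result for an 800x1000 page; values and labels are illustrative only.
example = {"<OD>": {"bboxes": [[100.4, 200.0, 700.2, 450.9],
                               [-5.0, 600.0, 820.0, 900.0]],
                    "labels": ["table", "figure"]}}

detections = to_detections(example, page_size=(800, 1000))
for det in detections:
    print(det.label, det.box, det.area)
```

Each `box` tuple is ordered `(left, upper, right, lower)`, so it can be passed directly to Pillow's `Image.crop` to cut the table or figure out of the page image.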

|

| 36 |

|


| 37 |

## Benchmarks

|

| 38 |

|

| 39 |

We tested the models on paper pages outside the training dataset. The papers are a subset of Hugging Face Daily Papers.

|

|

@@ -59,18 +62,16 @@ Use the code below to get started with the model.

|

|

| 59 |

```python

|

| 60 |

import requests

|

| 61 |

from PIL import Image

|

| 62 |

-

from transformers import AutoProcessor, AutoModelForCausalLM

|

| 63 |

|

| 64 |

-

model = AutoModelForCausalLM.from_pretrained("yifeihu/TF-ID-base

|

| 65 |

-

processor = AutoProcessor.from_pretrained("yifeihu/TF-ID-base

|

| 66 |

|

| 67 |

prompt = "<OD>"

|

| 68 |

-

|

| 69 |

url = "https://huggingface.co/yifeihu/TF-ID-base/resolve/main/arxiv_2305_10853_5.png?download=true"

|

| 70 |

image = Image.open(requests.get(url, stream=True).raw)

|

| 71 |

|

| 72 |

inputs = processor(text=prompt, images=image, return_tensors="pt")

|

| 73 |

-

|

| 74 |

generated_ids = model.generate(

|

| 75 |

input_ids=inputs["input_ids"],

|

| 76 |

pixel_values=inputs["pixel_values"],

|

|

@@ -78,8 +79,8 @@ generated_ids = model.generate(

|

|

| 78 |

do_sample=False,

|

| 79 |

num_beams=3

|

| 80 |

)

|

| 81 |

-

generated_text = processor.batch_decode(generated_ids, skip_special_tokens=False)[0]

|

| 82 |

|

|

|

|

| 83 |

parsed_answer = processor.post_process_generation(generated_text, task="<OD>", image_size=(image.width, image.height))

|

| 84 |

|

| 85 |

print(parsed_answer)

|

|

@@ -87,16 +88,15 @@ print(parsed_answer)

|

|

| 87 |

|

| 88 |

To visualize the results, see [this tutorial notebook](https://colab.research.google.com/github/roboflow-ai/notebooks/blob/main/notebooks/how-to-finetune-florence-2-on-detection-dataset.ipynb).

|

| 89 |

|

| 90 |

-

## Finetuning Code and Dataset

|

| 91 |

-

|

| 92 |

-

Coming soon!

|

| 93 |

-

|

| 94 |

## BibTeX and citation info

|

| 95 |

|

| 96 |

```

|

| 97 |

-

@misc{TF-ID,

|

| 98 |

-

|

| 99 |

-

|

| 100 |

-

|


| 101 |

}

|

| 102 |

```

|

|

|

|

| 15 |

| Model | Model size | Model Description |

|

| 16 |

| ------- | ------------- | ------------- |

|

| 17 |

| TF-ID-base[[HF]](https://huggingface.co/yifeihu/TF-ID-base) | 0.23B | Extract tables/figures and their caption text

|

| 18 |

+

| TF-ID-large[[HF]](https://huggingface.co/yifeihu/TF-ID-large) (Recommended) | 0.77B | Extract tables/figures and their caption text

|

| 19 |

| TF-ID-base-no-caption[[HF]](https://huggingface.co/yifeihu/TF-ID-base-no-caption) | 0.23B | Extract tables/figures without caption text

|

| 20 |

+

| TF-ID-large-no-caption[[HF]](https://huggingface.co/yifeihu/TF-ID-large-no-caption) (Recommended) | 0.77B | Extract tables/figures without caption text

|

| 21 |

All TF-ID models are finetuned from [microsoft/Florence-2](https://huggingface.co/microsoft/Florence-2-large-ft) checkpoints.

|

| 22 |

|

| 23 |

+

- The models were finetuned with papers from Hugging Face Daily Papers. All bounding boxes are manually annotated and checked by humans.

|

| 24 |

+

- TF-ID models take an image of a single paper page as input and return bounding boxes for all tables and figures on that page.

|

| 25 |

+

- TF-ID-base and TF-ID-large draw bounding boxes around tables/figures and their caption text.

|

| 26 |

+

- TF-ID-base-no-caption and TF-ID-large-no-caption draw bounding boxes around tables/figures without their caption text.

|

| 27 |

|

| 28 |

+

**Large models are always recommended!**

|


| 29 |

|

| 30 |

|

| 31 |

|

|

|

|

| 33 |

{'\<OD>': {'bboxes': [[x1, y1, x2, y2], ...],

|

| 34 |

'labels': ['label1', 'label2', ...]} }

|

| 35 |

|

| 36 |

+

## Training Code and Dataset

|

| 37 |

+

- Dataset: [yifeihu/TF-ID-arxiv-papers](https://huggingface.co/datasets/yifeihu/TF-ID-arxiv-papers)

|

| 38 |

+

- Code: [github.com/ai8hyf/TF-ID](https://github.com/ai8hyf/TF-ID)

|

| 39 |

+

|

| 40 |

## Benchmarks

|

| 41 |

|

| 42 |

We tested the models on paper pages outside the training dataset. The papers are a subset of Hugging Face Daily Papers.

|

|

|

|

| 62 |

```python

|

| 63 |

import requests

|

| 64 |

from PIL import Image

|

| 65 |

+

from transformers import AutoProcessor, AutoModelForCausalLM

|

| 66 |

|

| 67 |

+

model = AutoModelForCausalLM.from_pretrained("yifeihu/TF-ID-base", trust_remote_code=True)

|

| 68 |

+

processor = AutoProcessor.from_pretrained("yifeihu/TF-ID-base", trust_remote_code=True)

|

| 69 |

|

| 70 |

prompt = "<OD>"

|

|

|

|

| 71 |

url = "https://huggingface.co/yifeihu/TF-ID-base/resolve/main/arxiv_2305_10853_5.png?download=true"

|

| 72 |

image = Image.open(requests.get(url, stream=True).raw)

|

| 73 |

|

| 74 |

inputs = processor(text=prompt, images=image, return_tensors="pt")

|

|

|

|

| 75 |

generated_ids = model.generate(

|

| 76 |

input_ids=inputs["input_ids"],

|

| 77 |

pixel_values=inputs["pixel_values"],

|

|

|

|

| 79 |

do_sample=False,

|

| 80 |

num_beams=3

|

| 81 |

)

|

|

|

|

| 82 |

|

| 83 |

+

generated_text = processor.batch_decode(generated_ids, skip_special_tokens=False)[0]

|

| 84 |

parsed_answer = processor.post_process_generation(generated_text, task="<OD>", image_size=(image.width, image.height))

|

| 85 |

|

| 86 |

print(parsed_answer)

|

|

|

|

| 88 |

|

| 89 |

To visualize the results, see [this tutorial notebook](https://colab.research.google.com/github/roboflow-ai/notebooks/blob/main/notebooks/how-to-finetune-florence-2-on-detection-dataset.ipynb).

|

| 90 |

|


| 91 |

## BibTeX and citation info

|

| 92 |

|

| 93 |

```

|

| 94 |

+

@misc{TF-ID,

|

| 95 |

+

author = {Yifei Hu},

|

| 96 |

+

title = {TF-ID: Table/Figure IDentifier for academic papers},

|

| 97 |

+

year = {2024},

|

| 98 |

+

publisher = {GitHub},

|

| 99 |

+

journal = {GitHub repository},

|

| 100 |

+

howpublished = {\url{https://github.com/ai8hyf/TF-ID}},

|

| 101 |

}

|

| 102 |

```

|