File size: 23,466 Bytes

b8cd1aa f47036f 42275e7 f47036f 5295346 1b22070 5295346 f7c57c4 b8cd1aa c6cd2eb 69370ac bdba148 69370ac bdba148 8a6f3d5 fe3713e b8cd1aa 08ac5e2 bdba148 7ade8ec b8cd1aa 6270a7a 69370ac 6270a7a 69370ac 6270a7a f7c57c4 e8d1c96 b8cd1aa 69370ac 08ac5e2 b192b15 08ac5e2 b192b15 08ac5e2 42b2aa7 b8cd1aa 69370ac b8cd1aa 69370ac e12ab8c b8cd1aa 42b2aa7 bdba148 69370ac b8cd1aa f7c57c4 b8cd1aa 5abd0fc 42b2aa7 b8cd1aa e12ab8c b8cd1aa a7aa062 8565c27 0d2996b a7aa062 5abd0fc a7aa062 391d5eb 69370ac 391d5eb 2522575 85009f1 628a769 a7aa062 a854db5 a7aa062 0d2996b 69370ac a7aa062 69370ac 62ab1ca 69370ac 62ab1ca 89006ce 62ab1ca 5abd0fc a34b084 89006ce 69370ac 62ab1ca 69370ac 62ab1ca a34b084 8565c27 6d1e759 b8cd1aa e12ab8c 527987f e12ab8c 527987f 69370ac e12ab8c 527987f 69370ac f7c57c4 527987f f7c57c4 527987f f7c57c4 69370ac f7c57c4 08ac5e2 69370ac 08ac5e2 f7c57c4 527987f fe3713e 527987f b8cd1aa 527987f b8cd1aa |

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157 158 159 160 161 162 163 164 165 166 167 168 169 170 171 172 173 174 175 176 177 178 179 180 181 182 183 184 185 186 187 188 189 190 191 192 193 194 195 196 197 198 199 200 201 202 203 204 205 206 207 208 209 210 211 212 213 214 215 216 217 218 219 220 221 222 223 224 225 226 227 228 229 230 231 232 233 234 235 236 237 238 239 240 241 242 243 244 245 246 247 248 249 250 251 252 253 254 255 256 257 258 259 260 261 262 263 264 265 266 267 268 269 270 271 272 273 274 275 276 277 278 279 280 281 282 283 284 285 286 287 288 289 290 291 292 293 294 295 296 297 298 299 300 301 302 303 304 305 306 307 308 309 310 311 312 313 314 315 316 317 318 319 320 321 322 323 324 325 326 327 328 329 330 331 332 333 334 335 336 337 338 339 340 341 342 343 344 345 346 347 348 349 350 351 |

---

language: en

tags:

- Clsssification

license: apache-2.0

datasets:

- tensorflow

- numpy

- keras

- pandas

- openpyxl

- gensin

- contractions

- nltk

- spacy

thumbnail: https://github.com/Marcosdib/S2Query/Classification_Architecture_model.png

---

# MCTI Text Classification Task (uncased)

Disclaimer: The Brazilian Ministry of Science, Technology, and Innovation (MCTI) has partially supported this project.

The model [NLP MCTI Classification Multi](https://huggingface.co/spaces/unb-lamfo-nlp-mcti/NLP-W2V-CNN-Multi) is part of the project [Research Financing Product Portfolio (FPP)](https://huggingface.co/unb-lamfo-nlp-mcti) and focuses

on the Text Classification task, exploring different machine learning strategies to classify a small amount

of long, unstructured, and uneven data to find a proper method with good performance. Pre-training and word embedding

solutions were used to learn word relationships from other datasets with considerable similarity and a larger scale.

Then, using the acquired resources, based on the dataset available in the MCTI, transfer learning plus deep learning

models were applied to improve the understanding of each sentence.

## According to the abstract,

"The research results serve as a successful case of artificial intelligence in a federal government application".

More details about the project, architecture model, training model and classifications process can be found in the article

["Using transfer learning to classify long unstructured texts with small amounts of labeled data"](https://www.scitepress.org/Link.aspx?doi=10.5220/0011527700003318).

## Model description

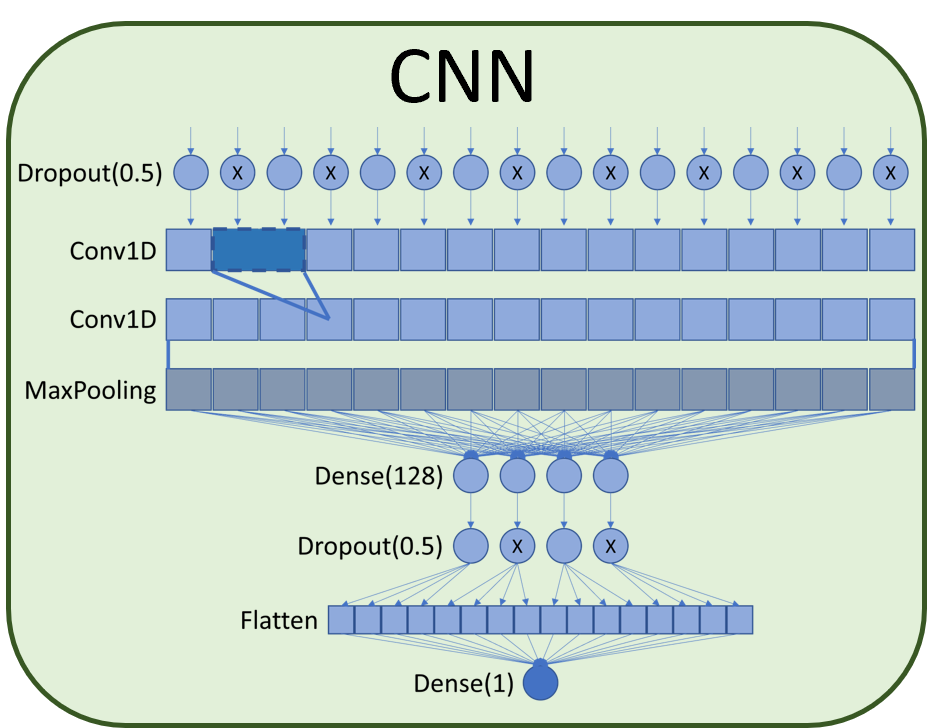

The work consists of a machine learning model with word embedding and Convolutional Neural Network (CNN).

For the project, a Convolutional Neural Network (CNN) was chosen, as it presents better accuracy in empirical

comparison with 3 other different architectures: Neural Network (NN), Deep Neural Network (DNN) and Long-Term

Memory (LSTM).

As the input data is compose of unstructured and nonuniform texts it is essential normalize the data to study

little insights and valuable relationships to work with the best features of them. In this way, learning is

facilitated and allows the gradient descent to converge more quickly.

The first layer of the model is a pre-trained Word2Vec embedding layer as a method of extracting features from the data that can

replace one-hot coding with dimensional reduction. The pre-training of this model is explained further in this document.

After the embedding layer, there is the CNN classification model. The architecture of the CNN network is composed of a 50% dropout layer followed by two 1D convolution layers

associated with a MaxPooling layer. After maximum grouping, a dense layer of size 128 is added connected to

a 50% dropout which finally connects to a flattened layer and the final sort dense layer. The dropout layers

helped to avoid network overfitting by masking part of the data so that the network learned to create

redundancies in the analysis of the inputs.

## Model variations

Table 1 below presents the results of several implementations with different architectures, highlighting the

accuracy, f1-score, recall and precision results obtained in the training of each network.

Table 1: Results of experiments

| Model | Accuracy | F1-score | Recall | Precision |

|------------------------|----------|----------|--------|-----------|

| Keras Embedding + SNN | 92.47 | 88.46 | 79.66 | 100.00 |

| Keras Embedding + DNN | 89.78 | 84.41 | 77.81 | 92.57 |

| Keras Embedding + CNN | 93.01 | 89.91 | 85.18 | 95.69 |

| Keras Embedding + LSTM | 93.01 | 88.94 | 83.32 | 95.54 |

| Word2Vec + SNN | 89.25 | 83.82 | 74.15 | 97.10 |

| Word2Vec + DNN | 90.32 | 86.52 | 85.18 | 88.70 |

| Word2Vec + CNN | 92.47 | 88.42 | 80.85 | 98.72 |

| Word2Vec + LSTM | 89.78 | 84.36 | 75.36 | 95.81 |

| Longformer + SNN | 61.29 | 0 | 0 | 0 |

| Longformer + DNN | 91.93 | 87.62 | 80.37 | 97.62 |

| Longformer + CNN | 94.09 | 90.69 | 83.41 | 100.00 |

| Longformer + LSTM | 61.29 | 0 | 0 | 0 |

Table 2 bellow shows the times required for training each epoch, the data validation execution time and the weight of the deep learning

model associated with each implementation.

Table 2: Results of Training time epoch, Validation time and Weight

| Model | Training time epoch(s) | Validation time (s) | Weight(MB) |

|------------------------|:-----------------------:|:-------------------:|:----------:|

| Keras Embedding + SNN | 100.00 | 0.2 | 0.7 | 1.8 |

| Keras Embedding + DNN | 92.57 | 1.0 | 1.4 | 7.6 |

| Keras Embedding + CNN | 95.69 | 0.4 | 1.1 | 3.2 |

| Keras Embedding + LSTM | 95.54 | 1.4 | 2.0 | 1.8 |

| Word2Vec + SNN | 97.10 | 1.4 | 1.2 | 9.6 |

| Word2Vec + DNN | 88.70 | 2.0 | 6.8 | 7.8 |

| Word2Vec + CNN | 98.72 | 1.9 | 3.4 | 4.7 |

| Word2Vec + LSTM | 95.81 | 2.6 | 14.3 | 1.2 |

| Longformer + SNN | 0 | 128.0 | 1.5 | 36.8 |

| Longformer + DNN | 97.62 | 81.0 | 8.4 | 12.7 |

| Longformer + CNN | 100.00 | 57.0 | 4.5 | 9.6 |

| Longformer + LSTM | 0 | 13.0 | 8.6 | 2.6 |

In addition, it is possible to notice that the model of Longformer + SNN and Longformer + LSTM were not able

to learn. Perhaps the models need some adjustments, however, each training attempt takes between 5 and 8 hours,

which made the attempt to adjust unfeasible in view of other models already showing promising results.

With Longformer the problems caused by the size of the model became more visible. First, it was necessary to

actively deallocate unused chunks of memory right after use so that the next steps could be loaded. Then, it

was necessary to use a CPU environment for training the networks because the weight of the model exceeded the

16GB of video memory available on the P100 board, available in Colab during training. In this case, the high

RAM environment was used, which delivers 25GB of memory for use with the CPU, and this means a longer time

required for training since the GPU performs matrix operations faster then a CPU. These models were trained

5x with 100 training epochs each.

## Intended uses

### How to use

This model is available in huggingface spaces to be applied to excel files containing scrapped oportunity data.

- [NLP MCTI Classification Multi](https://huggingface.co/spaces/unb-lamfo-nlp-mcti/NLP-W2V-CNN-Multi)

You can also find the training and evaluation notebooks in the github repository:

- [PPF-MCTI Repository](https://github.com/chap0lin/PPF-MCTI)

### Limitations and bias

This model is uncased: it does not make a difference between english and English.

This model depends on high-quality scrapped data. Since the model understands a finite number of words, the input needs to

have little to no wrong encodings and abstract markdowns so that the preprocessing can remove them and correctly identify the words.

Even if the training data used for this model could be characterized as fairly neutral, this model can have biased

predictions:

Performance limiting: Loading the longformer model in memory means needing 11Gb available only for the model,

without considering the weight of the deep learning network. For training this means we need a 20+ Gb GPU to

perform the training. Here this was resolved using the high RAM environment of google Colab Pro and training

using CPU which justifies the longer training time per season.

Replicability limitation: Due to the simplicity of the keras embedding model, we are using one hot encoding,

and it has a delicate problem for replication in production. This detail is pending further study to define

whether it is possible to use one of these models.

This bias will also affect all fine-tuned versions of this model.

## Training data

The [inputted training data](https://github.com/chap0lin/PPF-MCTI/tree/master/Datasets) was obtained from scrapping techniques, over 30 different platforms e.g. The Royal Society,

Annenberg foundation, and contained 928 labeled entries (928 rows x 21 columns). Of the data gathered, was used only

the main text content (column u). Text content averages 800 tokens in length, but with high variance, up to 5,000 tokens.

## Training procedure

### Model training with Word2Vec embeddings

After the pre-trained model of word2vec embeddings had already learned meanings relevant to the classification problem,

it was coupled to the classification model to train it with the labeled data in a supervised way. Table 6 shows the results

obtained with related metrics. With this implementation, was reached new levels of accuracy with 86% for CNN architecture

and 88% for the LSTM architecture.

### Preprocessing

Pre-processing was used to standardize the texts for the English language, reduce the number of insignificant tokens and

optimize the training of the models.

The following assumptions were considered:

- The Data Entry base is obtained from the result of Goal 4.

- Labeling (Goal 4) is considered true for accuracy measurement purposes;

- Preprocessing experiments compare accuracy in a shallow neural network (SNN);

- Pre-processing was investigated for the classification goal.

From the Database obtained in Goal 4, stored in the project's [GitHub](https://github.com/mcti-sefip/mcti-sefip-ppfcd2020/blob/scraps-desenvolvimento/Rotulagem/db_PPF_validacao_para%20UNB_%20FINAL.xlsx), a Notebook was developed in [Google Colab](https://colab.research.google.com)

to implement the [preprocessing code](https://github.com/mcti-sefip/mcti-sefip-ppfcd2020/blob/pre-processamento/Pre_Processamento/MCTI_PPF_Pr%C3%A9_processamento.ipynb), which also can be found on the project's GitHub.

Several Python packages were used to develop the preprocessing code:

Table 3: Python packages used

| Objective | Package |

|--------------------------------------------------------|--------------|

| Resolve contractions and slang usage in text | [contractions](https://pypi.org/project/contractions) |

| Natural Language Processing | [nltk](https://pypi.org/project/nltk) |

| Others data manipulations and calculations included in Python 3.10: io, json, math, re (regular expressions), shutil, time, unicodedata; | [numpy](https://pypi.org/project/numpy) |

| Data manipulation and analysis | [pandas](https://pypi.org/project/pandas) |

| http library | [requests](https://pypi.org/project/requests) |

| Training model | [scikit-learn](https://pypi.org/project/scikit-learn) |

| Machine learning | [tensorflow](https://pypi.org/project/tensorflow) |

| Machine learning | [keras](https://keras.io/) |

| Translation from multiple languages to English | [translators](https://pypi.org/project/translators) |

As detailed in the notebook on [GitHub](https://github.com/mcti-sefip/mcti-sefip-ppfcd2020/blob/pre-processamento/Pre_Processamento/MCTI_PPF_Pr%C3%A9_processamento), in the pre-processing, code was created to build and evaluate 8 (eight) different

bases, derived from the base of goal 4, with the application of the methods shown in table 4.

Table 4: Preprocessing methods evaluated

| id | Experiments |

|--------|------------------------------------------------------------------------|

| Base | Original Texts |

| xp1 | Expand Contractions |

| xp2 | Expand Contractions + Convert text to lowercase |

| xp3 | Expand Contractions + Remove Punctuation |

| xp4 | Expand Contractions + Remove Punctuation + Convert text to lowercase |

| xp5 | xp4 + Stemming |

| xp6 | xp4 + Lemmatization |

| xp7 | xp4 + Stemming + Stopwords Removal |

| xp8 | xp4 + Lemmatization + Stopwords Removal |

First, the treatment of punctuation and capitalization was evaluated. This phase resulted in the construction and

evaluation of the first four bases (xp1, xp2, xp3, xp4).

Then, the content simplification was evaluated, from the xp4 base, considering stemming (xp5), Lemmatization (xp6),

stemming + stopwords removal (xp7), and Lemmatization + stopwords removal (xp8).

All eight bases were evaluated to classify the eligibility of the opportunity, through the training of a shallow

neural network (SNN – Shallow Neural Network). The metrics for the eight bases were evaluated. The results are

shown in Table 5.

Table 5: Results obtained in Preprocessing

| id | Experiment | acurácia | f1-score | recall | precision | Média(s) | N_tokens | max_lenght |

|--------|------------------------------------------------------------------------|----------|----------|--------|-----------|----------|----------|------------|

| Base | Original Texts | 89,78% | 84,20% | 79,09% | 90,95% | 417,772 | 23788 | 5636 |

| xp1 | Expand Contractions | 88,71% | 81,59% | 71,54% | 97,33% | 414,715 | 23768 | 5636 |

| xp2 | Expand Contractions + Convert text to lowercase | 90,32% | 85,64% | 77,19% | 97,44% | 368,375 | 20322 | 5629 |

| xp3 | Expand Contractions + Remove Punctuation | 91,94% | 87,73% | 79,66% | 98,72% | 386,650 | 22121 | 4950 |

| xp4 | Expand Contractions + Remove Punctuation + Convert text to lowercase | 90,86% | 86,61% | 80,85% | 94,25% | 326,830 | 18616 | 4950 |

| xp5 | xp4 + Stemming | 91,94% | 87,68% | 78,47% | 100,00% | 257,960 | 14319 | 4950 |

| xp6 | xp4 + Lemmatization | 89,78% | 85,06% | 79,66% | 91,87% | 282,645 | 16194 | 4950 |

| xp7 | xp4 + Stemming + Stopwords Removal | 92,47% | 88,46% | 79,66% | 100,00% | 210,320 | 14212 | 2817 |

| xp8 | ap4 + Lemmatization + Stopwords Removal | 92,47% | 88,46% | 79,66% | 100,00% | 225,580 | 16081 | 2726 |

Even so, between these two excellent options, one can judge which one to choose. XP7: It has less training time,

less number of unique tokens. XP8: It has smaller maximum sizes. In this case, the criterion used for the choice

was the computational cost required to train the vector representation models (word-embedding, sentence-embeddings,

document-embedding). The training time is so close that it did not have such a large weight for the analysis.

As a last step, a spreadsheet was generated for the model (xp8) with the fields opo_pre and opo_pre_tkn, containing the

preprocessed text in sentence format and tokens, respectively. This [database](https://github.com/mcti-sefip/mcti-sefip-ppfcd2020/blob/pre-processamento/Pre_Processamento/oportunidades_final_pre_processado.xlsx) was made

available on the project's GitHub with the inclusion of columns opo_pre (text) and opo_pre_tkn (tokenized).

### Pretraining

Since labeled data is scarce, word-embeddings was trained in an unsupervised manner using other datasets that

contain most of the words it needs to learn. The idea implemented was based on introducing better and better-trained

word embeddings in the model. For an additional dataset to be applied to improve word-embedding training, it must be

compatible with the dataset used to train the classifier. Was searched for datasets from the Kaggle, a platform with

over a thousand available NLP datasets, and the closest we found was the BBC News Articles dataset, which achieved

only 56% compatibility.

The alternative was to use web scraping algorithms to acquire more unlabeled data from the same sources, thus ensuring

compatibility. The original dataset had 260 labeled entries.

Table 6: Compatibility results (*base = labeled MCTI dataset entries)

| Dataset | |

|--------------------------------------|:----------------------:|

| Labeled MCTI | 100% |

| Full MCTI | 100% |

| BBC News Articles | 56.77% |

| New unlabeled MCTI | 75.26% |

Table 7: Results from Pre-trained WE + ML models

| ML Model | Accuracy | F1 Score | Precision | Recall |

|:--------:|:---------:|:---------:|:---------:|:---------:|

| NN | 0.8269 | 0.8545 | 0.8392 | 0.8712 |

| DNN | 0.7115 | 0.7794 | 0.7255 | 0.8485 |

| CNN | 0.8654 | 0.9083 | 0.8486 | 0.9773 |

| LSTM | 0.8846 | 0.9139 | 0.9056 | 0.9318 |

## Evaluation results

The table below presents the results of accuracy, f1-score, recall and precision obtained in the training of each network.

In addition, the necessary times for training each epoch, the data validation execution time and the weight of the deep

learning model associated with each implementation were added.

Table 8: Results of experiments

| Model | Accuracy | F1-score | Recall | Precision | Training time epoch(s) | Validation time (s) | Weight(MB) |

|------------------------|----------|----------|--------|-----------|------------------------|---------------------|------------|

| Keras Embedding + SNN | 92.47 | 88.46 | 79.66 | 100.00 | 0.2 | 0.7 | 1.8 |

| Keras Embedding + DNN | 89.78 | 84.41 | 77.81 | 92.57 | 1.0 | 1.4 | 7.6 |

| Keras Embedding + CNN | 93.01 | 89.91 | 85.18 | 95.69 | 0.4 | 1.1 | 3.2 |

| Keras Embedding + LSTM | 93.01 | 88.94 | 83.32 | 95.54 | 1.4 | 2.0 | 1.8 |

| Word2Vec + SNN | 89.25 | 83.82 | 74.15 | 97.10 | 1.4 | 1.2 | 9.6 |

| Word2Vec + DNN | 90.32 | 86.52 | 85.18 | 88.70 | 2.0 | 6.8 | 7.8 |

| Word2Vec + CNN | 92.47 | 88.42 | 80.85 | 98.72 | 1.9 | 3.4 | 4.7 |

| Word2Vec + LSTM | 89.78 | 84.36 | 75.36 | 95.81 | 2.6 | 14.3 | 1.2 |

| Longformer + SNN | 61.29 | 0 | 0 | 0 | 128.0 | 1.5 | 36.8 |

| Longformer + DNN | 91.93 | 87.62 | 80.37 | 97.62 | 81.0 | 8.4 | 12.7 |

| Longformer + CNN | 94.09 | 90.69 | 83.41 | 100.00 | 57.0 | 4.5 | 9.6 |

| Longformer + LSTM | 61.29 | 0 | 0 | 0 | 13.0 | 8.6 | 2.6 |

The results obtained surpassed those achieved in goal 6 and goal 9, with the best accuracy obtained of 94%

in the longformer + CNN model. We can also observe that the models that achieved the best results were those

that used the CNN network for deep learning.

With the motivation to increase accuracy obtained with baseline implementation, was implemented a transfer learning

strategy under the assumption that small data available for training was insufficient for adequate embedding training.

In this context, was considered two approaches:

- Pre-training word embeddings using similar datasets for text classification;

- Using transformers and attention mechanisms (Longformer) to create contextualized embeddings.

Templates using Word2Vec and Longformer also need to be loaded and their weights are as follows:

Table 9: Templates using Word2Vec and Longformer

| Templates | weights |

|------------------------------|---------|

| Longformer | 10.9GB |

| Word2Vec | 56.1MB |

In addition, it was possible to notice that the model of longformer + SNN and longformer + LSTM were not able

to learn. Perhaps the models need some adjustments, but each training attempt took between 5 and 8 hours, which

made it impossible to try to adjust when other models were already showing promising results.

Above the results obtained, it is also necessary to highlight two limitations found for the replication and

training of networks:

These 10Gb of the model exceed the Github limit and did not go to the repository, so to run the system we need

to download the pre-trained network in the notebook and run the encoder-decoder with the data to create the model.

It is advisable to do this in a GPU environment and save the file on the drive. After that change the environment to

CPU to perform the training. Trying to generate the model in CPU will take more than 3 hours of processing.

The best model that does not have any limitations is Word2Vec + CNN. However, we need to study the limitations to

understand whether it is possible to introduce a new model with better accuracy and indicators. These adjustments

will be worked on during goals 13 and 14 where the main objective will be to encapsulate the solution in the most

suitable way for use in production.

## Benchmarks

### BibTeX entry and citation info

```bibtex

@conference{webist22,

author ={Carlos Rocha. and Marcos Dib. and Li Weigang. and Andrea Nunes. and Allan Faria. and Daniel Cajueiro.

and Maísa {Kely de Melo}. and Victor Celestino.},

title ={Using Transfer Learning To Classify Long Unstructured Texts with Small Amounts of Labeled Data},

booktitle ={Proceedings of the 18th International Conference on Web Information Systems and Technologies - WEBIST,},

year ={2022},

pages ={201-213},

publisher ={SciTePress},

organization ={INSTICC},

doi ={10.5220/0011527700003318},

isbn ={978-989-758-613-2},

issn ={2184-3252},

}

```

<a href="https://huggingface.co/exbert/?model=bert-base-uncased">

<img width="300px" src="https://cdn-media.huggingface.co/exbert/button.png">

</a>

|