# -*- coding: utf-8 -*-

"""Oim "Stable Diffusion WebUI"

Automatically generated by Colaboratory.

Original file is located at

https://colab.research.google.com/drive/1EiNg8Q0RbfxgNGZ70THUhKPNmGIfxhX7

# Welcome to Stable Diffusion WebUI 1.4! by [@altryne](https://twitter.com/altryne/) | [Ko-fi](https://ko-fi.com/N4N3DWMR1)

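This colab runs the webui from https://github.com/hlky/stable-diffusion-webui. Section 1.1 clones https://github.com/hlky/stable-diffusion and checks out a fixed commit (c0c2a7c), so it does not automatically pick up the latest version.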

---

If this colab helped you, support me on Ko-fi, and don't forget to subscribe to my awesome list https://github.com/altryne/awesome-ai-art-image-synthesis

## 1 - Setup stage

"""

!nvidia-smi -L
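
"""The `!nvidia-smi -L` line above only prints the GPU list. If you would rather have the notebook stop early on a CPU-only runtime, the same check could be wrapped in plain Python. This is a minimal sketch, not part of the webui repo; `list_gpus` is a hypothetical helper name.

```python
import shutil
import subprocess
from typing import List


def list_gpus() -> List[str]:
    # Return one entry per GPU reported by `nvidia-smi -L`, or [] if none can be found.
    if shutil.which("nvidia-smi") is None:
        # No NVIDIA driver on this runtime (e.g. a CPU-only Colab session).
        return []
    result = subprocess.run(
        ["nvidia-smi", "-L"], capture_output=True, text=True, check=False
    )
    if result.returncode != 0:
        return []
    # Each physical GPU is printed on its own line starting with "GPU <index>:".
    return [line for line in result.stdout.splitlines() if line.startswith("GPU")]


gpus = list_gpus()
if gpus:
    for gpu in gpus:
        print(gpu)
else:
    print("No GPU detected: select a GPU runtime via Runtime > Change runtime type.")
```

On a GPU runtime this prints the same lines as `nvidia-smi -L`. Raising an exception in the `else` branch instead of printing would halt "Run all" before the long setup steps.
"""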

"""### 1.1 Download repo and install

Clone the git repo and set up Miniconda.

After this cell finishes, Colab will report that the runtime crashed. This is expected (condacolab restarts the Python kernel); just run the next cell, or use Runtime > Run after.

"""

# Commented out IPython magic to ensure Python compatibility.

#@markdown ## Download the stable-diffusion repo from hlky

#@markdown and install conda for Colab (condacolab)

!git clone https://github.com/hlky/stable-diffusion

# %cd /content/stable-diffusion

!git checkout c0c2a7c0d55561cfb6f42a3681346b9b70749ff1

!pip install -e .

!pip install condacolab

import condacolab

condacolab.install_miniconda()

"""### 1.2 Environment setup

Set up the conda environment, GFPGAN and Real-ESRGAN.

Setup takes about 5-6 minutes.

"""

#@markdown ### Set up conda environment - Takes a while

!conda env update -n base -f /content/stable-diffusion/environment.yaml

"""### 1.3 Set up upscalers - GFPGAN and ESRGAN"""

# Commented out IPython magic to ensure Python compatibility.

#@markdown ### Build upscalers support

#@markdown **GFPGAN** automatically corrects distorted faces with a built-in GFPGAN option, fixing them in less than half a second

#@markdown **ESRGAN** boosts the resolution of images with a built-in RealESRGAN option

add_CFP = True #@param {type:"boolean"}

add_ESR = True #@param {type:"boolean"}

if add_CFP:
    # %cd /content/stable-diffusion/src/gfpgan/
    !pip install basicsr facexlib yapf lmdb opencv-python pyyaml tb-nightly --no-deps
    !python setup.py develop
    !pip install realesrgan
    !wget https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.3.pth -P experiments/pretrained_models

if add_ESR:
    # %cd /content/stable-diffusion/src/realesrgan/
    !wget https://github.com/xinntao/Real-ESRGAN/releases/download/v0.1.0/RealESRGAN_x4plus.pth -P experiments/pretrained_models
    !wget https://github.com/xinntao/Real-ESRGAN/releases/download/v0.2.2.4/RealESRGAN_x4plus_anime_6B.pth -P experiments/pretrained_models

# %cd /content/stable-diffusion/
!wget https://github.com/matomo-org/travis-scripts/blob/master/fonts/Arial.ttf?raw=true -O arial.ttf
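
"""The `wget -P` calls above fetch the weights again every time the cell is re-run (by default wget keeps the existing file and saves the new copy under a numbered name such as `GFPGANv1.3.pth.1`). A minimal sketch of an idempotent alternative in plain Python, assuming the same URLs and target folders; `download_if_missing` and `filename_from_url` are hypothetical helpers, not part of the repo.

```python
import os
import urllib.parse
import urllib.request
from typing import Optional


def filename_from_url(url: str) -> str:
    # Last path component, ignoring any query string such as "?raw=true".
    return os.path.basename(urllib.parse.urlparse(url).path)


def download_if_missing(url: str, dest_dir: str, filename: Optional[str] = None) -> str:
    # Download `url` into `dest_dir` unless a non-empty file with that name already exists.
    os.makedirs(dest_dir, exist_ok=True)
    path = os.path.join(dest_dir, filename or filename_from_url(url))
    if os.path.isfile(path) and os.path.getsize(path) > 0:
        return path  # already present: skip the network request
    tmp_path = path + ".part"
    # Write to a temporary name first so an interrupted download is not mistaken
    # for a finished one on the next run.
    urllib.request.urlretrieve(url, tmp_path)
    os.replace(tmp_path, path)
    return path
```

For example, `download_if_missing('https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.3.pth', '/content/stable-diffusion/src/gfpgan/experiments/pretrained_models')` mirrors the GFPGAN `wget` line, and passing `filename='arial.ttf'` mirrors the font download's `-O arial.ttf`.
"""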

#@markdown # Load the stable-diffusion model (uses Google Drive by default)

#@markdown If it isn't already in your Google Drive, download the Stable Diffusion v1.4 model from [Hugging Face](https://huggingface.co/CompVis/stable-diffusion-v1-4); the launch cell expects the checkpoint file to be named `sd-v1-4.ckpt`

#@markdown Then upload the file to the models folder in your Google Drive (`MyDrive/AI/models` by default); you'll be asked to connect Drive below

#@markdown **Model Path Variables**

# Local default paths (replaced by the Google Drive paths below when Drive is mounted)

print("Local Path Variables:\n")

models_path = "/content/models" #@param {type:"string"}

output_path = "/content/output" #@param {type:"string"}

#@markdown **Google Drive Path Variables (Optional)**

mount_google_drive = True #@param {type:"boolean"}

force_remount = False

if mount_google_drive:
    from google.colab import drive
    try:
        drive_path = "/content/drive"
        drive.mount(drive_path, force_remount=force_remount)
        models_path_gdrive = "/content/drive/MyDrive/AI/models" #@param {type:"string"}
        output_path_gdrive = "/content/drive/MyDrive/AI/StableDiffusion" #@param {type:"string"}
        models_path = models_path_gdrive
        output_path = output_path_gdrive
    except Exception:
        # Keep the local paths set above if mounting fails.
        print("...error mounting drive or with drive path variables")
        print("...reverting to default path variables")

import os

os.makedirs(models_path, exist_ok=True)

os.makedirs(output_path, exist_ok=True)

print(f"models_path: {models_path}")

print(f"output_path: {output_path}")
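
"""Section 3 launches the webui with `--ckpt '{models_path}/sd-v1-4.ckpt'`, so a missing checkpoint only surfaces once webui.py starts loading the model. This matters especially when the Drive mount fails, because `models_path` then falls back to the empty `/content/models`. A minimal sketch of an early check, assuming the same file name; `find_checkpoint` is a hypothetical helper.

```python
import os


def find_checkpoint(models_path: str, filename: str = "sd-v1-4.ckpt") -> str:
    # Return the checkpoint path the launch cell will pass to --ckpt,
    # or fail immediately with a message saying where the file should go.
    ckpt_path = os.path.join(models_path, filename)
    if not os.path.isfile(ckpt_path):
        raise FileNotFoundError(
            f"{ckpt_path} not found. Download {filename} from Hugging Face and put it in {models_path}."
        )
    return ckpt_path
```

Calling `find_checkpoint(models_path)` at the end of the cell above would stop the notebook before the launch step if the model is not in place.
"""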

"""## 2 - Run the Stable Diffusion webui

### 2.1 Optional - Set WebUI settings and configs before running

"""

#@markdown # Launch preferences - Advanced

share_password="oimetagi" #@param {type:"string"}

#@markdown * Password for the shared webui (note: the launch command in section 3 does not pass this value to webui.py)

defaults="configs/webui/webui.yaml" #@param {type:"string"}

#@markdown * Path to the configuration file that provides the UI defaults (same format as the CLI parameters)

#@markdown Edit this file to change the default settings the UI launches with

#@markdown ---

save_metadata = False #@param {type:"boolean"}

#@markdown * Whether to embed the generation parameters in the sample images

skip_grid = False #@param {type:"boolean"}

#@markdown * Do not save a grid, only individual samples. Helpful when evaluating lots of samples

skip_save = False #@param {type:"boolean"}

#@markdown * Do not save individual samples as files. Useful for speed measurements

optimized = False #@param {type:"boolean"}

#@markdown * Load the model onto the device piecemeal instead of all at once to reduce VRAM usage at the cost of performance

optimized_turbo = True #@param {type:"boolean"}

#@markdown * Alternative optimization mode that does not save as much VRAM but runs significantly faster

no_verify_input = False #@param {type:"boolean"}

#@markdown * Do not verify input to check if it's too long

no_half = False #@param {type:"boolean"}

#@markdown * Do not switch the model to 16-bit floats

no_progressbar_hiding = True #@param {type:"boolean"}

#@markdown * Do not hide the progress bar in the Gradio UI

extra_models_cpu = False #@param {type:"boolean"}

#@markdown * Run extra models (GFPGAN/ESRGAN) on CPU

esrgan_cpu = True #@param {type:"boolean"}

#@markdown * Run ESRGAN on CPU

gfpgan_cpu = True #@param {type:"boolean"}

#@markdown * Run GFPGAN on CPU

run_string_with_variables = {

'--save-metadata': f'{save_metadata}',

'--skip-grid': f'{skip_grid}',

'--skip-save': f'{skip_save}',

'--optimized': f'{optimized}',

'--optimized-turbo': f'{optimized_turbo}',

'--no-verify-input': f'{no_verify_input}',

'--no-half': f'{no_half}',

'--no-progressbar-hiding': f'{no_progressbar_hiding}',

'--extra-models-cpu': f'{extra_models_cpu}',

'--esrgan-cpu': f'{esrgan_cpu}',

'--gfpgan-cpu': f'{gfpgan_cpu}'}

only_true_vars = {k for (k,v) in run_string_with_variables.items() if v == 'True'}

vars = " ".join(only_true_vars)
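
"""The cell above turns each boolean into a string, compares it with `'True'`, and collects the matching flags in a set, so the flag order can change between runs (harmless for argparse, but noisy when comparing logs). A minimal sketch of the same selection that keeps the booleans and preserves the order in which the options are listed; `enabled_flags` is a hypothetical name, and only a subset of the flags above is shown, with the form's default values.

```python
from typing import Dict, List


def enabled_flags(options: Dict[str, bool]) -> List[str]:
    # Keep the flags whose option is True, in the order they appear in `options`.
    return [flag for flag, enabled in options.items() if enabled]


# A subset of the flags above, mapped to the form's default boolean values.
launch_options = {
    "--skip-grid": False,
    "--optimized-turbo": True,
    "--no-half": False,
    "--no-progressbar-hiding": True,
    "--esrgan-cpu": True,
    "--gfpgan-cpu": True,
}
flag_string = " ".join(enabled_flags(launch_options))
print(flag_string)  # --optimized-turbo --no-progressbar-hiding --esrgan-cpu --gfpgan-cpu
```

In the notebook, the dictionary values would be the form variables themselves (`skip_grid`, `optimized_turbo`, and so on), and the joined string could be assigned to `vars`.
"""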

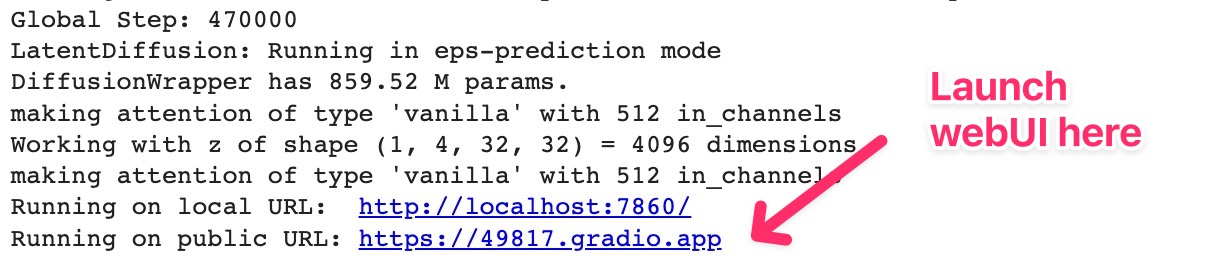

"""## 3 - Launch WebUI for stable diffusion"""

# Commented out IPython magic to ensure Python compatibility.

#@markdown **Keep in mind:** this cell is set to run forever, and Google will disconnect you after 90 minutes on the free tier

#@markdown # Important - click the public URL to launch WebUI in another tab

#@markdown

# %cd /content/stable-diffusion

!python /content/stable-diffusion/scripts/webui.py \
    --ckpt '{models_path}/sd-v1-4.ckpt' \
    --outdir '{output_path}' \
    --share {vars}
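
"""The launch line relies on IPython's `!` shell escape and `{...}` interpolation, so it only runs inside Colab or Jupyter. As a minimal sketch, assuming the same script path, checkpoint name and flags, the command could instead be built as an argument list and started with `subprocess`, which avoids shell quoting entirely; `build_webui_argv` and `launch_webui` are hypothetical helpers, not part of the webui repo.

```python
import shlex
import subprocess
from typing import List

REPO_DIR = "/content/stable-diffusion"  # same checkout as in section 1.1


def build_webui_argv(models_path: str, output_path: str, extra_flags: List[str]) -> List[str]:
    # Mirror the `!python .../scripts/webui.py ...` launch line as an argument list.
    return [
        "python",  # assumption: the conda env's python is first on PATH, as for `!python`
        f"{REPO_DIR}/scripts/webui.py",
        "--ckpt", f"{models_path}/sd-v1-4.ckpt",
        "--outdir", output_path,
        "--share",
        *extra_flags,
    ]


def launch_webui(argv: List[str]) -> None:
    # Blocks until the webui exits; no shell is involved, so paths need no quoting.
    print("Running:", shlex.join(argv))
    subprocess.run(argv, cwd=REPO_DIR, check=True)
```

`launch_webui(build_webui_argv(models_path, output_path, vars.split()))` would reproduce the cell above, with `cwd` taking the place of the commented-out `%cd`.
"""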