Spaces:

Runtime error

Runtime error

File size: 4,312 Bytes

4be4880 0f8b50e 5cd3b27 4be4880 5cd3b27 4be4880 6d0b4f5 f11e0b8 4be4880 7a8c58a dcd3a45 5cd3b27 4be4880 10e9596 4be4880 10e9596 4be4880 10e9596 4be4880 10e9596 4be4880 10e9596 4be4880 e339a03 4be4880 8229a2a e339a03 8229a2a e339a03 4be4880 e339a03 4be4880 |

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 |

from deepsparse import Pipeline

import time

import gradio as gr

markdownn = '''

# Text Classification Pipeline with DeepSparse

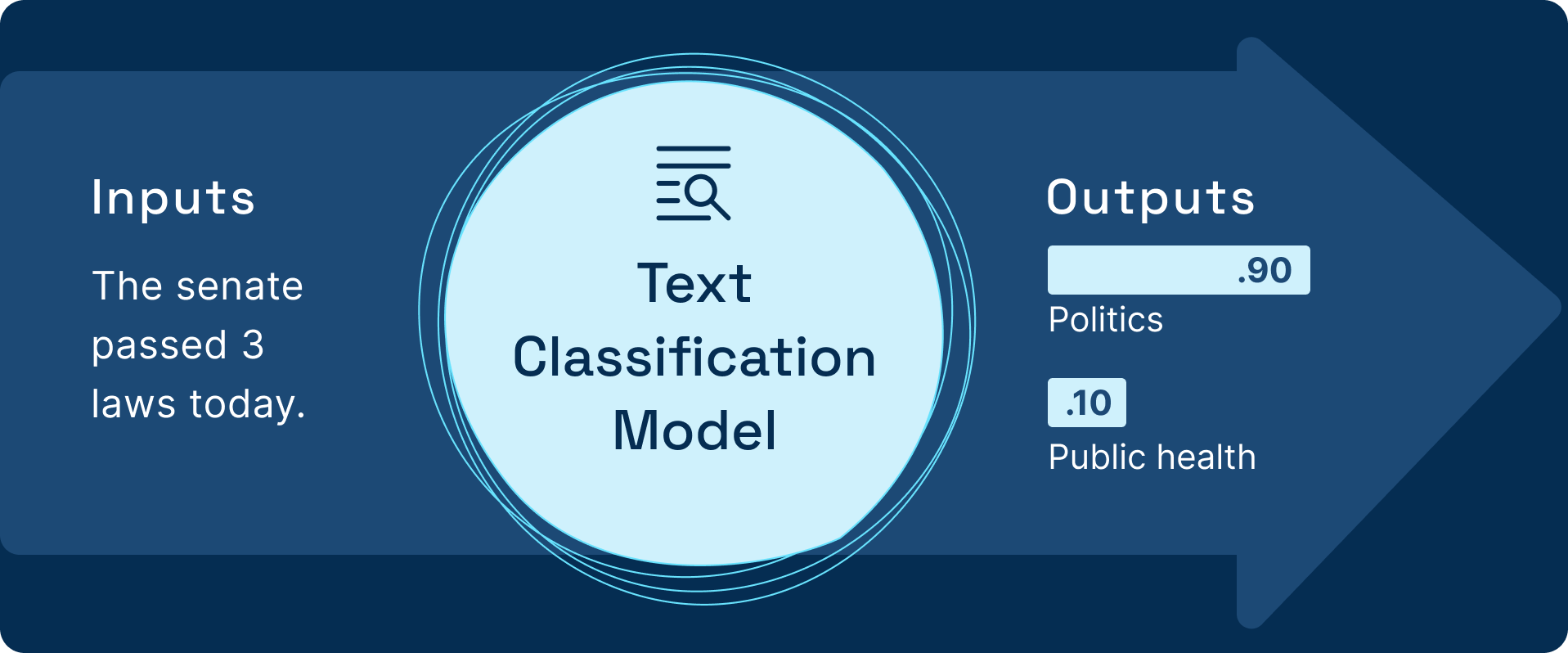

Text Classification involves assigning a label to a given text. For example, sentiment analysis is an example of a text classification use case.

## What is DeepSparse

DeepSparse is sparsity-aware inference runtime offering GPU-class performance on CPUs and APIs to integrate ML into your application. DeepSparse provides sparsified pipelines for computer vision and NLP.

The text classification Pipeline, for example, wraps an NLP model with the proper preprocessing and postprocessing pipelines, such as tokenization.

### Inference

Here is sample code for a text classification pipeline:

```

from deepsparse import Pipeline

pipeline = Pipeline.create(task="zero_shot_text_classification", model_path="zoo:nlp/text_classification/distilbert-none/pytorch/huggingface/mnli/pruned80_quant-none-vnni",model_scheme="mnli",model_config={"hypothesis_template": "This text is related to {}"},)

inference = pipeline(sequences= text,labels=['politics', 'public health', 'Europe'],)

print(inference)

```

## Use case example

Customer review classification is a great example of text classification in action.

The ability to quickly classify sentiment from customers is an added advantage for any business.

Therefore, whichever solution you deploy for classifying the customer reviews should deliver results in the shortest time possible.

By being fast the solution will process more volume, hence cheaper computational resources are utilized.

When deploying a text classification model, decreasing the model’s latency and increasing its throughput is critical. This is why DeepSparse Pipelines have sparse text classification models.

## Resources

[Classify Even Longer Customer Reviews Using Sparsity with DeepSparse](https://neuralmagic.com/blog/accelerate-customer-review-classification-with-sparse-transformers/)

'''

task = "zero_shot_text_classification"

dense_classification_pipeline = Pipeline.create(

task=task,

model_path="zoo:nlp/text_classification/distilbert-none/pytorch/huggingface/mnli/base-none",

model_scheme="mnli",

model_config={"hypothesis_template": "This text is related to {}"},

)

sparse_classification_pipeline = Pipeline.create(

task=task,

model_path="zoo:nlp/text_classification/distilbert-none/pytorch/huggingface/mnli/pruned80_quant-none-vnni",

model_scheme="mnli",

model_config={"hypothesis_template": "This text is related to {}"},

)

def run_pipeline(text):

dense_start = time.perf_counter()

dense_output = dense_classification_pipeline(sequences= text,labels=['politics', 'public health', 'Europe'],)

dense_result = dict(dense_output)

dense_end = time.perf_counter()

dense_duration = (dense_end - dense_start) * 1000.0

sparse_start = time.perf_counter()

sparse_output = sparse_classification_pipeline(sequences= text,labels=['politics', 'public health', 'Europe'],)

sparse_result = dict(sparse_output)

sparse_end = time.perf_counter()

sparse_duration = (sparse_end - sparse_start) * 1000.0

return sparse_result, sparse_duration, dense_result, dense_duration

with gr.Blocks() as demo:

with gr.Row():

with gr.Column():

gr.Markdown(markdownn)

with gr.Column():

gr.Markdown("""

### Text classification demo

""")

text = gr.Text(label="Text")

btn = gr.Button("Submit")

dense_answers = gr.Textbox(label="Dense model answer")

dense_duration = gr.Number(label="Dense Latency (ms):")

sparse_answers = gr.Textbox(label="Sparse model answers")

sparse_duration = gr.Number(label="Sparse Latency (ms):")

gr.Examples([["Who are you voting for in 2020?"],["Public health is very important"]],inputs=[text],)

btn.click(

run_pipeline,

inputs=[text],

outputs=[sparse_answers,sparse_duration,dense_answers,dense_duration],

)

if __name__ == "__main__":

demo.launch() |