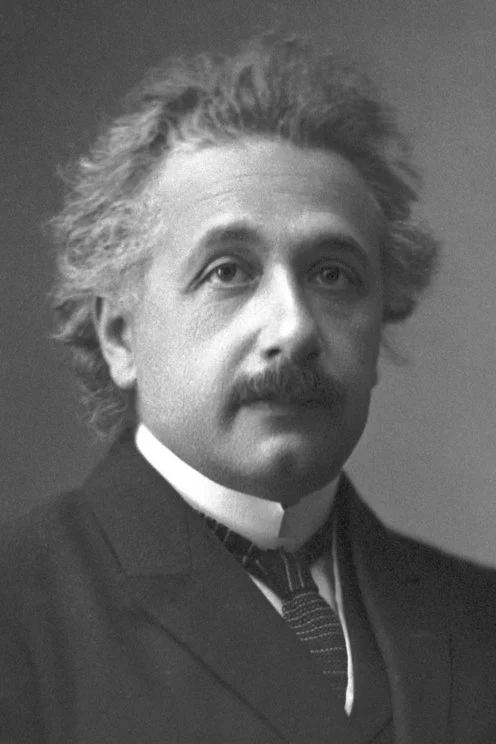

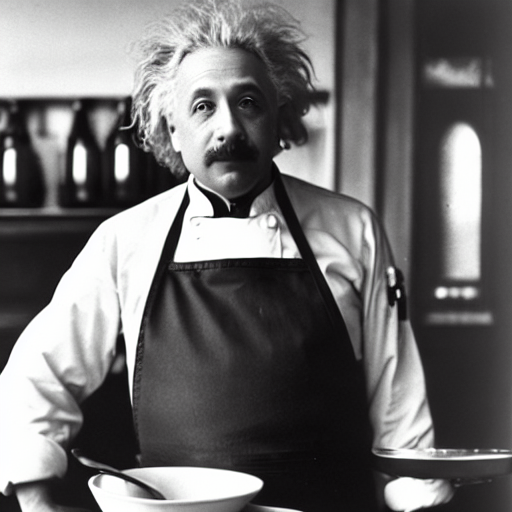

+ Haozhe Zhao1*, Xiaojian Ma2*, Liang Chen1, Shuzheng Si1, Rujie Wu1, Kaikai An1, Peiyu Yu3, Minjia Zhang4, Qing Li2, Baobao Chang2

+ 1Peking University, 2BIGAI, 3UCLA, 4UIUC

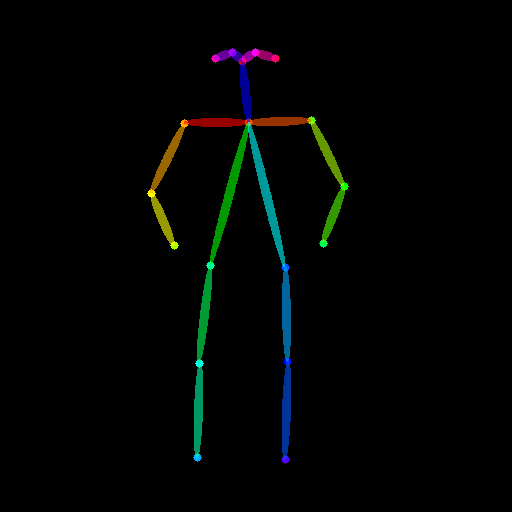

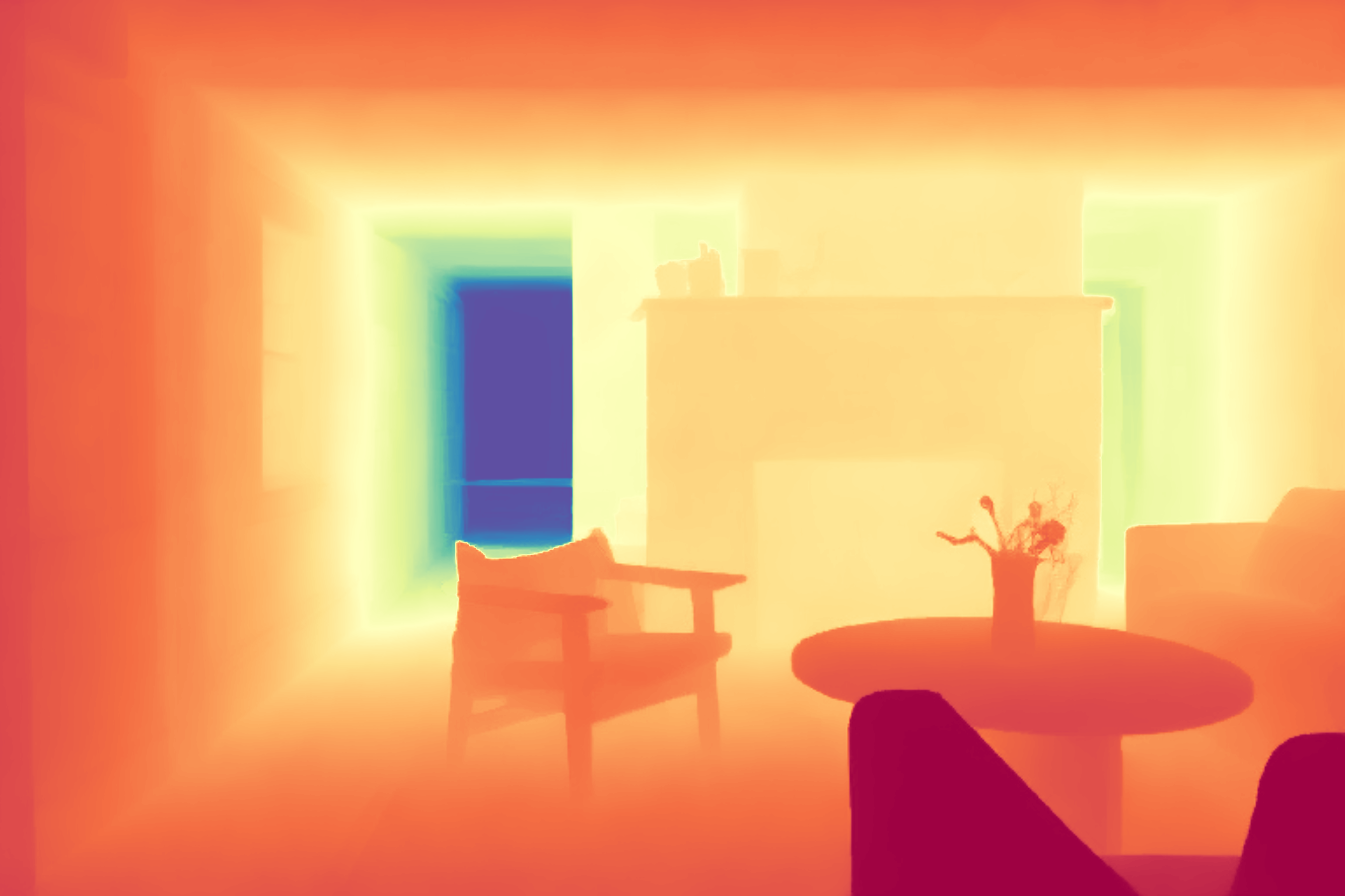

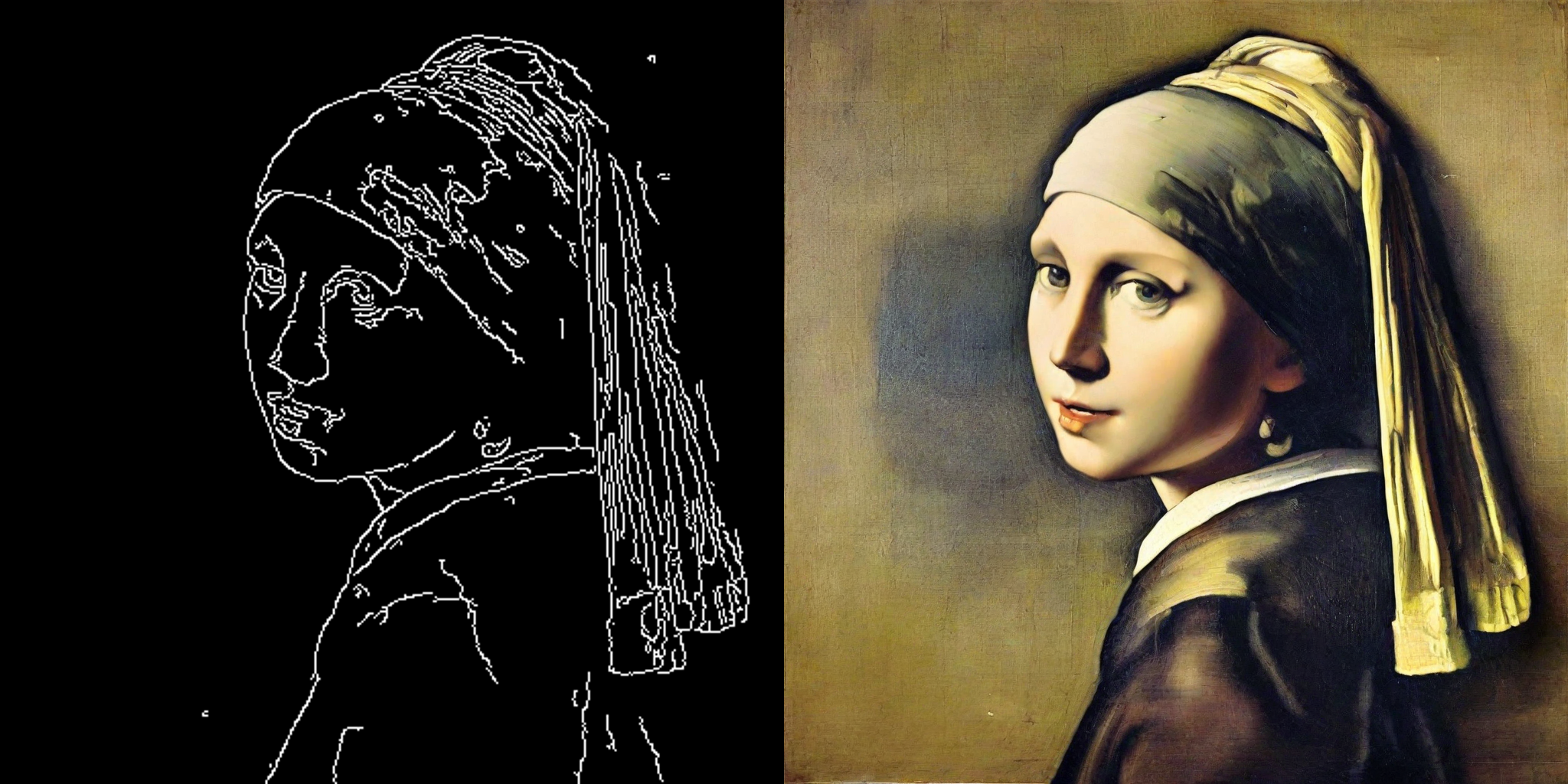

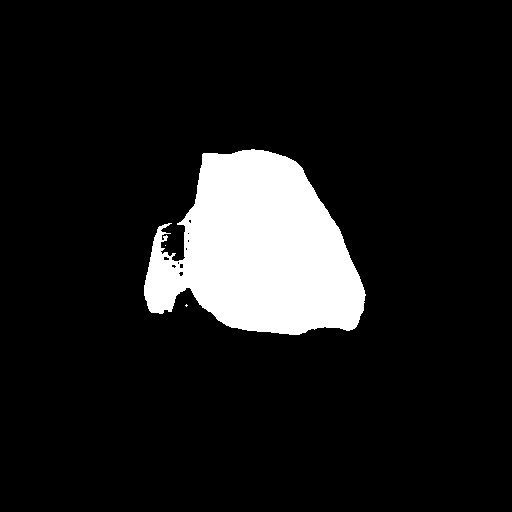

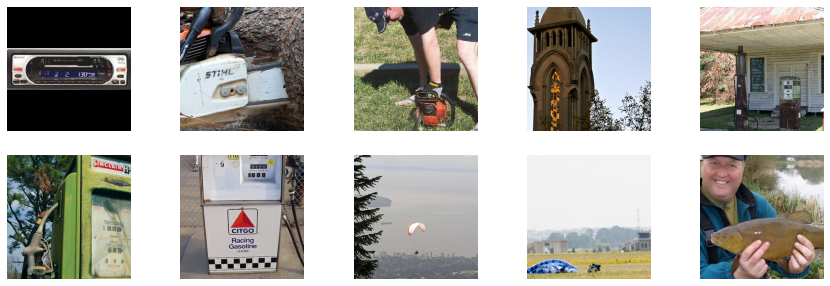

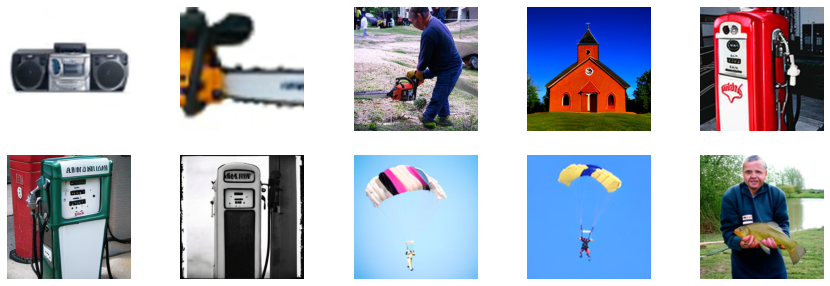

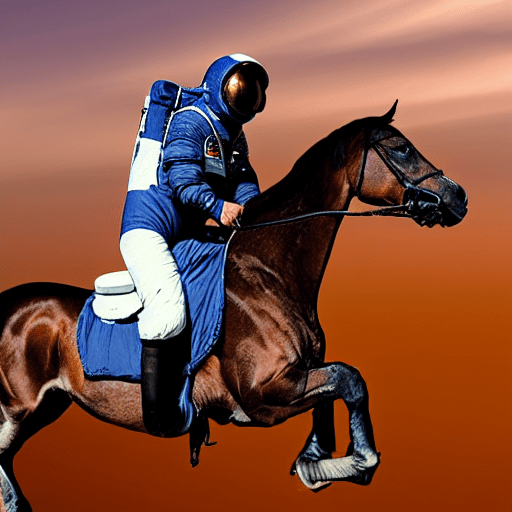

+ UltraEdit is a dataset designed for fine-grained, instruction-based image editing. It contains over 4 million free-form image editing samples and more than 100,000 region-based image editing samples, automatically generated with real images as anchors.

+ This demo lets you edit images with a Stable Diffusion 3 model trained on this dataset. It supports both free-form (without mask) and region-based (with mask) image editing. Use the sliders to adjust the number of inference steps and the guidance scales, and provide a seed for reproducibility. An image guidance scale of 1.5 and a text guidance scale of 7.5 (free-form) or 12.5 (region-based) are a good starting point.

+ Usage Instructions: Upload an image and enter a prompt for the edit. Use the pen tool to mark the areas you want to edit. If no region is marked, the demo falls back to free-form editing.

+Whichever way you choose to contribute, we strive to be part of an open, welcoming, and kind community. Please read our [code of conduct](https://github.com/huggingface/diffusers/blob/main/CODE_OF_CONDUCT.md) and be mindful to respect it during your interactions. We also recommend you become familiar with the [ethical guidelines](https://huggingface.co/docs/diffusers/conceptual/ethical_guidelines) that guide our project and ask you to adhere to the same principles of transparency and responsibility.

+

+We enormously value feedback from the community, so please do not be afraid to speak up if you believe you have valuable feedback that can help improve the library - every message, comment, issue, and pull request (PR) is read and considered.

+

+## Overview

+

+You can contribute in many ways ranging from answering questions on issues to adding new diffusion models to

+the core library.

+

+In the following, we give an overview of different ways to contribute, ranked by difficulty in ascending order. All of them are valuable to the community.

+

+* 1. Asking and answering questions on [the Diffusers discussion forum](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers) or on [Discord](https://discord.gg/G7tWnz98XR).

+* 2. Opening new issues on [the GitHub Issues tab](https://github.com/huggingface/diffusers/issues/new/choose).

+* 3. Answering issues on [the GitHub Issues tab](https://github.com/huggingface/diffusers/issues).

+* 4. Fix a simple issue, marked by the "Good first issue" label, see [here](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22good+first+issue%22).

+* 5. Contribute to the [documentation](https://github.com/huggingface/diffusers/tree/main/docs/source).

+* 6. Contribute a [Community Pipeline](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3Acommunity-examples).

+* 7. Contribute to the [examples](https://github.com/huggingface/diffusers/tree/main/examples).

+* 8. Fix a more difficult issue, marked by the "Good second issue" label, see [here](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22Good+second+issue%22).

+* 9. Add a new pipeline, model, or scheduler, see ["New Pipeline/Model"](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22New+pipeline%2Fmodel%22) and ["New scheduler"](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22New+scheduler%22) issues. For this contribution, please have a look at [Design Philosophy](https://github.com/huggingface/diffusers/blob/main/PHILOSOPHY.md).

+

+As said before, **all contributions are valuable to the community**.

+In the following, we will explain each contribution a bit more in detail.

+

+Contributions 4-9 all require opening a PR. The [Opening a pull request](#how-to-open-a-pr) section explains in detail how to do so.

+

+### 1. Asking and answering questions on the Diffusers discussion forum or on the Diffusers Discord

+

+Any question or comment related to the Diffusers library can be asked on the [discussion forum](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/) or on [Discord](https://discord.gg/G7tWnz98XR). Such questions and comments include (but are not limited to):

+- Reports of training or inference experiments in an attempt to share knowledge

+- Presentation of personal projects

+- Questions about non-official training examples

+- Project proposals

+- General feedback

+- Paper summaries

+- Asking for help on personal projects that build on top of the Diffusers library

+- General questions

+- Ethical questions regarding diffusion models

+- ...

+

+Every question asked on the forum or on Discord encourages the community to share knowledge publicly and may well help a future beginner who has the same question. Please do pose any questions you might have.

+In the same spirit, you are of immense help to the community by answering such questions because this way you are publicly documenting knowledge for everybody to learn from.

+

+**Please** keep in mind that the more effort you put into asking or answering a question, the higher

+the quality of the publicly documented knowledge. In the same way, well-posed and well-answered questions create a high-quality knowledge database accessible to everybody, while badly posed questions or answers reduce the overall quality of the public knowledge database.

+In short, a high-quality question or answer is *precise*, *concise*, *relevant*, *easy-to-understand*, *accessible*, and *well-formatted/well-posed*. For more information, please have a look through the [How to write a good issue](#how-to-write-a-good-issue) section.

+

+**NOTE about channels**:

+[*The forum*](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/63) is much better indexed by search engines, such as Google. Posts are ranked by popularity rather than chronologically. Hence, it's easier to look up questions and answers that were posted some time ago.

+In addition, questions and answers posted in the forum can easily be linked to.

+In contrast, *Discord* has a chat-like format that invites fast back-and-forth communication.

+While it will most likely take less time for you to get an answer to your question on Discord, your

+question won't be visible anymore over time. Also, it's much harder to find information that was posted a while back on Discord. We therefore strongly recommend using the forum for high-quality questions and answers in an attempt to create long-lasting knowledge for the community. If discussions on Discord lead to very interesting answers and conclusions, we recommend posting the results on the forum to make the information more available for future readers.

+

+### 2. Opening new issues on the GitHub issues tab

+

+The 🧨 Diffusers library is robust and reliable thanks to the users who notify us of

+the problems they encounter. So thank you for reporting an issue.

+

+Remember, GitHub issues are reserved for technical questions directly related to the Diffusers library, bug reports, feature requests, or feedback on the library design.

+

+In a nutshell, this means that everything that is **not** related to the **code of the Diffusers library** (including the documentation) should **not** be asked on GitHub, but rather on either the [forum](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/63) or [Discord](https://discord.gg/G7tWnz98XR).

+

+**Please consider the following guidelines when opening a new issue**:

+- Make sure you have searched whether your issue has already been asked before (use the search bar on GitHub under Issues).

+- Please never report a new issue on another (related) issue. If another issue is highly related, please

+open a new issue nevertheless and link to the related issue.

+- Make sure your issue is written in English. Please use one of the great, free online translation services, such as [DeepL](https://www.deepl.com/translator) to translate from your native language to English if you are not comfortable in English.

+- Check whether your issue might be solved by updating to the newest Diffusers version. Before posting your issue, please make sure that the version printed by `python -c "import diffusers; print(diffusers.__version__)"` matches or is higher than the latest Diffusers release.

+- Remember that the more effort you put into opening a new issue, the higher the quality of the answers you receive will be and the better the overall quality of the Diffusers issues.

+
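The version check in the guidelines above can also be scripted. The following is a minimal sketch that uses only the standard library; the helper names (`parse_version`, `is_up_to_date`, `installed_version`) are hypothetical and not part of Diffusers, and the `latest` value is a placeholder you would fill in yourself from the release page. The parsing is deliberately simplified: it compares only the leading numeric components and ignores suffixes such as `.dev0`.

```python
from importlib import metadata
from typing import Optional, Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn a release string such as '0.27.2' into a comparable tuple (0, 27, 2).

    Simplification (assumption of this sketch): parsing stops at the first
    component that is not purely numeric, so '0.28.0.dev0' becomes (0, 28, 0).
    """
    parts = []
    for piece in version.split("."):
        if not piece.isdigit():
            break
        parts.append(int(piece))
    return tuple(parts)


def is_up_to_date(installed: str, latest: str) -> bool:
    """Return True when the installed release is equal to or newer than `latest`."""
    a, b = parse_version(installed), parse_version(latest)
    # Pad the shorter tuple with zeros so that '0.27' and '0.27.0' compare equal.
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


def installed_version(package: str = "diffusers") -> Optional[str]:
    """Look up the installed version of a package, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    latest = "0.0.0"  # placeholder: replace with the latest release you looked up
    current = installed_version()
    if current is None:
        print("diffusers is not installed")
    else:
        print(f"installed={current}, up to date: {is_up_to_date(current, latest)}")
```

For anything beyond a quick check, a full version parser is a more robust choice than this tuple comparison, since pre-release and development suffixes carry ordering information that the sketch discards.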

+New issues usually include the following.

+

+#### 2.1. Reproducible, minimal bug reports

+

+A bug report should always have a reproducible code snippet and be as minimal and concise as possible.

+This means in more detail:

+- Narrow the bug down as much as you can, **do not just dump your whole code file**.

+- Format your code.

+- Do not include any external libraries other than Diffusers and the libraries it depends on.

+- **Always** provide all necessary information about your environment; for this, you can run: `diffusers-cli env` in your shell and copy-paste the displayed information to the issue.

+- Explain the issue. If the reader doesn't know what the issue is and why it is an issue, they cannot solve it.

+- **Always** make sure the reader can reproduce your issue with as little effort as possible. If your code snippet cannot be run because of missing libraries or undefined variables, the reader cannot help you. Make sure your reproducible code snippet is as minimal as possible and can be copy-pasted into a simple Python shell.

+- If in order to reproduce your issue a model and/or dataset is required, make sure the reader has access to that model or dataset. You can always upload your model or dataset to the [Hub](https://huggingface.co) to make it easily downloadable. Try to keep your model and dataset as small as possible, to make the reproduction of your issue as effortless as possible.

+
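To make the checklist above concrete, here is a small sketch that assembles a bug report body from its three essential parts: a short explanation, a minimal snippet, and environment details. Everything in it is an illustrative assumption rather than an official tool: the function names and the section headings are hypothetical, and `environment_summary` is only a rough stand-in for the output of `diffusers-cli env`, which remains the recommended source of environment information.

```python
import platform
import sys


def environment_summary() -> str:
    """Collect basic interpreter and OS details as Markdown bullet points.

    Rough stand-in only: paste the output of `diffusers-cli env` when you can.
    """
    lines = [
        f"- Python version: {platform.python_version()}",
        f"- Platform: {platform.platform()}",
        f"- Executable: {sys.executable}",
    ]
    return "\n".join(lines)


def format_bug_report(summary: str, snippet: str, environment: str) -> str:
    """Assemble a Markdown issue body (section headings are hypothetical)."""
    fence = "`" * 3  # built at runtime so this example does not nest code fences
    return "\n".join(
        [
            "### Describe the bug",
            summary.strip(),
            "",
            "### Reproduction",
            f"{fence}python",
            snippet.strip(),  # keep this minimal and copy-pasteable
            fence,
            "",
            "### System Info",
            environment.strip(),
        ]
    )


if __name__ == "__main__":
    snippet = "import diffusers\nprint(diffusers.__version__)"
    print(format_bug_report("Short explanation of the bug.", snippet, environment_summary()))
```

Keeping the snippet as a separate argument is a deliberate choice: it nudges you to isolate the few lines that trigger the bug instead of pasting a whole script.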

+For more information, please have a look through the [How to write a good issue](#how-to-write-a-good-issue) section.

+

+You can open a bug report [here](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=bug&projects=&template=bug-report.yml).

+

+#### 2.2. Feature requests

+

+A world-class feature request addresses the following points:

+

+1. Motivation first:

+* Is it related to a problem/frustration with the library? If so, please explain

+why. Providing a code snippet that demonstrates the problem is best.

+* Is it related to something you would need for a project? We'd love to hear

+about it!

+* Is it something you worked on and think could benefit the community?

+Awesome! Tell us what problem it solved for you.

+2. Write a *full paragraph* describing the feature;

+3. Provide a **code snippet** that demonstrates its future use;

+4. In case this is related to a paper, please attach a link;

+5. Attach any additional information (drawings, screenshots, etc.) you think may help.

+

+You can open a feature request [here](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=&template=feature_request.md&title=).

+

+#### 2.3. Feedback

+

+Feedback about the library design, and why it works well or does not, helps the core maintainers immensely to build a user-friendly library. To understand the philosophy behind the current design, please have a look [here](https://huggingface.co/docs/diffusers/conceptual/philosophy). If you feel like a certain design choice does not fit with the current design philosophy, please explain why and how it should be changed. If a certain design choice follows the design philosophy too much, hence restricting use cases, explain why and how it should be changed.

+If a certain design choice is very useful for you, please also leave a note as this is great feedback for future design decisions.

+

+You can open an issue about feedback [here](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=&template=feedback.md&title=).

+

+#### 2.4. Technical questions

+

+Technical questions are mainly about why certain code of the library was written in a certain way, or what a certain part of the code does. Please make sure to link to the code in question and please provide detail on

+why this part of the code is difficult to understand.

+

+You can open an issue about a technical question [here](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=bug&template=bug-report.yml).

+

+#### 2.5. Proposal to add a new model, scheduler, or pipeline

+

+If the diffusion model community released a new model, pipeline, or scheduler that you would like to see in the Diffusers library, please provide the following information:

+

+* Short description of the diffusion pipeline, model, or scheduler and link to the paper or public release.

+* Link to any of its open-source implementations.

+* Link to the model weights if they are available.

+

+If you are willing to contribute to the model yourself, let us know so we can best guide you. Also, don't forget

+to tag the original author of the component (model, scheduler, pipeline, etc.) by GitHub handle if you can find it.

+

+You can open a request for a model/pipeline/scheduler [here](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=New+model%2Fpipeline%2Fscheduler&template=new-model-addition.yml).

+

+### 3. Answering issues on the GitHub issues tab

+

+Answering issues on GitHub might require some technical knowledge of Diffusers, but we encourage everybody to give it a try even if you are not 100% certain that your answer is correct.

+Some tips to give a high-quality answer to an issue:

+- Be as concise and minimal as possible.

+- Stay on topic. An answer to the issue should concern the issue and only the issue.

+- Provide links to code, papers, or other sources that support your point.

+- Answer in code. If a simple code snippet is the answer to the issue or shows how the issue can be solved, please provide a fully reproducible code snippet.

+

+Also, many issues tend to be simply off-topic, duplicates of other issues, or irrelevant. It is of great help to the maintainers if you can answer such issues by encouraging the author to be more precise, linking to the duplicate issue, or redirecting them to [the forum](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/63) or [Discord](https://discord.gg/G7tWnz98XR).

+

+If you have verified that the reported bug is valid and requires a correction in the source code, please have a look at the next sections.

+

+All of the following contributions require opening a PR. The [Opening a pull request](#how-to-open-a-pr) section explains in detail how to do so.

+

+### 4. Fixing a "Good first issue"

+

+*Good first issues* are marked by the [Good first issue](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22good+first+issue%22) label. Usually, the issue already

+explains how a potential solution should look so that it is easier to fix.

+If the issue hasn't been closed and you would like to try to fix it, you can just leave a message such as "I would like to try this issue." There are usually three scenarios:

+- a.) The issue description already proposes a fix. In this case and if the solution makes sense to you, you can open a PR or draft PR to fix it.

+- b.) The issue description does not propose a fix. In this case, you can ask what a proposed fix could look like and someone from the Diffusers team should answer shortly. If you have a good idea of how to fix it, feel free to directly open a PR.

+- c.) There is already an open PR to fix the issue, but the issue hasn't been closed yet. If the PR has gone stale, you can simply open a new PR and link to the stale PR. PRs often go stale if the original contributor who wanted to fix the issue suddenly cannot find the time anymore to proceed. This often happens in open-source and is very normal. In this case, the community will be very happy if you give it a new try and leverage the knowledge of the existing PR. If there is already a PR and it is active, you can help the author by giving suggestions, reviewing the PR or even asking whether you can contribute to the PR.

+

+

+### 5. Contribute to the documentation

+

+A good library **always** has good documentation! The official documentation is often one of the first points of contact for new users of the library, and therefore contributing to the documentation is a **highly valuable contribution**.

+

+Contributing to the documentation can take many forms:

+

+- Correcting spelling or grammatical errors.

+- Correcting the formatting of a docstring. If you see that the official documentation is displayed oddly or a link is broken, we are very happy if you take some time to correct it.

+- Correcting the shape or dimensions of a docstring input or output tensor.

+- Clarifying documentation that is hard to understand or incorrect.

+- Updating outdated code examples.

+- Translating the documentation to another language.

+

+Anything displayed on [the official Diffusers doc page](https://huggingface.co/docs/diffusers/index) is part of the official documentation and can be corrected or adjusted in the respective [documentation source](https://github.com/huggingface/diffusers/tree/main/docs/source).

+

+Please have a look at [this page](https://github.com/huggingface/diffusers/tree/main/docs) on how to verify changes made to the documentation locally.

+

+

+### 6. Contribute a community pipeline

+

+[Pipelines](https://huggingface.co/docs/diffusers/api/pipelines/overview) are usually the first point of contact between the Diffusers library and the user.

+Pipelines are examples of how to use Diffusers [models](https://huggingface.co/docs/diffusers/api/models/overview) and [schedulers](https://huggingface.co/docs/diffusers/api/schedulers/overview).

+We support two types of pipelines:

+

+- Official Pipelines

+- Community Pipelines

+

+Both official and community pipelines follow the same design and consist of the same type of components.

+

+Official pipelines are tested and maintained by the core maintainers of Diffusers. Their code

+resides in [src/diffusers/pipelines](https://github.com/huggingface/diffusers/tree/main/src/diffusers/pipelines).

+In contrast, community pipelines are contributed and maintained purely by the **community** and are **not** tested.

+They reside in [examples/community](https://github.com/huggingface/diffusers/tree/main/examples/community) and while they can be accessed via the [PyPI diffusers package](https://pypi.org/project/diffusers/), their code is not part of the PyPI distribution.

+

+The reason for the distinction is that the core maintainers of the Diffusers library cannot maintain and test all

+possible ways diffusion models can be used for inference, but some of them may be of interest to the community.

+Officially released diffusion pipelines, such as Stable Diffusion, are added to the core src/diffusers/pipelines package, which ensures high-quality maintenance, no backward-breaking code changes, and testing.

+More bleeding-edge pipelines should be added as community pipelines. If usage for a community pipeline is high, the pipeline can be moved to the official pipelines upon request from the community. This is one of the ways we strive to be a community-driven library.

+

+To add a community pipeline, one should add a

+

+Whichever way you choose to contribute, we strive to be part of an open, welcoming, and kind community. Please, read our [code of conduct](https://github.com/huggingface/diffusers/blob/main/CODE_OF_CONDUCT.md) and be mindful to respect it during your interactions. We also recommend you become familiar with the [ethical guidelines](https://huggingface.co/docs/diffusers/conceptual/ethical_guidelines) that guide our project and ask you to adhere to the same principles of transparency and responsibility.

+

+We enormously value feedback from the community, so please do not be afraid to speak up if you believe you have valuable feedback that can help improve the library - every message, comment, issue, and pull request (PR) is read and considered.

+

+## Overview

+

+You can contribute in many ways ranging from answering questions on issues to adding new diffusion models to

+the core library.

+

+In the following, we give an overview of different ways to contribute, ranked by difficulty in ascending order. All of them are valuable to the community.

+

+* 1. Asking and answering questions on [the Diffusers discussion forum](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers) or on [Discord](https://discord.gg/G7tWnz98XR).

+* 2. Opening new issues on [the GitHub Issues tab](https://github.com/huggingface/diffusers/issues/new/choose).

+* 3. Answering issues on [the GitHub Issues tab](https://github.com/huggingface/diffusers/issues).

+* 4. Fix a simple issue, marked by the "Good first issue" label, see [here](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22good+first+issue%22).

+* 5. Contribute to the [documentation](https://github.com/huggingface/diffusers/tree/main/docs/source).

+* 6. Contribute a [Community Pipeline](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3Acommunity-examples).

+* 7. Contribute to the [examples](https://github.com/huggingface/diffusers/tree/main/examples).

+* 8. Fix a more difficult issue, marked by the "Good second issue" label, see [here](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22Good+second+issue%22).

+* 9. Add a new pipeline, model, or scheduler, see ["New Pipeline/Model"](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22New+pipeline%2Fmodel%22) and ["New scheduler"](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22New+scheduler%22) issues. For this contribution, please have a look at [Design Philosophy](https://github.com/huggingface/diffusers/blob/main/PHILOSOPHY.md).

+

+As said before, **all contributions are valuable to the community**.

+In the following, we will explain each contribution a bit more in detail.

+

+For all contributions 4-9, you will need to open a PR. It is explained in detail how to do so in [Opening a pull request](#how-to-open-a-pr).

+

+### 1. Asking and answering questions on the Diffusers discussion forum or on the Diffusers Discord

+

+Any question or comment related to the Diffusers library can be asked on the [discussion forum](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/) or on [Discord](https://discord.gg/G7tWnz98XR). Such questions and comments include (but are not limited to):

+- Reports of training or inference experiments in an attempt to share knowledge

+- Presentation of personal projects

+- Questions to non-official training examples

+- Project proposals

+- General feedback

+- Paper summaries

+- Asking for help on personal projects that build on top of the Diffusers library

+- General questions

+- Ethical questions regarding diffusion models

+- ...

+

+Every question that is asked on the forum or on Discord actively encourages the community to publicly

+share knowledge and might very well help a beginner in the future that has the same question you're

+having. Please do pose any questions you might have.

+In the same spirit, you are of immense help to the community by answering such questions because this way you are publicly documenting knowledge for everybody to learn from.

+

+**Please** keep in mind that the more effort you put into asking or answering a question, the higher

+the quality of the publicly documented knowledge. In the same way, well-posed and well-answered questions create a high-quality knowledge database accessible to everybody, while badly posed questions or answers reduce the overall quality of the public knowledge database.

+In short, a high quality question or answer is *precise*, *concise*, *relevant*, *easy-to-understand*, *accessible*, and *well-formated/well-posed*. For more information, please have a look through the [How to write a good issue](#how-to-write-a-good-issue) section.

+

+**NOTE about channels**:

+[*The forum*](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/63) is much better indexed by search engines, such as Google. Posts are ranked by popularity rather than chronologically. Hence, it's easier to look up questions and answers that we posted some time ago.

+In addition, questions and answers posted in the forum can easily be linked to.

+In contrast, *Discord* has a chat-like format that invites fast back-and-forth communication.

+While it will most likely take less time for you to get an answer to your question on Discord, your

+question won't be visible anymore over time. Also, it's much harder to find information that was posted a while back on Discord. We therefore strongly recommend using the forum for high-quality questions and answers in an attempt to create long-lasting knowledge for the community. If discussions on Discord lead to very interesting answers and conclusions, we recommend posting the results on the forum to make the information more available for future readers.

+

+### 2. Opening new issues on the GitHub issues tab

+

+The 🧨 Diffusers library is robust and reliable thanks to the users who notify us of

+the problems they encounter. So thank you for reporting an issue.

+

+Remember, GitHub issues are reserved for technical questions directly related to the Diffusers library, bug reports, feature requests, or feedback on the library design.

+

+In a nutshell, this means that everything that is **not** related to the **code of the Diffusers library** (including the documentation) should **not** be asked on GitHub, but rather on either the [forum](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/63) or [Discord](https://discord.gg/G7tWnz98XR).

+

+**Please consider the following guidelines when opening a new issue**:

+- Make sure you have searched whether your issue has already been asked before (use the search bar on GitHub under Issues).

+- Please never report a new issue on another (related) issue. If another issue is highly related, please

+open a new issue nevertheless and link to the related issue.

+- Make sure your issue is written in English. Please use one of the great, free online translation services, such as [DeepL](https://www.deepl.com/translator) to translate from your native language to English if you are not comfortable in English.

+- Check whether your issue might be solved by updating to the newest Diffusers version. Before posting your issue, please make sure that `python -c "import diffusers; print(diffusers.__version__)"` is higher or matches the latest Diffusers version.

+- Remember that the more effort you put into opening a new issue, the higher the quality of your answer will be and the better the overall quality of the Diffusers issues.

+

+New issues usually include the following.

+

+#### 2.1. Reproducible, minimal bug reports

+

+A bug report should always have a reproducible code snippet and be as minimal and concise as possible.

+This means in more detail:

+- Narrow the bug down as much as you can, **do not just dump your whole code file**.

+- Format your code.

+- Do not include any external libraries except for Diffusers depending on them.

+- **Always** provide all necessary information about your environment; for this, you can run: `diffusers-cli env` in your shell and copy-paste the displayed information to the issue.

+- Explain the issue. If the reader doesn't know what the issue is and why it is an issue, she cannot solve it.

+- **Always** make sure the reader can reproduce your issue with as little effort as possible. If your code snippet cannot be run because of missing libraries or undefined variables, the reader cannot help you. Make sure your reproducible code snippet is as minimal as possible and can be copy-pasted into a simple Python shell.

+- If in order to reproduce your issue a model and/or dataset is required, make sure the reader has access to that model or dataset. You can always upload your model or dataset to the [Hub](https://huggingface.co) to make it easily downloadable. Try to keep your model and dataset as small as possible, to make the reproduction of your issue as effortless as possible.

+

+For more information, please have a look through the [How to write a good issue](#how-to-write-a-good-issue) section.

+

+You can open a bug report [here](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=bug&projects=&template=bug-report.yml).

+

+#### 2.2. Feature requests

+

+A world-class feature request addresses the following points:

+

+1. Motivation first:

+* Is it related to a problem/frustration with the library? If so, please explain

+why. Providing a code snippet that demonstrates the problem is best.

+* Is it related to something you would need for a project? We'd love to hear

+about it!

+* Is it something you worked on and think could benefit the community?

+Awesome! Tell us what problem it solved for you.

+2. Write a *full paragraph* describing the feature;

+3. Provide a **code snippet** that demonstrates its future use;

+4. In case this is related to a paper, please attach a link;

+5. Attach any additional information (drawings, screenshots, etc.) you think may help.

+

+You can open a feature request [here](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=&template=feature_request.md&title=).

+

+#### 2.3 Feedback

+

+Feedback about the library design and why it is good or not good helps the core maintainers immensely to build a user-friendly library. To understand the philosophy behind the current design philosophy, please have a look [here](https://huggingface.co/docs/diffusers/conceptual/philosophy). If you feel like a certain design choice does not fit with the current design philosophy, please explain why and how it should be changed. If a certain design choice follows the design philosophy too much, hence restricting use cases, explain why and how it should be changed.

+If a certain design choice is very useful for you, please also leave a note as this is great feedback for future design decisions.

+

+You can open an issue about feedback [here](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=&template=feedback.md&title=).

+

+#### 2.4 Technical questions

+

+Technical questions are mainly about why certain code of the library was written in a certain way, or what a certain part of the code does. Please make sure to link to the code in question and please provide detail on

+why this part of the code is difficult to understand.

+

+You can open an issue about a technical question [here](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=bug&template=bug-report.yml).

+

+#### 2.5 Proposal to add a new model, scheduler, or pipeline

+

+If the diffusion model community released a new model, pipeline, or scheduler that you would like to see in the Diffusers library, please provide the following information:

+

+* Short description of the diffusion pipeline, model, or scheduler and link to the paper or public release.

+* Link to any of its open-source implementations.

+* Link to the model weights if they are available.

+

+If you are willing to contribute to the model yourself, let us know so we can best guide you. Also, don't forget

+to tag the original author of the component (model, scheduler, pipeline, etc.) by GitHub handle if you can find it.

+

+You can open a request for a model/pipeline/scheduler [here](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=New+model%2Fpipeline%2Fscheduler&template=new-model-addition.yml).

+

+### 3. Answering issues on the GitHub issues tab

+

+Answering issues on GitHub might require some technical knowledge of Diffusers, but we encourage everybody to give it a try even if you are not 100% certain that your answer is correct.

+Some tips to give a high-quality answer to an issue:

+- Be as concise and minimal as possible.

+- Stay on topic. An answer to the issue should concern the issue and only the issue.

+- Provide links to code, papers, or other sources that support your point.

+- Answer in code. If a simple code snippet is the answer to the issue or shows how the issue can be solved, please provide a fully reproducible code snippet.

+

+Also, many issues tend to be simply off-topic, duplicates of other issues, or irrelevant. It is of great help to the maintainers if you can answer such issues by encouraging the author of the issue to be more precise, providing the link to a duplicated issue, or redirecting them to [the forum](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/63) or [Discord](https://discord.gg/G7tWnz98XR).

+

+If you have verified that the bug report is correct and requires a correction in the source code,

+please have a look at the next sections.

+

+For all of the following contributions, you will need to open a PR. It is explained in detail how to do so in the [Opening a pull request](#how-to-open-a-pr) section.

+

+### 4. Fixing a "Good first issue"

+

+*Good first issues* are marked by the [Good first issue](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22good+first+issue%22) label. Usually, the issue already

+explains how a potential solution should look so that it is easier to fix.

+If the issue hasn't been closed and you would like to try to fix it, you can just leave a message saying "I would like to try this issue". There are usually three scenarios:

+- a.) The issue description already proposes a fix. In this case and if the solution makes sense to you, you can open a PR or draft PR to fix it.

+- b.) The issue description does not propose a fix. In this case, you can ask what a proposed fix could look like and someone from the Diffusers team should answer shortly. If you have a good idea of how to fix it, feel free to directly open a PR.

+- c.) There is already an open PR to fix the issue, but the issue hasn't been closed yet. If the PR has gone stale, you can simply open a new PR and link to the stale PR. PRs often go stale if the original contributor who wanted to fix the issue suddenly cannot find the time anymore to proceed. This often happens in open-source and is very normal. In this case, the community will be very happy if you give it a new try and leverage the knowledge of the existing PR. If there is already a PR and it is active, you can help the author by giving suggestions, reviewing the PR or even asking whether you can contribute to the PR.

+

+

+### 5. Contribute to the documentation

+

+A good library **always** has good documentation! The official documentation is often one of the first points of contact for new users of the library, and therefore contributing to the documentation is a **highly

+valuable contribution**.

+

+Contributing to the documentation can take many forms:

+

+- Correcting spelling or grammatical errors.

+- Correcting incorrect formatting of a docstring. If you see that the official documentation is displayed incorrectly or a link is broken, we would be very happy if you took some time to correct it.

+- Correcting the shape or dimensions of a docstring input or output tensor.

+- Clarifying documentation that is hard to understand or incorrect.

+- Updating outdated code examples.

+- Translating the documentation to another language.

+

+Anything displayed on [the official Diffusers doc page](https://huggingface.co/docs/diffusers/index) is part of the official documentation and can be corrected or adjusted in the respective [documentation source](https://github.com/huggingface/diffusers/tree/main/docs/source).

+

+Please have a look at [this page](https://github.com/huggingface/diffusers/tree/main/docs) on how to verify changes made to the documentation locally.

+

+

+### 6. Contribute a community pipeline

+

+[Pipelines](https://huggingface.co/docs/diffusers/api/pipelines/overview) are usually the first point of contact between the Diffusers library and the user.

+Pipelines are examples of how to use Diffusers [models](https://huggingface.co/docs/diffusers/api/models/overview) and [schedulers](https://huggingface.co/docs/diffusers/api/schedulers/overview).

+We support two types of pipelines:

+

+- Official Pipelines

+- Community Pipelines

+

+Both official and community pipelines follow the same design and consist of the same type of components.

+

+Official pipelines are tested and maintained by the core maintainers of Diffusers. Their code

+resides in [src/diffusers/pipelines](https://github.com/huggingface/diffusers/tree/main/src/diffusers/pipelines).

+In contrast, community pipelines are contributed and maintained purely by the **community** and are **not** tested.

+They reside in [examples/community](https://github.com/huggingface/diffusers/tree/main/examples/community) and while they can be accessed via the [PyPI diffusers package](https://pypi.org/project/diffusers/), their code is not part of the PyPI distribution.

+

+The reason for the distinction is that the core maintainers of the Diffusers library cannot maintain and test all

+possible ways diffusion models can be used for inference, but some of them may be of interest to the community.

+Officially released diffusion pipelines, such as Stable Diffusion, are added to the core src/diffusers/pipelines package, which ensures high quality of maintenance, no backward-breaking code changes, and testing.

+More bleeding edge pipelines should be added as community pipelines. If usage for a community pipeline is high, the pipeline can be moved to the official pipelines upon request from the community. This is one of the ways we strive to be a community-driven library.

+

+To add a community pipeline, one should add a

+

+  +

+

+

+

+

+

. We discuss the hottest trends about diffusion models, help each other with contributions, personal projects or just hang out ☕.

+

+

+## Popular Tasks & Pipelines


| Source Video | Output Video |
|---|---|
| raccoon playing a guitar | panda playing a guitar |
| closeup of margot robbie, fireworks in the background, high quality | closeup of tony stark, robert downey jr, fireworks |

| Without FreeInit enabled | With FreeInit enabled |
|---|---|
| panda playing a guitar | panda playing a guitar |

| Pipeline | Supported tasks |
|---|---|
| StableDiffusion | text-to-image |
| StableDiffusionImg2Img | image-to-image |
| StableDiffusionInpaint | inpainting |
| StableDiffusionDepth2Img | depth-to-image |
| StableDiffusionImageVariation | image variation |
| StableDiffusionPipelineSafe | filtered text-to-image |
| StableDiffusion2 | text-to-image, inpainting, depth-to-image, super-resolution |
| StableDiffusionXL | text-to-image, image-to-image |
| StableDiffusionLatentUpscale | super-resolution |
| StableDiffusionUpscale | super-resolution |
| StableDiffusionLDM3D | text-to-rgb, text-to-depth, text-to-pano |
| StableDiffusionUpscaleLDM3D | ldm3d super-resolution |

+

+Whichever way you choose to contribute, we strive to be part of an open, welcoming, and kind community. Please read our [code of conduct](https://github.com/huggingface/diffusers/blob/main/CODE_OF_CONDUCT.md) and be mindful to respect it during your interactions. We also recommend you become familiar with the [ethical guidelines](https://huggingface.co/docs/diffusers/conceptual/ethical_guidelines) that guide our project and ask you to adhere to the same principles of transparency and responsibility.

+

+We enormously value feedback from the community, so please do not be afraid to speak up if you believe you have valuable feedback that can help improve the library - every message, comment, issue, and pull request (PR) is read and considered.

+

+## Overview

+

+You can contribute in many ways ranging from answering questions on issues to adding new diffusion models to

+the core library.

+

+In the following, we give an overview of different ways to contribute, ranked by difficulty in ascending order. All of them are valuable to the community.

+

+* 1. Asking and answering questions on [the Diffusers discussion forum](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers) or on [Discord](https://discord.gg/G7tWnz98XR).

+* 2. Opening new issues on [the GitHub Issues tab](https://github.com/huggingface/diffusers/issues/new/choose).

+* 3. Answering issues on [the GitHub Issues tab](https://github.com/huggingface/diffusers/issues).

+* 4. Fix a simple issue, marked by the "Good first issue" label, see [here](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22good+first+issue%22).

+* 5. Contribute to the [documentation](https://github.com/huggingface/diffusers/tree/main/docs/source).

+* 6. Contribute a [Community Pipeline](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3Acommunity-examples).

+* 7. Contribute to the [examples](https://github.com/huggingface/diffusers/tree/main/examples).

+* 8. Fix a more difficult issue, marked by the "Good second issue" label, see [here](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22Good+second+issue%22).

+* 9. Add a new pipeline, model, or scheduler, see ["New Pipeline/Model"](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22New+pipeline%2Fmodel%22) and ["New scheduler"](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22New+scheduler%22) issues. For this contribution, please have a look at [Design Philosophy](https://github.com/huggingface/diffusers/blob/main/PHILOSOPHY.md).

+

+As said before, **all contributions are valuable to the community**.

+In the following, we will explain each contribution a bit more in detail.

+

+For all contributions 4 - 9, you will need to open a PR. It is explained in detail how to do so in [Opening a pull request](#how-to-open-a-pr).

+

+### 1. Asking and answering questions on the Diffusers discussion forum or on the Diffusers Discord

+

+Any question or comment related to the Diffusers library can be asked on the [discussion forum](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/) or on [Discord](https://discord.gg/G7tWnz98XR). Such questions and comments include (but are not limited to):

+- Reports of training or inference experiments in an attempt to share knowledge

+- Presentation of personal projects

+- Questions about non-official training examples

+- Project proposals

+- General feedback

+- Paper summaries

+- Asking for help on personal projects that build on top of the Diffusers library

+- General questions

+- Ethical questions regarding diffusion models

+- ...

+

+Every question that is asked on the forum or on Discord actively encourages the community to publicly

+share knowledge and might very well help a beginner in the future who has the same question you're

+having. Please do pose any questions you might have.

+In the same spirit, you are of immense help to the community by answering such questions because this way you are publicly documenting knowledge for everybody to learn from.

+

+**Please** keep in mind that the more effort you put into asking or answering a question, the higher

+the quality of the publicly documented knowledge. In the same way, well-posed and well-answered questions create a high-quality knowledge database accessible to everybody, while badly posed questions or answers reduce the overall quality of the public knowledge database.

+In short, a high-quality question or answer is *precise*, *concise*, *relevant*, *easy-to-understand*, *accessible*, and *well-formatted/well-posed*. For more information, please have a look through the [How to write a good issue](#how-to-write-a-good-issue) section.

+

+**NOTE about channels**:

+[*The forum*](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/63) is much better indexed by search engines, such as Google. Posts are ranked by popularity rather than chronologically. Hence, it's easier to look up questions and answers that were posted some time ago.

+In addition, questions and answers posted in the forum can easily be linked to.

+In contrast, *Discord* has a chat-like format that invites fast back-and-forth communication.

+While it will most likely take less time for you to get an answer to your question on Discord, your

+question won't be visible anymore over time. Also, it's much harder to find information that was posted a while back on Discord. We therefore strongly recommend using the forum for high-quality questions and answers in an attempt to create long-lasting knowledge for the community. If discussions on Discord lead to very interesting answers and conclusions, we recommend posting the results on the forum to make the information more available for future readers.

+

+### 2. Opening new issues on the GitHub issues tab

+

+The 🧨 Diffusers library is robust and reliable thanks to the users who notify us of

+the problems they encounter. So thank you for reporting an issue.

+

+Remember, GitHub issues are reserved for technical questions directly related to the Diffusers library, bug reports, feature requests, or feedback on the library design.

+

+In a nutshell, this means that everything that is **not** related to the **code of the Diffusers library** (including the documentation) should **not** be asked on GitHub, but rather on either the [forum](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/63) or [Discord](https://discord.gg/G7tWnz98XR).

+

+**Please consider the following guidelines when opening a new issue**:

+- Make sure you have searched whether your issue has already been reported (use the search bar on GitHub under Issues).

+- Please never report a new issue on another (related) issue. If another issue is highly related, please

+open a new issue nevertheless and link to the related issue.

+- Make sure your issue is written in English. Please use one of the great, free online translation services, such as [DeepL](https://www.deepl.com/translator) to translate from your native language to English if you are not comfortable in English.

+- Check whether your issue might be solved by updating to the newest Diffusers version. Before posting your issue, please make sure that the version printed by `python -c "import diffusers; print(diffusers.__version__)"` is equal to or higher than the latest Diffusers version.

+- Remember that the more effort you put into opening a new issue, the higher the quality of your answer will be and the better the overall quality of the Diffusers issues.
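The version check from the guidelines above can also be scripted. The following is a minimal sketch rather than anything shipped with Diffusers: the helper names `parse_release`, `is_up_to_date`, and `installed_version` are hypothetical, the latest version has to be supplied by hand (for example, copied from the PyPI page), and only the leading numeric components are compared, so suffixes such as `.dev0` are ignored.

```python
from importlib import metadata


def parse_release(version: str) -> tuple:
    """Return the leading numeric components of a version string as a tuple of ints."""
    parts = []
    for piece in version.split("."):
        if not piece.isdigit():
            break  # stop at the first non-numeric component, e.g. "dev0"
        parts.append(int(piece))
    return tuple(parts)


def is_up_to_date(installed: str, latest: str) -> bool:
    """True if the installed release is equal to or higher than the latest release."""
    return parse_release(installed) >= parse_release(latest)


def installed_version(package: str = "diffusers"):
    """Return the installed version of a package, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None
```

Comparing integer tuples instead of raw strings matters here: as strings, `"0.9.0"` sorts after `"0.10.0"`, which would wrongly report an old install as current.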

+

+New issues usually include the following.

+

+#### 2.1. Reproducible, minimal bug reports

+

+A bug report should always have a reproducible code snippet and be as minimal and concise as possible.

+This means in more detail:

+- Narrow the bug down as much as you can, **do not just dump your whole code file**.

+- Format your code.

+- Do not include any external libraries other than Diffusers and the libraries it depends on.

+- **Always** provide all necessary information about your environment; for this, you can run: `diffusers-cli env` in your shell and copy-paste the displayed information to the issue.

+- Explain the issue. If the reader doesn't know what the issue is and why it is an issue, they cannot solve it.

+- **Always** make sure the reader can reproduce your issue with as little effort as possible. If your code snippet cannot be run because of missing libraries or undefined variables, the reader cannot help you. Make sure your reproducible code snippet is as minimal as possible and can be copy-pasted into a simple Python shell.

+- If in order to reproduce your issue a model and/or dataset is required, make sure the reader has access to that model or dataset. You can always upload your model or dataset to the [Hub](https://huggingface.co) to make it easily downloadable. Try to keep your model and dataset as small as possible, to make the reproduction of your issue as effortless as possible.
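`diffusers-cli env` remains the recommended way to gather environment details. If the command is unavailable, for example because the installation itself is broken, a rough fallback could look like the sketch below. The function names `collect_env` and `format_env` and the default package list are assumptions made for illustration, not part of the library.

```python
import platform
import sys
from importlib import metadata


def collect_env(packages=("diffusers", "torch", "transformers", "accelerate")):
    """Collect the platform, Python version, and installed versions of the given packages."""
    info = {
        "platform": platform.platform(),
        "python": sys.version.split()[0],
    }
    for name in packages:
        try:
            info[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            info[name] = "not installed"
    return info


def format_env(info):
    """Render the collected information as a Markdown bullet list for pasting into an issue."""
    return "\n".join(f"- {key}: {value}" for key, value in info.items())


if __name__ == "__main__":
    print(format_env(collect_env()))
```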

+

+For more information, please have a look through the [How to write a good issue](#how-to-write-a-good-issue) section.

+

+You can open a bug report [here](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=bug&projects=&template=bug-report.yml).

+

+#### 2.2. Feature requests

+

+A world-class feature request addresses the following points:

+

+1. Motivation first:

+* Is it related to a problem/frustration with the library? If so, please explain

+why. Providing a code snippet that demonstrates the problem is best.

+* Is it related to something you would need for a project? We'd love to hear

+about it!

+* Is it something you worked on and think could benefit the community?

+Awesome! Tell us what problem it solved for you.

+2. Write a *full paragraph* describing the feature;

+3. Provide a **code snippet** that demonstrates its future use;

+4. In case this is related to a paper, please attach a link;

+5. Attach any additional information (drawings, screenshots, etc.) you think may help.

+

+You can open a feature request [here](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=&template=feature_request.md&title=).

+

+#### 2.3 Feedback

+

+Feedback about the library design and why it is good or not good helps the core maintainers immensely to build a user-friendly library. To understand the philosophy behind the current design, please have a look [here](https://huggingface.co/docs/diffusers/conceptual/philosophy). If you feel like a certain design choice does not fit with the current design philosophy, please explain why and how it should be changed. If a certain design choice follows the design philosophy too much, hence restricting use cases, explain why and how it should be changed.

+If a certain design choice is very useful for you, please also leave a note as this is great feedback for future design decisions.

+

+You can open an issue about feedback [here](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=&template=feedback.md&title=).

+

+#### 2.4 Technical questions

+

+Technical questions are mainly about why certain code of the library was written in a certain way, or what a certain part of the code does. Please make sure to link to the code in question and please provide details on

+why this part of the code is difficult to understand.

+

+You can open an issue about a technical question [here](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=bug&template=bug-report.yml).

+

+#### 2.5 Proposal to add a new model, scheduler, or pipeline

+

+If the diffusion model community released a new model, pipeline, or scheduler that you would like to see in the Diffusers library, please provide the following information:

+

+* Short description of the diffusion pipeline, model, or scheduler and link to the paper or public release.

+* Link to any of its open-source implementation(s).

+* Link to the model weights if they are available.

+

+If you are willing to contribute to the model yourself, let us know so we can best guide you. Also, don't forget

+to tag the original author of the component (model, scheduler, pipeline, etc.) by GitHub handle if you can find it.

+

+You can open a request for a model/pipeline/scheduler [here](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=New+model%2Fpipeline%2Fscheduler&template=new-model-addition.yml).
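The issue links in this section all follow the same pattern: the `issues/new` page plus `labels`, `template`, and `title` query parameters. As a small sketch, such a link can be assembled with the standard library. The helper name `new_issue_url` is hypothetical, and the template file names are taken from the links above.

```python
from urllib.parse import urlencode

BASE_URL = "https://github.com/huggingface/diffusers/issues/new"


def new_issue_url(template: str, title: str = "", labels: str = "") -> str:
    """Build a link that opens the GitHub new-issue form with the given template preselected."""
    query = urlencode(
        {"assignees": "", "labels": labels, "template": template, "title": title}
    )
    return f"{BASE_URL}?{query}"


# Link for a new model/pipeline/scheduler request, with the title left empty.
print(new_issue_url("new-model-addition.yml", labels="New model/pipeline/scheduler"))
```

`urlencode` takes care of escaping, so the spaces and slashes in the label become `+` and `%2F`, as in the link above.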

+

+### 3. Answering issues on the GitHub issues tab

+

+Answering issues on GitHub might require some technical knowledge of Diffusers, but we encourage everybody to give it a try even if you are not 100% certain that your answer is correct.

+Some tips to give a high-quality answer to an issue:

+- Be as concise and minimal as possible.

+- Stay on topic. An answer to the issue should concern the issue and only the issue.

+- Provide links to code, papers, or other sources that support your point.

+- Answer in code. If a simple code snippet is the answer to the issue or shows how the issue can be solved, please provide a fully reproducible code snippet.

+

+Also, many issues tend to be simply off-topic, duplicates of other issues, or irrelevant. It is of great help to the maintainers if you can answer such issues by encouraging the author of the issue to be more precise, providing the link to a duplicated issue, or redirecting them to [the forum](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/63) or [Discord](https://discord.gg/G7tWnz98XR).

+

+If you have verified that the bug report is correct and requires a correction in the source code,

+please have a look at the next sections.

+

+For all of the following contributions, you will need to open a PR. It is explained in detail how to do so in the [Opening a pull request](#how-to-open-a-pr) section.

+

+### 4. Fixing a "Good first issue"

+

+*Good first issues* are marked by the [Good first issue](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22good+first+issue%22) label. Usually, the issue already

+explains how a potential solution should look so that it is easier to fix.

+If the issue hasn't been closed and you would like to try to fix it, you can just leave a message saying "I would like to try this issue". There are usually three scenarios:

+- a.) The issue description already proposes a fix. In this case and if the solution makes sense to you, you can open a PR or draft PR to fix it.

+- b.) The issue description does not propose a fix. In this case, you can ask what a proposed fix could look like and someone from the Diffusers team should answer shortly. If you have a good idea of how to fix it, feel free to directly open a PR.

+- c.) There is already an open PR to fix the issue, but the issue hasn't been closed yet. If the PR has gone stale, you can simply open a new PR and link to the stale PR. PRs often go stale if the original contributor who wanted to fix the issue suddenly cannot find the time anymore to proceed. This often happens in open-source and is very normal. In this case, the community will be very happy if you give it a new try and leverage the knowledge of the existing PR. If there is already a PR and it is active, you can help the author by giving suggestions, reviewing the PR or even asking whether you can contribute to the PR.

+

+

+### 5. Contribute to the documentation

+

+A good library **always** has good documentation! The official documentation is often one of the first points of contact for new users of the library, and therefore contributing to the documentation is a **highly

+valuable contribution**.

+

+Contributing to the documentation can take many forms:

+

+- Correcting spelling or grammatical errors.

+- Correcting incorrect formatting of a docstring. If you see that the official documentation is displayed incorrectly or a link is broken, we would be very happy if you took some time to correct it.

+- Correcting the shape or dimensions of a docstring input or output tensor.

+- Clarifying documentation that is hard to understand or incorrect.

+- Updating outdated code examples.

+- Translating the documentation to another language.

+

+Anything displayed on [the official Diffusers doc page](https://huggingface.co/docs/diffusers/index) is part of the official documentation and can be corrected or adjusted in the respective [documentation source](https://github.com/huggingface/diffusers/tree/main/docs/source).

+

+Please have a look at [this page](https://github.com/huggingface/diffusers/tree/main/docs) on how to verify changes made to the documentation locally.

+

+### 6. Contribute a community pipeline

+

+> [!TIP]

+> Read the [Community pipelines](../using-diffusers/custom_pipeline_overview#community-pipelines) guide to learn more about the difference between a GitHub and Hugging Face Hub community pipeline. If you're interested in why we have community pipelines, take a look at GitHub Issue [#841](https://github.com/huggingface/diffusers/issues/841) (basically, we can't maintain all the possible ways diffusion models can be used for inference but we also don't want to prevent the community from building them).

+

+Contributing a community pipeline is a great way to share your creativity and work with the community. It lets you build on top of the [`DiffusionPipeline`] so that anyone can load and use it by setting the `custom_pipeline` parameter. This section will walk you through how to create a simple pipeline where the UNet only does a single forward pass and calls the scheduler once (a "one-step" pipeline).

+

+1. Create a `one_step_unet.py` file for your community pipeline. This file can import whatever packages you want to use, as long as they are installed by the user. Make sure you only have one pipeline class that inherits from [`DiffusionPipeline`] to load model weights and the scheduler configuration from the Hub. Add a UNet and scheduler to the `__init__` function.

+

+ You should also add the `register_modules` function to ensure your pipeline and its components can be saved with [`~DiffusionPipeline.save_pretrained`].

+

+```py
+from diffusers import DiffusionPipeline
+import torch
+
+class UnetSchedulerOneForwardPipeline(DiffusionPipeline):
+    def __init__(self, unet, scheduler):
+        super().__init__()
+
+        # Register the components so they are saved and loaded with the pipeline.
+        self.register_modules(unet=unet, scheduler=scheduler)
+```

+

+2. In the forward pass (which we recommend defining as `__call__`), you can add any feature you'd like. For the "one-step" pipeline, create a random image and call the UNet and scheduler once by setting `timestep=1`.

+

+```py
+from diffusers import DiffusionPipeline
+import torch
+
+class UnetSchedulerOneForwardPipeline(DiffusionPipeline):
+    def __init__(self, unet, scheduler):
+        super().__init__()
+
+        self.register_modules(unet=unet, scheduler=scheduler)
+
+    def __call__(self):
+        # Start from random noise with the shape the UNet expects.
+        image = torch.randn(
+            (1, self.unet.config.in_channels, self.unet.config.sample_size, self.unet.config.sample_size),
+        )
+        timestep = 1
+
+        # One UNet forward pass followed by a single scheduler step.
+        model_output = self.unet(image, timestep).sample
+        scheduler_output = self.scheduler.step(model_output, timestep, image).prev_sample
+
+        return scheduler_output
+```

+

+Now you can run the pipeline by passing a UNet and scheduler to it or load pretrained weights if the pipeline structure is identical.

+

+```py

+from diffusers import DDPMScheduler, UNet2DModel

+

+scheduler = DDPMScheduler()

+unet = UNet2DModel()

+

+pipeline = UnetSchedulerOneForwardPipeline(unet=unet, scheduler=scheduler)

+output = pipeline()

+# load pretrained weights

+pipeline = UnetSchedulerOneForwardPipeline.from_pretrained("google/ddpm-cifar10-32", use_safetensors=True)

+output = pipeline()

+```

+

+You can share your pipeline either as a GitHub community pipeline or as a Hub community pipeline.
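Before sharing, it can be useful to try the `custom_pipeline` loading path mentioned at the start of this section against a local copy. The sketch below assumes the pipeline class has been saved as `pipeline.py` inside a local directory; the directory name `./one_step_unet/` and the helper name `load_custom_pipeline` are hypothetical, and the checkpoint is the one used in the example above.

```python
def load_custom_pipeline(custom_pipeline: str, checkpoint: str = "google/ddpm-cifar10-32"):
    """Load `checkpoint` with the pipeline class that `custom_pipeline` points to.

    `custom_pipeline` is assumed to be a local directory containing a `pipeline.py`
    file, the name of a GitHub community pipeline, or a Hub repository id.
    """
    # Imported lazily so that this helper can be defined without Diffusers installed.
    from diffusers import DiffusionPipeline

    return DiffusionPipeline.from_pretrained(
        checkpoint,
        custom_pipeline=custom_pipeline,
        use_safetensors=True,
    )


if __name__ == "__main__":
    # Hypothetical local directory holding the one-step pipeline as pipeline.py.
    pipeline = load_custom_pipeline("./one_step_unet/")
    output = pipeline()
```

Running the module downloads the checkpoint weights, so it needs network access the first time.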

+

+

+

+Whichever way you choose to contribute, we strive to be part of an open, welcoming, and kind community. Please, read our [code of conduct](https://github.com/huggingface/diffusers/blob/main/CODE_OF_CONDUCT.md) and be mindful to respect it during your interactions. We also recommend you become familiar with the [ethical guidelines](https://huggingface.co/docs/diffusers/conceptual/ethical_guidelines) that guide our project and ask you to adhere to the same principles of transparency and responsibility.

+

+We enormously value feedback from the community, so please do not be afraid to speak up if you believe you have valuable feedback that can help improve the library - every message, comment, issue, and pull request (PR) is read and considered.

+

+## Overview

+

+You can contribute in many ways ranging from answering questions on issues to adding new diffusion models to

+the core library.

+

+In the following, we give an overview of different ways to contribute, ranked by difficulty in ascending order. All of them are valuable to the community.

+

+* 1. Asking and answering questions on [the Diffusers discussion forum](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers) or on [Discord](https://discord.gg/G7tWnz98XR).

+* 2. Opening new issues on [the GitHub Issues tab](https://github.com/huggingface/diffusers/issues/new/choose).

+* 3. Answering issues on [the GitHub Issues tab](https://github.com/huggingface/diffusers/issues).

+* 4. Fix a simple issue, marked by the "Good first issue" label, see [here](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22good+first+issue%22).

+* 5. Contribute to the [documentation](https://github.com/huggingface/diffusers/tree/main/docs/source).

+* 6. Contribute a [Community Pipeline](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3Acommunity-examples).

+* 7. Contribute to the [examples](https://github.com/huggingface/diffusers/tree/main/examples).

+* 8. Fix a more difficult issue, marked by the "Good second issue" label, see [here](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22Good+second+issue%22).

+* 9. Add a new pipeline, model, or scheduler, see ["New Pipeline/Model"](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22New+pipeline%2Fmodel%22) and ["New scheduler"](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22New+scheduler%22) issues. For this contribution, please have a look at [Design Philosophy](https://github.com/huggingface/diffusers/blob/main/PHILOSOPHY.md).

+

+As said before, **all contributions are valuable to the community**.

+In the following, we will explain each contribution a bit more in detail.

+

+For all contributions 4 - 9, you will need to open a PR. It is explained in detail how to do so in [Opening a pull request](#how-to-open-a-pr).

+

+### 1. Asking and answering questions on the Diffusers discussion forum or on the Diffusers Discord

+

+Any question or comment related to the Diffusers library can be asked on the [discussion forum](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/) or on [Discord](https://discord.gg/G7tWnz98XR). Such questions and comments include (but are not limited to):

+- Reports of training or inference experiments in an attempt to share knowledge

+- Presentation of personal projects

+- Questions about non-official training examples

+- Project proposals

+- General feedback

+- Paper summaries

+- Asking for help on personal projects that build on top of the Diffusers library

+- General questions

+- Ethical questions regarding diffusion models

+- ...

+

+Every question that is asked on the forum or on Discord actively encourages the community to publicly

+share knowledge and might very well help a beginner in the future who has the same question you're

+having. Please do pose any questions you might have.

+In the same spirit, you are of immense help to the community by answering such questions because this way you are publicly documenting knowledge for everybody to learn from.

+

+**Please** keep in mind that the more effort you put into asking or answering a question, the higher

+the quality of the publicly documented knowledge. In the same way, well-posed and well-answered questions create a high-quality knowledge database accessible to everybody, while badly posed questions or answers reduce the overall quality of the public knowledge database.

+In short, a high-quality question or answer is *precise*, *concise*, *relevant*, *easy-to-understand*, *accessible*, and *well-formatted/well-posed*. For more information, please have a look through the [How to write a good issue](#how-to-write-a-good-issue) section.

+

+**NOTE about channels**:

+[*The forum*](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/63) is much better indexed by search engines, such as Google. Posts are ranked by popularity rather than chronologically. Hence, it's easier to look up questions and answers that we posted some time ago.

+In addition, questions and answers posted in the forum can easily be linked to.

+In contrast, *Discord* has a chat-like format that invites fast back-and-forth communication.

+While it will most likely take less time for you to get an answer to your question on Discord, your

+question won't be visible anymore over time. Also, it's much harder to find information that was posted a while back on Discord. We therefore strongly recommend using the forum for high-quality questions and answers in an attempt to create long-lasting knowledge for the community. If discussions on Discord lead to very interesting answers and conclusions, we recommend posting the results on the forum to make the information more available for future readers.

+

+### 2. Opening new issues on the GitHub issues tab

+

+The 🧨 Diffusers library is robust and reliable thanks to the users who notify us of

+the problems they encounter. So thank you for reporting an issue.

+

+Remember, GitHub issues are reserved for technical questions directly related to the Diffusers library, bug reports, feature requests, or feedback on the library design.

+

+In a nutshell, this means that everything that is **not** related to the **code of the Diffusers library** (including the documentation) should **not** be asked on GitHub, but rather on either the [forum](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/63) or [Discord](https://discord.gg/G7tWnz98XR).

+

+**Please consider the following guidelines when opening a new issue**:

+- Make sure you have searched whether your issue has already been asked before (use the search bar on GitHub under Issues).

+- Please never report a new issue on another (related) issue. If another issue is highly related, please

+open a new issue nevertheless and link to the related issue.

+- Make sure your issue is written in English. Please use one of the great, free online translation services, such as [DeepL](https://www.deepl.com/translator) to translate from your native language to English if you are not comfortable in English.

+- Check whether your issue might be solved by updating to the newest Diffusers version. Before posting your issue, please make sure that the version printed by `python -c "import diffusers; print(diffusers.__version__)"` matches or is higher than the latest Diffusers release.

+- Remember that the more effort you put into opening a new issue, the higher the quality of the answers you receive will be and the better the overall quality of the Diffusers issues.
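
+

+The version check from the guidelines above can also be scripted. The following is a minimal sketch, not part of the Diffusers library: the helper names `parse_release`, `is_up_to_date`, and `installed_diffusers_version` are hypothetical, and the latest release number has to be supplied by you (for example, after looking it up on the releases page). The parsing is deliberately simplified and ignores pre-release suffixes; `packaging.version.Version` is likely a more robust choice if that package is available.

```python
from importlib import metadata


def parse_release(version: str) -> tuple:
    """Return the leading numeric components of a version string as a tuple.

    Simplified on purpose: parsing stops at the first non-numeric component,
    so a suffix such as 'dev0' is ignored.
    """
    parts = []
    for piece in version.split("."):
        if not piece.isdigit():
            break  # stop at the first non-numeric component
        parts.append(int(piece))
    return tuple(parts)


def is_up_to_date(installed: str, latest: str) -> bool:
    """Return True if `installed` is at least as new as `latest`."""
    return parse_release(installed) >= parse_release(latest)


def installed_diffusers_version():
    """Return the installed Diffusers version, or None if it is not installed."""
    try:
        return metadata.version("diffusers")
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    installed = installed_diffusers_version()
    latest = "0.0.0"  # placeholder: replace with the latest release you looked up
    if installed is None:
        print("Diffusers is not installed in this environment.")
    elif is_up_to_date(installed, latest):
        print(f"Diffusers {installed} is up to date with respect to {latest}.")
    else:
        print(f"Diffusers {installed} is older than {latest}; try upgrading first.")
```

+If the script reports an older version, upgrading and re-running your snippet before opening the issue may already resolve the problem.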

+

+New issues usually include the following.

+

+#### 2.1. Reproducible, minimal bug reports

+

+A bug report should always have a reproducible code snippet and be as minimal and concise as possible.

+This means in more detail:

+- Narrow the bug down as much as you can, **do not just dump your whole code file**.

+- Format your code.

+- Do not include any external libraries other than Diffusers and the libraries it depends on.

+- **Always** provide all necessary information about your environment; for this, you can run: `diffusers-cli env` in your shell and copy-paste the displayed information to the issue.

+- Explain the issue. If the reader doesn't know what the issue is and why it is an issue, she cannot solve it.

+- **Always** make sure the reader can reproduce your issue with as little effort as possible. If your code snippet cannot be run because of missing libraries or undefined variables, the reader cannot help you. Make sure your reproducible code snippet is as minimal as possible and can be copy-pasted into a simple Python shell.

+- If in order to reproduce your issue a model and/or dataset is required, make sure the reader has access to that model or dataset. You can always upload your model or dataset to the [Hub](https://huggingface.co) to make it easily downloadable. Try to keep your model and dataset as small as possible, to make the reproduction of your issue as effortless as possible.
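
+

+The `diffusers-cli env` command mentioned above is the recommended way to gather environment details. If it is unavailable for some reason, a rough fallback can be sketched with the Python standard library alone. The function names and the package list below are assumptions made for illustration, and the output format is not the one produced by `diffusers-cli env`.

```python
import platform
import sys
from importlib import metadata

# Hypothetical selection of packages; adjust it to whatever your snippet imports.
PACKAGES = ("diffusers", "torch", "transformers", "accelerate")


def collect_environment(packages=PACKAGES) -> dict:
    """Collect the Python version, the platform, and selected package versions."""
    info = {
        "python": sys.version.split()[0],
        "platform": platform.platform(),
    }
    for name in packages:
        try:
            info[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            info[name] = "not installed"
    return info


def format_environment(info: dict) -> str:
    """Format the collected details as a Markdown bullet list for pasting into an issue."""
    return "\n".join(f"- {key}: {value}" for key, value in info.items())


if __name__ == "__main__":
    print(format_environment(collect_environment()))
```

+Pasting the printed list into the issue next to your minimal code snippet gives the reader most of what is needed to attempt a reproduction.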

+

+For more information, please have a look through the [How to write a good issue](#how-to-write-a-good-issue) section.

+

+You can open a bug report [here](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=bug&projects=&template=bug-report.yml).

+

+#### 2.2. Feature requests

+

+A world-class feature request addresses the following points:

+

+1. Motivation first:

+* Is it related to a problem/frustration with the library? If so, please explain

+why. Providing a code snippet that demonstrates the problem is best.

+* Is it related to something you would need for a project? We'd love to hear

+about it!

+* Is it something you worked on and think could benefit the community?

+Awesome! Tell us what problem it solved for you.

+2. Write a *full paragraph* describing the feature;

+3. Provide a **code snippet** that demonstrates its future use;

+4. In case this is related to a paper, please attach a link;

+5. Attach any additional information (drawings, screenshots, etc.) you think may help.

+

+You can open a feature request [here](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=&template=feature_request.md&title=).

+

+#### 2.3. Feedback

+

+Feedback about the library design and why it is good or not good helps the core maintainers immensely to build a user-friendly library. To understand the philosophy behind the current design, please have a look [here](https://huggingface.co/docs/diffusers/conceptual/philosophy). If you feel like a certain design choice does not fit with the current design philosophy, please explain why and how it should be changed. If a certain design choice follows the design philosophy too closely and thereby restricts use cases, explain why and how it should be changed.

+If a certain design choice is very useful for you, please also leave a note as this is great feedback for future design decisions.

+

+You can open an issue about feedback [here](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=&template=feedback.md&title=).

+

+#### 2.4. Technical questions

+

+Technical questions are mainly about why certain code of the library was written in a certain way, or what a certain part of the code does. Please make sure to link to the code in question and please provide details on

+why this part of the code is difficult to understand.

+

+You can open an issue about a technical question [here](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=bug&template=bug-report.yml).

+

+#### 2.5. Proposal to add a new model, scheduler, or pipeline

+

+If the diffusion model community released a new model, pipeline, or scheduler that you would like to see in the Diffusers library, please provide the following information:

+

+* Short description of the diffusion pipeline, model, or scheduler and link to the paper or public release.

+* Link to any of its open-source implementation(s).

+* Link to the model weights if they are available.

+

+If you are willing to contribute to the model yourself, let us know so we can best guide you. Also, don't forget

+to tag the original author of the component (model, scheduler, pipeline, etc.) by GitHub handle if you can find it.

+

+You can open a request for a model/pipeline/scheduler [here](https://github.com/huggingface/diffusers/issues/new?assignees=&labels=New+model%2Fpipeline%2Fscheduler&template=new-model-addition.yml).

+

+### 3. Answering issues on the GitHub issues tab

+

+Answering issues on GitHub might require some technical knowledge of Diffusers, but we encourage everybody to give it a try even if you are not 100% certain that your answer is correct.

+Some tips to give a high-quality answer to an issue:

+- Be as concise and minimal as possible.

+- Stay on topic. An answer to the issue should concern the issue and only the issue.

+- Provide links to code, papers, or other sources that support your point.

+- Answer in code. If a simple code snippet is the answer to the issue or shows how the issue can be solved, please provide a fully reproducible code snippet.

+

+Also, many issues tend to be simply off-topic, duplicates of other issues, or irrelevant. It is of great

+help to the maintainers if you can answer such issues, encouraging the author of the issue to be

+more precise, provide the link to a duplicated issue or redirect them to [the forum](https://discuss.huggingface.co/c/discussion-related-to-httpsgithubcomhuggingfacediffusers/63) or [Discord](https://discord.gg/G7tWnz98XR).

+

+If you have verified that the reported bug is valid and requires a fix in the source code,

+please have a look at the next sections.

+

+For all of the following contributions, you will need to open a PR. It is explained in detail how to do so in the [Opening a pull request](#how-to-open-a-pr) section.

+

+### 4. Fixing a "Good first issue"

+

+*Good first issues* are marked by the [Good first issue](https://github.com/huggingface/diffusers/issues?q=is%3Aopen+is%3Aissue+label%3A%22good+first+issue%22) label. Usually, the issue already

+explains how a potential solution should look so that it is easier to fix.

+If the issue hasn't been closed and you would like to try to fix this issue, you can just leave a message such as "I would like to try this issue." There are usually three scenarios:

+- a.) The issue description already proposes a fix. In this case and if the solution makes sense to you, you can open a PR or draft PR to fix it.