---
title: StableMaterials
emoji: 🧱
thumbnail: https://gvecchio.com/stablematerials/static/images/teaser.jpg
colorFrom: blue
colorTo: blue
sdk: gradio
sdk_version: 4.36.1
app_file: app.py
pinned: false
license: openrail
---

StableMaterials

StableMaterials is a diffusion-based model for generating photorealistic physically based rendering (PBR) materials. It combines semi-supervised learning with Latent Diffusion Models (LDMs) to produce high-resolution, tileable material maps from text or image prompts. StableMaterials infers the diffuse (Basecolor) and specular (Roughness, Metallic) properties of a material, as well as its mesostructure (Height, Normal). 🌟

For more details, visit the project page or read the full paper on arXiv (arXiv:2406.09293).

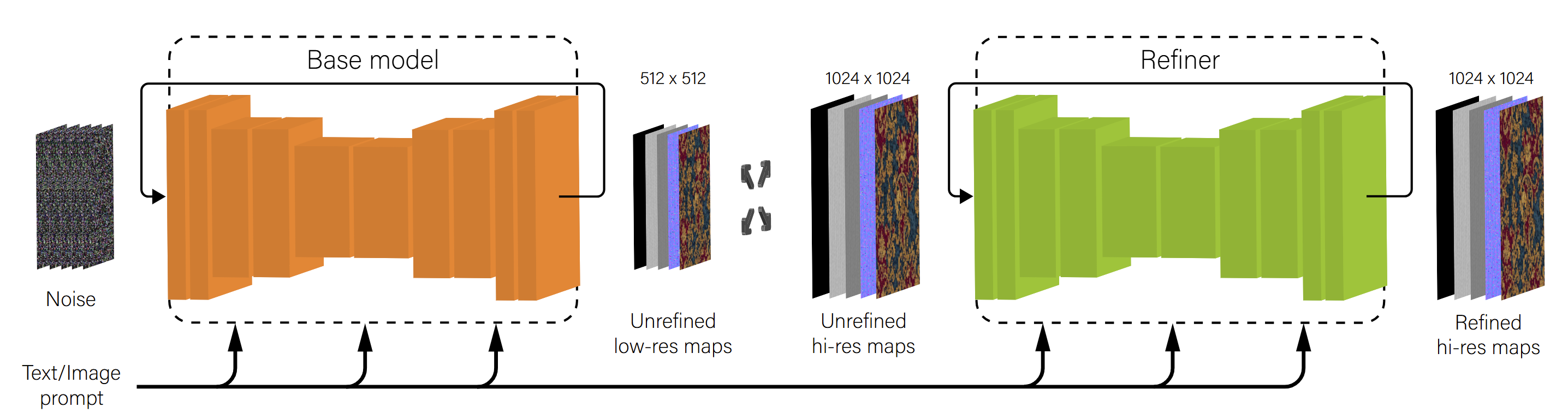

Model Architecture

🧩 Base Model

The base model generates low-resolution (512x512) material maps using a compression VAE (Variational Autoencoder) followed by a latent diffusion process. The architecture is based on the MatFuse adaptation of the LDM paradigm, optimized for material map generation with a focus on diversity and high visual fidelity. 🖼️

🔑 Key Features

- Semi-Supervised Learning: The model is trained using both annotated and unannotated data, leveraging adversarial training to distill knowledge from large-scale pretrained image generation models. 📚

- Knowledge Distillation: Incorporates unannotated texture samples generated using the SDXL model into the training process, bridging the gap between different data distributions. 🌐

- Latent Consistency: Employs a latent consistency model to facilitate fast generation, reducing the inference steps required to produce high-quality outputs. ⚡

- Feature Rolling: Introduces a novel tileability technique by rolling feature maps for each convolutional and attention layer in the U-Net architecture. 🎢
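The feature rolling idea can be illustrated with a minimal NumPy sketch. It assumes that "rolling" means a circular shift of a feature map along its spatial axes, so that content at the borders moves into the interior, where a layer processes it like any other region. The function names `roll_features` and `unroll_features`, the toy tensor shape, and the shift values are hypothetical and are not taken from the StableMaterials code.

```python
import numpy as np


def roll_features(features, shift_y, shift_x):
    """Circularly shift a (channels, height, width) feature map along its spatial axes."""
    return np.roll(features, shift=(shift_y, shift_x), axis=(-2, -1))


def unroll_features(features, shift_y, shift_x):
    """Undo roll_features by shifting the same amount in the opposite direction."""
    return np.roll(features, shift=(-shift_y, -shift_x), axis=(-2, -1))


# Toy feature map (assumed shape): 4 channels on an 8x8 spatial grid.
rng = np.random.default_rng(0)
features = rng.standard_normal((4, 8, 8))

# Hypothetical shift; values that wrap past the edge reappear on the opposite side.
shift_y, shift_x = 3, 5
rolled = roll_features(features, shift_y, shift_x)

# The value that was in the top-left corner now sits at (shift_y, shift_x),
# so the former border lies in the interior of the map.
moved_corner = rolled[:, shift_y, shift_x]

# Rolling is lossless: the inverse shift restores the original layout.
restored = unroll_features(rolled, shift_y, shift_x)
```

Because the shift wraps around, no values are discarded or padded in; the left and right edges (and the top and bottom edges) are treated as neighbours, which is the property a tileable texture needs. How and where the actual model applies the shift inside the U-Net is described in the paper, not in this sketch.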

Intended Use

StableMaterials is designed for generating high-quality, realistic PBR materials for applications in computer graphics, such as video game development, architectural visualization, and digital content creation. The model supports both text and image-based prompting, allowing for versatile and intuitive material generation. 🕹️🏛️📸
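For downstream use in a graphics pipeline, the five maps named in the introduction (Basecolor, Roughness, Metallic, Height, Normal) can be grouped into a single container. The sketch below is one possible layout, not the output format of the model: the `MaterialMaps` class, the height-width-channel array layout, and the channel counts (three for Basecolor and Normal, one for the others) are assumptions based on common PBR conventions.

```python
from dataclasses import dataclass

import numpy as np

# Assumed channel count per map (common PBR convention, not confirmed by the source).
EXPECTED_CHANNELS = {
    "basecolor": 3,
    "roughness": 1,
    "metallic": 1,
    "height": 1,
    "normal": 3,
}


@dataclass
class MaterialMaps:
    """Hypothetical container for one generated PBR material."""

    basecolor: np.ndarray
    roughness: np.ndarray
    metallic: np.ndarray
    height: np.ndarray
    normal: np.ndarray

    def validate(self):
        """Check that all maps share one resolution and return it as (height, width)."""
        resolution = tuple(self.basecolor.shape[:2])
        for name, channels in EXPECTED_CHANNELS.items():
            array = getattr(self, name)
            if array.shape != (*resolution, channels):
                raise ValueError(
                    f"{name} has shape {array.shape}, expected {(*resolution, channels)}"
                )
        return resolution


# Placeholder data at the 512x512 base resolution mentioned above.
size = 512
maps = MaterialMaps(
    basecolor=np.zeros((size, size, 3), dtype=np.float32),
    roughness=np.zeros((size, size, 1), dtype=np.float32),
    metallic=np.zeros((size, size, 1), dtype=np.float32),
    height=np.zeros((size, size, 1), dtype=np.float32),
    normal=np.zeros((size, size, 3), dtype=np.float32),
)
resolution = maps.validate()
```

Validating the shapes once, before export to a renderer or game engine, catches mismatched resolutions early.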

📖 Citation

If you use this model in your research, please cite the following paper:

@article{vecchio2024stablematerials,
  title={StableMaterials: Enhancing Diversity in Material Generation via Semi-Supervised Learning},
  author={Vecchio, Giuseppe},
  journal={arXiv preprint arXiv:2406.09293},
  year={2024}
}