Spaces:

Running

on

Zero

Running

on

Zero

Support ZeroGPU

Browse files- Cloned the Git repo directly

- Revamp the app.py for single prompt (more efficient)

- .gitattributes +4 -0

- LICENSE +202 -0

- README.md +1 -1

- app.py +4 -4

- contributing.md +28 -0

- demo_stylealigned_controlnet.py +139 -0

- demo_stylealigned_multidiffusion.py +104 -0

- demo_stylealigned_sdxl.py +85 -0

- doc/cn_example.jpg +3 -0

- doc/md_example.jpg +3 -0

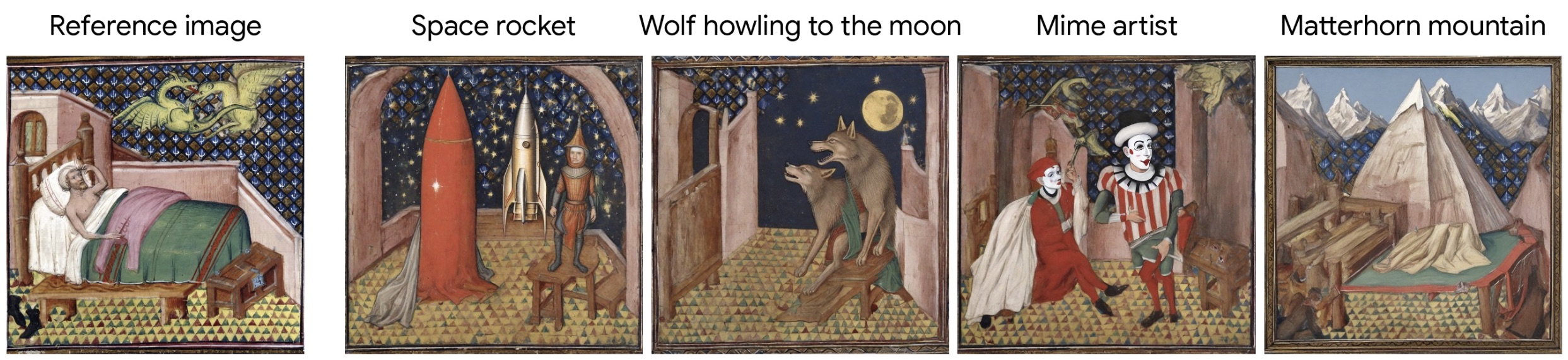

- doc/sa_example.jpg +0 -0

- doc/sa_transfer_example.jpeg +0 -0

- example_image/A.png +0 -0

- example_image/camel.png +3 -0

- example_image/medieval-bed.jpeg +0 -0

- example_image/sun.png +0 -0

- example_image/train.png +3 -0

- example_image/whale.png +0 -0

- inversion.py +125 -0

- pipeline_calls.py +552 -0

- requirements.txt +6 -0

- sa_handler.py +279 -0

- style_aligned_sd1.ipynb +162 -0

- style_aligned_sdxl.ipynb +142 -0

- style_aligned_transfer_sdxl.ipynb +186 -0

- style_aligned_w_controlnet.ipynb +200 -0

- style_aligned_w_multidiffusion.ipynb +156 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,7 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

doc/cn_example.jpg filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

doc/md_example.jpg filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

example_image/camel.png filter=lfs diff=lfs merge=lfs -text

|

| 39 |

+

example_image/train.png filter=lfs diff=lfs merge=lfs -text

|

LICENSE

ADDED

|

@@ -0,0 +1,202 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

|

| 2 |

+

Apache License

|

| 3 |

+

Version 2.0, January 2004

|

| 4 |

+

http://www.apache.org/licenses/

|

| 5 |

+

|

| 6 |

+

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

| 7 |

+

|

| 8 |

+

1. Definitions.

|

| 9 |

+

|

| 10 |

+

"License" shall mean the terms and conditions for use, reproduction,

|

| 11 |

+

and distribution as defined by Sections 1 through 9 of this document.

|

| 12 |

+

|

| 13 |

+

"Licensor" shall mean the copyright owner or entity authorized by

|

| 14 |

+

the copyright owner that is granting the License.

|

| 15 |

+

|

| 16 |

+

"Legal Entity" shall mean the union of the acting entity and all

|

| 17 |

+

other entities that control, are controlled by, or are under common

|

| 18 |

+

control with that entity. For the purposes of this definition,

|

| 19 |

+

"control" means (i) the power, direct or indirect, to cause the

|

| 20 |

+

direction or management of such entity, whether by contract or

|

| 21 |

+

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

| 22 |

+

outstanding shares, or (iii) beneficial ownership of such entity.

|

| 23 |

+

|

| 24 |

+

"You" (or "Your") shall mean an individual or Legal Entity

|

| 25 |

+

exercising permissions granted by this License.

|

| 26 |

+

|

| 27 |

+

"Source" form shall mean the preferred form for making modifications,

|

| 28 |

+

including but not limited to software source code, documentation

|

| 29 |

+

source, and configuration files.

|

| 30 |

+

|

| 31 |

+

"Object" form shall mean any form resulting from mechanical

|

| 32 |

+

transformation or translation of a Source form, including but

|

| 33 |

+

not limited to compiled object code, generated documentation,

|

| 34 |

+

and conversions to other media types.

|

| 35 |

+

|

| 36 |

+

"Work" shall mean the work of authorship, whether in Source or

|

| 37 |

+

Object form, made available under the License, as indicated by a

|

| 38 |

+

copyright notice that is included in or attached to the work

|

| 39 |

+

(an example is provided in the Appendix below).

|

| 40 |

+

|

| 41 |

+

"Derivative Works" shall mean any work, whether in Source or Object

|

| 42 |

+

form, that is based on (or derived from) the Work and for which the

|

| 43 |

+

editorial revisions, annotations, elaborations, or other modifications

|

| 44 |

+

represent, as a whole, an original work of authorship. For the purposes

|

| 45 |

+

of this License, Derivative Works shall not include works that remain

|

| 46 |

+

separable from, or merely link (or bind by name) to the interfaces of,

|

| 47 |

+

the Work and Derivative Works thereof.

|

| 48 |

+

|

| 49 |

+

"Contribution" shall mean any work of authorship, including

|

| 50 |

+

the original version of the Work and any modifications or additions

|

| 51 |

+

to that Work or Derivative Works thereof, that is intentionally

|

| 52 |

+

submitted to Licensor for inclusion in the Work by the copyright owner

|

| 53 |

+

or by an individual or Legal Entity authorized to submit on behalf of

|

| 54 |

+

the copyright owner. For the purposes of this definition, "submitted"

|

| 55 |

+

means any form of electronic, verbal, or written communication sent

|

| 56 |

+

to the Licensor or its representatives, including but not limited to

|

| 57 |

+

communication on electronic mailing lists, source code control systems,

|

| 58 |

+

and issue tracking systems that are managed by, or on behalf of, the

|

| 59 |

+

Licensor for the purpose of discussing and improving the Work, but

|

| 60 |

+

excluding communication that is conspicuously marked or otherwise

|

| 61 |

+

designated in writing by the copyright owner as "Not a Contribution."

|

| 62 |

+

|

| 63 |

+

"Contributor" shall mean Licensor and any individual or Legal Entity

|

| 64 |

+

on behalf of whom a Contribution has been received by Licensor and

|

| 65 |

+

subsequently incorporated within the Work.

|

| 66 |

+

|

| 67 |

+

2. Grant of Copyright License. Subject to the terms and conditions of

|

| 68 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 69 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 70 |

+

copyright license to reproduce, prepare Derivative Works of,

|

| 71 |

+

publicly display, publicly perform, sublicense, and distribute the

|

| 72 |

+

Work and such Derivative Works in Source or Object form.

|

| 73 |

+

|

| 74 |

+

3. Grant of Patent License. Subject to the terms and conditions of

|

| 75 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 76 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 77 |

+

(except as stated in this section) patent license to make, have made,

|

| 78 |

+

use, offer to sell, sell, import, and otherwise transfer the Work,

|

| 79 |

+

where such license applies only to those patent claims licensable

|

| 80 |

+

by such Contributor that are necessarily infringed by their

|

| 81 |

+

Contribution(s) alone or by combination of their Contribution(s)

|

| 82 |

+

with the Work to which such Contribution(s) was submitted. If You

|

| 83 |

+

institute patent litigation against any entity (including a

|

| 84 |

+

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

| 85 |

+

or a Contribution incorporated within the Work constitutes direct

|

| 86 |

+

or contributory patent infringement, then any patent licenses

|

| 87 |

+

granted to You under this License for that Work shall terminate

|

| 88 |

+

as of the date such litigation is filed.

|

| 89 |

+

|

| 90 |

+

4. Redistribution. You may reproduce and distribute copies of the

|

| 91 |

+

Work or Derivative Works thereof in any medium, with or without

|

| 92 |

+

modifications, and in Source or Object form, provided that You

|

| 93 |

+

meet the following conditions:

|

| 94 |

+

|

| 95 |

+

(a) You must give any other recipients of the Work or

|

| 96 |

+

Derivative Works a copy of this License; and

|

| 97 |

+

|

| 98 |

+

(b) You must cause any modified files to carry prominent notices

|

| 99 |

+

stating that You changed the files; and

|

| 100 |

+

|

| 101 |

+

(c) You must retain, in the Source form of any Derivative Works

|

| 102 |

+

that You distribute, all copyright, patent, trademark, and

|

| 103 |

+

attribution notices from the Source form of the Work,

|

| 104 |

+

excluding those notices that do not pertain to any part of

|

| 105 |

+

the Derivative Works; and

|

| 106 |

+

|

| 107 |

+

(d) If the Work includes a "NOTICE" text file as part of its

|

| 108 |

+

distribution, then any Derivative Works that You distribute must

|

| 109 |

+

include a readable copy of the attribution notices contained

|

| 110 |

+

within such NOTICE file, excluding those notices that do not

|

| 111 |

+

pertain to any part of the Derivative Works, in at least one

|

| 112 |

+

of the following places: within a NOTICE text file distributed

|

| 113 |

+

as part of the Derivative Works; within the Source form or

|

| 114 |

+

documentation, if provided along with the Derivative Works; or,

|

| 115 |

+

within a display generated by the Derivative Works, if and

|

| 116 |

+

wherever such third-party notices normally appear. The contents

|

| 117 |

+

of the NOTICE file are for informational purposes only and

|

| 118 |

+

do not modify the License. You may add Your own attribution

|

| 119 |

+

notices within Derivative Works that You distribute, alongside

|

| 120 |

+

or as an addendum to the NOTICE text from the Work, provided

|

| 121 |

+

that such additional attribution notices cannot be construed

|

| 122 |

+

as modifying the License.

|

| 123 |

+

|

| 124 |

+

You may add Your own copyright statement to Your modifications and

|

| 125 |

+

may provide additional or different license terms and conditions

|

| 126 |

+

for use, reproduction, or distribution of Your modifications, or

|

| 127 |

+

for any such Derivative Works as a whole, provided Your use,

|

| 128 |

+

reproduction, and distribution of the Work otherwise complies with

|

| 129 |

+

the conditions stated in this License.

|

| 130 |

+

|

| 131 |

+

5. Submission of Contributions. Unless You explicitly state otherwise,

|

| 132 |

+

any Contribution intentionally submitted for inclusion in the Work

|

| 133 |

+

by You to the Licensor shall be under the terms and conditions of

|

| 134 |

+

this License, without any additional terms or conditions.

|

| 135 |

+

Notwithstanding the above, nothing herein shall supersede or modify

|

| 136 |

+

the terms of any separate license agreement you may have executed

|

| 137 |

+

with Licensor regarding such Contributions.

|

| 138 |

+

|

| 139 |

+

6. Trademarks. This License does not grant permission to use the trade

|

| 140 |

+

names, trademarks, service marks, or product names of the Licensor,

|

| 141 |

+

except as required for reasonable and customary use in describing the

|

| 142 |

+

origin of the Work and reproducing the content of the NOTICE file.

|

| 143 |

+

|

| 144 |

+

7. Disclaimer of Warranty. Unless required by applicable law or

|

| 145 |

+

agreed to in writing, Licensor provides the Work (and each

|

| 146 |

+

Contributor provides its Contributions) on an "AS IS" BASIS,

|

| 147 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

| 148 |

+

implied, including, without limitation, any warranties or conditions

|

| 149 |

+

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

| 150 |

+

PARTICULAR PURPOSE. You are solely responsible for determining the

|

| 151 |

+

appropriateness of using or redistributing the Work and assume any

|

| 152 |

+

risks associated with Your exercise of permissions under this License.

|

| 153 |

+

|

| 154 |

+

8. Limitation of Liability. In no event and under no legal theory,

|

| 155 |

+

whether in tort (including negligence), contract, or otherwise,

|

| 156 |

+

unless required by applicable law (such as deliberate and grossly

|

| 157 |

+

negligent acts) or agreed to in writing, shall any Contributor be

|

| 158 |

+

liable to You for damages, including any direct, indirect, special,

|

| 159 |

+

incidental, or consequential damages of any character arising as a

|

| 160 |

+

result of this License or out of the use or inability to use the

|

| 161 |

+

Work (including but not limited to damages for loss of goodwill,

|

| 162 |

+

work stoppage, computer failure or malfunction, or any and all

|

| 163 |

+

other commercial damages or losses), even if such Contributor

|

| 164 |

+

has been advised of the possibility of such damages.

|

| 165 |

+

|

| 166 |

+

9. Accepting Warranty or Additional Liability. While redistributing

|

| 167 |

+

the Work or Derivative Works thereof, You may choose to offer,

|

| 168 |

+

and charge a fee for, acceptance of support, warranty, indemnity,

|

| 169 |

+

or other liability obligations and/or rights consistent with this

|

| 170 |

+

License. However, in accepting such obligations, You may act only

|

| 171 |

+

on Your own behalf and on Your sole responsibility, not on behalf

|

| 172 |

+

of any other Contributor, and only if You agree to indemnify,

|

| 173 |

+

defend, and hold each Contributor harmless for any liability

|

| 174 |

+

incurred by, or claims asserted against, such Contributor by reason

|

| 175 |

+

of your accepting any such warranty or additional liability.

|

| 176 |

+

|

| 177 |

+

END OF TERMS AND CONDITIONS

|

| 178 |

+

|

| 179 |

+

APPENDIX: How to apply the Apache License to your work.

|

| 180 |

+

|

| 181 |

+

To apply the Apache License to your work, attach the following

|

| 182 |

+

boilerplate notice, with the fields enclosed by brackets "[]"

|

| 183 |

+

replaced with your own identifying information. (Don't include

|

| 184 |

+

the brackets!) The text should be enclosed in the appropriate

|

| 185 |

+

comment syntax for the file format. We also recommend that a

|

| 186 |

+

file or class name and description of purpose be included on the

|

| 187 |

+

same "printed page" as the copyright notice for easier

|

| 188 |

+

identification within third-party archives.

|

| 189 |

+

|

| 190 |

+

Copyright [yyyy] [name of copyright owner]

|

| 191 |

+

|

| 192 |

+

Licensed under the Apache License, Version 2.0 (the "License");

|

| 193 |

+

you may not use this file except in compliance with the License.

|

| 194 |

+

You may obtain a copy of the License at

|

| 195 |

+

|

| 196 |

+

http://www.apache.org/licenses/LICENSE-2.0

|

| 197 |

+

|

| 198 |

+

Unless required by applicable law or agreed to in writing, software

|

| 199 |

+

distributed under the License is distributed on an "AS IS" BASIS,

|

| 200 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 201 |

+

See the License for the specific language governing permissions and

|

| 202 |

+

limitations under the License.

|

README.md

CHANGED

|

@@ -3,7 +3,7 @@ title: StyleAligned Transfer

|

|

| 3 |

emoji: 🐠

|

| 4 |

colorFrom: blue

|

| 5 |

colorTo: pink

|

| 6 |

-

sdk:

|

| 7 |

pinned: false

|

| 8 |

---

|

| 9 |

|

|

|

|

| 3 |

emoji: 🐠

|

| 4 |

colorFrom: blue

|

| 5 |

colorTo: pink

|

| 6 |

+

sdk: gradio

|

| 7 |

pinned: false

|

| 8 |

---

|

| 9 |

|

app.py

CHANGED

|

@@ -6,6 +6,7 @@ import math

|

|

| 6 |

from diffusers.utils import load_image

|

| 7 |

import inversion

|

| 8 |

import numpy as np

|

|

|

|

| 9 |

|

| 10 |

# init models

|

| 11 |

|

|

@@ -22,6 +23,7 @@ pipeline = StableDiffusionXLPipeline.from_pretrained(

|

|

| 22 |

pipeline.enable_model_cpu_offload()

|

| 23 |

pipeline.enable_vae_slicing()

|

| 24 |

|

|

|

|

| 25 |

def run(ref_path, ref_style, ref_prompt, prompt1, prompt2, prompt3):

|

| 26 |

# DDIM inversion

|

| 27 |

src_style = f"{ref_style}"

|

|

@@ -41,8 +43,6 @@ def run(ref_path, ref_style, ref_prompt, prompt1, prompt2, prompt3):

|

|

| 41 |

prompts = [

|

| 42 |

src_prompt,

|

| 43 |

prompt1,

|

| 44 |

-

prompt2,

|

| 45 |

-

prompt3

|

| 46 |

]

|

| 47 |

|

| 48 |

# some parameters you can adjust to control fidelity to reference

|

|

@@ -105,8 +105,8 @@ with gr.Blocks(css=css) as demo:

|

|

| 105 |

with gr.Group():

|

| 106 |

results = gr.Gallery()

|

| 107 |

prompt1 = gr.Textbox(label="Prompt1", value="A man working on a laptop")

|

| 108 |

-

prompt2 = gr.Textbox(label="Prompt2", value="A man eating pizza")

|

| 109 |

-

prompt3 = gr.Textbox(label="Prompt3", value="A woman playing on saxophone")

|

| 110 |

run_button = gr.Button("Submit")

|

| 111 |

|

| 112 |

|

|

|

|

| 6 |

from diffusers.utils import load_image

|

| 7 |

import inversion

|

| 8 |

import numpy as np

|

| 9 |

+

import spaces

|

| 10 |

|

| 11 |

# init models

|

| 12 |

|

|

|

|

| 23 |

pipeline.enable_model_cpu_offload()

|

| 24 |

pipeline.enable_vae_slicing()

|

| 25 |

|

| 26 |

+

@spaces.GPU(duration=120)

|

| 27 |

def run(ref_path, ref_style, ref_prompt, prompt1, prompt2, prompt3):

|

| 28 |

# DDIM inversion

|

| 29 |

src_style = f"{ref_style}"

|

|

|

|

| 43 |

prompts = [

|

| 44 |

src_prompt,

|

| 45 |

prompt1,

|

|

|

|

|

|

|

| 46 |

]

|

| 47 |

|

| 48 |

# some parameters you can adjust to control fidelity to reference

|

|

|

|

| 105 |

with gr.Group():

|

| 106 |

results = gr.Gallery()

|

| 107 |

prompt1 = gr.Textbox(label="Prompt1", value="A man working on a laptop")

|

| 108 |

+

prompt2 = gr.Textbox(label="Prompt2", value="A man eating pizza", visible=False)

|

| 109 |

+

prompt3 = gr.Textbox(label="Prompt3", value="A woman playing on saxophone", visible=False)

|

| 110 |

run_button = gr.Button("Submit")

|

| 111 |

|

| 112 |

|

contributing.md

ADDED

|

@@ -0,0 +1,28 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# How to Contribute

|

| 2 |

+

|

| 3 |

+

We'd love to accept your patches and contributions to this project. There are

|

| 4 |

+

just a few small guidelines you need to follow.

|

| 5 |

+

|

| 6 |

+

## Contributor License Agreement

|

| 7 |

+

|

| 8 |

+

Contributions to this project must be accompanied by a Contributor License

|

| 9 |

+

Agreement. You (or your employer) retain the copyright to your contribution;

|

| 10 |

+

this simply gives us permission to use and redistribute your contributions as

|

| 11 |

+

part of the project. Head over to <https://cla.developers.google.com/> to see

|

| 12 |

+

your current agreements on file or to sign a new one.

|

| 13 |

+

|

| 14 |

+

You generally only need to submit a CLA once, so if you've already submitted one

|

| 15 |

+

(even if it was for a different project), you probably don't need to do it

|

| 16 |

+

again.

|

| 17 |

+

|

| 18 |

+

## Code Reviews

|

| 19 |

+

|

| 20 |

+

All submissions, including submissions by project members, require review. We

|

| 21 |

+

use GitHub pull requests for this purpose. Consult

|

| 22 |

+

[GitHub Help](https://help.github.com/articles/about-pull-requests/) for more

|

| 23 |

+

information on using pull requests.

|

| 24 |

+

|

| 25 |

+

## Community Guidelines

|

| 26 |

+

|

| 27 |

+

This project follows [Google's Open Source Community

|

| 28 |

+

Guidelines](https://opensource.google/conduct/).

|

demo_stylealigned_controlnet.py

ADDED

|

@@ -0,0 +1,139 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import gradio as gr

|

| 2 |

+

from diffusers import ControlNetModel, StableDiffusionXLControlNetPipeline, AutoencoderKL

|

| 3 |

+

from diffusers.utils import load_image

|

| 4 |

+

from transformers import DPTImageProcessor, DPTForDepthEstimation

|

| 5 |

+

import torch

|

| 6 |

+

import sa_handler

|

| 7 |

+

import pipeline_calls

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

|

| 11 |

+

# Initialize models

|

| 12 |

+

depth_estimator = DPTForDepthEstimation.from_pretrained("Intel/dpt-hybrid-midas").to("cuda")

|

| 13 |

+

feature_processor = DPTImageProcessor.from_pretrained("Intel/dpt-hybrid-midas")

|

| 14 |

+

|

| 15 |

+

controlnet = ControlNetModel.from_pretrained(

|

| 16 |

+

"diffusers/controlnet-depth-sdxl-1.0",

|

| 17 |

+

variant="fp16",

|

| 18 |

+

use_safetensors=True,

|

| 19 |

+

torch_dtype=torch.float16,

|

| 20 |

+

).to("cuda")

|

| 21 |

+

vae = AutoencoderKL.from_pretrained("madebyollin/sdxl-vae-fp16-fix", torch_dtype=torch.float16).to("cuda")

|

| 22 |

+

pipeline = StableDiffusionXLControlNetPipeline.from_pretrained(

|

| 23 |

+

"stabilityai/stable-diffusion-xl-base-1.0",

|

| 24 |

+

controlnet=controlnet,

|

| 25 |

+

vae=vae,

|

| 26 |

+

variant="fp16",

|

| 27 |

+

use_safetensors=True,

|

| 28 |

+

torch_dtype=torch.float16,

|

| 29 |

+

).to("cuda")

|

| 30 |

+

# Configure pipeline for CPU offloading and VAE slicing

|

| 31 |

+

pipeline.enable_model_cpu_offload()

|

| 32 |

+

pipeline.enable_vae_slicing()

|

| 33 |

+

|

| 34 |

+

# Initialize style-aligned handler

|

| 35 |

+

sa_args = sa_handler.StyleAlignedArgs(share_group_norm=False,

|

| 36 |

+

share_layer_norm=False,

|

| 37 |

+

share_attention=True,

|

| 38 |

+

adain_queries=True,

|

| 39 |

+

adain_keys=True,

|

| 40 |

+

adain_values=False,

|

| 41 |

+

)

|

| 42 |

+

handler = sa_handler.Handler(pipeline)

|

| 43 |

+

handler.register(sa_args, )

|

| 44 |

+

|

| 45 |

+

|

| 46 |

+

# Function to run ControlNet depth with StyleAligned

|

| 47 |

+

def style_aligned_controlnet(ref_style_prompt, depth_map, ref_image, img_generation_prompt, seed):

|

| 48 |

+

try:

|

| 49 |

+

if depth_map == True:

|

| 50 |

+

image = load_image(ref_image)

|

| 51 |

+

depth_image = pipeline_calls.get_depth_map(image, feature_processor, depth_estimator)

|

| 52 |

+

else:

|

| 53 |

+

depth_image = load_image(ref_image).resize((1024, 1024))

|

| 54 |

+

controlnet_conditioning_scale = 0.8

|

| 55 |

+

gen = None if seed is None else torch.manual_seed(int(seed))

|

| 56 |

+

num_images_per_prompt = 3 # adjust according to VRAM size

|

| 57 |

+

latents = torch.randn(1 + num_images_per_prompt, 4, 128, 128, generator=gen).to(pipeline.unet.dtype)

|

| 58 |

+

|

| 59 |

+

images = pipeline_calls.controlnet_call(pipeline, [ref_style_prompt, img_generation_prompt],

|

| 60 |

+

image=depth_image,

|

| 61 |

+

num_inference_steps=50,

|

| 62 |

+

controlnet_conditioning_scale=controlnet_conditioning_scale,

|

| 63 |

+

num_images_per_prompt=num_images_per_prompt,

|

| 64 |

+

latents=latents)

|

| 65 |

+

return [images[0], depth_image] + images[1:], gr.Image(value=images[0], visible=True)

|

| 66 |

+

except Exception as e:

|

| 67 |

+

raise gr.Error(f"Error in generating images:{e}")

|

| 68 |

+

|

| 69 |

+

# Create a Gradio UI

|

| 70 |

+

with gr.Blocks() as demo:

|

| 71 |

+

gr.HTML('<h1 style="text-align: center;">ControlNet with StyleAligned</h1>')

|

| 72 |

+

with gr.Row():

|

| 73 |

+

|

| 74 |

+

with gr.Column(variant='panel'):

|

| 75 |

+

# Textbox for reference style prompt

|

| 76 |

+

ref_style_prompt = gr.Textbox(

|

| 77 |

+

label='Reference style prompt',

|

| 78 |

+

info="Enter a Prompt to generate the reference image", placeholder='a poster in <style name> style'

|

| 79 |

+

)

|

| 80 |

+

with gr.Row(variant='panel'):

|

| 81 |

+

# Checkbox for using controller depth-map

|

| 82 |

+

depth_map = gr.Checkbox(label='Depth-map',)

|

| 83 |

+

seed = gr.Number(value=1234, label="Seed", precision=0, step=1, scale=3,

|

| 84 |

+

info="Enter a seed of a previous reference image "

|

| 85 |

+

"or leave empty for a random generation.")

|

| 86 |

+

# Image display for the generated reference style image

|

| 87 |

+

ref_style_image = gr.Image(visible=False, label='Reference style image', scale=1)

|

| 88 |

+

|

| 89 |

+

|

| 90 |

+

with gr.Column(variant='panel'):

|

| 91 |

+

# Image upload option for uploading a reference image for controlnet

|

| 92 |

+

ref_image = gr.Image(label="Upload the reference image",

|

| 93 |

+

type='filepath' )

|

| 94 |

+

# Textbox for ControlNet prompt

|

| 95 |

+

img_generation_prompt = gr.Textbox(

|

| 96 |

+

label='Generation Prompt',

|

| 97 |

+

info="Enter a Prompt to generate images using ControlNet and StyleAligned",

|

| 98 |

+

)

|

| 99 |

+

|

| 100 |

+

# Button to trigger image generation

|

| 101 |

+

btn = gr.Button("Generate", size='sm')

|

| 102 |

+

# Gallery to display generated images

|

| 103 |

+

gallery = gr.Gallery(label="Style-Aligned ControlNet - Generated images",

|

| 104 |

+

elem_id="gallery",

|

| 105 |

+

columns=5,

|

| 106 |

+

rows=1,

|

| 107 |

+

object_fit="contain",

|

| 108 |

+

height="auto",

|

| 109 |

+

)

|

| 110 |

+

|

| 111 |

+

btn.click(fn=style_aligned_controlnet,

|

| 112 |

+

inputs=[ref_style_prompt, depth_map, ref_image, img_generation_prompt, seed],

|

| 113 |

+

outputs=[gallery, ref_style_image],

|

| 114 |

+

api_name="style_aligned_controlnet")

|

| 115 |

+

|

| 116 |

+

|

| 117 |

+

# Example inputs for the Gradio interface

|

| 118 |

+

gr.Examples(

|

| 119 |

+

examples=[

|

| 120 |

+

['A couple sitting a wooden bench, in colorful clay animation, claymation style.', True,

|

| 121 |

+

'example_image/train.png', 'A train in colorful clay animation, claymation style.',],

|

| 122 |

+

['A couple sitting a wooden bench, in colorful clay animation, claymation style.', False,

|

| 123 |

+

'example_image/sun.png', 'Sun in colorful clay animation, claymation style.',],

|

| 124 |

+

['A poster in a papercut art style.', False,

|

| 125 |

+

'example_image/A.png', 'Letter A in a papercut art style.', None],

|

| 126 |

+

['A bull in a low-poly, colorful origami style.', True, 'example_image/whale.png',

|

| 127 |

+

'A whale in a low-poly, colorful origami style.', None],

|

| 128 |

+

['An image in ancient egyptian art style, hieroglyphics style.', True, 'example_image/camel.png',

|

| 129 |

+

'A camel in a painterly, digital illustration style.',],

|

| 130 |

+

['An image in ancient egyptian art style, hieroglyphics style.', True, 'example_image/whale.png',

|

| 131 |

+

'A whale in ancient egyptian art style, hieroglyphics style.',],

|

| 132 |

+

],

|

| 133 |

+

inputs=[ref_style_prompt, depth_map, ref_image, img_generation_prompt,],

|

| 134 |

+

outputs=[gallery, ref_style_image],

|

| 135 |

+

fn=style_aligned_controlnet,

|

| 136 |

+

)

|

| 137 |

+

|

| 138 |

+

# Launch the Gradio demo

|

| 139 |

+

demo.launch()

|

demo_stylealigned_multidiffusion.py

ADDED

|

@@ -0,0 +1,104 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import gradio as gr

|

| 2 |

+

import torch

|

| 3 |

+

from diffusers import StableDiffusionPanoramaPipeline, DDIMScheduler

|

| 4 |

+

import sa_handler

|

| 5 |

+

import pipeline_calls

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

# init models

|

| 9 |

+

model_ckpt = "stabilityai/stable-diffusion-2-base"

|

| 10 |

+

scheduler = DDIMScheduler.from_pretrained(model_ckpt, subfolder="scheduler")

|

| 11 |

+

pipeline = StableDiffusionPanoramaPipeline.from_pretrained(

|

| 12 |

+

model_ckpt, scheduler=scheduler, torch_dtype=torch.float16

|

| 13 |

+

).to("cuda")

|

| 14 |

+

# Configure the pipeline for CPU offloading and VAE slicing

|

| 15 |

+

pipeline.enable_model_cpu_offload()

|

| 16 |

+

pipeline.enable_vae_slicing()

|

| 17 |

+

sa_args = sa_handler.StyleAlignedArgs(share_group_norm=True,

|

| 18 |

+

share_layer_norm=True,

|

| 19 |

+

share_attention=True,

|

| 20 |

+

adain_queries=True,

|

| 21 |

+

adain_keys=True,

|

| 22 |

+

adain_values=False,

|

| 23 |

+

)

|

| 24 |

+

# Initialize the style-aligned handler

|

| 25 |

+

handler = sa_handler.Handler(pipeline)

|

| 26 |

+

handler.register(sa_args)

|

| 27 |

+

|

| 28 |

+

|

| 29 |

+

# Define the function to run MultiDiffusion with StyleAligned

|

| 30 |

+

def style_aligned_multidiff(ref_style_prompt, img_generation_prompt, seed):

|

| 31 |

+

try:

|

| 32 |

+

view_batch_size = 25 # adjust according to VRAM size

|

| 33 |

+

gen = None if seed is None else torch.manual_seed(int(seed))

|

| 34 |

+

reference_latent = torch.randn(1, 4, 64, 64, generator=gen)

|

| 35 |

+

images = pipeline_calls.panorama_call(pipeline,

|

| 36 |

+

[ref_style_prompt, img_generation_prompt],

|

| 37 |

+

reference_latent=reference_latent,

|

| 38 |

+

view_batch_size=view_batch_size)

|

| 39 |

+

|

| 40 |

+

return images, gr.Image(value=images[0], visible=True)

|

| 41 |

+

except Exception as e:

|

| 42 |

+

raise gr.Error(f"Error in generating images:{e}")

|

| 43 |

+

|

| 44 |

+

# Create a Gradio UI

|

| 45 |

+

with gr.Blocks() as demo:

|

| 46 |

+

gr.HTML('<h1 style="text-align: center;">MultiDiffusion with StyleAligned </h1>')

|

| 47 |

+

with gr.Row():

|

| 48 |

+

with gr.Column(variant='panel'):

|

| 49 |

+

# Textbox for reference style prompt

|

| 50 |

+

ref_style_prompt = gr.Textbox(

|

| 51 |

+

label='Reference style prompt',

|

| 52 |

+

info='Enter a Prompt to generate the reference image',

|

| 53 |

+

placeholder='A poster in a papercut art style.'

|

| 54 |

+

)

|

| 55 |

+

seed = gr.Number(value=1234, label="Seed", precision=0, step=1,

|

| 56 |

+

info="Enter a seed of a previous reference image "

|

| 57 |

+

"or leave empty for a random generation.")

|

| 58 |

+

# Image display for the reference style image

|

| 59 |

+

ref_style_image = gr.Image(visible=False, label='Reference style image')

|

| 60 |

+

|

| 61 |

+

|

| 62 |

+

with gr.Column(variant='panel'):

|

| 63 |

+

# Textbox for prompt for MultiDiffusion panoramas

|

| 64 |

+

img_generation_prompt = gr.Textbox(

|

| 65 |

+

label='MultiDiffusion Prompt',

|

| 66 |

+

info='Enter a Prompt to generate panoramic images using Style-aligned combined with MultiDiffusion',

|

| 67 |

+

placeholder= 'A village in a papercut art style.'

|

| 68 |

+

)

|

| 69 |

+

|

| 70 |

+

# Button to trigger image generation

|

| 71 |

+

btn = gr.Button('Style Aligned MultiDiffusion - Generate', size='sm')

|

| 72 |

+

# Gallery to display generated style image and the panorama

|

| 73 |

+

gallery = gr.Gallery(label='StyleAligned MultiDiffusion - generated images',

|

| 74 |

+

elem_id='gallery',

|

| 75 |

+

columns=5,

|

| 76 |

+

rows=1,

|

| 77 |

+

object_fit='contain',

|

| 78 |

+

height='auto',

|

| 79 |

+

allow_preview=True,

|

| 80 |

+

preview=True,

|

| 81 |

+

)

|

| 82 |

+

# Button click event

|

| 83 |

+

btn.click(fn=style_aligned_multidiff,

|

| 84 |

+

inputs=[ref_style_prompt, img_generation_prompt, seed],

|

| 85 |

+

outputs=[gallery, ref_style_image,],

|

| 86 |

+

api_name='style_aligned_multidiffusion')

|

| 87 |

+

|

| 88 |

+

# Example inputs for the Gradio demo

|

| 89 |

+

gr.Examples(

|

| 90 |

+

examples=[

|

| 91 |

+

['A poster in a papercut art style.', 'A village in a papercut art style.'],

|

| 92 |

+

['A poster in a papercut art style.', 'Futuristic cityscape in a papercut art style.'],

|

| 93 |

+

['A poster in a papercut art style.', 'A jungle in a papercut art style.'],

|

| 94 |

+

['A poster in a flat design style.', 'Giraffes in a flat design style.'],

|

| 95 |

+

['A poster in a flat design style.', 'Houses in a flat design style.'],

|

| 96 |

+

['A poster in a flat design style.', 'Mountains in a flat design style.'],

|

| 97 |

+

],

|

| 98 |

+

inputs=[ref_style_prompt, img_generation_prompt],

|

| 99 |

+

outputs=[gallery, ref_style_image],

|

| 100 |

+

fn=style_aligned_multidiff,

|

| 101 |

+

)

|

| 102 |

+

|

| 103 |

+

# Launch the Gradio demo

|

| 104 |

+

demo.launch()

|

demo_stylealigned_sdxl.py

ADDED

|

@@ -0,0 +1,85 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import gradio as gr

|

| 2 |

+

from diffusers import StableDiffusionXLPipeline, DDIMScheduler

|

| 3 |

+

import torch

|

| 4 |

+

import sa_handler

|

| 5 |

+

|

| 6 |

+

# init models

|

| 7 |

+

scheduler = DDIMScheduler(beta_start=0.00085, beta_end=0.012, beta_schedule="scaled_linear", clip_sample=False,

|

| 8 |

+

set_alpha_to_one=False)

|

| 9 |

+

pipeline = StableDiffusionXLPipeline.from_pretrained(

|

| 10 |

+

"stabilityai/stable-diffusion-xl-base-1.0", torch_dtype=torch.float16, variant="fp16", use_safetensors=True,

|

| 11 |

+

scheduler=scheduler

|

| 12 |

+

).to("cuda")

|

| 13 |

+

# Configure the pipeline for CPU offloading and VAE slicing#pipeline.enable_sequential_cpu_offload()

|

| 14 |

+

pipeline.enable_model_cpu_offload()

|

| 15 |

+

pipeline.enable_vae_slicing()

|

| 16 |

+

# Initialize the style-aligned handler

|

| 17 |

+

handler = sa_handler.Handler(pipeline)

|

| 18 |

+

sa_args = sa_handler.StyleAlignedArgs(share_group_norm=False,

|

| 19 |

+

share_layer_norm=False,

|

| 20 |

+

share_attention=True,

|

| 21 |

+

adain_queries=True,

|

| 22 |

+

adain_keys=True,

|

| 23 |

+

adain_values=False,

|

| 24 |

+

)

|

| 25 |

+

|

| 26 |

+

handler.register(sa_args, )

|

| 27 |

+

|

| 28 |

+

# Define the function to generate style-aligned images

|

| 29 |

+

def style_aligned_sdxl(initial_prompt1, initial_prompt2, initial_prompt3, initial_prompt4,

|

| 30 |

+

initial_prompt5, style_prompt, seed):

|

| 31 |

+

try:

|

| 32 |

+

# Combine the style prompt with each initial prompt

|

| 33 |

+

gen = None if seed is None else torch.manual_seed(int(seed))

|

| 34 |

+

sets_of_prompts = [prompt + " in the style of " + style_prompt for prompt in [initial_prompt1, initial_prompt2, initial_prompt3, initial_prompt4, initial_prompt5] if prompt]

|

| 35 |

+

# Generate images using the pipeline

|

| 36 |

+

images = pipeline(sets_of_prompts, generator=gen).images

|

| 37 |

+

return images

|

| 38 |

+

except Exception as e:

|

| 39 |

+

raise gr.Error(f"Error in generating images: {e}")

|

| 40 |

+

|

| 41 |

+

with gr.Blocks() as demo:

|

| 42 |

+

gr.HTML('<h1 style="text-align: center;">StyleAligned SDXL</h1>')

|

| 43 |

+

with gr.Group():

|

| 44 |

+

with gr.Column():

|

| 45 |

+

with gr.Accordion(label='Enter upto 5 different initial prompts', open=True):

|

| 46 |

+

with gr.Row(variant='panel'):

|

| 47 |

+

# Textboxes for initial prompts

|

| 48 |

+

initial_prompt1 = gr.Textbox(label='Initial prompt 1', value='', show_label=False, container=False, placeholder='a toy train')

|

| 49 |

+

initial_prompt2 = gr.Textbox(label='Initial prompt 2', value='', show_label=False, container=False, placeholder='a toy airplane')

|

| 50 |

+

initial_prompt3 = gr.Textbox(label='Initial prompt 3', value='', show_label=False, container=False, placeholder='a toy bicycle')

|

| 51 |

+

initial_prompt4 = gr.Textbox(label='Initial prompt 4', value='', show_label=False, container=False, placeholder='a toy car')

|

| 52 |

+

initial_prompt5 = gr.Textbox(label='Initial prompt 5', value='', show_label=False, container=False, placeholder='a toy boat')

|

| 53 |

+

with gr.Row():

|

| 54 |

+

# Textbox for the style prompt

|

| 55 |

+

style_prompt = gr.Textbox(label="Enter a style prompt", placeholder='macro photo, 3d game asset', scale=3)

|

| 56 |

+

seed = gr.Number(value=1234, label="Seed", precision=0, step=1, scale=1,

|

| 57 |

+

info="Enter a seed of a previous run "

|

| 58 |

+

"or leave empty for a random generation.")

|

| 59 |

+

# Button to generate images

|

| 60 |

+

btn = gr.Button("Generate a set of Style-aligned SDXL images",)

|

| 61 |

+

# Display the generated images

|

| 62 |

+

output = gr.Gallery(label="Style aligned text-to-image on SDXL ", elem_id="gallery",columns=5, rows=1,

|

| 63 |

+

object_fit="contain", height="auto",)

|

| 64 |

+

|

| 65 |

+

# Button click event

|

| 66 |

+

btn.click(fn=style_aligned_sdxl,

|

| 67 |

+

inputs=[initial_prompt1, initial_prompt2, initial_prompt3, initial_prompt4, initial_prompt5,

|

| 68 |

+

style_prompt, seed],

|

| 69 |

+

outputs=output,

|

| 70 |

+

api_name="style_aligned_sdxl")

|

| 71 |

+

|

| 72 |

+

# Providing Example inputs for the demo

|

| 73 |

+

gr.Examples(examples=[

|

| 74 |

+

["a toy train", "a toy airplane", "a toy bicycle", "a toy car", "a toy boat", "macro photo. 3d game asset."],

|

| 75 |

+

["a toy train", "a toy airplane", "a toy bicycle", "a toy car", "a toy boat", "BW logo. high contrast."],

|

| 76 |

+

["a cat", "a dog", "a bear", "a man on a bicycle", "a girl working on laptop", "minimal origami."],

|

| 77 |

+

["a firewoman", "a Gardner", "a scientist", "a policewoman", "a saxophone player", "made of claymation, stop motion animation."],

|

| 78 |

+

["a firewoman", "a Gardner", "a scientist", "a policewoman", "a saxophone player", "sketch, character sheet."],

|

| 79 |

+

],

|

| 80 |

+

inputs=[initial_prompt1, initial_prompt2, initial_prompt3, initial_prompt4, initial_prompt5, style_prompt],

|

| 81 |

+

outputs=[output],

|

| 82 |

+

fn=style_aligned_sdxl)

|

| 83 |

+

|

| 84 |

+

# Launch the Gradio demo

|

| 85 |

+

demo.launch()

|

doc/cn_example.jpg

ADDED

|

Git LFS Details

|

doc/md_example.jpg

ADDED

|

Git LFS Details

|

doc/sa_example.jpg

ADDED

|

doc/sa_transfer_example.jpeg

ADDED

|

example_image/A.png

ADDED

|

example_image/camel.png

ADDED

|

Git LFS Details

|

example_image/medieval-bed.jpeg

ADDED

|

example_image/sun.png

ADDED

|

example_image/train.png

ADDED

|

Git LFS Details

|

example_image/whale.png

ADDED

|

inversion.py

ADDED

|

@@ -0,0 +1,125 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Copyright 2023 Google LLC

|

| 2 |

+

#

|

| 3 |

+

# Licensed under the Apache License, Version 2.0 (the "License");

|

| 4 |

+

# you may not use this file except in compliance with the License.

|

| 5 |

+

# You may obtain a copy of the License at

|

| 6 |

+

#

|

| 7 |

+

# http://www.apache.org/licenses/LICENSE-2.0

|

| 8 |

+

#

|

| 9 |

+

# Unless required by applicable law or agreed to in writing, software

|

| 10 |

+

# distributed under the License is distributed on an "AS IS" BASIS,

|

| 11 |

+

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 12 |

+

# See the License for the specific language governing permissions and

|

| 13 |

+

# limitations under the License.

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

from __future__ import annotations

|

| 17 |

+

from typing import Callable

|

| 18 |

+

from diffusers import StableDiffusionXLPipeline

|

| 19 |

+

import torch

|

| 20 |

+

from tqdm import tqdm

|

| 21 |

+

import numpy as np

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

T = torch.Tensor

|

| 25 |

+

TN = T | None

|

| 26 |

+

InversionCallback = Callable[[StableDiffusionXLPipeline, int, T, dict[str, T]], dict[str, T]]

|

| 27 |

+

|

| 28 |

+

|

| 29 |

+

def _get_text_embeddings(prompt: str, tokenizer, text_encoder, device):

|

| 30 |

+

# Tokenize text and get embeddings

|

| 31 |

+

text_inputs = tokenizer(prompt, padding='max_length', max_length=tokenizer.model_max_length, truncation=True, return_tensors='pt')

|

| 32 |

+

text_input_ids = text_inputs.input_ids

|

| 33 |

+

|

| 34 |

+

with torch.no_grad():

|

| 35 |

+

prompt_embeds = text_encoder(

|

| 36 |

+

text_input_ids.to(device),

|

| 37 |

+

output_hidden_states=True,

|

| 38 |

+

)

|

| 39 |

+

|

| 40 |

+

pooled_prompt_embeds = prompt_embeds[0]

|

| 41 |

+

prompt_embeds = prompt_embeds.hidden_states[-2]

|

| 42 |

+

if prompt == '':

|

| 43 |

+

negative_prompt_embeds = torch.zeros_like(prompt_embeds)

|

| 44 |

+

negative_pooled_prompt_embeds = torch.zeros_like(pooled_prompt_embeds)

|

| 45 |

+

return negative_prompt_embeds, negative_pooled_prompt_embeds

|

| 46 |

+

return prompt_embeds, pooled_prompt_embeds

|

| 47 |

+

|

| 48 |

+

|

| 49 |

+

def _encode_text_sdxl(model: StableDiffusionXLPipeline, prompt: str) -> tuple[dict[str, T], T]:

|

| 50 |

+

device = model._execution_device

|

| 51 |

+

prompt_embeds, pooled_prompt_embeds, = _get_text_embeddings(prompt, model.tokenizer, model.text_encoder, device)

|

| 52 |

+

prompt_embeds_2, pooled_prompt_embeds2, = _get_text_embeddings( prompt, model.tokenizer_2, model.text_encoder_2, device)

|

| 53 |

+

prompt_embeds = torch.cat((prompt_embeds, prompt_embeds_2), dim=-1)

|

| 54 |

+

text_encoder_projection_dim = model.text_encoder_2.config.projection_dim

|

| 55 |

+

add_time_ids = model._get_add_time_ids((1024, 1024), (0, 0), (1024, 1024), torch.float16,

|

| 56 |

+

text_encoder_projection_dim).to(device)

|

| 57 |

+

added_cond_kwargs = {"text_embeds": pooled_prompt_embeds2, "time_ids": add_time_ids}

|

| 58 |

+

return added_cond_kwargs, prompt_embeds

|

| 59 |

+

|

| 60 |

+

|

| 61 |

+

def _encode_text_sdxl_with_negative(model: StableDiffusionXLPipeline, prompt: str) -> tuple[dict[str, T], T]:

|

| 62 |

+

added_cond_kwargs, prompt_embeds = _encode_text_sdxl(model, prompt)

|

| 63 |

+

added_cond_kwargs_uncond, prompt_embeds_uncond = _encode_text_sdxl(model, "")

|

| 64 |

+

prompt_embeds = torch.cat((prompt_embeds_uncond, prompt_embeds, ))

|

| 65 |

+

added_cond_kwargs = {"text_embeds": torch.cat((added_cond_kwargs_uncond["text_embeds"], added_cond_kwargs["text_embeds"])),

|

| 66 |

+

"time_ids": torch.cat((added_cond_kwargs_uncond["time_ids"], added_cond_kwargs["time_ids"])),}

|

| 67 |

+

return added_cond_kwargs, prompt_embeds

|

| 68 |

+

|

| 69 |

+

|

| 70 |

+

def _encode_image(model: StableDiffusionXLPipeline, image: np.ndarray) -> T:

|

| 71 |

+

model.vae.to(dtype=torch.float32)

|

| 72 |

+

image = torch.from_numpy(image).float() / 255.

|

| 73 |

+

image = (image * 2 - 1).permute(2, 0, 1).unsqueeze(0)

|

| 74 |

+

latent = model.vae.encode(image.to(model.vae.device))['latent_dist'].mean * model.vae.config.scaling_factor

|

| 75 |

+

model.vae.to(dtype=torch.float16)

|

| 76 |

+

return latent

|

| 77 |

+

|

| 78 |

+

|

| 79 |

+

def _next_step(model: StableDiffusionXLPipeline, model_output: T, timestep: int, sample: T) -> T:

|

| 80 |

+

timestep, next_timestep = min(timestep - model.scheduler.config.num_train_timesteps // model.scheduler.num_inference_steps, 999), timestep

|

| 81 |

+

alpha_prod_t = model.scheduler.alphas_cumprod[int(timestep)] if timestep >= 0 else model.scheduler.final_alpha_cumprod

|

| 82 |

+

alpha_prod_t_next = model.scheduler.alphas_cumprod[int(next_timestep)]

|

| 83 |

+

beta_prod_t = 1 - alpha_prod_t

|

| 84 |

+

next_original_sample = (sample - beta_prod_t ** 0.5 * model_output) / alpha_prod_t ** 0.5

|

| 85 |

+

next_sample_direction = (1 - alpha_prod_t_next) ** 0.5 * model_output

|

| 86 |

+

next_sample = alpha_prod_t_next ** 0.5 * next_original_sample + next_sample_direction

|

| 87 |

+

return next_sample

|

| 88 |

+

|

| 89 |

+

|

| 90 |

+

def _get_noise_pred(model: StableDiffusionXLPipeline, latent: T, t: T, context: T, guidance_scale: float, added_cond_kwargs: dict[str, T]):

|

| 91 |

+

latents_input = torch.cat([latent] * 2)

|

| 92 |

+

noise_pred = model.unet(latents_input, t, encoder_hidden_states=context, added_cond_kwargs=added_cond_kwargs)["sample"]

|

| 93 |

+

noise_pred_uncond, noise_prediction_text = noise_pred.chunk(2)

|

| 94 |

+

noise_pred = noise_pred_uncond + guidance_scale * (noise_prediction_text - noise_pred_uncond)

|

| 95 |

+

# latents = next_step(model, noise_pred, t, latent)

|

| 96 |

+

return noise_pred

|

| 97 |

+

|

| 98 |

+

|

| 99 |

+

def _ddim_loop(model: StableDiffusionXLPipeline, z0, prompt, guidance_scale) -> T:

|

| 100 |

+

all_latent = [z0]

|

| 101 |

+

added_cond_kwargs, text_embedding = _encode_text_sdxl_with_negative(model, prompt)

|

| 102 |

+

latent = z0.clone().detach().half()

|

| 103 |

+

for i in tqdm(range(model.scheduler.num_inference_steps)):

|

| 104 |

+

t = model.scheduler.timesteps[len(model.scheduler.timesteps) - i - 1]

|

| 105 |

+

noise_pred = _get_noise_pred(model, latent, t, text_embedding, guidance_scale, added_cond_kwargs)

|

| 106 |

+

latent = _next_step(model, noise_pred, t, latent)

|

| 107 |

+

all_latent.append(latent)

|

| 108 |

+

return torch.cat(all_latent).flip(0)

|