# Italian CLIP

....

# Novel Contributions

The original CLIP model was trained on 400 million image-text pairs. This amount of data is not available for Italian, and the only captioning datasets in the literature are MSCOCO-IT (a translated version of MSCOCO) and WIT. To get competitive results, we followed three directions: 1) more data, 2) better augmentation, and 3) better training.

## More Data

We had to deal with the fact that we do not have the data that OpenAI used to train CLIP.

Thus, we opted for data of medium-to-high quality.

We considered three main sources of data:

+ WIT. Most of these captions describe ontological knowledge and encyclopedic facts (e.g., "Roberto Baggio in 1994"). Without further context, this kind of text is not very useful for learning a good mapping between images and captions. On the other hand, it is written in Italian and it is of good quality. To avoid polluting the data with captions that are not meaningful, we POS-tagged the captions and removed all those in which 80% or more of the tokens are proper nouns (PROPN).

+ MSCOCO-IT

+ CC
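The PROPN filter applied to WIT can be sketched as follows. This is a minimal illustration, not the project's actual code: the helper names `propn_ratio` and `keep_caption` are hypothetical, the ratio is assumed to be computed over tokens, and each caption is assumed to be already tagged with Universal POS tags (for example, spaCy's `Token.pos_` attribute returns coarse tags such as `PROPN`). The tags in the toy data are assigned by hand.

```python
from typing import List, Tuple

# Assumption: a caption is pre-tagged as (token, Universal POS tag) pairs.
TaggedCaption = List[Tuple[str, str]]


def propn_ratio(tagged: TaggedCaption) -> float:
    """Share of tokens tagged as proper nouns (PROPN); 0.0 for an empty caption."""
    if not tagged:
        return 0.0
    n_propn = sum(1 for _, pos in tagged if pos == "PROPN")
    return n_propn / len(tagged)


def keep_caption(tagged: TaggedCaption, threshold: float = 0.8) -> bool:
    """Keep a caption only if fewer than `threshold` of its tokens are PROPN."""
    return propn_ratio(tagged) < threshold


# Hand-tagged toy captions, for illustration only.
captions = [
    [("Roberto", "PROPN"), ("Baggio", "PROPN")],          # 100% PROPN -> dropped
    [("un", "DET"), ("gatto", "NOUN"), ("nero", "ADJ")],  # 0% PROPN -> kept
    [("Stadio", "PROPN"), ("Olimpico", "PROPN"), ("di", "ADP"), ("Roma", "PROPN")],  # 75% -> kept
]
kept = [c for c in captions if keep_caption(c)]
print(len(kept))  # 2
```

With an 80% threshold, a caption made only of names is discarded, while one that is 75% proper nouns falls just below the cut-off and is kept.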

## Better Augmentations

## Better Training

### Optimizer

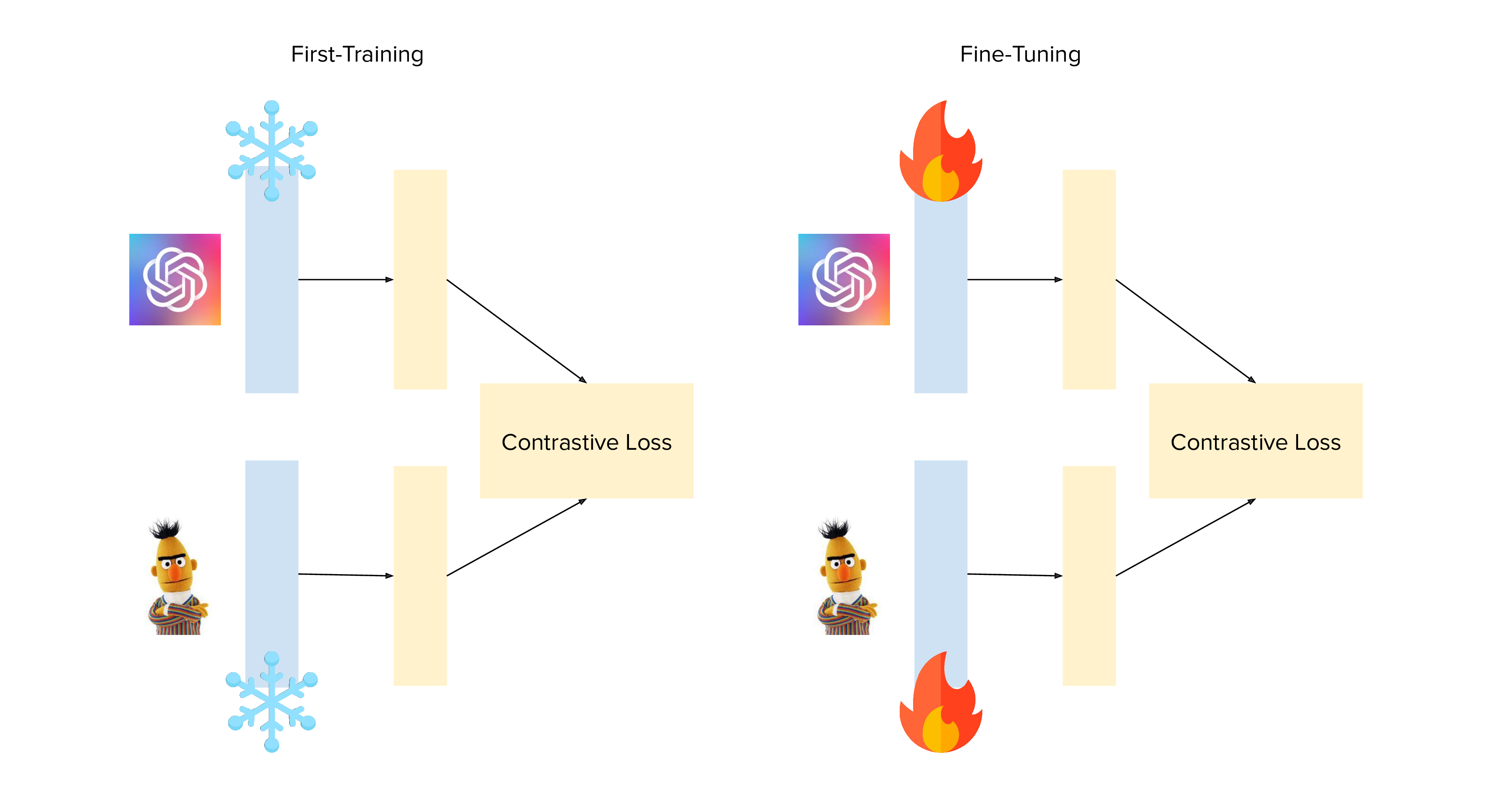

### Backbone Freezing

# Scientific Validity

Those images are definitely cool and interesting, but a model is nothing without validation.

To better understand how well our CLIP-Italian model works, we ran an experimental evaluation. Since this is the first CLIP-based model for Italian, we used the multilingual CLIP model (mCLIP) as a comparison baseline.

## mCLIP

## Tasks

We selected two different tasks:

+ image-retrieval

+ zero-shot classification

### Image Retrieval

| MRR             | CLIP-Italian | mCLIP |
| --------------- | ------------ | ----- |
| MRR@1           |              |       |
| MRR@5           |              |       |
| MRR@10          |              |       |
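For reference, MRR@k can be computed as below. This is a sketch under our own assumptions, not the evaluation script behind the table: `sim[q][j]` is a hypothetical similarity score between caption `q` and image `j` (e.g., the cosine similarity of their embeddings), `gold[q]` is the index of the image that caption `q` describes, and a query whose correct image falls outside the top k contributes 0.

```python
from typing import List


def mrr_at_k(sim: List[List[float]], gold: List[int], k: int) -> float:
    """Mean reciprocal rank of the correct image, truncated at rank k."""
    total = 0.0
    for scores, g in zip(sim, gold):
        # Rank image indices by similarity to the caption, most similar first.
        ranking = sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)
        top_k = ranking[:k]
        if g in top_k:
            total += 1.0 / (top_k.index(g) + 1)  # ranks are 1-based
    return total / len(gold)


# Hypothetical scores: the correct image for caption q is image q.
sim = [
    [0.9, 0.1, 0.3],  # correct image ranked 1st
    [0.7, 0.6, 0.2],  # correct image ranked 2nd
    [0.8, 0.5, 0.1],  # correct image ranked 3rd
]
gold = [0, 1, 2]

for k in (1, 5, 10):
    print(k, mrr_at_k(sim, gold, k))
```

Truncating at k means MRR@1 reduces to plain top-1 retrieval accuracy, while larger k gives partial credit to correct images ranked lower.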

### Zero-shot classification

| Accuracy        | CLIP-Italian | mCLIP |
| --------------- | ------------ | ----- |
| Accuracy@1      |              |       |
| Accuracy@5      |              |       |
| Accuracy@10     |              |       |
| Accuracy@100    | 81.08        | 67.11 |
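Accuracy@k for zero-shot classification can be sketched in the same spirit. Again, this is an illustrative assumption rather than the project's code: each class is assumed to be represented by the text embedding of an Italian prompt for its label, classes are ranked by cosine similarity to the image embedding, and a prediction counts as correct at k if the true class is among the top k. The function name and the 2-dimensional embeddings are made up.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def zero_shot_topk_accuracy(
    image_embs: List[List[float]],
    label_embs: List[List[float]],
    true_labels: List[int],
    k: int,
) -> float:
    """Fraction of images whose true class is among the k most similar labels."""
    hits = 0
    for img, y in zip(image_embs, true_labels):
        scores = [cosine(img, lab) for lab in label_embs]
        # Rank class indices by similarity to the image, most similar first.
        ranking = sorted(range(len(label_embs)), key=lambda c: scores[c], reverse=True)
        if y in ranking[:k]:
            hits += 1
    return hits / len(true_labels)


# Hypothetical toy embeddings: one row per class prompt, one row per image.
label_embs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
image_embs = [[0.9, 0.1], [0.2, 1.0], [1.0, -0.5]]
true_labels = [0, 2, 1]

for k in (1, 2, 3):
    print(k, zero_shot_topk_accuracy(image_embs, label_embs, true_labels, k))
```

The toy example reaches 1.0 at k = 3 only because it has three classes; Accuracy@k is informative only when k is well below the number of classes.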

# Broader Outlook

# Other Notes

This README has been designed using resources from Flaticon.com.