Spaces:

Running

Running

LinB203

commited on

Commit

•

5c98ca3

1

Parent(s):

c4ba24f

add project files

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- README.md +132 -13

- a_cls/__pycache__/precision.cpython-38.pyc +0 -0

- a_cls/__pycache__/stats.cpython-38.pyc +0 -0

- a_cls/__pycache__/zero_shot.cpython-38.pyc +0 -0

- a_cls/__pycache__/zero_shot_classifier.cpython-38.pyc +0 -0

- a_cls/__pycache__/zero_shot_metadata.cpython-38.pyc +0 -0

- a_cls/__pycache__/zeroshot_cls.cpython-38.pyc +0 -0

- a_cls/class_labels_indices.csv +528 -0

- a_cls/dataloader.py +90 -0

- a_cls/datasets.py +93 -0

- a_cls/filter_eval_audio.py +21 -0

- a_cls/precision.py +12 -0

- a_cls/stats.py +57 -0

- a_cls/util.py +306 -0

- a_cls/zero_shot.py +234 -0

- a_cls/zero_shot_classifier.py +111 -0

- a_cls/zero_shot_metadata.py +183 -0

- a_cls/zeroshot_cls.py +46 -0

- app.py +327 -0

- assets/languagebind.jpg +0 -0

- assets/logo.png +0 -0

- assets/res1.jpg +0 -0

- assets/res2.jpg +0 -0

- d_cls/__pycache__/precision.cpython-38.pyc +0 -0

- d_cls/__pycache__/zero_shot.cpython-38.pyc +0 -0

- d_cls/__pycache__/zero_shot_classifier.cpython-38.pyc +0 -0

- d_cls/__pycache__/zero_shot_metadata.cpython-38.pyc +0 -0

- d_cls/__pycache__/zeroshot_cls.cpython-38.pyc +0 -0

- d_cls/cp_zero_shot_metadata.py +117 -0

- d_cls/datasets.py +20 -0

- d_cls/precision.py +12 -0

- d_cls/zero_shot.py +90 -0

- d_cls/zero_shot_classifier.py +111 -0

- d_cls/zero_shot_metadata.py +117 -0

- d_cls/zeroshot_cls.py +47 -0

- data/__pycache__/base_datasets.cpython-38.pyc +0 -0

- data/__pycache__/build_datasets.cpython-38.pyc +0 -0

- data/__pycache__/new_loadvat.cpython-38.pyc +0 -0

- data/__pycache__/process_audio.cpython-38.pyc +0 -0

- data/__pycache__/process_depth.cpython-38.pyc +0 -0

- data/__pycache__/process_image.cpython-38.pyc +0 -0

- data/__pycache__/process_text.cpython-38.pyc +0 -0

- data/__pycache__/process_thermal.cpython-38.pyc +0 -0

- data/__pycache__/process_video.cpython-38.pyc +0 -0

- data/base_datasets.py +159 -0

- data/bpe_simple_vocab_16e6.txt.gz +3 -0

- data/build_datasets.py +174 -0

- data/new_loadvat.py +498 -0

- data/process_audio.py +131 -0

- data/process_depth.py +55 -0

README.md

CHANGED

|

@@ -1,13 +1,132 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

| 13 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

<!---

|

| 2 |

+

Copyright 2023 The OFA-Sys Team.

|

| 3 |

+

All rights reserved.

|

| 4 |

+

This source code is licensed under the Apache 2.0 license found in the LICENSE file in the root directory.

|

| 5 |

+

-->

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

<p align="center">

|

| 10 |

+

<img src="assets/logo.png" width="250" />

|

| 11 |

+

<p>

|

| 12 |

+

<h2 align="center"> LanguageBind: Extending Video-Language Pretraining to N-modality by Language-based Semantic Alignment </h2>

|

| 13 |

+

|

| 14 |

+

<h5 align="center"> If you like our project, please give us a star ✨ on Github for latest update. </h2>

|

| 15 |

+

|

| 16 |

+

[//]: # (<p align="center">)

|

| 17 |

+

|

| 18 |

+

[//]: # ( 📖 <a href="https://arxiv.org/abs/2305.11172">Paper</a>  |  <a href="datasets.md">Datasets</a>)

|

| 19 |

+

|

| 20 |

+

[//]: # (</p>)

|

| 21 |

+

<br>

|

| 22 |

+

|

| 23 |

+

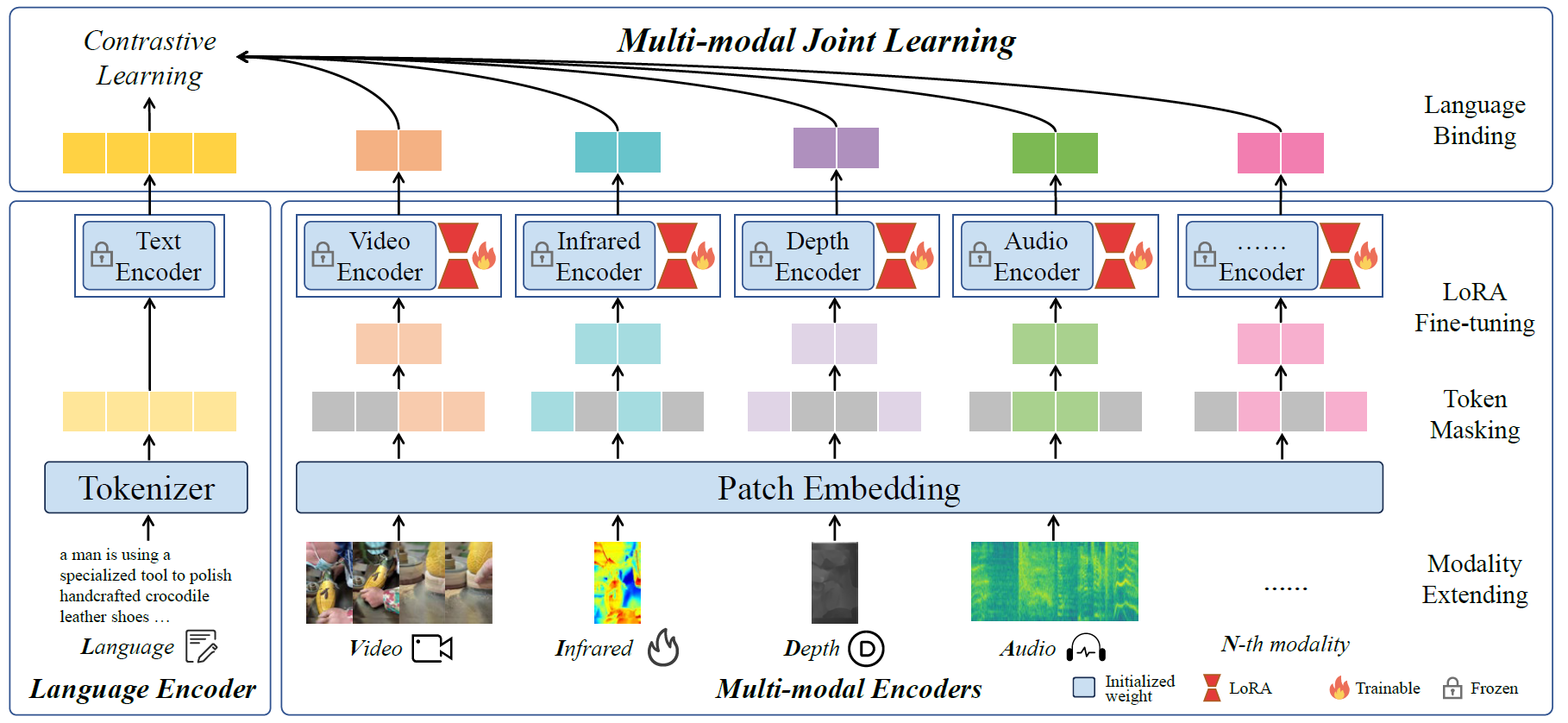

LanguageBind is a language-centric multimodal pretraining approach, taking the language as the bind across different modalities because the language modality is well-explored and contains rich semantics. As a result, **all modalities are mapped to a shared feature space**, implementing multimodal semantic alignment. While LanguageBind ensures that we can extend VL modalities to N modalities, we also need a high-quality dataset with alignment data pairs centered on language. We thus propose **VIDAL-10M with 10 Million data with Video, Infrared, Depth, Audio and their corresponding Language.** In our VIDAL-10M, all videos are from short video platforms with **complete semantics** rather than truncated segments from long videos, and all the video, depth, infrared, and audio modalities are aligned to their textual descriptions

|

| 24 |

+

|

| 25 |

+

We have **open-sourced the VIDAL-10M dataset**, which greatly expands the data beyond visual modalities. The following figure shows the architecture of LanguageBind. LanguageBind can be easily extended to segmentation, detection tasks, and potentially to unlimited modalities.

|

| 26 |

+

|

| 27 |

+

<p align="center">

|

| 28 |

+

<img src="assets/languagebind.jpg" width=100%>

|

| 29 |

+

</p>

|

| 30 |

+

|

| 31 |

+

<br>

|

| 32 |

+

|

| 33 |

+

|

| 34 |

+

# News

|

| 35 |

+

* **2023.10.02:** Released the code. Training & validating scripts and checkpoints.

|

| 36 |

+

<br></br>

|

| 37 |

+

# Online Demo

|

| 38 |

+

Coming soon...

|

| 39 |

+

|

| 40 |

+

# Models and Results

|

| 41 |

+

## Model Zoo

|

| 42 |

+

We list the parameters and pretrained checkpoints of LanguageBind below. Note that LanguageBind can be disassembled into different branches to handle different tasks.

|

| 43 |

+

The cache comes from OpenCLIP, which we downloaded from HuggingFace. Note that the original cache for pretrained weights is the Image-Language weights, just a few more HF profiles.

|

| 44 |

+

We additionally trained Video-Language with the LanguageBind method, which is stronger than on CLIP4Clip framework.

|

| 45 |

+

<table border="1" width="100%">

|

| 46 |

+

<tr align="center">

|

| 47 |

+

<th>Model</th><th>Ckpt</th><th>Params</th><th>Modality Hidden size</th><th>Modality Layers</th><th>Language Hidden size</th><th>Language Layers</th>

|

| 48 |

+

</tr>

|

| 49 |

+

<tr align="center">

|

| 50 |

+

<td>Video-Language</td><td>TODO</td><td>330M</td><td>1024</td><td>24</td><td>768</td><td>12</td>

|

| 51 |

+

</tr>

|

| 52 |

+

</tr>

|

| 53 |

+

<tr align="center">

|

| 54 |

+

<td>Audio-Language</td><td><a href="https://pan.baidu.com/s/1PFN8aGlnzsOkGjVk6Mzlfg?pwd=sisz">BaiDu</a></td><td>330M</td><td>1024</td><td>24</td><td>768</td><td>12</td>

|

| 55 |

+

</tr>

|

| 56 |

+

</tr>

|

| 57 |

+

<tr align="center">

|

| 58 |

+

<td>Depth-Language</td><td><a href="https://pan.baidu.com/s/1YWlaxqTRhpGvXqCyBbmhyg?pwd=olom">BaiDu</a></td><td>330M</td><td>1024</td><td>24</td><td>768</td><td>12</td>

|

| 59 |

+

</tr>

|

| 60 |

+

</tr>

|

| 61 |

+

<tr align="center">

|

| 62 |

+

<td>Thermal(Infrared)-Language</td><td><a href="https://pan.baidu.com/s/1luUyyKxhadKKc1nk1wizWg?pwd=raf5">BaiDu</a></td><td>330M</td><td>1024</td><td>24</td><td>768</td><td>12</td>

|

| 63 |

+

</tr>

|

| 64 |

+

</tr>

|

| 65 |

+

<tr align="center">

|

| 66 |

+

<td>Image-Language</td><td><a href="https://pan.baidu.com/s/1VBE4OjecMTeIzU08axfFHA?pwd=7j0m">BaiDu</a></td><td>330M</td><td>1024</td><td>24</td><td>768</td><td>12</td>

|

| 67 |

+

</tr>

|

| 68 |

+

</tr>

|

| 69 |

+

<tr align="center">

|

| 70 |

+

<td>Cache for pretrained weight</td><td><a href="https://pan.baidu.com/s/1Tytx5MDSo96rwUmQZVY1Ww?pwd=c7r0">BaiDu</a></td><td>330M</td><td>1024</td><td>24</td><td>768</td><td>12</td>

|

| 71 |

+

</tr>

|

| 72 |

+

|

| 73 |

+

</table>

|

| 74 |

+

<br>

|

| 75 |

+

|

| 76 |

+

## Results

|

| 77 |

+

Zero-shot Video-Text Retrieval Performance on MSR-VTT and MSVD datasets. We focus on reporting the parameters of the vision

|

| 78 |

+

encoder. Our experiments are based on 3 million video-text pairs of VIDAL-10M, and we train on the CLIP4Clip framework..

|

| 79 |

+

<p align="center">

|

| 80 |

+

<img src="assets/res1.jpg" width=100%>

|

| 81 |

+

</p>

|

| 82 |

+

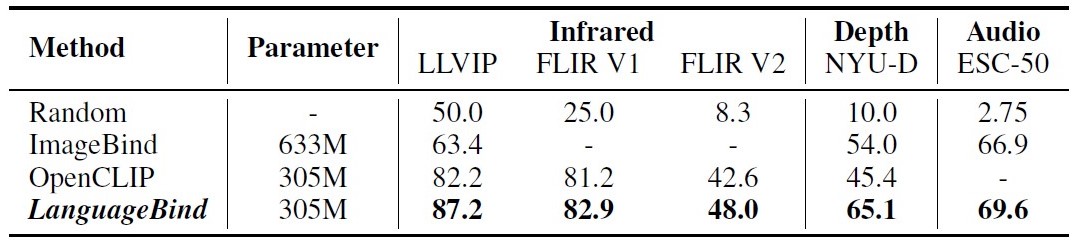

Infrared-Language, Depth-Language, and Audio-Language zero-shot classification. We report the top-1 classification accuracy for all datasets.

|

| 83 |

+

<p align="center">

|

| 84 |

+

<img src="assets/res2.jpg" width=100%>

|

| 85 |

+

</p>

|

| 86 |

+

|

| 87 |

+

|

| 88 |

+

<br></br>

|

| 89 |

+

|

| 90 |

+

# Requirements and Installation

|

| 91 |

+

* Python >= 3.8

|

| 92 |

+

* Pytorch >= 1.13.0

|

| 93 |

+

* CUDA Version >= 10.2 (recommend 11.6)

|

| 94 |

+

* Install required packages:

|

| 95 |

+

```bash

|

| 96 |

+

git clone https://github.com/PKU-YuanGroup/LanguageBind

|

| 97 |

+

cd LanguageBind

|

| 98 |

+

pip install -r requirements.txt

|

| 99 |

+

```

|

| 100 |

+

|

| 101 |

+

<br></br>

|

| 102 |

+

|

| 103 |

+

# VIDAL-10M

|

| 104 |

+

Release the dataset after publication...

|

| 105 |

+

|

| 106 |

+

<br></br>

|

| 107 |

+

|

| 108 |

+

# Training & Inference

|

| 109 |

+

Release run scripts, details coming soon...

|

| 110 |

+

|

| 111 |

+

<br></br>

|

| 112 |

+

|

| 113 |

+

# Downstream datasets

|

| 114 |

+

Coming soon...

|

| 115 |

+

|

| 116 |

+

<br></br>

|

| 117 |

+

|

| 118 |

+

# Acknowledgement

|

| 119 |

+

* [OpenCLIP](https://github.com/mlfoundations/open_clip) An open source pretraining framework.

|

| 120 |

+

|

| 121 |

+

<br></br>

|

| 122 |

+

|

| 123 |

+

|

| 124 |

+

# Citation

|

| 125 |

+

|

| 126 |

+

If you find our paper and code useful in your research, please consider giving a star :star: and citation :pencil: :)

|

| 127 |

+

|

| 128 |

+

<br></br>

|

| 129 |

+

|

| 130 |

+

```BibTeX

|

| 131 |

+

|

| 132 |

+

```

|

a_cls/__pycache__/precision.cpython-38.pyc

ADDED

|

Binary file (582 Bytes). View file

|

|

|

a_cls/__pycache__/stats.cpython-38.pyc

ADDED

|

Binary file (1.45 kB). View file

|

|

|

a_cls/__pycache__/zero_shot.cpython-38.pyc

ADDED

|

Binary file (6.38 kB). View file

|

|

|

a_cls/__pycache__/zero_shot_classifier.cpython-38.pyc

ADDED

|

Binary file (4.25 kB). View file

|

|

|

a_cls/__pycache__/zero_shot_metadata.cpython-38.pyc

ADDED

|

Binary file (16.7 kB). View file

|

|

|

a_cls/__pycache__/zeroshot_cls.cpython-38.pyc

ADDED

|

Binary file (1.44 kB). View file

|

|

|

a_cls/class_labels_indices.csv

ADDED

|

@@ -0,0 +1,528 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

index,mid,display_name

|

| 2 |

+

0,/m/09x0r,"Speech"

|

| 3 |

+

1,/m/05zppz,"Male speech, man speaking"

|

| 4 |

+

2,/m/02zsn,"Female speech, woman speaking"

|

| 5 |

+

3,/m/0ytgt,"Child speech, kid speaking"

|

| 6 |

+

4,/m/01h8n0,"Conversation"

|

| 7 |

+

5,/m/02qldy,"Narration, monologue"

|

| 8 |

+

6,/m/0261r1,"Babbling"

|

| 9 |

+

7,/m/0brhx,"Speech synthesizer"

|

| 10 |

+

8,/m/07p6fty,"Shout"

|

| 11 |

+

9,/m/07q4ntr,"Bellow"

|

| 12 |

+

10,/m/07rwj3x,"Whoop"

|

| 13 |

+

11,/m/07sr1lc,"Yell"

|

| 14 |

+

12,/m/04gy_2,"Battle cry"

|

| 15 |

+

13,/t/dd00135,"Children shouting"

|

| 16 |

+

14,/m/03qc9zr,"Screaming"

|

| 17 |

+

15,/m/02rtxlg,"Whispering"

|

| 18 |

+

16,/m/01j3sz,"Laughter"

|

| 19 |

+

17,/t/dd00001,"Baby laughter"

|

| 20 |

+

18,/m/07r660_,"Giggle"

|

| 21 |

+

19,/m/07s04w4,"Snicker"

|

| 22 |

+

20,/m/07sq110,"Belly laugh"

|

| 23 |

+

21,/m/07rgt08,"Chuckle, chortle"

|

| 24 |

+

22,/m/0463cq4,"Crying, sobbing"

|

| 25 |

+

23,/t/dd00002,"Baby cry, infant cry"

|

| 26 |

+

24,/m/07qz6j3,"Whimper"

|

| 27 |

+

25,/m/07qw_06,"Wail, moan"

|

| 28 |

+

26,/m/07plz5l,"Sigh"

|

| 29 |

+

27,/m/015lz1,"Singing"

|

| 30 |

+

28,/m/0l14jd,"Choir"

|

| 31 |

+

29,/m/01swy6,"Yodeling"

|

| 32 |

+

30,/m/02bk07,"Chant"

|

| 33 |

+

31,/m/01c194,"Mantra"

|

| 34 |

+

32,/t/dd00003,"Male singing"

|

| 35 |

+

33,/t/dd00004,"Female singing"

|

| 36 |

+

34,/t/dd00005,"Child singing"

|

| 37 |

+

35,/t/dd00006,"Synthetic singing"

|

| 38 |

+

36,/m/06bxc,"Rapping"

|

| 39 |

+

37,/m/02fxyj,"Humming"

|

| 40 |

+

38,/m/07s2xch,"Groan"

|

| 41 |

+

39,/m/07r4k75,"Grunt"

|

| 42 |

+

40,/m/01w250,"Whistling"

|

| 43 |

+

41,/m/0lyf6,"Breathing"

|

| 44 |

+

42,/m/07mzm6,"Wheeze"

|

| 45 |

+

43,/m/01d3sd,"Snoring"

|

| 46 |

+

44,/m/07s0dtb,"Gasp"

|

| 47 |

+

45,/m/07pyy8b,"Pant"

|

| 48 |

+

46,/m/07q0yl5,"Snort"

|

| 49 |

+

47,/m/01b_21,"Cough"

|

| 50 |

+

48,/m/0dl9sf8,"Throat clearing"

|

| 51 |

+

49,/m/01hsr_,"Sneeze"

|

| 52 |

+

50,/m/07ppn3j,"Sniff"

|

| 53 |

+

51,/m/06h7j,"Run"

|

| 54 |

+

52,/m/07qv_x_,"Shuffle"

|

| 55 |

+

53,/m/07pbtc8,"Walk, footsteps"

|

| 56 |

+

54,/m/03cczk,"Chewing, mastication"

|

| 57 |

+

55,/m/07pdhp0,"Biting"

|

| 58 |

+

56,/m/0939n_,"Gargling"

|

| 59 |

+

57,/m/01g90h,"Stomach rumble"

|

| 60 |

+

58,/m/03q5_w,"Burping, eructation"

|

| 61 |

+

59,/m/02p3nc,"Hiccup"

|

| 62 |

+

60,/m/02_nn,"Fart"

|

| 63 |

+

61,/m/0k65p,"Hands"

|

| 64 |

+

62,/m/025_jnm,"Finger snapping"

|

| 65 |

+

63,/m/0l15bq,"Clapping"

|

| 66 |

+

64,/m/01jg02,"Heart sounds, heartbeat"

|

| 67 |

+

65,/m/01jg1z,"Heart murmur"

|

| 68 |

+

66,/m/053hz1,"Cheering"

|

| 69 |

+

67,/m/028ght,"Applause"

|

| 70 |

+

68,/m/07rkbfh,"Chatter"

|

| 71 |

+

69,/m/03qtwd,"Crowd"

|

| 72 |

+

70,/m/07qfr4h,"Hubbub, speech noise, speech babble"

|

| 73 |

+

71,/t/dd00013,"Children playing"

|

| 74 |

+

72,/m/0jbk,"Animal"

|

| 75 |

+

73,/m/068hy,"Domestic animals, pets"

|

| 76 |

+

74,/m/0bt9lr,"Dog"

|

| 77 |

+

75,/m/05tny_,"Bark"

|

| 78 |

+

76,/m/07r_k2n,"Yip"

|

| 79 |

+

77,/m/07qf0zm,"Howl"

|

| 80 |

+

78,/m/07rc7d9,"Bow-wow"

|

| 81 |

+

79,/m/0ghcn6,"Growling"

|

| 82 |

+

80,/t/dd00136,"Whimper (dog)"

|

| 83 |

+

81,/m/01yrx,"Cat"

|

| 84 |

+

82,/m/02yds9,"Purr"

|

| 85 |

+

83,/m/07qrkrw,"Meow"

|

| 86 |

+

84,/m/07rjwbb,"Hiss"

|

| 87 |

+

85,/m/07r81j2,"Caterwaul"

|

| 88 |

+

86,/m/0ch8v,"Livestock, farm animals, working animals"

|

| 89 |

+

87,/m/03k3r,"Horse"

|

| 90 |

+

88,/m/07rv9rh,"Clip-clop"

|

| 91 |

+

89,/m/07q5rw0,"Neigh, whinny"

|

| 92 |

+

90,/m/01xq0k1,"Cattle, bovinae"

|

| 93 |

+

91,/m/07rpkh9,"Moo"

|

| 94 |

+

92,/m/0239kh,"Cowbell"

|

| 95 |

+

93,/m/068zj,"Pig"

|

| 96 |

+

94,/t/dd00018,"Oink"

|

| 97 |

+

95,/m/03fwl,"Goat"

|

| 98 |

+

96,/m/07q0h5t,"Bleat"

|

| 99 |

+

97,/m/07bgp,"Sheep"

|

| 100 |

+

98,/m/025rv6n,"Fowl"

|

| 101 |

+

99,/m/09b5t,"Chicken, rooster"

|

| 102 |

+

100,/m/07st89h,"Cluck"

|

| 103 |

+

101,/m/07qn5dc,"Crowing, cock-a-doodle-doo"

|

| 104 |

+

102,/m/01rd7k,"Turkey"

|

| 105 |

+

103,/m/07svc2k,"Gobble"

|

| 106 |

+

104,/m/09ddx,"Duck"

|

| 107 |

+

105,/m/07qdb04,"Quack"

|

| 108 |

+

106,/m/0dbvp,"Goose"

|

| 109 |

+

107,/m/07qwf61,"Honk"

|

| 110 |

+

108,/m/01280g,"Wild animals"

|

| 111 |

+

109,/m/0cdnk,"Roaring cats (lions, tigers)"

|

| 112 |

+

110,/m/04cvmfc,"Roar"

|

| 113 |

+

111,/m/015p6,"Bird"

|

| 114 |

+

112,/m/020bb7,"Bird vocalization, bird call, bird song"

|

| 115 |

+

113,/m/07pggtn,"Chirp, tweet"

|

| 116 |

+

114,/m/07sx8x_,"Squawk"

|

| 117 |

+

115,/m/0h0rv,"Pigeon, dove"

|

| 118 |

+

116,/m/07r_25d,"Coo"

|

| 119 |

+

117,/m/04s8yn,"Crow"

|

| 120 |

+

118,/m/07r5c2p,"Caw"

|

| 121 |

+

119,/m/09d5_,"Owl"

|

| 122 |

+

120,/m/07r_80w,"Hoot"

|

| 123 |

+

121,/m/05_wcq,"Bird flight, flapping wings"

|

| 124 |

+

122,/m/01z5f,"Canidae, dogs, wolves"

|

| 125 |

+

123,/m/06hps,"Rodents, rats, mice"

|

| 126 |

+

124,/m/04rmv,"Mouse"

|

| 127 |

+

125,/m/07r4gkf,"Patter"

|

| 128 |

+

126,/m/03vt0,"Insect"

|

| 129 |

+

127,/m/09xqv,"Cricket"

|

| 130 |

+

128,/m/09f96,"Mosquito"

|

| 131 |

+

129,/m/0h2mp,"Fly, housefly"

|

| 132 |

+

130,/m/07pjwq1,"Buzz"

|

| 133 |

+

131,/m/01h3n,"Bee, wasp, etc."

|

| 134 |

+

132,/m/09ld4,"Frog"

|

| 135 |

+

133,/m/07st88b,"Croak"

|

| 136 |

+

134,/m/078jl,"Snake"

|

| 137 |

+

135,/m/07qn4z3,"Rattle"

|

| 138 |

+

136,/m/032n05,"Whale vocalization"

|

| 139 |

+

137,/m/04rlf,"Music"

|

| 140 |

+

138,/m/04szw,"Musical instrument"

|

| 141 |

+

139,/m/0fx80y,"Plucked string instrument"

|

| 142 |

+

140,/m/0342h,"Guitar"

|

| 143 |

+

141,/m/02sgy,"Electric guitar"

|

| 144 |

+

142,/m/018vs,"Bass guitar"

|

| 145 |

+

143,/m/042v_gx,"Acoustic guitar"

|

| 146 |

+

144,/m/06w87,"Steel guitar, slide guitar"

|

| 147 |

+

145,/m/01glhc,"Tapping (guitar technique)"

|

| 148 |

+

146,/m/07s0s5r,"Strum"

|

| 149 |

+

147,/m/018j2,"Banjo"

|

| 150 |

+

148,/m/0jtg0,"Sitar"

|

| 151 |

+

149,/m/04rzd,"Mandolin"

|

| 152 |

+

150,/m/01bns_,"Zither"

|

| 153 |

+

151,/m/07xzm,"Ukulele"

|

| 154 |

+

152,/m/05148p4,"Keyboard (musical)"

|

| 155 |

+

153,/m/05r5c,"Piano"

|

| 156 |

+

154,/m/01s0ps,"Electric piano"

|

| 157 |

+

155,/m/013y1f,"Organ"

|

| 158 |

+

156,/m/03xq_f,"Electronic organ"

|

| 159 |

+

157,/m/03gvt,"Hammond organ"

|

| 160 |

+

158,/m/0l14qv,"Synthesizer"

|

| 161 |

+

159,/m/01v1d8,"Sampler"

|

| 162 |

+

160,/m/03q5t,"Harpsichord"

|

| 163 |

+

161,/m/0l14md,"Percussion"

|

| 164 |

+

162,/m/02hnl,"Drum kit"

|

| 165 |

+

163,/m/0cfdd,"Drum machine"

|

| 166 |

+

164,/m/026t6,"Drum"

|

| 167 |

+

165,/m/06rvn,"Snare drum"

|

| 168 |

+

166,/m/03t3fj,"Rimshot"

|

| 169 |

+

167,/m/02k_mr,"Drum roll"

|

| 170 |

+

168,/m/0bm02,"Bass drum"

|

| 171 |

+

169,/m/011k_j,"Timpani"

|

| 172 |

+

170,/m/01p970,"Tabla"

|

| 173 |

+

171,/m/01qbl,"Cymbal"

|

| 174 |

+

172,/m/03qtq,"Hi-hat"

|

| 175 |

+

173,/m/01sm1g,"Wood block"

|

| 176 |

+

174,/m/07brj,"Tambourine"

|

| 177 |

+

175,/m/05r5wn,"Rattle (instrument)"

|

| 178 |

+

176,/m/0xzly,"Maraca"

|

| 179 |

+

177,/m/0mbct,"Gong"

|

| 180 |

+

178,/m/016622,"Tubular bells"

|

| 181 |

+

179,/m/0j45pbj,"Mallet percussion"

|

| 182 |

+

180,/m/0dwsp,"Marimba, xylophone"

|

| 183 |

+

181,/m/0dwtp,"Glockenspiel"

|

| 184 |

+

182,/m/0dwt5,"Vibraphone"

|

| 185 |

+

183,/m/0l156b,"Steelpan"

|

| 186 |

+

184,/m/05pd6,"Orchestra"

|

| 187 |

+

185,/m/01kcd,"Brass instrument"

|

| 188 |

+

186,/m/0319l,"French horn"

|

| 189 |

+

187,/m/07gql,"Trumpet"

|

| 190 |

+

188,/m/07c6l,"Trombone"

|

| 191 |

+

189,/m/0l14_3,"Bowed string instrument"

|

| 192 |

+

190,/m/02qmj0d,"String section"

|

| 193 |

+

191,/m/07y_7,"Violin, fiddle"

|

| 194 |

+

192,/m/0d8_n,"Pizzicato"

|

| 195 |

+

193,/m/01xqw,"Cello"

|

| 196 |

+

194,/m/02fsn,"Double bass"

|

| 197 |

+

195,/m/085jw,"Wind instrument, woodwind instrument"

|

| 198 |

+

196,/m/0l14j_,"Flute"

|

| 199 |

+

197,/m/06ncr,"Saxophone"

|

| 200 |

+

198,/m/01wy6,"Clarinet"

|

| 201 |

+

199,/m/03m5k,"Harp"

|

| 202 |

+

200,/m/0395lw,"Bell"

|

| 203 |

+

201,/m/03w41f,"Church bell"

|

| 204 |

+

202,/m/027m70_,"Jingle bell"

|

| 205 |

+

203,/m/0gy1t2s,"Bicycle bell"

|

| 206 |

+

204,/m/07n_g,"Tuning fork"

|

| 207 |

+

205,/m/0f8s22,"Chime"

|

| 208 |

+

206,/m/026fgl,"Wind chime"

|

| 209 |

+

207,/m/0150b9,"Change ringing (campanology)"

|

| 210 |

+

208,/m/03qjg,"Harmonica"

|

| 211 |

+

209,/m/0mkg,"Accordion"

|

| 212 |

+

210,/m/0192l,"Bagpipes"

|

| 213 |

+

211,/m/02bxd,"Didgeridoo"

|

| 214 |

+

212,/m/0l14l2,"Shofar"

|

| 215 |

+

213,/m/07kc_,"Theremin"

|

| 216 |

+

214,/m/0l14t7,"Singing bowl"

|

| 217 |

+

215,/m/01hgjl,"Scratching (performance technique)"

|

| 218 |

+

216,/m/064t9,"Pop music"

|

| 219 |

+

217,/m/0glt670,"Hip hop music"

|

| 220 |

+

218,/m/02cz_7,"Beatboxing"

|

| 221 |

+

219,/m/06by7,"Rock music"

|

| 222 |

+

220,/m/03lty,"Heavy metal"

|

| 223 |

+

221,/m/05r6t,"Punk rock"

|

| 224 |

+

222,/m/0dls3,"Grunge"

|

| 225 |

+

223,/m/0dl5d,"Progressive rock"

|

| 226 |

+

224,/m/07sbbz2,"Rock and roll"

|

| 227 |

+

225,/m/05w3f,"Psychedelic rock"

|

| 228 |

+

226,/m/06j6l,"Rhythm and blues"

|

| 229 |

+

227,/m/0gywn,"Soul music"

|

| 230 |

+

228,/m/06cqb,"Reggae"

|

| 231 |

+

229,/m/01lyv,"Country"

|

| 232 |

+

230,/m/015y_n,"Swing music"

|

| 233 |

+

231,/m/0gg8l,"Bluegrass"

|

| 234 |

+

232,/m/02x8m,"Funk"

|

| 235 |

+

233,/m/02w4v,"Folk music"

|

| 236 |

+

234,/m/06j64v,"Middle Eastern music"

|

| 237 |

+

235,/m/03_d0,"Jazz"

|

| 238 |

+

236,/m/026z9,"Disco"

|

| 239 |

+

237,/m/0ggq0m,"Classical music"

|

| 240 |

+

238,/m/05lls,"Opera"

|

| 241 |

+

239,/m/02lkt,"Electronic music"

|

| 242 |

+

240,/m/03mb9,"House music"

|

| 243 |

+

241,/m/07gxw,"Techno"

|

| 244 |

+

242,/m/07s72n,"Dubstep"

|

| 245 |

+

243,/m/0283d,"Drum and bass"

|

| 246 |

+

244,/m/0m0jc,"Electronica"

|

| 247 |

+

245,/m/08cyft,"Electronic dance music"

|

| 248 |

+

246,/m/0fd3y,"Ambient music"

|

| 249 |

+

247,/m/07lnk,"Trance music"

|

| 250 |

+

248,/m/0g293,"Music of Latin America"

|

| 251 |

+

249,/m/0ln16,"Salsa music"

|

| 252 |

+

250,/m/0326g,"Flamenco"

|

| 253 |

+

251,/m/0155w,"Blues"

|

| 254 |

+

252,/m/05fw6t,"Music for children"

|

| 255 |

+

253,/m/02v2lh,"New-age music"

|

| 256 |

+

254,/m/0y4f8,"Vocal music"

|

| 257 |

+

255,/m/0z9c,"A capella"

|

| 258 |

+

256,/m/0164x2,"Music of Africa"

|

| 259 |

+

257,/m/0145m,"Afrobeat"

|

| 260 |

+

258,/m/02mscn,"Christian music"

|

| 261 |

+

259,/m/016cjb,"Gospel music"

|

| 262 |

+

260,/m/028sqc,"Music of Asia"

|

| 263 |

+

261,/m/015vgc,"Carnatic music"

|

| 264 |

+

262,/m/0dq0md,"Music of Bollywood"

|

| 265 |

+

263,/m/06rqw,"Ska"

|

| 266 |

+

264,/m/02p0sh1,"Traditional music"

|

| 267 |

+

265,/m/05rwpb,"Independent music"

|

| 268 |

+

266,/m/074ft,"Song"

|

| 269 |

+

267,/m/025td0t,"Background music"

|

| 270 |

+

268,/m/02cjck,"Theme music"

|

| 271 |

+

269,/m/03r5q_,"Jingle (music)"

|

| 272 |

+

270,/m/0l14gg,"Soundtrack music"

|

| 273 |

+

271,/m/07pkxdp,"Lullaby"

|

| 274 |

+

272,/m/01z7dr,"Video game music"

|

| 275 |

+

273,/m/0140xf,"Christmas music"

|

| 276 |

+

274,/m/0ggx5q,"Dance music"

|

| 277 |

+

275,/m/04wptg,"Wedding music"

|

| 278 |

+

276,/t/dd00031,"Happy music"

|

| 279 |

+

277,/t/dd00032,"Funny music"

|

| 280 |

+

278,/t/dd00033,"Sad music"

|

| 281 |

+

279,/t/dd00034,"Tender music"

|

| 282 |

+

280,/t/dd00035,"Exciting music"

|

| 283 |

+

281,/t/dd00036,"Angry music"

|

| 284 |

+

282,/t/dd00037,"Scary music"

|

| 285 |

+

283,/m/03m9d0z,"Wind"

|

| 286 |

+

284,/m/09t49,"Rustling leaves"

|

| 287 |

+

285,/t/dd00092,"Wind noise (microphone)"

|

| 288 |

+

286,/m/0jb2l,"Thunderstorm"

|

| 289 |

+

287,/m/0ngt1,"Thunder"

|

| 290 |

+

288,/m/0838f,"Water"

|

| 291 |

+

289,/m/06mb1,"Rain"

|

| 292 |

+

290,/m/07r10fb,"Raindrop"

|

| 293 |

+

291,/t/dd00038,"Rain on surface"

|

| 294 |

+

292,/m/0j6m2,"Stream"

|

| 295 |

+

293,/m/0j2kx,"Waterfall"

|

| 296 |

+

294,/m/05kq4,"Ocean"

|

| 297 |

+

295,/m/034srq,"Waves, surf"

|

| 298 |

+

296,/m/06wzb,"Steam"

|

| 299 |

+

297,/m/07swgks,"Gurgling"

|

| 300 |

+

298,/m/02_41,"Fire"

|

| 301 |

+

299,/m/07pzfmf,"Crackle"

|

| 302 |

+

300,/m/07yv9,"Vehicle"

|

| 303 |

+

301,/m/019jd,"Boat, Water vehicle"

|

| 304 |

+

302,/m/0hsrw,"Sailboat, sailing ship"

|

| 305 |

+

303,/m/056ks2,"Rowboat, canoe, kayak"

|

| 306 |

+

304,/m/02rlv9,"Motorboat, speedboat"

|

| 307 |

+

305,/m/06q74,"Ship"

|

| 308 |

+

306,/m/012f08,"Motor vehicle (road)"

|

| 309 |

+

307,/m/0k4j,"Car"

|

| 310 |

+

308,/m/0912c9,"Vehicle horn, car horn, honking"

|

| 311 |

+

309,/m/07qv_d5,"Toot"

|

| 312 |

+

310,/m/02mfyn,"Car alarm"

|

| 313 |

+

311,/m/04gxbd,"Power windows, electric windows"

|

| 314 |

+

312,/m/07rknqz,"Skidding"

|

| 315 |

+

313,/m/0h9mv,"Tire squeal"

|

| 316 |

+

314,/t/dd00134,"Car passing by"

|

| 317 |

+

315,/m/0ltv,"Race car, auto racing"

|

| 318 |

+

316,/m/07r04,"Truck"

|

| 319 |

+

317,/m/0gvgw0,"Air brake"

|

| 320 |

+

318,/m/05x_td,"Air horn, truck horn"

|

| 321 |

+

319,/m/02rhddq,"Reversing beeps"

|

| 322 |

+

320,/m/03cl9h,"Ice cream truck, ice cream van"

|

| 323 |

+

321,/m/01bjv,"Bus"

|

| 324 |

+

322,/m/03j1ly,"Emergency vehicle"

|

| 325 |

+

323,/m/04qvtq,"Police car (siren)"

|

| 326 |

+

324,/m/012n7d,"Ambulance (siren)"

|

| 327 |

+

325,/m/012ndj,"Fire engine, fire truck (siren)"

|

| 328 |

+

326,/m/04_sv,"Motorcycle"

|

| 329 |

+

327,/m/0btp2,"Traffic noise, roadway noise"

|

| 330 |

+

328,/m/06d_3,"Rail transport"

|

| 331 |

+

329,/m/07jdr,"Train"

|

| 332 |

+

330,/m/04zmvq,"Train whistle"

|

| 333 |

+

331,/m/0284vy3,"Train horn"

|

| 334 |

+

332,/m/01g50p,"Railroad car, train wagon"

|

| 335 |

+

333,/t/dd00048,"Train wheels squealing"

|

| 336 |

+

334,/m/0195fx,"Subway, metro, underground"

|

| 337 |

+

335,/m/0k5j,"Aircraft"

|

| 338 |

+

336,/m/014yck,"Aircraft engine"

|

| 339 |

+

337,/m/04229,"Jet engine"

|

| 340 |

+

338,/m/02l6bg,"Propeller, airscrew"

|

| 341 |

+

339,/m/09ct_,"Helicopter"

|

| 342 |

+

340,/m/0cmf2,"Fixed-wing aircraft, airplane"

|

| 343 |

+

341,/m/0199g,"Bicycle"

|

| 344 |

+

342,/m/06_fw,"Skateboard"

|

| 345 |

+

343,/m/02mk9,"Engine"

|

| 346 |

+

344,/t/dd00065,"Light engine (high frequency)"

|

| 347 |

+

345,/m/08j51y,"Dental drill, dentist's drill"

|

| 348 |

+

346,/m/01yg9g,"Lawn mower"

|

| 349 |

+

347,/m/01j4z9,"Chainsaw"

|

| 350 |

+

348,/t/dd00066,"Medium engine (mid frequency)"

|

| 351 |

+

349,/t/dd00067,"Heavy engine (low frequency)"

|

| 352 |

+

350,/m/01h82_,"Engine knocking"

|

| 353 |

+

351,/t/dd00130,"Engine starting"

|

| 354 |

+

352,/m/07pb8fc,"Idling"

|

| 355 |

+

353,/m/07q2z82,"Accelerating, revving, vroom"

|

| 356 |

+

354,/m/02dgv,"Door"

|

| 357 |

+

355,/m/03wwcy,"Doorbell"

|

| 358 |

+

356,/m/07r67yg,"Ding-dong"

|

| 359 |

+

357,/m/02y_763,"Sliding door"

|

| 360 |

+

358,/m/07rjzl8,"Slam"

|

| 361 |

+

359,/m/07r4wb8,"Knock"

|

| 362 |

+

360,/m/07qcpgn,"Tap"

|

| 363 |

+

361,/m/07q6cd_,"Squeak"

|

| 364 |

+

362,/m/0642b4,"Cupboard open or close"

|

| 365 |

+

363,/m/0fqfqc,"Drawer open or close"

|

| 366 |

+

364,/m/04brg2,"Dishes, pots, and pans"

|

| 367 |

+

365,/m/023pjk,"Cutlery, silverware"

|

| 368 |

+

366,/m/07pn_8q,"Chopping (food)"

|

| 369 |

+

367,/m/0dxrf,"Frying (food)"

|

| 370 |

+

368,/m/0fx9l,"Microwave oven"

|

| 371 |

+

369,/m/02pjr4,"Blender"

|

| 372 |

+

370,/m/02jz0l,"Water tap, faucet"

|

| 373 |

+

371,/m/0130jx,"Sink (filling or washing)"

|

| 374 |

+

372,/m/03dnzn,"Bathtub (filling or washing)"

|

| 375 |

+

373,/m/03wvsk,"Hair dryer"

|

| 376 |

+

374,/m/01jt3m,"Toilet flush"

|

| 377 |

+

375,/m/012xff,"Toothbrush"

|

| 378 |

+

376,/m/04fgwm,"Electric toothbrush"

|

| 379 |

+

377,/m/0d31p,"Vacuum cleaner"

|

| 380 |

+

378,/m/01s0vc,"Zipper (clothing)"

|

| 381 |

+

379,/m/03v3yw,"Keys jangling"

|

| 382 |

+

380,/m/0242l,"Coin (dropping)"

|

| 383 |

+

381,/m/01lsmm,"Scissors"

|

| 384 |

+

382,/m/02g901,"Electric shaver, electric razor"

|

| 385 |

+

383,/m/05rj2,"Shuffling cards"

|

| 386 |

+

384,/m/0316dw,"Typing"

|

| 387 |

+

385,/m/0c2wf,"Typewriter"

|

| 388 |

+

386,/m/01m2v,"Computer keyboard"

|

| 389 |

+

387,/m/081rb,"Writing"

|

| 390 |

+

388,/m/07pp_mv,"Alarm"

|

| 391 |

+

389,/m/07cx4,"Telephone"

|

| 392 |

+

390,/m/07pp8cl,"Telephone bell ringing"

|

| 393 |

+

391,/m/01hnzm,"Ringtone"

|

| 394 |

+

392,/m/02c8p,"Telephone dialing, DTMF"

|

| 395 |

+

393,/m/015jpf,"Dial tone"

|

| 396 |

+

394,/m/01z47d,"Busy signal"

|

| 397 |

+

395,/m/046dlr,"Alarm clock"

|

| 398 |

+

396,/m/03kmc9,"Siren"

|

| 399 |

+

397,/m/0dgbq,"Civil defense siren"

|

| 400 |

+

398,/m/030rvx,"Buzzer"

|

| 401 |

+

399,/m/01y3hg,"Smoke detector, smoke alarm"

|

| 402 |

+

400,/m/0c3f7m,"Fire alarm"

|

| 403 |

+

401,/m/04fq5q,"Foghorn"

|

| 404 |

+

402,/m/0l156k,"Whistle"

|

| 405 |

+

403,/m/06hck5,"Steam whistle"

|

| 406 |

+

404,/t/dd00077,"Mechanisms"

|

| 407 |

+

405,/m/02bm9n,"Ratchet, pawl"

|

| 408 |

+

406,/m/01x3z,"Clock"

|

| 409 |

+

407,/m/07qjznt,"Tick"

|

| 410 |

+

408,/m/07qjznl,"Tick-tock"

|

| 411 |

+

409,/m/0l7xg,"Gears"

|

| 412 |

+

410,/m/05zc1,"Pulleys"

|

| 413 |

+

411,/m/0llzx,"Sewing machine"

|

| 414 |

+

412,/m/02x984l,"Mechanical fan"

|

| 415 |

+

413,/m/025wky1,"Air conditioning"

|

| 416 |

+

414,/m/024dl,"Cash register"

|

| 417 |

+

415,/m/01m4t,"Printer"

|

| 418 |

+

416,/m/0dv5r,"Camera"

|

| 419 |

+

417,/m/07bjf,"Single-lens reflex camera"

|

| 420 |

+

418,/m/07k1x,"Tools"

|

| 421 |

+

419,/m/03l9g,"Hammer"

|

| 422 |

+

420,/m/03p19w,"Jackhammer"

|

| 423 |

+

421,/m/01b82r,"Sawing"

|

| 424 |

+

422,/m/02p01q,"Filing (rasp)"

|

| 425 |

+

423,/m/023vsd,"Sanding"

|

| 426 |

+

424,/m/0_ksk,"Power tool"

|

| 427 |

+

425,/m/01d380,"Drill"

|

| 428 |

+

426,/m/014zdl,"Explosion"

|

| 429 |

+

427,/m/032s66,"Gunshot, gunfire"

|

| 430 |

+

428,/m/04zjc,"Machine gun"

|

| 431 |

+

429,/m/02z32qm,"Fusillade"

|

| 432 |

+

430,/m/0_1c,"Artillery fire"

|

| 433 |

+

431,/m/073cg4,"Cap gun"

|

| 434 |

+

432,/m/0g6b5,"Fireworks"

|

| 435 |

+

433,/g/122z_qxw,"Firecracker"

|

| 436 |

+

434,/m/07qsvvw,"Burst, pop"

|

| 437 |

+

435,/m/07pxg6y,"Eruption"

|

| 438 |

+

436,/m/07qqyl4,"Boom"

|

| 439 |

+

437,/m/083vt,"Wood"

|

| 440 |

+

438,/m/07pczhz,"Chop"

|

| 441 |

+

439,/m/07pl1bw,"Splinter"

|

| 442 |

+

440,/m/07qs1cx,"Crack"

|

| 443 |

+

441,/m/039jq,"Glass"

|

| 444 |

+

442,/m/07q7njn,"Chink, clink"

|

| 445 |

+

443,/m/07rn7sz,"Shatter"

|

| 446 |

+

444,/m/04k94,"Liquid"

|

| 447 |

+

445,/m/07rrlb6,"Splash, splatter"

|

| 448 |

+

446,/m/07p6mqd,"Slosh"

|

| 449 |

+

447,/m/07qlwh6,"Squish"

|

| 450 |

+

448,/m/07r5v4s,"Drip"

|

| 451 |

+

449,/m/07prgkl,"Pour"

|

| 452 |

+

450,/m/07pqc89,"Trickle, dribble"

|

| 453 |

+

451,/t/dd00088,"Gush"

|

| 454 |

+

452,/m/07p7b8y,"Fill (with liquid)"

|

| 455 |

+

453,/m/07qlf79,"Spray"

|

| 456 |

+

454,/m/07ptzwd,"Pump (liquid)"

|

| 457 |

+

455,/m/07ptfmf,"Stir"

|

| 458 |

+

456,/m/0dv3j,"Boiling"

|

| 459 |

+

457,/m/0790c,"Sonar"

|

| 460 |

+

458,/m/0dl83,"Arrow"

|

| 461 |

+

459,/m/07rqsjt,"Whoosh, swoosh, swish"

|

| 462 |

+

460,/m/07qnq_y,"Thump, thud"

|

| 463 |

+

461,/m/07rrh0c,"Thunk"

|

| 464 |

+

462,/m/0b_fwt,"Electronic tuner"

|

| 465 |

+

463,/m/02rr_,"Effects unit"

|

| 466 |

+

464,/m/07m2kt,"Chorus effect"

|

| 467 |

+

465,/m/018w8,"Basketball bounce"

|

| 468 |

+

466,/m/07pws3f,"Bang"

|

| 469 |

+

467,/m/07ryjzk,"Slap, smack"

|

| 470 |

+

468,/m/07rdhzs,"Whack, thwack"

|

| 471 |

+

469,/m/07pjjrj,"Smash, crash"

|

| 472 |

+

470,/m/07pc8lb,"Breaking"

|

| 473 |

+

471,/m/07pqn27,"Bouncing"

|

| 474 |

+

472,/m/07rbp7_,"Whip"

|

| 475 |

+

473,/m/07pyf11,"Flap"

|

| 476 |

+

474,/m/07qb_dv,"Scratch"

|

| 477 |

+

475,/m/07qv4k0,"Scrape"

|

| 478 |

+

476,/m/07pdjhy,"Rub"

|

| 479 |

+

477,/m/07s8j8t,"Roll"

|

| 480 |

+

478,/m/07plct2,"Crushing"

|

| 481 |

+

479,/t/dd00112,"Crumpling, crinkling"

|

| 482 |

+

480,/m/07qcx4z,"Tearing"

|

| 483 |

+

481,/m/02fs_r,"Beep, bleep"

|

| 484 |

+

482,/m/07qwdck,"Ping"

|

| 485 |

+

483,/m/07phxs1,"Ding"

|

| 486 |

+

484,/m/07rv4dm,"Clang"

|

| 487 |

+

485,/m/07s02z0,"Squeal"

|

| 488 |

+

486,/m/07qh7jl,"Creak"

|

| 489 |

+

487,/m/07qwyj0,"Rustle"

|

| 490 |

+

488,/m/07s34ls,"Whir"

|

| 491 |

+

489,/m/07qmpdm,"Clatter"

|

| 492 |

+

490,/m/07p9k1k,"Sizzle"

|

| 493 |

+

491,/m/07qc9xj,"Clicking"

|

| 494 |

+

492,/m/07rwm0c,"Clickety-clack"

|

| 495 |

+

493,/m/07phhsh,"Rumble"

|

| 496 |

+

494,/m/07qyrcz,"Plop"

|

| 497 |

+

495,/m/07qfgpx,"Jingle, tinkle"

|

| 498 |

+

496,/m/07rcgpl,"Hum"

|

| 499 |

+

497,/m/07p78v5,"Zing"

|

| 500 |

+

498,/t/dd00121,"Boing"

|

| 501 |

+

499,/m/07s12q4,"Crunch"

|

| 502 |

+

500,/m/028v0c,"Silence"

|

| 503 |

+

501,/m/01v_m0,"Sine wave"

|

| 504 |

+

502,/m/0b9m1,"Harmonic"

|

| 505 |

+

503,/m/0hdsk,"Chirp tone"

|

| 506 |

+

504,/m/0c1dj,"Sound effect"

|

| 507 |

+

505,/m/07pt_g0,"Pulse"

|

| 508 |

+

506,/t/dd00125,"Inside, small room"

|

| 509 |

+

507,/t/dd00126,"Inside, large room or hall"

|

| 510 |

+

508,/t/dd00127,"Inside, public space"

|

| 511 |

+

509,/t/dd00128,"Outside, urban or manmade"

|

| 512 |

+

510,/t/dd00129,"Outside, rural or natural"

|

| 513 |

+

511,/m/01b9nn,"Reverberation"

|

| 514 |

+

512,/m/01jnbd,"Echo"

|

| 515 |

+

513,/m/096m7z,"Noise"

|

| 516 |

+

514,/m/06_y0by,"Environmental noise"

|

| 517 |

+

515,/m/07rgkc5,"Static"

|

| 518 |

+

516,/m/06xkwv,"Mains hum"

|

| 519 |

+

517,/m/0g12c5,"Distortion"

|

| 520 |

+

518,/m/08p9q4,"Sidetone"

|

| 521 |

+

519,/m/07szfh9,"Cacophony"

|

| 522 |

+

520,/m/0chx_,"White noise"

|

| 523 |

+

521,/m/0cj0r,"Pink noise"

|

| 524 |

+

522,/m/07p_0gm,"Throbbing"

|

| 525 |

+

523,/m/01jwx6,"Vibration"

|

| 526 |

+

524,/m/07c52,"Television"

|

| 527 |

+

525,/m/06bz3,"Radio"

|

| 528 |

+

526,/m/07hvw1,"Field recording"

|

a_cls/dataloader.py

ADDED

|

@@ -0,0 +1,90 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# -*- coding: utf-8 -*-

|

| 2 |

+

# @Time : 6/19/21 12:23 AM

|

| 3 |

+

# @Author : Yuan Gong

|

| 4 |

+

# @Affiliation : Massachusetts Institute of Technology

|

| 5 |

+

# @Email : yuangong@mit.edu

|

| 6 |

+

# @File : dataloader.py

|

| 7 |

+

|

| 8 |

+

# modified from:

|

| 9 |

+

# Author: David Harwath

|

| 10 |

+

# with some functions borrowed from https://github.com/SeanNaren/deepspeech.pytorch

|

| 11 |

+

|

| 12 |

+

import csv

|

| 13 |

+

import json

|

| 14 |

+

import logging

|

| 15 |

+

|

| 16 |

+

import torchaudio

|

| 17 |

+

import numpy as np

|

| 18 |

+

import torch

|

| 19 |

+

import torch.nn.functional

|

| 20 |

+

from torch.utils.data import Dataset

|

| 21 |

+

import random

|

| 22 |

+

|

| 23 |

+

def make_index_dict(label_csv):

|

| 24 |

+

index_lookup = {}

|

| 25 |

+

with open(label_csv, 'r') as f:

|

| 26 |

+

csv_reader = csv.DictReader(f)

|

| 27 |

+

line_count = 0

|

| 28 |

+

for row in csv_reader:

|

| 29 |

+

index_lookup[row['mid']] = row['index']

|

| 30 |

+

line_count += 1

|

| 31 |

+

return index_lookup

|

| 32 |

+

|

| 33 |

+

def make_name_dict(label_csv):

|

| 34 |

+

name_lookup = {}

|

| 35 |

+

with open(label_csv, 'r') as f:

|

| 36 |

+

csv_reader = csv.DictReader(f)

|

| 37 |

+

line_count = 0

|

| 38 |

+

for row in csv_reader:

|

| 39 |

+

name_lookup[row['index']] = row['display_name']

|

| 40 |

+

line_count += 1

|

| 41 |

+

return name_lookup

|

| 42 |

+

|

| 43 |

+

def lookup_list(index_list, label_csv):

|

| 44 |

+

label_list = []

|

| 45 |

+

table = make_name_dict(label_csv)

|

| 46 |

+

for item in index_list:

|

| 47 |

+

label_list.append(table[item])

|

| 48 |

+

return label_list

|

| 49 |

+

|

| 50 |

+

def preemphasis(signal,coeff=0.97):

|

| 51 |

+

"""perform preemphasis on the input signal.

|

| 52 |

+

|

| 53 |

+

:param signal: The signal to filter.

|

| 54 |

+

:param coeff: The preemphasis coefficient. 0 is none, default 0.97.

|

| 55 |

+

:returns: the filtered signal.

|

| 56 |

+

"""

|

| 57 |

+

return np.append(signal[0],signal[1:]-coeff*signal[:-1])

|

| 58 |

+

|

| 59 |

+

class AudiosetDataset(Dataset):

|

| 60 |

+

def __init__(self, dataset_json_file, audio_conf, label_csv=None):

|

| 61 |

+

"""

|

| 62 |

+

Dataset that manages audio recordings

|

| 63 |

+

:param audio_conf: Dictionary containing the audio loading and preprocessing settings

|

| 64 |

+

:param dataset_json_file

|

| 65 |

+

"""

|

| 66 |

+

self.datapath = dataset_json_file

|

| 67 |

+

with open(dataset_json_file, 'r') as fp:

|

| 68 |

+

data_json = json.load(fp)

|

| 69 |

+

self.data = data_json['data']

|

| 70 |

+

self.index_dict = make_index_dict(label_csv)

|

| 71 |

+

self.label_num = len(self.index_dict)

|

| 72 |

+

|

| 73 |

+

def __getitem__(self, index):

|

| 74 |

+

datum = self.data[index]

|

| 75 |

+

label_indices = np.zeros(self.label_num)

|

| 76 |

+

try:

|

| 77 |

+

fbank, mix_lambda = self._wav2fbank(datum['wav'])

|

| 78 |

+

except Exception as e:

|

| 79 |

+

logging.warning(f"Error at {datum['wav']} with \"{e}\"")

|

| 80 |

+

return self.__getitem__(random.randint(0, self.__len__()-1))

|

| 81 |

+

for label_str in datum['labels'].split(','):

|

| 82 |

+

label_indices[int(self.index_dict[label_str])] = 1.0

|

| 83 |

+

|

| 84 |

+

label_indices = torch.FloatTensor(label_indices)

|

| 85 |

+

|

| 86 |

+

|

| 87 |

+

return fbank, label_indices

|

| 88 |

+

|

| 89 |

+

def __len__(self):

|

| 90 |

+

return len(self.data)

|

a_cls/datasets.py

ADDED

|

@@ -0,0 +1,93 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import os.path

|

| 2 |

+

|

| 3 |

+

import torch

|

| 4 |

+

|

| 5 |

+

from data.build_datasets import DataInfo

|

| 6 |

+

from data.process_audio import get_audio_transform, torchaudio_loader

|

| 7 |

+

from torchvision import datasets

|

| 8 |

+

|

| 9 |

+

# -*- coding: utf-8 -*-

|

| 10 |

+

# @Time : 6/19/21 12:23 AM

|

| 11 |

+

# @Author : Yuan Gong

|

| 12 |

+

# @Affiliation : Massachusetts Institute of Technology

|

| 13 |

+

# @Email : yuangong@mit.edu

|

| 14 |

+

# @File : dataloader.py

|

| 15 |

+

|

| 16 |

+

# modified from:

|

| 17 |

+

# Author: David Harwath

|

| 18 |

+

# with some functions borrowed from https://github.com/SeanNaren/deepspeech.pytorch

|

| 19 |

+

|

| 20 |

+

import csv

|

| 21 |

+

import json

|

| 22 |

+

import logging

|

| 23 |

+

|

| 24 |

+

import torchaudio

|

| 25 |

+

import numpy as np

|

| 26 |

+

import torch

|

| 27 |

+

import torch.nn.functional

|

| 28 |

+

from torch.utils.data import Dataset

|

| 29 |

+

import random

|

| 30 |

+

|

| 31 |

+

|

| 32 |

+

def make_index_dict(label_csv):

|

| 33 |

+

index_lookup = {}

|

| 34 |

+

with open(label_csv, 'r') as f:

|

| 35 |

+

csv_reader = csv.DictReader(f)

|

| 36 |

+

line_count = 0

|

| 37 |

+

for row in csv_reader:

|

| 38 |

+

index_lookup[row['mid']] = row['index']

|

| 39 |

+

line_count += 1

|

| 40 |

+

return index_lookup

|

| 41 |

+

|

| 42 |

+

|

| 43 |

+

class AudiosetDataset(Dataset):

|

| 44 |

+

def __init__(self, args, transform, loader):

|

| 45 |

+

self.audio_root = '/apdcephfs_cq3/share_1311970/downstream_datasets/Audio/audioset/eval_segments'

|

| 46 |

+

dataset_json_file = '/apdcephfs_cq3/share_1311970/downstream_datasets/Audio/audioset/filter_eval.json'

|

| 47 |

+